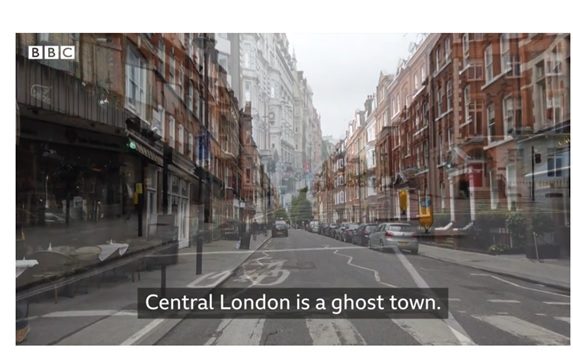

Earlier this week, I spoke at an event on AI for Real Estate, where I showed a BBC clip which said that “central London is now a ghost town” (due to COVID-19).

A few months ago, this headline would have been laughable.

Central London and the London Underground are part of the fabric of daily life in the city.

So, the hypothesis I put to the conference was:

- In a post-COVID world, the past does not equal the future

- Frequentist approaches rely on past data

- In many cases (e.g. real estate), the past is no longer a reliable guide to the future, and hence we need new approaches

- Specifically, we need to look at Bayesian approaches, which are not so common in current data science curricula or among practitioners

To expand on the reasons:

- Typically, data scientists lack the data to model a process well. Now the situation has got worse: the data we do have (assuming we could get hold of enough of it in the first place) may no longer hold true, because we face a discontinuity

- Bayesian techniques allow us to encode expert knowledge more easily, in the form of a prior

- Bayesian techniques perform better with sparse data

- Bayesian models are more easily interpretable

- Bayesian techniques allow for smaller datasets

- You can combine Bayesian techniques with other models, such as hidden Markov models

- Bayesian techniques allow you to model rare/non-repeatable events
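
To make the points about expert knowledge and sparse data concrete, here is a minimal sketch of a Bayesian update using a Beta prior over a rate. Everything in it is an illustrative assumption rather than something from the talk: the `BetaPrior` class, the "office occupancy" scenario, and all of the numbers are hypothetical. The idea is that an expert's belief is encoded as pseudo-counts, and a handful of new observations then shifts that belief instead of replacing it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaPrior:
    """Beta(alpha, beta) belief about an unknown rate between 0 and 1."""

    alpha: float
    beta: float

    def update(self, successes: int, failures: int) -> "BetaPrior":
        # Conjugate Beta-Binomial update: add observed counts to the
        # pseudo-counts that encode the prior belief.
        return BetaPrior(self.alpha + successes, self.beta + failures)

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


# Hypothetical expert belief: roughly 30% of offices are occupied,
# held with the weight of about 10 observations.
prior = BetaPrior(alpha=3.0, beta=7.0)

# Hypothetical sparse data after the discontinuity:
# 5 offices surveyed, 1 found occupied.
posterior = prior.update(successes=1, failures=4)

# Point estimate from the 5 observations alone, ignoring the prior.
sample_rate = 1 / 5

print(f"prior mean:     {prior.mean:.3f}")
print(f"sample rate:    {sample_rate:.3f}")
print(f"posterior mean: {posterior.mean:.3f}")
```

With only five observations, the posterior mean lands between the raw sample rate and the expert's prior. As more data arrives, the observed counts dominate the pseudo-counts and the prior matters less.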

All of these characteristics have become relevant today.

In any case, it’s a viewpoint that needs greater emphasis in both practice and education.