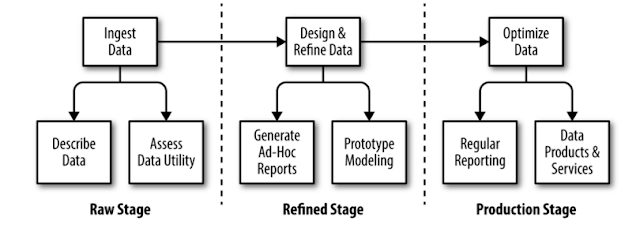

Data wrangling is the most important step before business personnel can analyze data further or load it into a BI system. In the data age, data sources have become more and more diverse (files, big data platforms, databases, etc.), which brings many challenges to data wrangling. In an enterprise, data is usually managed by IT personnel, who unify data extraction, transformation and loading into the warehouse (ETL) and then provide the results to business personnel or to a visualization system. Data can be used only after it passes through three stages: Raw Stage, Refined Stage and Production Stage, and the whole process is managed automatically. However, this centralized, automated process depends on IT personnel and on ETL-specific software and hardware for every additional data requirement. As demand grows, the lengthy development cycle and the shared ETL software and hardware consume more and more time, so business personnel cannot see changes in the data in time. For example, the sales department may want to see how sales changed before and after a promotional event.

Therefore, it is becoming more and more important to break away from dedicated personnel and equipment and to find a new, agile way of wrangling data that gives strong support to data-driven business departments. That is, data moving from the Raw Stage to the Refined Stage is used directly by business personnel for desktop analysis, or for self-service analysis after being imported into a BI system.

After data is imported into a BI system, the self-service part of the system meets business needs only at two levels: multi-dimensional analysis and associated queries. Experience shows that, in the best case, this solves about 30% of the problems. The remaining 70% or more, such as "find the top n customers whose sales account for half of total sales and sort them by sales volume", involve many steps and procedural calculation. Procedural calculation is beyond the design goal of BI products and may not even be regarded as data analysis, yet it is a problem that users particularly want solved. Faced with such problems, business personnel usually export the data and analyze it on the desktop with Excel. However, Excel is not good at multi-level data association, sometimes cannot handle the data volume, and falls short in many application scenarios, so this kind of problem still needs technical personnel. Their usual tools have limits as well. SQL has difficulty with procedural calculation. Java code is too verbose for structured calculation and is hard to reuse and maintain. Python (pandas) and R are positioned as tools for mathematical-style statistical analysis; although they provide a data frame object for structured data processing, they are not as simple and intuitive as SQL, which is easy to learn and use.
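To make the "top customers accounting for half of sales" example concrete, here is a minimal sketch in plain Python. It is only meant to show why the task is procedural (sort, accumulate, cut off, return), not how esProc or any BI product expresses it. The function name and the sample data are hypothetical.

```python
from itertools import accumulate

def top_customers_for_half(sales):
    """Return (customer, amount) pairs, largest first, whose cumulative
    sales first reach half of the total. `sales` maps customer -> amount."""
    # Step 1: rank customers by sales volume, largest first.
    ranked = sorted(sales.items(), key=lambda kv: kv[1], reverse=True)
    # Step 2: compute the grand total and the running totals.
    total = sum(amount for _, amount in ranked)
    running = list(accumulate(amount for _, amount in ranked))
    # Step 3: find the first position where the running total reaches half.
    cutoff = next(
        (i for i, r in enumerate(running) if r >= total / 2),
        len(ranked) - 1,
    )
    # Step 4: keep the customers up to and including that position.
    return ranked[: cutoff + 1]

# Hypothetical sample data for illustration only.
sales = {"Acme": 400, "Borealis": 300, "Cobalt": 200, "Dune": 100}
top = top_customers_for_half(sales)
```

Each step depends on the result of the previous one, which is why a single declarative query tends to become awkward for this kind of requirement.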

So, is there a tool that can both wrangle data in an agile way and handle complex business calculations easily? The goal of Raqsoft esProc is to give ordinary technical personnel the most convenient path from data wrangling to business calculation. It has the following characteristics to meet these needs.

1. Connectivity

Ability to connect to various data sources

Files (CSV, JSON, Excel…)

Big data platforms (Hive, HDFS, MongoDB…)

Cloud platforms (AWS Redshift, AWS S3, Azure ADLS)

Databases (Oracle, DB2, MySQL, PostgreSQL, TD…)

Applications (Salesforce, Tableau Server…)
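The idea of connectivity can be illustrated with a small sketch that reads records from a CSV text, a JSON text and a database table into one common shape (a list of dictionaries). This is a generic illustration using only the Python standard library, with an in-memory SQLite database standing in for a real one; it does not show esProc's own connectors, and all names and sample values are hypothetical.

```python
import csv
import io
import json
import sqlite3

def rows_from_csv(text):
    """Parse CSV text with a header row into a list of dicts."""
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]

def rows_from_json(text):
    """Parse a JSON array of objects into a list of dicts."""
    return json.loads(text)

def rows_from_db(conn, query):
    """Run a query and return the rows as dicts keyed by column name."""
    cur = conn.execute(query)
    cols = [c[0] for c in cur.description]
    return [dict(zip(cols, row)) for row in cur.fetchall()]

# Hypothetical sample sources.
csv_text = "customer,amount\nAcme,400\n"
json_text = '[{"customer": "Borealis", "amount": 300}]'
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (customer TEXT, amount INTEGER)")
conn.execute("INSERT INTO sales VALUES ('Cobalt', 200)")

records = (
    rows_from_csv(csv_text)
    + rows_from_json(json_text)
    + rows_from_db(conn, "SELECT customer, amount FROM sales")
)
# CSV values arrive as strings, so normalise the amount column.
for r in records:
    r["amount"] = int(r["amount"])
conn.close()
```

Once every source yields the same record shape, the later wrangling steps no longer need to know where the data came from.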

2. Easy to use

▪︎ Install-and-use

No additional dependencies or toolkits need to be installed.

▪︎ Step-by-step processing

Avoids nested SQL and one-way pipeline processing. The result of each step can be referenced at any time, so a complex problem is broken down into simpler parts.

▪︎ Easy to understand and reuse

What is easy to understand is also easy to learn and master.

Problems are solved with short, intuitive scripts, the wrangling process is clear at a glance, and a script can be reused with little change for similar problems.

▪︎ Complete core operations

The core operations of data wrangling, namely structuring, enriching and cleaning, are all covered.

▪︎ Easy debugging

Programs cannot be debugged effectively through output alone; esProc supports advanced debugging modes such as single-stepping and breakpoints.

▪︎ Modular development

Tasks are separated by modules and can be centrally integrated to form a processing flow.
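The step-by-step style described under "Step-by-step processing" can be sketched as follows, using the earlier question of how sales changed before and after a promotional event. Every intermediate result gets its own name and can be inspected or reused by any later step. This is a plain Python illustration, not esProc script syntax; the dates, amounts and variable names are hypothetical.

```python
from datetime import date

# Hypothetical order records: (order date, amount).
orders = [
    (date(2024, 5, 1), 100.0),
    (date(2024, 5, 2), 140.0),
    (date(2024, 5, 3), 150.0),
    (date(2024, 5, 4), 210.0),
]
promo_day = date(2024, 5, 3)  # assumed start of the promotion

# Step 1: split the orders around the promotion date.
before = [amount for day, amount in orders if day < promo_day]
after = [amount for day, amount in orders if day >= promo_day]

# Step 2: average order amount for each period, reusing step 1.
avg_before = sum(before) / len(before)
avg_after = sum(after) / len(after)

# Step 3: relative change in percent, reusing step 2.
change_pct = (avg_after - avg_before) / avg_before * 100
```

Because `before`, `after` and the two averages remain available, a follow-up question (for example, comparing order counts instead of amounts) only needs one more step rather than a rewritten query.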

3. Big data

Single-machine processing: supports GB-scale data, with convenient data segmentation, multi-threaded/multi-process parallel computing and external-storage computing.

Cluster processing: supports TB-scale data, with flexible data distribution and distributed computing.
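The combination of data segmentation and parallel computing can be sketched in a few lines: split the data into segments, compute a partial result per segment in parallel, then merge the partial results. The example below is a generic illustration with Python's standard `concurrent.futures` module, not esProc's mechanism, and the function names are hypothetical. Note that in standard CPython, threads show the structure of the approach more than a real speed-up for pure-Python arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_segments(rows, n_segments):
    """Cut `rows` into at most `n_segments` contiguous slices."""
    size = max(1, -(-len(rows) // n_segments))  # ceiling division
    return [rows[i:i + size] for i in range(0, len(rows), size)]

def segment_total(segment):
    """Partial aggregate computed independently for one segment."""
    return sum(segment)

def parallel_total(rows, n_segments=4):
    """Sum `rows` by aggregating each segment in a worker thread."""
    segments = split_into_segments(rows, n_segments)
    with ThreadPoolExecutor(max_workers=n_segments) as pool:
        partials = list(pool.map(segment_total, segments))
    # Merge step: combine the per-segment results.
    return sum(partials)

# Hypothetical sample data: amounts 1..1000.
amounts = list(range(1, 1001))
grand_total = parallel_total(amounts)
```

The same split, compute, merge pattern carries over to cluster processing, where the segments live on different machines instead of different threads.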

4. Integration

Integration with reporting tools: A completed data wrangling script can be integrated into a reporting tool as a report data source, so that its results feed the report directly.

Integration with ETL tools: A completed data wrangling script can be integrated into an ETL tool and run automatically under ETL scheduling, turning ad hoc data wrangling into part of the daily batch processing.