Following on from last week's article, "Deep learning in biology and medicine", we discuss another sobering trend. According to recent research reported in MIT Technology Review, "Hundreds of AI tools have been built to catch covid. None of them helped."

While we have been here before with the Google Flu Trends episode, the article still makes for grim reading.

Some notes from the article:

- Algorithms worked in theory but not in real life.

- The shortcomings were not due to lack of effort or motivation.

- In the end, many hundreds of predictive tools were developed. None of them made a real difference, and some were potentially harmful.

- Tools were not fit for clinical use.

What went wrong?

- Lack of data was the main issue.

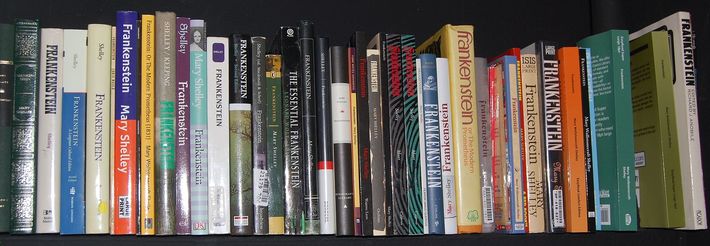

- The article talks of Frankenstein data sets, i.e. data sets that are spliced together from multiple sources and can contain duplicates. As a result, in some cases models were tested on the same data they were developed on.

- In other cases, some AIs were found to be picking up on the text font that certain hospitals used to label the scans, i.e. the font was wrongly treated as a relevant feature. This reinforces the familiar point that AI can make errors that humans probably would not.
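One mechanical safeguard against Frankenstein data sets is to fingerprint every record and check whether any appear in both the development and test splits. The sketch below is a minimal illustration under stated assumptions: each scan is assumed to be available as raw bytes with an ID, and the names `fingerprint` and `find_leaked_records` are hypothetical. Because it uses an exact SHA-256 hash, it only catches byte-identical copies; a scan that was resized or re-encoded by a different hospital would need a perceptual hash or similar.

```python
import hashlib
from typing import Dict, Iterable, List, Tuple


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest that identifies byte-identical records."""
    return hashlib.sha256(data).hexdigest()


def find_leaked_records(
    train: Iterable[Tuple[str, bytes]],
    test: Iterable[Tuple[str, bytes]],
) -> List[Tuple[str, str]]:
    """Return (train_id, test_id) pairs whose contents are byte-identical.

    Each split is an iterable of (record_id, raw_bytes) pairs (an assumed,
    hypothetical format). Any pair returned means the model would be
    evaluated on a record it was also trained on.
    """
    # Map each fingerprint to the first training record that produced it.
    seen: Dict[str, str] = {}
    for record_id, data in train:
        seen.setdefault(fingerprint(data), record_id)

    # Report every test record whose fingerprint already appeared in training.
    leaks: List[Tuple[str, str]] = []
    for record_id, data in test:
        match = seen.get(fingerprint(data))
        if match is not None:
            leaks.append((match, record_id))
    return leaks


if __name__ == "__main__":
    # Toy data: "scan_b" reappears as "scan_x" after two sources were merged.
    train = [("scan_a", b"pixels-a"), ("scan_b", b"pixels-b")]
    test = [("scan_x", b"pixels-b"), ("scan_y", b"pixels-y")]
    print(find_leaked_records(train, test))  # [('scan_b', 'scan_x')]
```

Exact hashing is deliberately the simplest option here; in practice it would be one check among several, alongside splitting by patient so that different scans of the same person do not land on both sides of the split.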

And how do we bridge that gap?

The article makes the usual suggestions: more data, more cooperation, etc.

My analysis

The article, although insightful, misses the point by asking the wrong question. The question it asks is: was AI useful in diagnosing COVID on its own? The better question is: did AI tools assist in diagnosing COVID?

If that question were considered, the answers would be a lot more useful and balanced.

Also, as I said in a previous article, many technical solutions are possible for the lack of data, for example probabilistic Bayesian models.
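As a minimal sketch of the idea, assuming the simplest possible case, the code below uses a conjugate Beta-Binomial model (my illustrative choice, not a model from the MIT article or the previous article) to estimate a diagnostic tool's sensitivity. The uniform Beta(1, 1) prior, the case counts, and the names `BetaPosterior` and `update` are all hypothetical. The point is that a Bayesian model reports how uncertain it is: with few cases the posterior is wide, and it narrows as data accumulates.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaPosterior:
    """A Beta(alpha, beta) distribution over an unknown probability."""
    alpha: float
    beta: float

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    @property
    def std(self) -> float:
        # Closed-form standard deviation of a Beta distribution.
        a, b = self.alpha, self.beta
        return math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))


def update(prior: BetaPosterior, successes: int, failures: int) -> BetaPosterior:
    """Conjugate update: a Beta prior plus binomial counts gives a Beta posterior."""
    if successes < 0 or failures < 0:
        raise ValueError("counts must be non-negative")
    return BetaPosterior(prior.alpha + successes, prior.beta + failures)


if __name__ == "__main__":
    prior = BetaPosterior(1.0, 1.0)  # uniform prior: no initial preference for any sensitivity
    small = update(prior, successes=8, failures=2)    # 10 hypothetical confirmed cases
    large = update(prior, successes=80, failures=20)  # 100 hypothetical confirmed cases
    for name, post in (("10 cases", small), ("100 cases", large)):
        print(f"{name}: mean={post.mean:.3f}, std={post.std:.3f}")
```

Running it prints a posterior mean of 0.750 with standard deviation 0.120 for 10 cases, versus 0.794 with 0.040 for 100 cases: similar central estimates, but the small data set is openly flagged as roughly three times less certain.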

Source: the MIT Technology Review article cited above.

Image source: Wikipedia