Summary: GANs (Generative Adversarial Nets) originally thought to be a tool for inexpensively creating DNN training data have instead become the tools for creating deep fake images. However the deep fake technology is now finding uses in the commercial world and promises valuable results.

Generative Adversarial Nets (GANs) are now five years old, dating from the original paper by Ian Goodfellow, then at Google. Since then there have been a number of technical innovations making GANs more flexible and easier to access.

Generative Adversarial Nets (GANs) are now five years old, dating from the original paper by Ian Goodfellow, then at Google. Since then there have been a number of technical innovations making GANs more flexible and easier to access.

While GANs have yet to find any real footing in the commercial world they have become the personification of our concerns and fears about the future of AI. Quite literally the AI with the potential to do the most harm.

If you have any doubt about what I’m referring to, it’s the explosive growth of ever more perfect deep fakes. Changing an image, video, or an audio recording so that it appears to be something that it’s not.

We’re talking celebrity faces transposed onto porn films, videos of our current and recent Presidents saying things they clearly did not say, people or things where they never have actually been or performing acts they have never truly done.

The outcome is that we can no longer believe our eyes and ears which have always been the source of our ground truth. While some of this faking is done in good fun, we are at that ‘genie is out of the bottle’ moment, and it’s never going back.

Worse, despite our best efforts to use AI tools against it, so far we have no reliable technology to identify GAN-generated deep fakes.

The Original Promise of GANs

The original promise of GANs was to create labeled training data where not enough existed.

Our image and speech processing with DNNs have always required really large amounts of labeled training data. A 2016 study by Goodfellow, Bengio and Courville concluded you could get ‘acceptable’ performance with about 5,000 labeled examples per category BUT it would take 10 Million labeled examples per category to “match or exceed human performance”.

That data can be prohibitively expensive or even impossible to obtain. So the thought that GANs could simply generate training data based on a relatively few examples has been very enticing.

A number of successful approaches to producing GAN-generated training data have been introduced. Snorkel from the Stanford Dawn project is a good example.

Another area in which GAN-generated training data has had good success is in creating training data for self-driving cars.

However, a variety of other techniques have also arisen during this half-decade to address this same problem, solutions like active learning to create new data and transfer learning and one-shot learning to reduce the amount of data necessary to train. Even reinforcement learning is being used to create training data from automated game play of driving games for self-driving cars.

In short, while GANs can indeed generate DNN training data other techniques are being used more widely.

An Important Limitation to GAN-Generated Training Data

A question that has not received nearly enough attention is whether GAN-generated training data is sufficiently accurate to generalize.

If you need training data to differentiate dogs from cats, staplers from wastebaskets, or Hondas from Toyotas then by all means to ahead. However, I was asked by a Pediatric Cardiologist if GANs could be used to create training data of infant hearts for the purpose of training a CNN to identify the various lobes of the tiny heart.

She would then use the inferenced CNN image to guide an instrument into the infant heart to repair defects. She didn’t yet have enough training data based on real CT scans of infant hearts and her application wasn’t sufficiently accurate.

It’s tempting to say that GAN generated images would be legitimate training data. The problem is that if she missed her intended target with her instrument it would puncture the heart leading to death.

If the relatively few seed images used to start the GAN contained sufficient detail of all the possible abnormalities then this might be successful. However, if professional knowledge is still short on what all those abnormalities look like then caution is needed.

When we’re looking at generated faces or animals or furniture it’s easy for us to spot something that doesn’t look like it fits. If it’s a medical application where we have very limited experience actually looking at true and false examples, then think about the impact of false positives and false negatives that are present in all probabilistic models. There was no way I was comfortable advising that artificially created training data for this application was OK.

Commercial Uses of Deep Fake GANs for Good – Synthetic Media

Recently Axios reported on a handful of startups that are trying to create commercial businesses out of deep fake GAN technology. The new PC title for this movement is ‘Synthetic Media’.

Radical: Turns 2D videos into 3D scenes.

Auxuman: Plays GAN generated music.

Dzomo: Replacing expensive stock photography with ‘deep fake’ images.

Synthesia: Dub videos into new languages. Their demo includes David Beckham delivering a PSA about malaria in nine languages.

Lyrebird: Creates digital voices that mimic actual speakers. They are cloning the voices of people with ALS in order to allow them to continue communicating once they can no longer speak.

Some other capabilities that may lead to commercialization:

- Automated generation of anime characters.

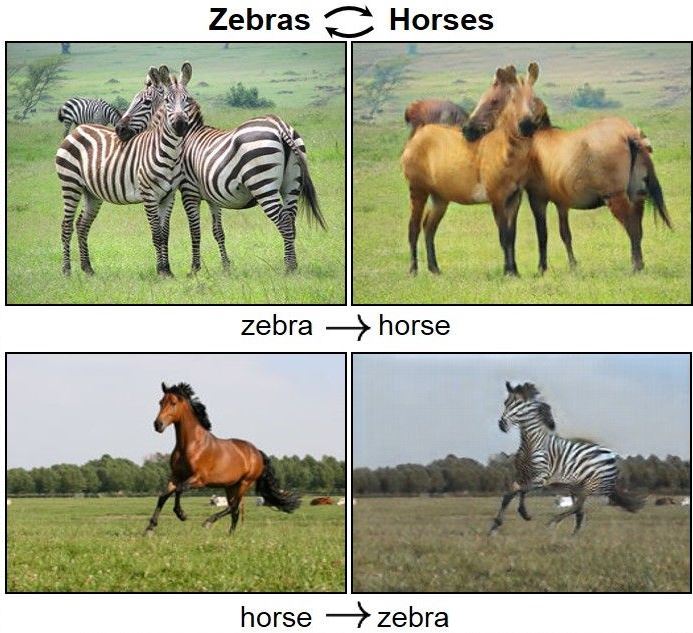

- Transforming the image of a person into different poses.

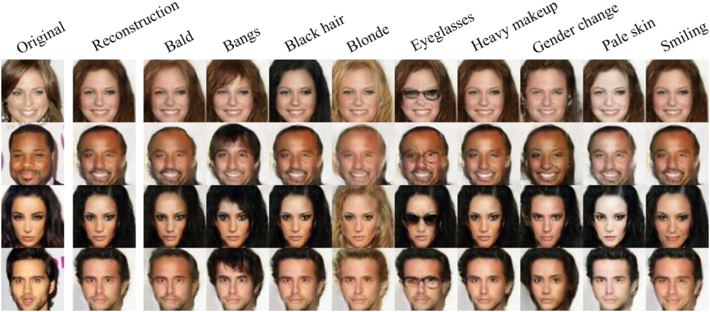

- Reconstructing images for specific attributes (as in this example of Invertible Conditional GANs for image editing)

And one final application that I personally find compelling – Super Resolution.

Police used Super Resolution GANs to identify the license plate number of a kidnapper although the only available images were a tiny fraction of low res frames taken from storefront surveillance cameras. Each number on the plate was only a few pixels, but with careful application of GANs to compare those to similar images of numbers and letters of known value (training data), the police were able to generate a sufficiently accurate full plate that led to the capture of the kidnapper.

A Primer in Adversarial Machine Learning – The Next Advance in AI

Other articles by Bill Vorhies

About the author: Bill is Contributing Editor for Data Science Central. Bill is also President & Chief Data Scientist at Data-Magnum and has practiced as a data scientist since 2001. His articles have been read more than 1.5 million times.

He can be reached at: