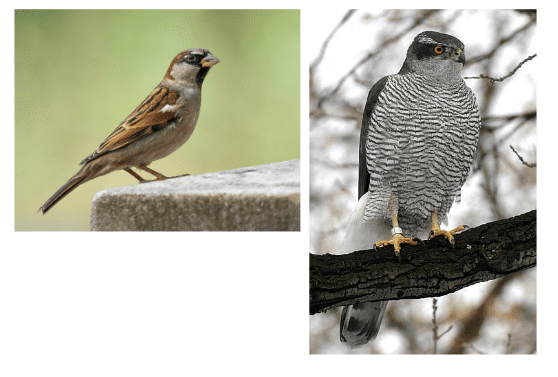

What to make of DeepMind’s Sparrow: is it a sparrow or a hawk (i.e., a ChatGPT killer)?

Recently, Demis Hassabis of DeepMind has been urging caution (see “DeepMind’s CEO Helped Take AI Mainstream. Now He’s Urging Caution”, Time magazine/Davos).

DeepMind also announced a new chat engine called Sparrow, supposedly a ChatGPT killer.

Sparrow is not publicly available in the way ChatGPT is, and DeepMind is urging caution. The interview also hints at something more capable, bordering on AGI. So, what to make of this?

Here are my comments:

1) Both Sparrow and ChatGPT appear to be trained using Reinforcement Learning from Human Feedback (RLHF).

2) Much of what is coming in Sparrow is already there in ChatGPT.

3) Sparrow appears to have 23 safety rules. These also seem consistent with what ChatGPT already does.

Now, DeepMind’s choice to launch a product of this nature is interesting:

a) DeepMind excels in AI for fundamental science (like protein folding).

b) However, it has not launched a product.

c) DeepMind is highly secretive and has historically not engaged with the community.

d) In contrast, OpenAI has been a much more open and engaging organization.

The sudden interest in responsible AI from Google/DeepMind is also interesting. Timnit Gebru and Margaret Mitchell may have differing views! The biggest issue for Google/DeepMind is still the same: it’s not a technical problem but a business model problem (the impact on advertising). So, as it stands, Sparrow does not seem to matter one way or the other.

References

Demis Hassabis interview: https://time.com/6246119/demis-hassabis-deepmind-interview/

Sparrow: https://www.deepmind.com/blog/building-safer-dialogue-agents

RLHF: https://scholar.harvard.edu/saghafian/blog/analytics-science-behind-chatgpt-human-algorithm-or-human-algorithm-centaur

23 rules: https://storage.googleapis.com/deepmind-media/DeepMind.com/Authors-Notes/sparrow/sparrow-final.pdf

Image source: Wikipedia