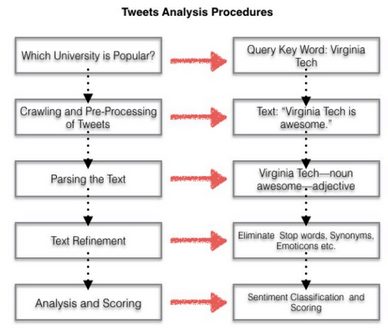

To start with Sentiment Analysis, what comes first to our mind is where and how we can crawl oceans of data for our analysis. Normally, web crawler or crawling from web social media should be one reasonable way to get access to the public opinion data resource. Thus, in this writing, I want to share with you about how I crawled the website using web crawler and proceeded to deal with those data for Sentiment Analysis to develop an application which ranks universities based on users’s opinions crawled from social media website – Twitter.

To crawl the Twitter data, there have been several methods that we can adopt – Build a web crawler on our own by programming or choosing an automated web crawler, like Octoparse, Import.io and etc. Also we could use the public APIs provided by certain websites to get access to their data set.

First, as well known, Twitter provides public APIs for developers to read and write tweets conveniently. The REST API identifies Twitter applications and users using OAuth; After learn about this, we can utilize twitter REST APIs to get most recent and popular tweets, Twitter4j has been imported to crawl twitter data through twitter REST API. And Twitter data can be crawled according to specific time range, locations, or other data fields. And the data crawled will be returned as JSON format. Note that APP developers need to generate twitter application accounts, so as to get the authorized access to twitter API. By using a specific Access Token, the application made a request to the POST OAuth2 to exchange credentials so that users can get authenticated access to the REST API .

This mechanism will allow us to pull users’information in the data resource. Then we can use the serach function to crawl these structured tweets related with university topics.

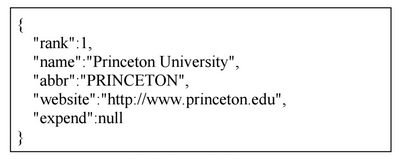

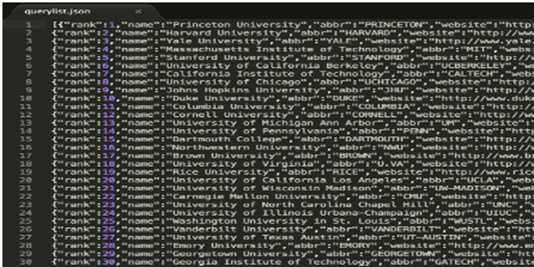

Then, I generated the query set to crawl tweets, which is like the figure below. I collected University Ranking data of USNews 2016, which includes 244 universities and their ranking. Then, I customized the data fiels I need to use for crawling tweets into JSON format.

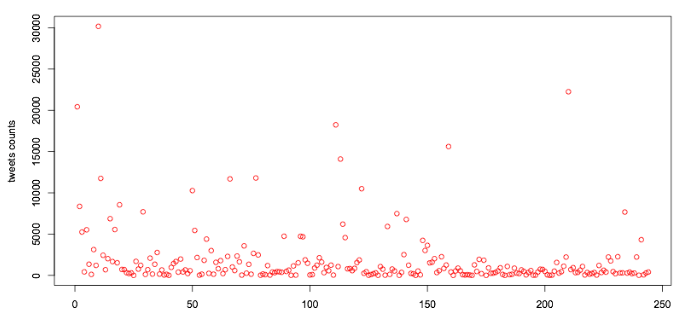

For my tweets crawled results, I extracted 462,413 tweets results totally. And most universities’ tweets crawled number is less than 2000.

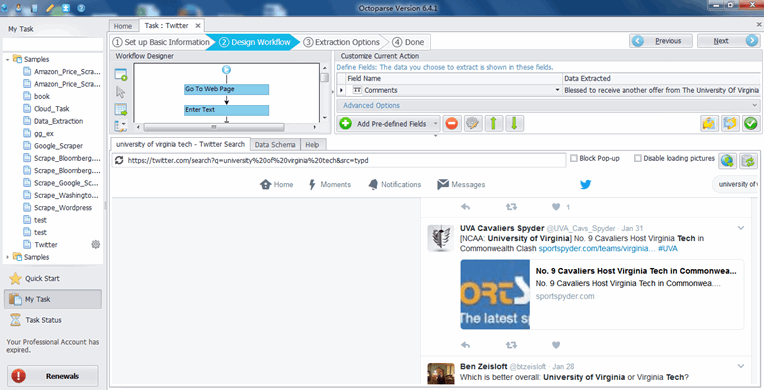

So far, someone may feel that the whole process to crawl Twitter is troublesome already. This method requires people with good programming skills and knowledge on regular expression to crawl the websites for structured and formatted data. This may be tough for someone without any coding skills and related knowledge. Here, for your reference, I’d like to propose some automated web crawler tools which can help you crawl websites without any coding skills, like Octoparse, Import.io, Mozenda. This local machine installed web crawler tool can automatically crawl the data, like tweets, from the target sites. Its UI is user-friendly as below and you just need to learn how to confiugre the crawling settings of your tasks which you can learn by reading or watching their tutorials. The data crawled can be exported to various structure formats as you need, like Excel, CSV, HTML, TXT, and database(MySQL, SQL Server, and Oracle). Plus, it provides IP rotations which automates IPs leaving without being traced by the target sites. This mechanism serves as an important role, since it can prevent us from getting blocked by certain aggressive sites which doesn’t allow people to crawl any data from them.

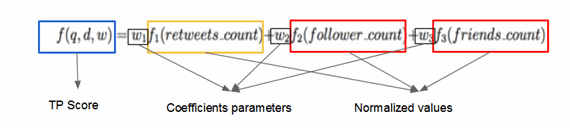

Back to the University Ranking of my designed application. Ranking technology in my application is to parse tweets crawled from Twitter and then rank related tweets according to their relevance to a specific university. I want to filter high-related tweets (topK) to do the Sentiment Analysis, which will avoid trivial tweets that make our results inaccurate. There are may ranking methods actually, such as rank them based on TF-IDF similarity, text summarization, spatial and temporal factors or machine learning ranking method. Even Twitter itself has provided a method based on time or popularity. However, we need a more advanced method which can filter out the most spam and trivial tweets. In order to measure the trust and popularity of a tweet, I use the following features from tweets: retweets count, followers count, friends acount. Assuming that a trust tweet should be posted by a trust user. And a trust user should have enough friends and followers, then a popular tweet should have high retweet-count. According to my assumption, I builit a model combining the trust and popularity (TP Score) for a tweet. Then I rank those tweets based on TP score. Noteworthy that report news usually has high retweet-count, and this type of score will be useless for our Sentiment Analysis. Thus, I assigned a relatively lower weight on this portion when computing the TP score. I designed and derived a formula as below. This Twitter supported method has considered the presence of query words, the recency of tweets. Thus, the tweets we crawled has been filtered by query words and posting time. All we need is to consider about the retweets countsm, followers counts, friends counts.

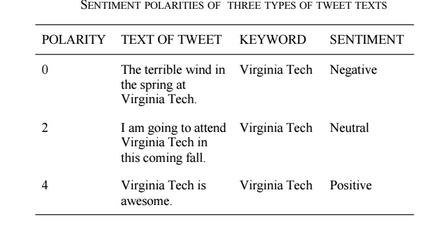

I make my own university ranking according to public reputation which is represented by sentiment score. However, public reputation is only on of the factors that should be referenced when evaluating a university. Thus, I want to present an overall ranking which combined both commercial ranking and our ranking. And there are three main types of tweets texts as below.

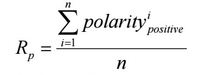

Sentiment Score: the Sentiment Score was calculated for public reputation. The positive rate of each university was used as the sentiment score for the public reputation ranking. The formula below defines the positive rate. Note that the negative polarity was not considered since it is equal to zero.

Where n is the total number of tweets for each university; And represents the positive polarity for a tweet, 4.

After I completed the Sentiment Anlysis, I would proceed to build a classifier for Sentiment Analysis using machine learning algorithm. This algorithm I will discuss further in the next writing, which is about Maximum Entropy classifier.

Author: The Octoparse Team

– See more at: Octoparse Blog