Accurate multichannel campaign attribution has stumped the online marketing industry for years. But what if the solution is to stop worrying about attribution, and move to an optimization-driven approach?

You know those photo mosaic images, which suddenly became terribly popular a few years back? They cleverly use lots of individual tiny images to make up one large image. If you look closely you can make out the individual images, but you have to stand back to take in the full picture.

The same is true for measuring the impact of digital marketing. When you step back, techniques like Marketing Mix Modeling can show that, in aggregate, digital marketing works as a part of the overall marketing mix – it complements other elements of the mix such as television and retail to drive sales.

On the other hand, zooming in, it’s fairly straightforward to understand the impact of individual digital marketing campaigns at a user level, using various forms of instrumentation and tagging to link user actions to the marketing that they’ve seen. These techniques have become so common that it’s a brave marketer today who spends money on a digital campaign without providing some kind of performance reporting.

The problem comes in the middle. If you zoom out of a mosaic picture, there is a point where you lose the detail of the individual photos but the bigger picture has not yet emerged. And so it is with digital marketing; understanding the way that multiple campaigns, across multiple digital channels, interact to influence behavior at the user level is a very challenging problem that has stumped the industry for years – the so-called “attribution problem”.

To put it another way, we’ve moved on from deciding whether to do digital marketing; the conundrum today is which digital marketing to do, and especially which mix of digital marketing will drive the best results.

The attribution problem is a really tough one for a few reasons:

- Digital marketing channels don’t drive user behavior independently, but in combination, and also interfere with each other (for example, an email campaign can drive search activity);

- User “state” (the history of a user’s exposure and response to marketing) is changing all the time, which makes it very difficult to take a snapshot of users for analysis purposes;

- Attribution models include so many assumptions (for example, “decay curves” or “adstock” for the influence of certain channels) that they end up reflecting the assumptions rather than reality.

The trouble is, most organizations understand that they can’t just continue to invest in, execute and analyze their digital marketing in a siloed, channel-by-channel fashion; they want to create a consistent, coherent dialog with their audience that spans channels and devices. But how to do it?

Digital Marketing as an Optimization Problem

The answer to this dilemma lies in thinking differently about digital marketing, and treating it as a user-centric optimization problem instead of a descriptive analytics problem.

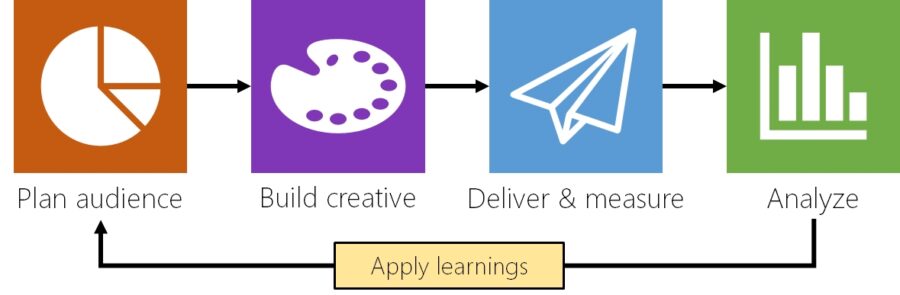

To understand how this is different from traditional digital marketing, let’s first look at how most digital marketing campaigns are set up today:

In a traditional digital campaign, a specific audience is identified by a marketer (either from first or third-party data, or a combination of the two) and a set of creative (ads, emails, etc.) is then delivered to that audience. After some time (measured in days or weeks) the marketer looks at the results and makes decisions about how to improve the next campaign, or make adjustments to the current campaign, to improve effectiveness.

The performance of the campaign can be improved in-flight by using techniques like dynamic creative optimization to weed out low-performing creatives before the campaign has finished. But overall insights about the campaign are usually left to the end. Most campaigns are analyzed on a channel-by-channel basis and, even when they use control groups to measure lift, the analysis can’t take into account the impact of other channels.

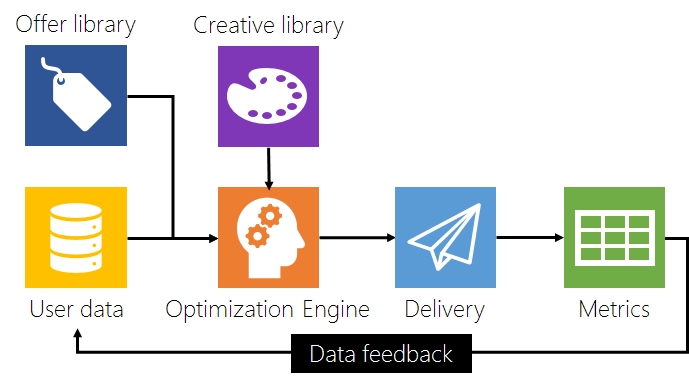

With an optimization-driven approach, instead of the marketer creating a series of discrete campaigns for individual products or offers, each with its own target audience and its own outcome measurement, the marketer creates a series of “offers” (essentially, product messages) which can be delivered to users. The offers – together with a set of creative assets – are made available to an optimization engine, which continually tries to predict the combination of offer, creative and channel (email, web etc.) which will deliver the best outcome (click, conversion, revenue, etc.) with users.

A good example of this in a single digital channel is Amazon’s product recommendation feature on its website, which combines information that it has about you (your previous purchases, demographic information, what you’re currently purchasing) and information about the products to present a series of “suggested products” (in other words, offers) to you.

Multi-armed banditry

There are a number of things you need in place to make the above model work, such as a single creative repository, and a consistent execution model across multiple channels. The magic at the center of the picture, however, is the optimization engine. This is a piece of software that is capable of running multiple concurrent combinatorial tests of your creative, offers, user segments and channels, to find the combinations that deliver the best results. This is a classic multi-armed bandit problem.

This statistical problem is so called because it is based on the idea of an imaginary gambler at a row of slot machines, trying to decide which ones to put money into to generate the best return. The gambler could use one of the following strategies:

- Pick a slot machine at random and stick with it, which would mean he’d most likely miss the most generous machine, and could pick a terrible one

- Spread his money equally between all the machines, minimizing his chances of putting all his money into a bad machine, but ensuring he doesn’t strike it rich, either

The smarter thing for the gambler to do is to start by putting a little money into each machine, and then, based on the results he gets, divert the remainder of his money to the machine that delivered the best return. The first of these two phases is known as the “explore” phase; the second, the “exploit” phase.
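The explore-then-exploit strategy can be sketched in a few lines of Python. This is a minimal simulation under stated assumptions, not a production algorithm: the function name `explore_then_exploit`, the payout probabilities and the fixed number of exploratory pulls per machine are all hypothetical choices made for illustration.

```python
import random


def explore_then_exploit(payout_probs, budget, explore_pulls_per_machine, seed=0):
    """Simulate a gambler who samples every machine, then commits to the best one.

    payout_probs: hypothetical chance that each machine pays out 1 unit per pull.
    budget: total number of pulls the gambler can afford.
    Returns (index of the machine chosen to exploit, total winnings, pulls per machine).
    """
    n = len(payout_probs)
    if explore_pulls_per_machine < 1 or budget < n * explore_pulls_per_machine:
        raise ValueError("budget must cover at least one exploratory pull per machine")

    rng = random.Random(seed)
    wins = [0] * n
    pulls = [0] * n

    def pull(machine):
        # One pull: pays out 1 with the machine's probability, otherwise 0.
        pulls[machine] += 1
        reward = 1 if rng.random() < payout_probs[machine] else 0
        wins[machine] += reward
        return reward

    total = 0

    # Explore phase: put a little money into every machine.
    for machine in range(n):
        for _ in range(explore_pulls_per_machine):
            total += pull(machine)

    # Exploit phase: spend the rest on the machine with the best observed return.
    best = max(range(n), key=lambda m: wins[m] / pulls[m])
    for _ in range(budget - n * explore_pulls_per_machine):
        total += pull(best)

    return best, total, pulls
```

With a longer explore phase the gambler is less likely to commit to the wrong machine, but spends more of the budget on machines that turn out to be poor.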

Multi-armed bandit experimentation is good for situations where conditions can change over time. Our gambler can choose to continue to divert a little money to the other machines even once he’s identified the “best” machine, since slot machines can vary their payout over time; this minimizes his chance of losing out if conditions change. As a result, multi-armed bandit experimentation is well-suited to campaign optimization because the users’ state is changing all the time – a user who has already received three emails about a product is much less likely to click on a fourth than a user who has never received an email about the same product, for example. Multi-armed bandit experimentation methodologies can be slower to deliver statistically significant results than traditional A/B or multivariate testing, but they are more robust in dynamic environments.
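One common way to keep diverting "a little money" to the other machines is an epsilon-greedy policy: most of the time it plays the machine with the best observed average, and a small fraction of the time it plays a random one. The sketch below is illustrative only; the class name `EpsilonGreedyBandit` and the default `epsilon` of 0.1 are assumptions, not something the approach prescribes.

```python
import random


class EpsilonGreedyBandit:
    """Minimal epsilon-greedy bandit (illustrative sketch)."""

    def __init__(self, n_arms, epsilon=0.1, seed=0):
        self.epsilon = epsilon              # share of pulls reserved for exploration
        self.rng = random.Random(seed)
        self.pulls = [0] * n_arms           # number of times each arm was played
        self.mean_reward = [0.0] * n_arms   # running average reward per arm

    def choose(self):
        # Explore: with probability epsilon, play a uniformly random arm.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.pulls))
        # Exploit: otherwise play the arm with the best observed average.
        return max(range(len(self.pulls)), key=lambda arm: self.mean_reward[arm])

    def update(self, arm, reward):
        # Incrementally update the running average for the arm that was played.
        self.pulls[arm] += 1
        self.mean_reward[arm] += (reward - self.mean_reward[arm]) / self.pulls[arm]
```

In a campaign setting each arm would be a treatment and the reward a click or conversion; `choose()` is called before each delivery and `update()` once the outcome is known.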

Dimensions of optimization

When we apply multi-armed bandit experimentation to campaign optimization, it’s helpful to think of an overall “optimization space” that is made up of all the attributes that we can optimize over. Broadly, these attributes fall into three categories:

- Audience attributes: Information that we have about the audience for the marketing, at the individual level, such as previous purchases, demographic data, product/website usage, or marketing engagement

- Offer attributes: Information about the offers themselves, such as product category, price range, or purchase model

- Tactic attributes: Information (reflecting choices made) about the tactics that we are using in our campaigns, such as channel, creative, format, or timing

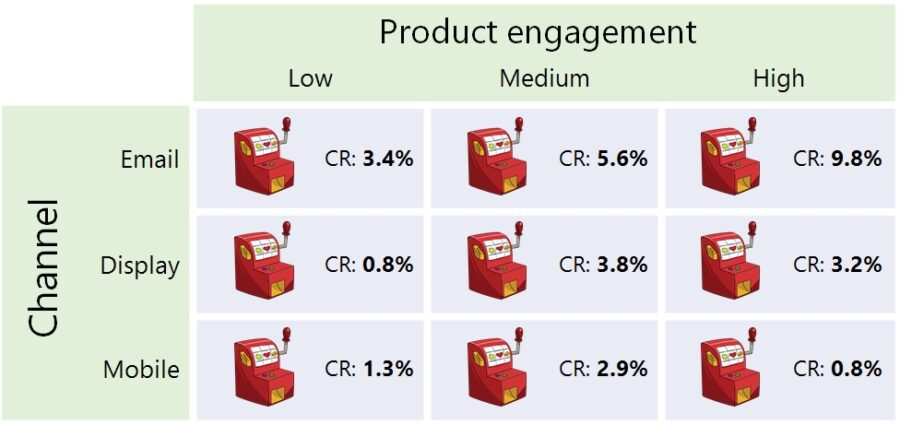

The first task of the optimization engine is to carve up this multi-dimensional space into a (quite large) number of virtual “bandits” or treatments, run concurrent marketing tests in each of the treatments, and measure the results. To visualize this with a simple example, let’s imagine we’re just using two dimensions to carve up the space:

- User product engagement level (low, medium or high)

- Marketing channel (email, advertising, mobile)

Because each of these dimensions has just three members, there are 9 treatments in total, as in the diagram below:

For each treatment, the engine calculates the value of a success metric (in this example, conversion rate) based on delivery of messaging in each treatment. So in the example above, emails sent to the “Low” product engagement group of users resulted in a 3.4% conversion rate, while mobile messages to the Medium group generated a 2.9% conversion rate.
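A rough sketch of how the nine treatments and their conversion rates could be tracked is shown here. The names `TREATMENTS`, `new_stats`, `record_delivery` and `conversion_rate` are hypothetical, and the sketch holds no real results: it only shows the bookkeeping, assuming each delivery is logged along with whether it converted.

```python
from itertools import product

ENGAGEMENT_LEVELS = ["low", "medium", "high"]
CHANNELS = ["email", "advertising", "mobile"]

# Every (engagement level, channel) pair is one treatment: 3 x 3 = 9 in total.
TREATMENTS = list(product(ENGAGEMENT_LEVELS, CHANNELS))


def new_stats():
    """Create an empty delivery/conversion counter for every treatment."""
    return {t: {"deliveries": 0, "conversions": 0} for t in TREATMENTS}


def record_delivery(stats, treatment, converted):
    """Log one message delivered in a treatment and whether the user converted."""
    stats[treatment]["deliveries"] += 1
    if converted:
        stats[treatment]["conversions"] += 1


def conversion_rate(stats, treatment):
    """Return conversions / deliveries, or None if nothing was delivered yet."""
    cell = stats[treatment]
    if cell["deliveries"] == 0:
        return None
    return cell["conversions"] / cell["deliveries"]
```

Adding a third dimension, such as creative, only means adding another list to the `product(...)` call, which is also why the number of treatments grows quickly.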

Based on these results, the optimization engine then needs to decide which treatment(s) it should focus its delivery on going forward to generate the best outcome overall. In the table above, the winning treatment is Email to High engaged users, generating a conversion rate of 9.8%. But of course the engine can’t just put all its eggs in this one basket, for a couple of reasons: firstly, we want our marketing to cover all the addressable audience, not just one part of it; and secondly, it’s likely that there is some interaction between the effects of the different treatments – for example, a user who has received an email and a mobile message may be more likely to convert than one who has just received an email.

So what the engine really needs to do is decide which combination of treatments it should go forward with. This is called Combinatorial Multi-Armed Bandit experimentation, or CMAB for short, and is the subject of much academic study at the moment. If you’d like to learn more about this, my colleague Wei Chen of Microsoft Research has published a paper on it, which you can read here.
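To make the idea of choosing a combination concrete, here is a deliberately simplified sketch that picks one channel for each audience segment, so that every segment stays covered. It is not the CMAB algorithm from the research literature: it greedily takes the best estimated rate within each segment and ignores interactions between treatments. The function name `pick_channel_per_segment` and the rates in the usage example are hypothetical.

```python
def pick_channel_per_segment(estimated_rates):
    """Choose, for each segment, the channel with the highest estimated rate.

    estimated_rates: dict mapping (segment, channel) -> estimated conversion rate.
    Returns a dict mapping segment -> chosen channel.
    """
    best = {}
    for (segment, channel), rate in estimated_rates.items():
        current = best.get(segment)
        # Keep this channel if the segment has no choice yet or this one is better.
        if current is None or rate > estimated_rates[(segment, current)]:
            best[segment] = channel
    return best


# Hypothetical estimates, for illustration only (not the article's results).
example_rates = {
    ("low", "email"): 0.03,
    ("low", "mobile"): 0.01,
    ("high", "email"): 0.05,
    ("high", "mobile"): 0.07,
}
chosen = pick_channel_per_segment(example_rates)
```

A real engine would also keep exploring the channels it did not pick, and would need some way to model the combined effect of several messages reaching the same user.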

No humans required?

Advocates of optimization-based marketing are liable to get a bit over-excited and say that this means that humans will no longer be needed to build campaign plans or audiences, and that in the future we’ll just be able to toss offers into a giant hopper and watch them all be delivered to the perfect audience with no human intervention (though others disagree).

Fortunately for digital marketers, and especially digital marketing analytics professionals, optimization-driven campaigns don’t remove the need for human involvement, though they do change its nature. Instead of creating complex audience segments up front for a campaign, these people will need to identify the attributes that campaigns should use for optimization.

What this does mean for marketers is that the bar is being raised on the level of data-savviness required to do the job; no longer is it sufficient to say “Well, my product is aimed at younger people, so I’m going to target the 18-25 demo and hope for the best”. Marketers will increasingly need to work with data scientists (or pick up some data science skills themselves) to set up effective optimization-driven campaigns.

Getting started

This new approach to digital marketing optimization is a big change from the way that marketers have worked up until now. Fortunately, you don’t have to change everything at once in order to start gaining benefits from this approach.

The best way to get started is to identify which attributes of your offers, audience or tactics you are able to experiment over most easily. If you have a lot of rich data about your audience, for example, you can use that as your experimentation space, carving your users up into many small segments and experimenting with creative variations and other delivery aspects like timing to get the best results. On the other hand, if you have a large and diverse product catalog, you can experiment within that domain, attempting to find the product offers that work best in different circumstances or with different creative.

Once you have built up experience in experimentation in these areas, you can tackle multi-channel experimentation. In addition to rich data on your users and products, this requires you to be able to execute experiments across channels easily, which means that you need an integrated campaign execution system, and an integrated marketing operations function to go with it. Right now, this is the biggest impediment to true cross-channel optimization: Most companies run their digital marketing in separate, channel-focused silos. Building a campaign that can execute seamlessly across multiple channels thus requires lots of cross-organization cooperation, which can be tough to pull off.

Fortunately there are a few companies which are starting to offer solutions for optimization-driven marketing and can start to help you down this path:

| Company | Description |
| --- | --- |
| Amplero | Digital campaign intelligence & optimization platform based on predictive analytics & machine learning |
| Optimove | Multichannel campaign automation solution, combining predictive modeling, hypertargeting and optimization |
| Kahuna | Mobile-focused marketing automation & optimization solution |
| IgnitionOne | Digital marketing platform featuring score-based message optimization; ability to activate across multiple channels |
| BrightFunnel | Marketing analytics platform focusing on attribution modeling |
| ConversionLogic | Cross-channel marketing attribution analytics platform, using a proprietary ML-based approach |

If you know of other players in this space, please let me know in the comments.

Multichannel campaign optimization using combinatorial multi-armed bandit experimentation is a powerful, though nascent, alternative to traditional campaign attribution approaches for maximizing marketing ROI. Although performing true multichannel optimization requires significant investment and maturity in data, marketing automation technology and organizational alignment, it’s possible to get started in a more limited fashion by taking an optimization-driven approach in existing channels, and growing from there.