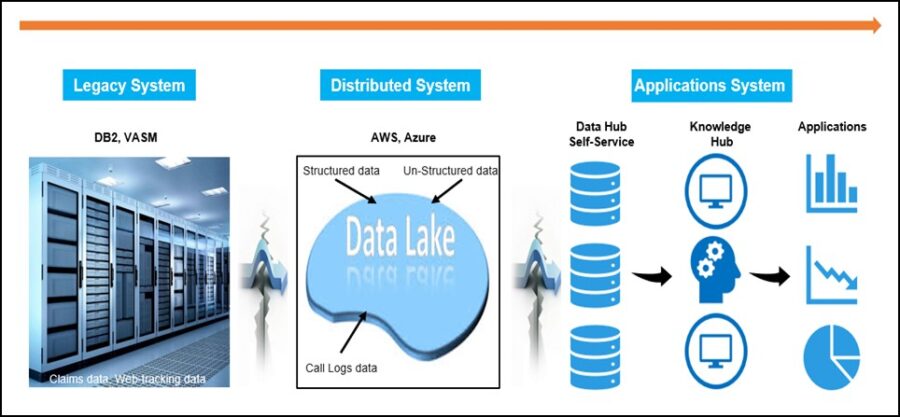

The information technology (IT) infrastructure in traditional organizations, particularly in the insurance industry, typically contains three components: 1) the legacy system, e.g., an IBM mainframe for storing transactional data; 2) the distributed system, e.g., Microsoft Azure or Amazon Web Services, for data governance and usage; and 3) the custom-made application system for analytics and reporting. In most organizations these three parts still function as three disjoint pieces, as I have tried to depict in the image above. From my experience as a hands-on Data Scientist in a large insurance company, I can say with reasonable confidence that this is a serious problem. In practice, it means that process automation efforts automate individual units rather than the entire system. The industry needs an advanced technology that can thread all three units together to realize the full power of automation.

What I call the Master Technology Solution (MTS) is an intelligent process in which data collection, acquisition, preparation, and governance, as well as the execution of advanced analytics and reporting, form one seamless progression: one would 1) extract data from the legacy system, then 2) push the data to the distributed system through ETL processes for profiling, governance, and so on, and finally 3) build and host machine learning models, generate reports, and maintain versioned results in the application system. To me, the desired integration tool is one, such as the MTS, that can be leveraged to accelerate solutions to all types of business problems.

In any corporate environment, a coherent and seamless integration of technology, data, and business solutions is needed to enable data scientists, business analysts, and managers to contribute to improved decision making. Despite data overload, outstanding advances in Artificial Intelligence (AI), and the emergence of highly sophisticated Business Intelligence (BI) tools, it is still extremely challenging to identify a single technology solution, currently available in the AI or BI market or in the broader advanced technology space, that can address data, analytics, and reporting issues starting from the legacy system and then connecting the distributed system and the application system in a coherent manner.

Although the union of all three components is absent in most organizations, some corporations have succeeded in combining the latter two. However, these corporations still rely on people to connect to the legacy system. Therefore, despite the success of a few, the current situation represents a clear obstacle to process automation for the majority. This limitation needs to be addressed, and the industry must find the best way to eliminate human dependencies in the process workflow. I believe that a technology with the capabilities of the MTS is critically needed to remove the current barrier and thus to achieve IT process automation in its truest sense.

There is a lot of discussion these days about AI, its role in process automation, and the scaling of advanced analytics processes. However, the key point to keep in mind is that these advances are all siloed, each tailored to one of those three components. I simply cannot identify a single technology currently available in the market that matches the MTS. Until the industry has such an all-in-one capability, true automation will remain a distant goal.

I can highlight some additional benefits of the MTS beyond its role in automation: 1) access to a single version of the truth for the data, 2) no time lag between the arrival of the latest data and the generation of the latest insight, 3) the ability to develop sustainable and governed analytics solutions, and 4) the democratization of analytics across the organization.

I know that two important questions need to be addressed whenever someone thinks of building a technological tool: 1) What is the best way to build the solution? 2) What are the cost and the time needed to build it? In my opinion, the vendors who have been providing mainframe-related software support to corporations have a clear advantage in building a solution like this. They have the experience and the expert manpower to develop intelligent yet cost-effective APIs that fuse their outputs with the inputs of the distributed systems. This could be done independently by the vendors or as a collaborative effort with the organizations they support. I must admit that I have yet to come up with an answer to the second question. The answer(s) will take shape once discussions of such initiatives are on the table.

Some may criticize the idea of developing a solution like the MTS as impractical, arguing that in these days of the cloud (Amazon, Microsoft, Oracle, etc.), problems like this will be resolved by technology sooner or later, and far more cheaply as well. Frankly, I will be happy to see the problem solved by whatever means. However, until that happens, I see opportunities for collaboration in developing the MTS, either internally or by partnering with vendors. I will answer the criticism with an adage from Nelson Mandela: “It always seems impossible until it is done”. I will even go one step further and paraphrase the maxim to express my view: “It always seems impossible or impractical or unnecessary for some (tech) problems to be solved until someone or a small group of people create workable solutions to those problems and make a fortune out of them”.

Think about it. Why can’t that small group include ‘you and your team’?

Image Sources:

Mainframe: https://www.techrepublic.com/article/the-mainframe-evolves-into-a-n…

Data Lake: https://www.intricity.com/data-warehousing/what-is-a-data-lake/

This blog post first appeared on LinkedIn.