During Season 5 of Saturday Night Live, Steve Martin and Bill Murray performed a skit in which they comically pointed at something in the distance and asked, “What the hell is that…?” While the humor in the skit may have been somewhat obscure, their confusion accurately mirrors today’s confusion with the concept of “transparency.” What the heck is transparency?

Transparency comes up frequently in discussions about ensuring the responsible and ethical use of AI. But it seems to get only cursory attention from people in power while the dangers of unfettered AI models continue to threaten society.

Cathy O’Neil’s “Weapons of Math Destruction” is a brilliant and eye-opening book that exposes the dangers of relying on algorithms to make crucial decisions that shape our lives and society. It reveals the many challenges and pitfalls of algorithms, such as:

- Algorithms are often black boxes, concealed from the scrutiny and understanding of the people they affect and those who implement them.

- Algorithms can use flawed, incomplete, and biased data to generate unfair and harmful outcomes.

- Algorithms can have far-reaching and pervasive effects on millions of people across various domains and sectors.

- Algorithms can undermine people’s opportunities, rights, and well-being.

- Algorithms can diminish trust and accountability and marginalize people.

- Algorithms can destabilize social and economic systems.

Areas where analytic algorithms or AI models already make decisions that can adversely affect people, often without their knowledge, include:

- Employment: Algorithms can discriminate against applicants based on race, gender, age, or other factors. For instance, Amazon scrapped a hiring tool that favored men over women.

- Health Care: Algorithms can deny or limit access to quality health care for certain groups of people. For example, a study found that a widely used algorithm was biased against black patients.

- Housing: Algorithms can exclude or exploit potential tenants or buyers based on income, credit score, or neighborhood. For instance, Facebook was sued for allowing landlords and realtors to discriminate against minorities.

- College Admissions: Algorithms can favor or disadvantage applicants based on their academic performance, extracurricular activities, or personal background. For example, a lawsuit alleged that Harvard discriminated against Asian-American applicants.

- Lending & Financing: Algorithms can charge or refuse loans or credit cards to people based on their financial history, spending habits, or social network. For example, Apple was accused of giving women lower credit limits than men.

- Criminal Justice: Algorithms can predict or influence the likelihood of recidivism, bail, sentencing, or parole for defendants or offenders. For example, a report found that a risk assessment tool was biased against black and Hispanic people.

- Education: Algorithms can assess or affect the learning outcomes, feedback, or recommendations for students or teachers. For example, an algorithm that graded English exams was found to be unreliable and inconsistent.

- Social Media: Algorithms can shape or manipulate users’ or influencers’ content, opinions, or behaviors. For example, a documentary revealed how social media algorithms can polarize and radicalize people.

So, what inalienable rights are mandatory in the 21st century, where more and more of the decisions and actions that impact us and society are being driven by AI models?

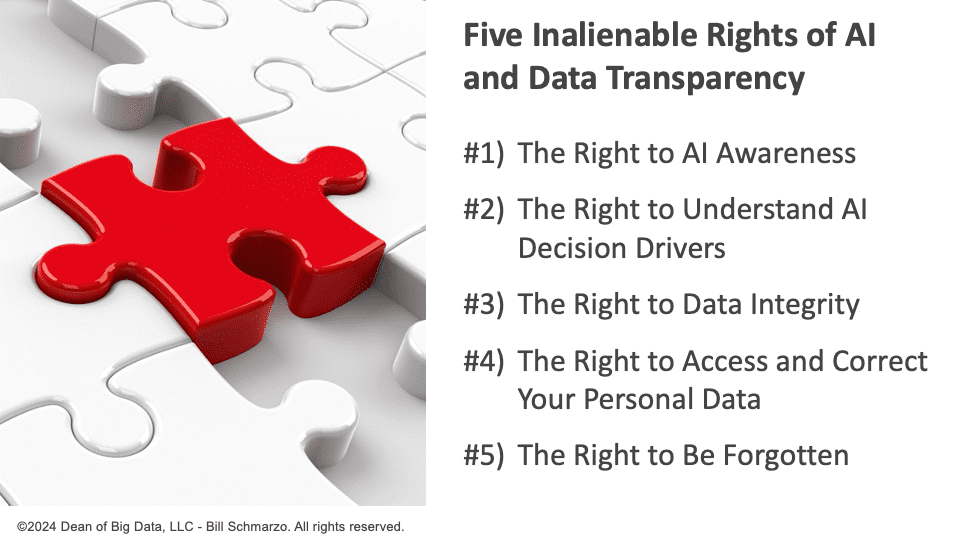

Five Inalienable Rights of AI and Data Transparency

Transparency is essential if organizations want to create AI models people can trust and will comfortably and confidently embrace. Whether people can understand how AI models reason and make decisions can determine whether they embrace those models, and it carries moral implications as well. Unfortunately, explanations of transparency can be too complicated and focus too much on technical details about data and AI technologies.

Consequently, here is my attempt to simplify the concept of transparency for everyone to understand:

Transparency is the ability to see and understand the rationale behind a decision or action.

As a Citizen of Data Science, that means:

- The Right to AI Awareness: Citizens have the right to know when decisions or actions that impact them are made with the help of AI. This transparency is essential if citizens are to develop trust and confidence in the results of the AI models.

- The Right to Understand AI Decision Drivers: Citizens have the right to know the variables and metrics that determine the AI model’s decisions and actions. This helps individuals understand the reasoning behind actions affecting them, evaluate the fairness and relevance of criteria used, and demystify the decision-making process.

- The Right to Data Integrity: Citizens have the right to understand the credibility, trustworthiness, and reliability of the data used in the AI model’s decision-making. This right addresses the potential for misinformation or bias to influence decisions, emphasizing the need for integrity and vigilance in guarding against the use of biased, engineered, or altered data.

- The Right to Access and Correct Your Personal Data: Citizens have the right to access their personal data held by organizations, to request a copy of their data being processed, and, if the data is inaccurate, to request corrections.

- The Right to Be Forgotten: Citizens have the right to request the removal of their personal data. Individuals should be able to request deletion if the data is no longer relevant or necessary or falls into categories specified by law.

Transparency In Action: Social Media

Social media emerged as a powerful platform for sharing news and ideas and fostering collaboration. Its potential for education and growth seemed limitless. However, the landscape has shifted. Radical individuals, fringe organizations, and nefarious actors now weaponize social media. Their intent? To disseminate lies, misinformation, and disruptive narratives that undermine societal trust and destabilize governments.

So, what if we applied the five inalienable rights for AI and data transparency to social media platforms? What might we expect?

| Transparency Rights | Impact on Social Media Experience |
| --- | --- |
| 1. The Right to Awareness | Social media platforms should clearly disclose when AI algorithms influence content presentation. Users should be informed when AI-driven decisions impact the content that is displayed to them. This transparency builds trust and helps users understand why certain posts appear or are suppressed. |
| 2. The Right to Understand Decision Drivers | Social media companies should reveal the factors that determine content ranking and recommendations. Users have the right to know why a specific post or ad appears in their feed. Understanding the decision factors allows users to judge fairness and relevance. |
| 3. The Right to Data Integrity | Social media platforms should ensure the credibility of data sources that underpin their content recommendations. They must proactively guard against misinformation, bias, and altered data that could skew viewing recommendations. Users should be confident that the information they see is reliable, unbiased, and accurate. |
| 4. The Right to Access and Correct Their Data | Social media users should have the ability to review their personal data stored by the social media platform. Corrections can be requested if inaccuracies exist. This right empowers users to verify and manage their own data. |
| 5. The Right to Be Forgotten | Individuals should be able to request removal of specific content or personal data from social media. This protects users’ privacy and gives users control over their digital footprint. |

Heck, we could even leverage Data Science and AI to create a “BS Meter” (BS does not mean Bill Schmarzo in this case). Social media platforms are inundated with content, making it challenging for users to discern fact from fiction. A “BS Meter” could be a valuable tool to encourage critical thinking and empower users to evaluate information more effectively (Figure 1).

Figure 1: BS Meter

The meter could analyze various factors, including:

- Source Credibility: Assess the reliability of the content creator or publisher.

- Fact-Checking: Compare claims against verified information.

- Consistency: Detect contradictions or inconsistencies.

- Emotional Language: Identify sensationalism or bias.

- User Feedback: Consider community ratings and reports.

The meter would assign a score or label (e.g., “Highly Reliable,” “Questionable,” or “Debunked”) that would help users make informed decisions about the content they consume while enabling social media platforms to enhance their accountability and trust.
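To make the idea a little more concrete, here is a minimal Python sketch of how such a meter might combine the five factors into a single score and label. Everything specific in it is my own assumption for illustration: the weights, the 0-to-1 scale for each factor, the thresholds, and the names `FactorScores`, `reliability_score`, and `label_for` are hypothetical. It also assumes the hard part, actually measuring each factor, has already been done elsewhere.

```python
from dataclasses import dataclass

# Hypothetical weights (they sum to 1.0). How the factors should really be
# weighted is an open question; these values are illustrative only.
WEIGHTS = {
    "source_credibility": 0.30,
    "fact_check": 0.30,
    "consistency": 0.15,
    "emotional_language": 0.10,
    "user_feedback": 0.15,
}


@dataclass
class FactorScores:
    """Per-factor scores on an assumed 0.0 (worst) to 1.0 (best) scale."""
    source_credibility: float
    fact_check: float
    consistency: float
    emotional_language: float  # 1.0 = neutral tone, 0.0 = highly sensational
    user_feedback: float


def reliability_score(factors: FactorScores) -> float:
    """Combine the factor scores into one weighted score between 0 and 1."""
    values = vars(factors)
    for name, value in values.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return sum(WEIGHTS[name] * values[name] for name in WEIGHTS)


def label_for(score: float) -> str:
    """Map a score to one of the example labels; thresholds are assumptions."""
    if score >= 0.75:
        return "Highly Reliable"
    if score >= 0.40:
        return "Questionable"
    return "Debunked"


if __name__ == "__main__":
    post = FactorScores(
        source_credibility=0.9,
        fact_check=0.9,
        consistency=0.8,
        emotional_language=0.7,
        user_feedback=0.8,
    )
    score = reliability_score(post)
    print(f"score={score:.2f}, label={label_for(score)}")
```

A simple weighted sum like this has one advantage that fits the theme of this article: the weights and thresholds are visible, so anyone can see which factors drove a given label and challenge them.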

AI and Data Transparency Summary

Transparency is not only a technical requirement but also a cultural one. It promotes a spirit of openness and collaboration to create a culture where data-driven insights are easily accessible and understandable. The goal is to foster an environment where decisions are made based on the clear, understandable, and justifiable use of data. This approach builds trust and encourages informed and engaged participation in data-driven initiatives.

Unfortunately, we don’t apply these five inalienable transparency rights to all of society and the policy decisions made by our politicians. Should we expect less from our leaders than we do from our AI models?