In this article, I discuss the main problems of standard LLMs (OpenAI and the likes), and how the new generation of LLMs addresses these issues. The focus is on Enterprise LLMs.

LLMs with Billions of Parameters

Most of the LLMs still fall in that category. The first ones (ChatGPT) appeared around 2022, though Bert is an early precursor. Most recent books discussing LLMs still define them as transformer architecture with deep neural networks (DNNs), costly training, and reliance on GPUs. The training is optimized to predict the next tokens or missing tokens. However, this task is remotely relevant to what modern LLMs now deliver to the user, see here. Yet it requires time and intensive computer resources. Indeed, this type of architecture works best with billions or trillions of tokens. In the end, most of these tokens are noise, requiring smart distillation for performance improvement.

The main issues are:

- Performance: Requires GPU and large corpuses as input data. Re-training is expensive. Hallucinations are still a problem. Fine-tuning is delicate (Blackbox). You need prompt engineering to get the best results. Mixtures of experts (multiple sub-LLMs, DeepSeek) is one step towards improving accuracy.

- Cost: Besides the GPU costs, the pricing model charges by the token, incentivizing vendors to use models with billions of tokens. Yet, a mere million is more than enough for a specialized corporate corpus, especially when not relying on DNNs. While there is a trend to switch between CPU and GPU as needed to minimize costs, we are still a long way from no-latency, in-memory LLMs.

- Old architecture: Innovation consists of improvements to the existing base model. Evaluations metrics miss important qualities such as depth or exhaustivity, and do not take into account the relevancy score attached to prompt results. There is no real attempt to truly innovate: DNNs, old-fashioned training and transformers are the norm. Standard methods such a fixed-size embeddings, vector databases, or cosine similarity are present in most models. Contextual elements such as corpus taxonomy are not properly leveraged. Chunking, evaluation and reinforcement learning haven’t changed much.

- Adaptability: Generic models are not well suited to particular domains such as healthcare or finance. They may be hard to deploy on-premises. Few models communicate with others to get the best of all. Also, they rely on the same stemming, stopwords and other NLP techniques, without adaptation to specific sub-corpuses. Perhaps the most prominent mechanism for adaptability is the use of agents.

- Usability: UIs consist of a prompt search with limited functionalities. While it takes into account past prompts within a session, it comes with few if any parameters for fine-tuning by the end user. Typically, there is no or limited user customization.

- Security: Hallucinations are still present but tend to become more subtle. It makes it difficult to detect them. Access to external APIs, external data storage or processing, augmentation with external corpuses, business decisions based on hallucinations, and extensive rewording of the original material — without accurate referencing — create risks and liabilities. Python libraries (stemming, autocorrect and so on) are faulty. Developers may not always be aware of important glitches in these libraries, and workarounds. Replicability is sometimes an issue.

In the next section, I discuss truly innovative developments to LLMs. These are techniques and features that power the next generation of large language models, that is, LLM 2.0.

LLM 2.0, The NextGen AI Systems for Enterprise

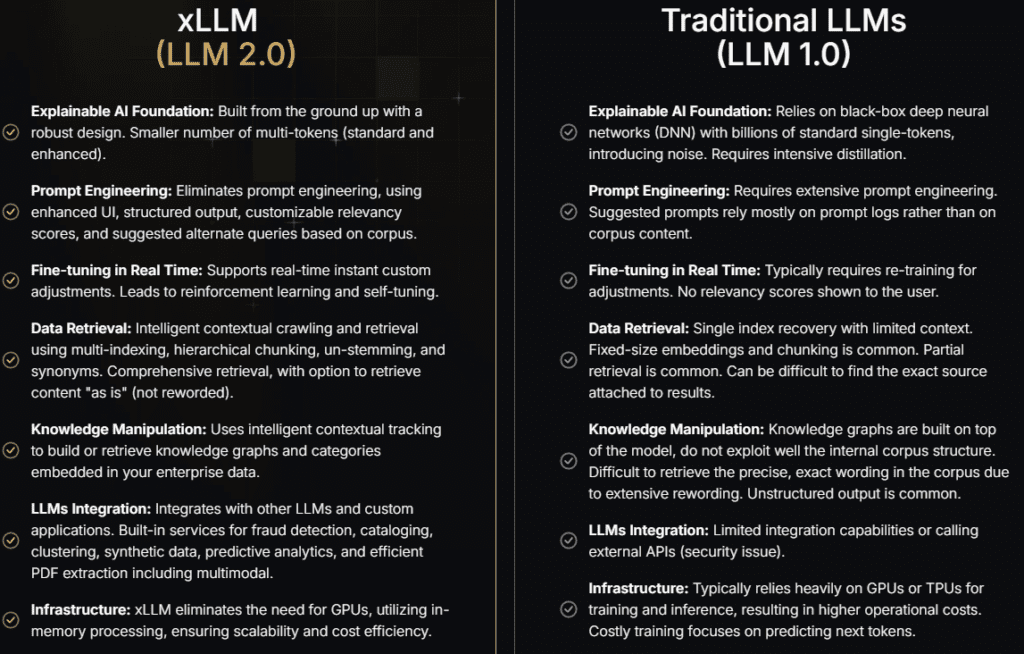

Here, I describe some of the powerful features and components that we recently implemented in our LLM 2.0 technology, known as xLLM for Enterprise. We are moving away from “bigger is better”, abandoning GPU, next-token prediction via costly training, transformers, DNNs, and a good chunk of standard techniques. To mirror the previous section, I break it down into the same categories.

- Performance: In-memory LLMs with real-time fine-tuning, zero weight, no DNN, no latency. Short lists of long tokens and multi-tokens rather than long lists of short tokens. No hallucinations, see here. Focus on depth, exhaustivity yet conciseness, and structured output in prompt results. Contextual and regular tokens, with the former found in contextual elements retrieved from the corpus, or added post-crawling: categories, tags, titles, index keywords, glossary, table of contents, search agents (“how to”, “what is”, “case studies”, “find table”) and so on. One sub-LLM per top corpus category (HR, marketing, finance, sales, and so on).Infinite contextual window thanks to contextual elements attached to chunks during the crawl or post-crawling.

- Cost: Focus on ROI. Far less expensive than standard models, yet much faster, more accurate, exhaustive, with results ordered by relevancy.

- New architecture: Use of sorted n-grams, acronyms and synonyms tables as well as smart stemming to guarantee exhaustivity in prompt results. Relevancy engine to select few but best items to show to the user, out of a large list of candidates. Hierarchical chunking and multi-index. Vector databases replaced by very fast home-made architecture ideal for sparse keyword data. Cosine similarity replaced by PMI. Variable-length embeddings. Un-stemming. Backend tables stored as nested hashes with redundant tables for fast retrieval, including transposed nested hashes. Input corpus transformed into standardized JSON-like format, whether it comes from PDF repository, public or private website, several databases, or a combination of all. Self-tuning.

- Adaptability: Stopwords and stemmers specific to corpus or sub-corpus. Home-made action agents for enterprise customers. In particular: predictive analytics on retrieved tables, best tabular synthetic data generation on the market (NoGAN, see here), taxonomy creation or augmentation, unstructured text clustering, auto-tagging. On-premises built. User customization via intuitive parameters. Names and number of contextual elements specific to each chunk.

- Usability: Rich UI allows the user to select agents, select sub-LLMs, fine-tune parameters in real time, run suggested alternate prompts in one click from keyword graph linked to prompt. Display 10-20 cards with summary information (title, tag, category, relevancy score, time stamp, chunk size, and so on) allowing the user to click on the ones he is interested in to get full information with precise corpus reference. Options: search by recency, exact or broad search, negative keywords, search with higher weight on first prompt token. No-code debugging mode for end users. Pre-made results and caching for top prompts. All this significantly reduces the need for prompt engineering.

- Security: Full control on all components, no external API calls to OpenAI and the likes, no reliance on external data. Home-made, built from scratch with explainable AI. On-premises implementation is possible. Encryption.

Despite specialization, the technology adapts easily to many industries. Calling it a small LLM is a misnomer, since you can blend many of them to cover a large corpus or multiple corpuses. Finally, while a key selling point is simplicity, the technology is far from simplistic. In some ways, while almost math-free, it is more complex than DNNs, due to the large number of optimized components — some found nowhere else — and their complex interactions.

For more about this new technology, see here for technical articles and books, and here to request more information. A PowerPoint presentation is available here.

About the Author

Vincent Granville is a pioneering GenAI scientist, co-founder at BondingAI.io, the LLM 2.0 platform for hallucination-free, secure, in-house, lightning-fast Enterprise AI at scale with zero weight and no GPU. He is also author (Elsevier, Wiley), publisher, and successful entrepreneur with multi-million-dollar exit. Vincent’s past corporate experience includes Visa, Wells Fargo, eBay, NBC, Microsoft, and CNET. He completed a post-doc in computational statistics at University of Cambridge.