For the past several years I’ve been teaching myself guitar. I’d studied piano in school, so I didn’t expect to make fundamental mistakes as I learned. I figured the basic mistakes I’d made studying piano had already taught me what I needed to know.

But after struggling to memorize fretboard patterns in isolation, I realized I wasn’t learning in sufficient context. I learned chords. I studied arpeggios. I studied scales. I learned songs. But I still hadn’t mastered the fretboard.

What was missing from my method of learning? I wasn’t studying all the associations I needed within any one area of the fretboard.

Fundamentally, only 12 notes make up an octave, and those notes repeat at higher and lower octaves. That was the base map I needed to start with. What I needed to build up over time was a mapping of mappings: how chords relate to arpeggios and scales, and how all of them relate to locations on the fretboard. The guitar’s layout made this difficult, because the same note turns up in several places across different strings.
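To make the base map and the layers built on it a little more concrete, here is a minimal Python sketch. It assumes standard tuning (E A D G B E), names notes with sharps only, and uses hypothetical helper names (`note_at`, `major_triad`, `chord_positions`) rather than any library’s API. Each fret raises a string by one semitone, so a note is simply the open string plus the fret, modulo 12.

```python
# The 12 pitch classes that repeat in every octave (sharps only, for simplicity).
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Assumption: standard tuning, string 6 (low E) to string 1 (high E),
# stored as indexes into NOTES.
STANDARD_TUNING = {6: 4, 5: 9, 4: 2, 3: 7, 2: 11, 1: 4}


def note_at(string: int, fret: int) -> str:
    """Base map: each fret raises the open string by one semitone."""
    return NOTES[(STANDARD_TUNING[string] + fret) % 12]


def major_triad(root: str) -> list[str]:
    """Second map: a major chord is the root plus 4 and 7 semitones."""
    i = NOTES.index(root)
    return [NOTES[(i + step) % 12] for step in (0, 4, 7)]


def chord_positions(root: str, lo_fret: int, hi_fret: int) -> list[tuple[int, int, str]]:
    """Mapping of mappings: chord -> notes -> locations in one fretboard region."""
    tones = set(major_triad(root))
    return [
        (string, fret, note_at(string, fret))
        for string in sorted(STANDARD_TUNING, reverse=True)  # low string first
        for fret in range(lo_fret, hi_fret + 1)
        if note_at(string, fret) in tones
    ]
```

For example, `note_at(6, 12)` returns `"E"` again, the same note one octave up, and `chord_positions("C", 0, 3)` lists every C, E and G in the first three frets, including all the notes of the familiar open C chord shape.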

I didn’t have this same issue with the piano. Why? The piano keyboard is a foundational, logical, visual map to the notes and the intervals between them. And octave by octave, that same map repeats over and over again, up and down the 88 keys.

Composers can build their music on this foundation in a logically consistent way. No wonder so many composers work at the piano rather than at other instruments.
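By contrast, the piano’s map fits in a single formula. In this sketch (the `piano_key` helper is hypothetical, and scientific pitch notation is assumed), key 1 of an 88-key piano is A0, and every 12 keys the same 12 note names repeat one octave higher, so each key corresponds to exactly one pitch.

```python
# The same 12 pitch classes, repeating octave by octave.
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def piano_key(n: int) -> str:
    """Name of key n on an 88-key piano, counting the lowest key (A0) as 1."""
    if not 1 <= n <= 88:
        raise ValueError("an 88-key piano has keys 1..88")
    semitones_above_c0 = n + 8  # key 1 (A0) sits 9 semitones above C0
    return f"{NOTES[semitones_above_c0 % 12]}{semitones_above_c0 // 12}"
```

With this numbering, `piano_key(40)` is middle C (`"C4"`), `piano_key(52)` is `"C5"`, and `piano_key(88)` is the top key, `"C8"`. Moving 12 keys always means moving one octave.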

Music consists entirely of the characteristics of notes and the relationships between those notes. Without the continual comparison of one note to another as we listen, music makes no sense.

Once relationships make basic comparisons possible, we can create contexts and compare one context with another. Mussorgsky’s Pictures at an Exhibition is an excellent example: a panoply of different musical contexts woven together consistently. There’s a story about the pictures in sequence and how they compare to one another, and there’s a story within each picture.

Each context is distinctive, with its own musical elements, yet all were composed at the piano. And the pictures that inspired Mussorgsky were all by one artist, Viktor Hartmann. In that sense, each picture is a context nested within a larger context.

Contextual computing as a mapping of mappings

Similarly, effective, scalable data integration and interoperability hinge on the ability to compare one entity with another. That’s what makes basic integration possible. What are the similarities, and what are the differences? What belongs together, and why? How do those comparisons change over time?

Ambiguity thrives where clear comparisons between entities can’t be made. And where there is ambiguity, computation can’t be useful.

The key to reducing ambiguity and enabling interoperability at scale is multiple tiers of comparisons, all kept logically consistent. To return to Pictures at an Exhibition, the bottommost tier holds the most detail: the individual notes and how they relate to one another. The middle tiers hold the musical phrases, melodies, harmonies and rhythms that represent what’s in the pictures themselves. The topmost tier is the shape of the overall composition and how it tells the larger story.

Making comparisons logically consistent within tiers, between tiers and across all tiers requires the right degrees of disambiguation, abstraction and synthesis. Describing the relationships between entities explicitly makes this tiering possible, and it’s how different contexts get built and related to one another. How these relationships work together constitutes a mapping of mappings.
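Here is a minimal sketch of that idea in Python. It assumes a toy in-memory store rather than a real triple store or RDF library. Each relationship is stated explicitly as a (subject, predicate, object) triple, and following those triples upward recovers the tiers, from a phrase to its movement to the whole suite. The `ContextGraph` class, the predicate names `partOf` and `inspiredBy`, and identifiers such as `phrase:GnomusOpening` are all hypothetical.

```python
from collections import defaultdict, deque


class ContextGraph:
    """Toy in-memory set of explicit (subject, predicate, object) statements."""

    def __init__(self) -> None:
        self._edges = defaultdict(set)  # (subject, predicate) -> set of objects

    def add(self, s: str, p: str, o: str) -> None:
        self._edges[(s, p)].add(o)

    def objects(self, s: str, p: str) -> set[str]:
        return set(self._edges.get((s, p), ()))

    def contexts(self, s: str, preds: tuple[str, ...] = ("partOf", "inspiredBy")) -> list[str]:
        """Breadth-first walk up the explicit relationships, nearest context first."""
        found: list[str] = []
        queue = deque([s])
        while queue:
            node = queue.popleft()
            for p in preds:
                for parent in sorted(self.objects(node, p)):
                    if parent != s and parent not in found:
                        found.append(parent)
                        queue.append(parent)
        return found

    def shared_contexts(self, a: str, b: str) -> set[str]:
        """Compare two entities by the contexts they have in common."""
        return set(self.contexts(a)) & set(self.contexts(b))


# Hypothetical tiers: phrase -> movement (a picture) -> suite -> the artist behind it.
g = ContextGraph()
g.add("phrase:GnomusOpening", "partOf", "movement:Gnomus")
g.add("movement:Gnomus", "partOf", "work:PicturesAtAnExhibition")
g.add("movement:GreatGateOfKiev", "partOf", "work:PicturesAtAnExhibition")
g.add("work:PicturesAtAnExhibition", "inspiredBy", "artist:ViktorHartmann")
```

Here `g.contexts("phrase:GnomusOpening")` returns the movement, then the suite, then the artist, and `g.shared_contexts("movement:Gnomus", "movement:GreatGateOfKiev")` shows that two very different pictures still share the suite and Hartmann as enclosing contexts. Nothing in the code knows about music; the tiers come entirely from the stated relationships.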

The third phase of AI–contextual computing

Machines can only solve problems within their frame of reference. Contextual computing would allow them to work within an expanding frame of reference. In the process, machines could get closer to understanding in the way that humans do.

How do we get to contextual computing? Several years ago, John Launchbury, then at DARPA, put together a video explaining the various approaches to AI over its history and how those approaches must come together if we’re to move closer to artificial general intelligence (AGI).

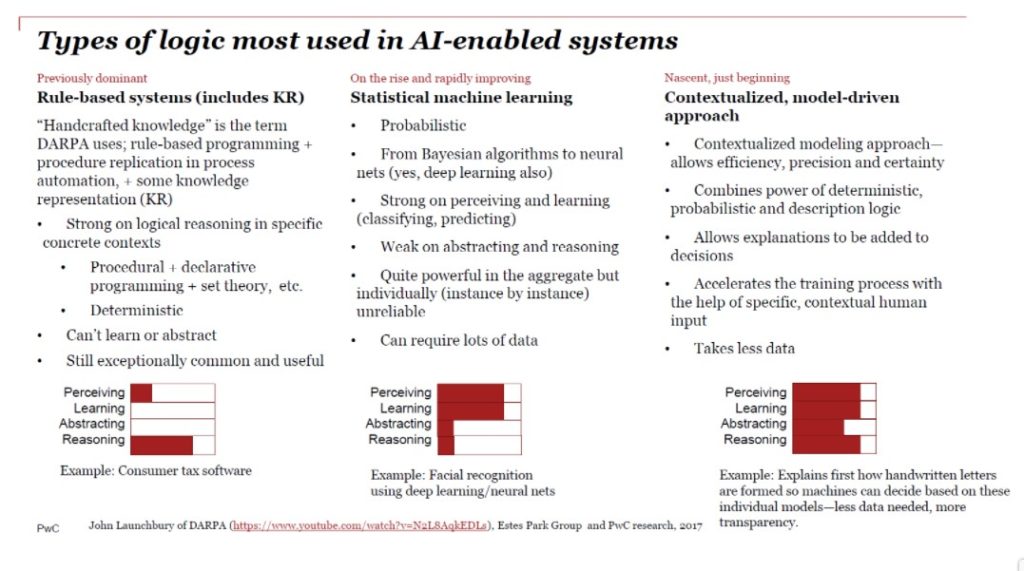

Launchbury remembers the first phase of AI: rule-based systems that include knowledge representation (KR). Those systems, he pointed out, were quite strong at reasoning within specific contexts. Rule-based systems remain prominent; TurboTax is one example he gave. And knowledge representation using declarative languages, in the form of knowledge graphs, continues to be the most effective way of creating and knitting together contexts.

The second phase of AI Launchbury outlined is the one we’re in now: statistical machine learning. This phase includes deep learning (multi-layered neural networks) and focuses on the probabilistic rather than the deterministic. As we’ve seen, machine learning can be quite good at perception and learning, but even its advocates admit that deep learning is poor at abstraction and reasoning.

The third phase Launchbury envisions blends the techniques of the first two. This phase harnesses more forms of logic, including description logic. Within a well-designed, standards-based knowledge graph, contexts are modeled, and the models live and evolve alongside the instance data.

In other words, more logic becomes part of the graph, where it’s potentially reusable and can evolve.
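One way to picture logic living in the graph is a tiny forward-chaining reasoner. This Python sketch implements only two RDFS-style rules (subclass transitivity and type inheritance), which are far weaker than the description logics behind OWL; the `infer` function and the instrument classes are hypothetical. The point is that the schema statements (`subClassOf`) sit in the same set of triples as the instance data (`type`), so extending the model is a data change rather than a code change.

```python
Triple = tuple[str, str, str]


def infer(triples: set[Triple]) -> set[Triple]:
    """Apply two RDFS-style rules repeatedly until nothing new is derived:
    1. subClassOf is transitive;
    2. an instance of a class is also an instance of its superclasses."""
    closure = set(triples)
    while True:
        sub = {(s, o) for s, p, o in closure if p == "subClassOf"}
        new = {(a, "subClassOf", c) for a, b in sub for b2, c in sub if b == b2}
        new |= {
            (x, "type", sup)
            for x, p, cls in closure if p == "type"
            for c, sup in sub if c == cls
        }
        if new <= closure:
            return closure  # fixed point: no rule produced anything new
        closure |= new


# Model (schema) and instance data live side by side in one set of triples.
graph = {
    ("Piano", "subClassOf", "KeyboardInstrument"),
    ("Guitar", "subClassOf", "StringInstrument"),
    ("KeyboardInstrument", "subClassOf", "Instrument"),
    ("StringInstrument", "subClassOf", "Instrument"),
    ("myPiano", "type", "Piano"),
    ("myGuitar", "type", "Guitar"),
}
facts = infer(graph)
```

Running `infer(graph)` derives that `myPiano` is an `Instrument` without stating it directly. If someone later adds `("Instrument", "subClassOf", "Artifact")` to the graph, rerunning the same function also classifies both instruments as artifacts; the model evolved, the code did not.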

As with the layout of the piano keyboard, one model is essential and should inform the rest. But in this case, each model is both machine- and human-readable, with rich relationships that make each context explicit, as well as the connections between contexts.

The overall system therefore relies on a mapping of mappings: ideally, a 360-degree view of desiloed, dynamically improving context rather than a static frame of reference.