This article was written by Fjodor Van Veen.

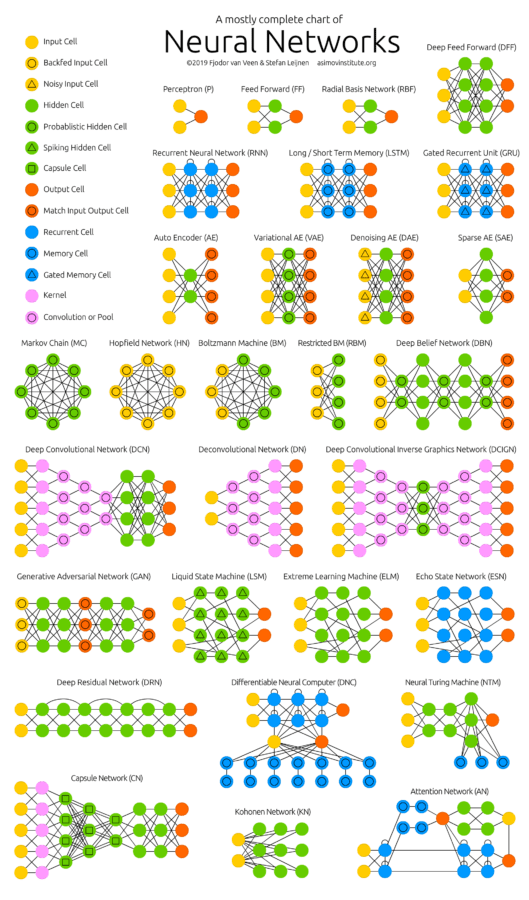

With new neural network architectures popping up every now and then, it’s hard to keep track of them all. Knowing all the abbreviations being thrown around (DCIGN, BiLSTM, DCGAN, anyone?) can be a bit overwhelming at first.

So I decided to compose a cheat sheet containing many of those architectures. Most of these are neural networks, but some are completely different beasts. Though all of these architectures are presented as novel and unique, their underlying relations started to make more sense once I drew the node structures.

One problem with drawing them as node maps: it doesn’t really show how they’re used. For example, variational autoencoders (VAE) may look just like autoencoders (AE), but the training process is actually quite different. The use-cases for trained networks differ even more, because VAEs are generators, where you insert noise to get a new sample. AEs simply map whatever they get as input to the closest training sample they “remember”. I should add that this overview does not explain how each of the different node types works internally (but that’s a topic for another day).
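To make that usage difference a bit more concrete, here is a minimal sketch in Python with NumPy. It assumes a toy encoder and decoder with random, untrained weights; the names `encode`, `decode`, `ae_reconstruct`, `vae_generate` and the sizes are hypothetical and chosen only for illustration. It does not show training (which is where AEs and VAEs really differ), only how each would be called afterwards: an AE takes an input and returns a reconstruction, while a VAE-style generator takes noise and returns new samples.

```python
import numpy as np

rng = np.random.default_rng(0)

INPUT_DIM, LATENT_DIM = 8, 2  # hypothetical sizes, for illustration only

# Stand-ins for trained weights; a real model would learn these.
W_enc = rng.normal(size=(INPUT_DIM, LATENT_DIM))
W_dec = rng.normal(size=(LATENT_DIM, INPUT_DIM))

def encode(x):
    """Map an input vector to a latent code."""
    return np.tanh(x @ W_enc)

def decode(z):
    """Map a latent code back to input space."""
    return z @ W_dec

def ae_reconstruct(x):
    """AE usage: an input goes in, a reconstruction comes out."""
    return decode(encode(x))

def vae_generate(n_samples):
    """VAE-style usage: no input, just decode noise drawn from a standard normal."""
    z = rng.normal(size=(n_samples, LATENT_DIM))
    return decode(z)

x = rng.normal(size=(1, INPUT_DIM))
reconstruction = ae_reconstruct(x)  # same shape as the input
new_samples = vae_generate(3)       # three samples, no input required
```

Both calls end in the same `decode` step, which is why the two look alike as node maps; the difference is in where the latent code comes from.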

It should be noted that while most of the abbreviations used are generally accepted, not all of them are. RNN sometimes refers to recursive neural networks, but most of the time it refers to recurrent neural networks. That’s not the end of it though: in many places you’ll find RNN used as a placeholder for any recurrent architecture, including LSTMs, GRUs and even the bidirectional variants. AEs suffer from a similar problem from time to time, where VAEs, DAEs and the like are simply called AEs. Many abbreviations also vary in the number of “N”s at the end, because you could call it a convolutional neural network but also simply a convolutional network (resulting in CNN or CN).

Composing a complete list is practically impossible, as new architectures are invented all the time. Even published architectures can be quite challenging to find, even when you’re looking for them, and sometimes you just overlook some. So while this list may provide you with some insights into the world of AI, please don’t take it as comprehensive, especially if you read this post long after it was written.

For each of the architectures depicted in the picture, I wrote a very, very brief description. You may find these useful if you’re quite familiar with some architectures but not with a particular one.

Table of contents

- Feed forward neural networks (FF or FFNN) and perceptrons (P)

- Radial basis function (RBF)

- Recurrent neural networks (RNN)

- Long short-term memory (LSTM)

- Gated recurrent units (GRU)

- Bidirectional recurrent neural networks, bidirectional long short-term memory networks and bidirectional gated recurrent units (BiRNN, BiLSTM and BiGRU respectively)

- Autoencoders (AE)

- Variational autoencoders (VAE)

- Denoising autoencoders (DAE)

- Sparse autoencoders (SAE)

- Markov chains (MC or discrete time Markov Chain, DTMC)

- Hopfield network (HN)

- Boltzmann machines (BM)

- Restricted Boltzmann machines (RBM)

- Deep belief networks (DBN)

- Convolutional neural networks (CNN or deep convolutional neural networks, DCNN)

- Deconvolutional networks (DN)

- Deep convolutional inverse graphics networks (DCIGN)

- Generative adversarial networks (GAN)

- Liquid state machines (LSM)

- Extreme learning machines (ELM)

- Echo state networks (ESN)

- Deep residual networks (DRN)

- Neural Turing machines (NTM)

- Differentiable Neural Computers (DNC)

- Capsule Networks (CapsNet)

- Kohonen networks (KN, also self organising (feature) map, SOM, SOFM)

- Attention networks (AN)

The full article explains and illustrates each point and links to the original papers (PDF).