Judging by the academic literature, Text Analytics seems difficult. However, applying it in practice has shown us that Text Classification is much easier than it looks: most Classifiers consist of only a few lines of code. In this three-part blog series we will examine three well-known Classifiers: Naive Bayes, Maximum Entropy and Support Vector Machines. From the introductory blog we know that the Naive Bayes Classifier is based on the bag-of-words model.

With the bag-of-words model we check which words of the text document appear in a positive-words list or a negative-words list. If a word appears in the positive-words list, the total score of the text is increased by 1. If it appears in the negative-words list, the score is decreased by 1. If the total score at the end is positive, the text is classified as positive. If it is negative, the text is classified as negative. Simple enough!
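Below is a minimal sketch of this scoring rule. It assumes the text is already tokenized and uses hypothetical word lists. The function names are our own. Returning "neutral" for a score of exactly 0 is also an assumption, since that case is not covered above.

```python
def bag_of_words_score(tokens, positive_words, negative_words):
    """Add 1 for every positive word and subtract 1 for every negative word."""
    score = 0
    for token in tokens:
        if token in positive_words:
            score += 1
        elif token in negative_words:
            score -= 1
    return score


def classify_bag_of_words(tokens, positive_words, negative_words):
    """Map the total score to a label; 'neutral' for 0 is our own assumption."""
    score = bag_of_words_score(tokens, positive_words, negative_words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Hypothetical word lists and review, for illustration only.
POSITIVE = {"good", "great", "excellent"}
NEGATIVE = {"bad", "awful", "boring"}
tokens = "the plot was great but the acting was awful and boring".split()
label = classify_bag_of_words(tokens, POSITIVE, NEGATIVE)
```

Here "great" adds 1 and "awful" and "boring" each subtract 1, so the review ends with a score of -1 and is labeled negative.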

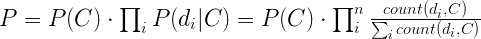

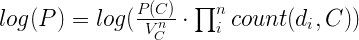

With the Naive Bayes model, we do not take only a small set of positive and negative words into account. Instead we use all words the NB Classifier was trained with, i.e. all words present in the training set. If a word has not appeared in the training set for a class, we have no data for it, and its zero count would make the whole product zero. To avoid this we apply Laplacian smoothing. Strictly speaking, Laplace (add-one) smoothing adds 1 to every word count. Here we take the simpler route of using 1 instead of the conditional probability of the unseen word, which effectively ignores that word. The probability that a document belongs to a class C is proportional to the class probability P(C) multiplied by the product of the conditional probabilities of each word for that class.

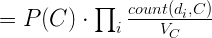

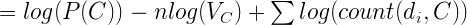

Here count(d_i, C) is the number of occurrences of word d_i in class C, V_C is the total number of words in class C and n is the number of words in the document we are currently classifying.
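Below is a small sketch of this formula on a hypothetical three-review training set. The function name `class_score` and the data are our own. It swaps in standard add-one smoothing, (count(d_i, C) + 1) / (V_C + |V|) with |V| the vocabulary size, instead of the substitute-1 shortcut. The reason is that simply ignoring unseen words can favor whichever class happens to lack them.

```python
from collections import Counter

# Hypothetical toy training set of (tokenized text, label) tuples.
training = [
    (["great", "movie", "great", "acting"], "pos"),
    (["boring", "movie"], "neg"),
    (["awful", "boring", "plot"], "neg"),
]


def class_score(doc, label, training):
    """P(C) * prod_i P(d_i | C) for C = label, using add-one smoothing (assumption)."""
    vocabulary = {w for tokens, _ in training for w in tokens}
    class_docs = [tokens for tokens, y in training if y == label]
    p_c = len(class_docs) / len(training)                          # P(C)
    counts = Counter(w for tokens in class_docs for w in tokens)   # count(d_i, C)
    v_c = sum(counts.values())                                     # V_C
    score = p_c
    for word in doc:                                               # n factors in total
        score *= (counts[word] + 1) / (v_c + len(vocabulary))      # smoothed P(d_i | C)
    return score


doc = ["great", "movie"]
scores = {label: class_score(doc, label, training) for label in ("pos", "neg")}
best = max(scores, key=scores.get)
```

For this document the positive class scores 1/3 · 3/10 · 2/10 = 0.02. The negative class scores 2/3 · 1/11 · 2/11 ≈ 0.011, so the document is classified as positive.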

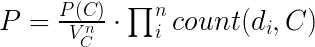

V_C does not depend on the individual words d_i (it only changes if the training set is expanded), so it can be placed outside of the product:
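Using the symbols defined above, and leaving smoothing aside for readability, moving V_C outside the product can be written as follows (our own rendering):

$$
P(C \mid D) \;\propto\; P(C) \cdot \prod_{i=1}^{n} \frac{\mathrm{count}(d_i, C)}{V_C}
\;=\; \frac{P(C)}{V_C^{\,n}} \cdot \prod_{i=1}^{n} \mathrm{count}(d_i, C)
$$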

Handling large training sets:

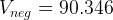

In theory we want a training set as large as possible, since that generally increases the accuracy. In practice this results in a large value for V_C, which is then raised to the power n. For example, for our training set with 5000 reviews we got:

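Taking the n-th power of such a large number will definitely cause computational problems (floating-point overflow), so we should normalize it. We divide V_C by a constant so that it becomes a number close to 1; in our case this constant is 100,000. Because V_C is divided by the same constant for every class, every class probability is multiplied by the same factor 100,000^n. The normalization therefore does not change which class scores highest. This normalization results in: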
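The sketch below shows this normalization. The V_C values (420,000 and 459,000) and the 60-word document length are hypothetical, not our actual training-set figures. Without normalization V_C^n exceeds the float range. With it, the values stay ordinary floats, and the ratio between the classes is unchanged.

```python
import math

K = 100_000.0                                   # normalization constant from the text
V_C = {"pos": 420_000.0, "neg": 459_000.0}      # hypothetical total words per class
n = 60                                          # hypothetical document length


def v_c_power(v_c, n, k=1.0):
    """Return (V_C / k) ** n as a float; raises OverflowError if it is too large."""
    return (v_c / k) ** n


# Without normalization: 459000.0 ** 60 is roughly 10**339, beyond the float range.
try:
    v_c_power(V_C["neg"], n)
    raw_overflows = False
except OverflowError:
    raw_overflows = True

# With normalization: 4.59 ** 60 and 4.2 ** 60 are both ordinary floats.
normalized = {c: v_c_power(v, n, K) for c, v in V_C.items()}

# Both classes are divided by the same K ** n, so their ratio is preserved.
log_ratio = math.log(normalized["neg"]) - math.log(normalized["pos"])
```
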

However, if the number of words in the document, n, is large, this can still lead to computational problems:


```
>>> 4.59**500
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
OverflowError: (34, 'Result too large')
```

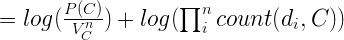

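In Python there are a few modules that can handle very large numbers (such as decimal), but a better solution is to take the logarithm. This does not affect the outcome of the classification process. The logarithm is monotonically increasing, so if a document has the highest probability for a specific class, the logarithm of that probability is also the highest for that class. In addition, the product of probabilities becomes a sum of logarithms and the n-th power becomes a multiplication by n, which keeps the numbers in a manageable range.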

This results in:
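With the symbols defined earlier, taking the logarithm of P(C)/V_C^n · ∏ count(d_i, C) gives the following decision rule (our own rendering):

$$
\hat{C} = \arg\max_{C} \Big[ \log P(C) - n \log V_C + \sum_{i=1}^{n} \log \mathrm{count}(d_i, C) \Big]
$$

The short Python sketch below applies this rule. The priors, normalized V_C values and word counts are all hypothetical. It shows that the log-space score stays finite for a 500-word document, even though computing 4.59**500 directly overflows. All counts must be at least 1 (e.g. after add-one smoothing), since log(0) is undefined.

```python
import math


def log_score(prior, v_c, counts):
    """log P(C) - n * log V_C + sum of log count(d_i, C); all counts must be >= 1."""
    n = len(counts)
    return math.log(prior) - n * math.log(v_c) + sum(math.log(c) for c in counts)


# Hypothetical inputs for a 500-word document: normalized V_C values and
# per-word counts that are already >= 1.
n = 500
scores = {
    "pos": log_score(0.5, 4.20, [3] * n),
    "neg": log_score(0.5, 4.59, [2] * n),
}
best = max(scores, key=scores.get)  # "pos", with no overflow
```
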

Implementing Naive Bayes

With this information it is easy to implement a Naive Bayes Classifier algorithm.

Our training set takes the form of a list of tuples, where each tuple contains two elements: the tokenized text and the label.
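Below is a minimal sketch of such a classifier, assuming the training-set format just described. The class and method names (`NaiveBayesClassifier`, `fit`, `log_score`, `predict`) are our own and not those of any library. It uses standard add-one smoothing over the training vocabulary and sums logarithms instead of multiplying probabilities, which avoids the overflow discussed earlier.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesClassifier:
    """Multinomial Naive Bayes sketch for (tokenized text, label) training tuples."""

    def fit(self, training_set):
        self.n_docs = len(training_set)
        self.class_doc_counts = Counter(label for _, label in training_set)
        self.word_counts = defaultdict(Counter)          # count(d_i, C) per class
        for tokens, label in training_set:
            self.word_counts[label].update(tokens)
        self.total_words = {c: sum(wc.values()) for c, wc in self.word_counts.items()}  # V_C
        self.vocabulary = {w for wc in self.word_counts.values() for w in wc}
        return self

    def log_score(self, tokens, label):
        """log P(C) + sum_i log P(d_i | C), with add-one smoothing (assumption)."""
        score = math.log(self.class_doc_counts[label] / self.n_docs)  # log P(C)
        denominator = self.total_words[label] + len(self.vocabulary)
        for word in tokens:
            score += math.log((self.word_counts[label][word] + 1) / denominator)
        return score

    def predict(self, tokens):
        """Return the class with the highest log score."""
        return max(self.class_doc_counts, key=lambda c: self.log_score(tokens, c))


# Hypothetical toy training set.
training_set = [
    (["great", "movie", "great", "acting"], "pos"),
    (["boring", "movie"], "neg"),
    (["awful", "boring", "plot"], "neg"),
]
clf = NaiveBayesClassifier().fit(training_set)
```

With this toy data, `clf.predict(["great", "movie"])` returns `"pos"` and `clf.predict(["boring", "plot"])` returns `"neg"`.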