This article was written by Tristan Handy.

Over the past nine months I’ve worked with over a dozen venture-funded startups to build out their internal analytics. In doing so, there’s a single SQL function that I have come to use surprisingly often. At first it wasn’t at all clear to me why I would want to use this function, but as time goes on I have found ever more uses for it.

What is it? md5().

Give md5() a varchar and it returns its MD5 hash. Simple…but seemingly pointless. Why exactly would I want to use that?!

Great question. In this post I’m going to show you two uses for md5() that make it one of the most powerful tools in my SQL kit.

#1: Building Yourself a Unique ID

I’m going to make a really strong statement here, but it’s one that I really believe in: every single data model in your warehouse should have a rock solid unique ID.

It’s extremely common for this not to be the case. One reason is that your source data doesn’t have a unique key—if you’re syncing advertising performance data from Facebook Ads via Stitch or Fivetran, the source data in your ad_insights table doesn’t have a unique key you can rely on. Instead, you have a combination of fields that is reliably unique (in this case date and ad_id). Using that knowledge, you can build yourself a unique id using md5().

The resulting hash is a meaningless string of alphanumeric text that functions as a unique identifier for your record. Of course, you could just as easily just create a single concatenated varchar field that performed the same function, but it’s actually important to obfuscate the underlying logic behind the hash: you will innately treat the field differently if it looks like an id versus if it looks like a jumble of human-readable text.

There are a couple of reasons why creating a unique id is an important practice:

- One of the most common causes of error is duplicate values in a key that an analyst was expecting to be unique. Joins on that field will “fan out” a result set in unexpected ways and can cause significant error that is difficult to troubleshoot. To avoid this, only join on fields where you’ve validated the cardinality and constructed a unique key where necessary.

- Some BI tools require you to have a unique key in order to provide certain functionality. For instance, Looker symmetric aggregates require a unique key in order to function.

We create unique keys for every table and then test uniqueness on this key using dbt schema tests. We run these tests multiple times per day on Sinter and get notifications for any failures. This allows us to be completely confident of the analytics we implement on top of these data models.

#2: Simplifying Complex Joins

This case is similar to #1 in its execution but it solves a very different puzzle. Imagine the following case. You have the same Facebook Ads dataset as referenced earlier but this time you have a new challenge: join that data to data in your web analytics sessions table so that you can calculate Facebook ROAS.

In this case, your available join keys are the date and your UTM parameters (utm_medium, source, campaign, etc). Seems easy, right? Just do a join on all 6 fields and call it a day.

Unfortunately that doesn’t work, for a really simple reason: it’s extremely common for some subset of those fields to be null, and a null doesn’t join to another null. So, that 6-field join is a dead end. You can hack together something incredibly complicated using a bunch of conditional logic, but that code is hideous and performs terribly (I’ve tried it).

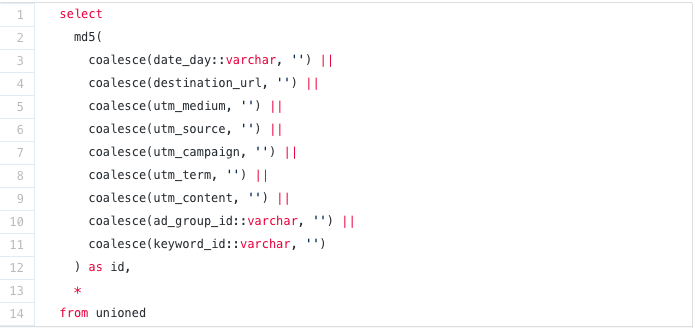

Instead, use md5(). In both datasets, you can take the 6 fields we mentioned and concatenate them together into a single string, and then call md5() on the entire string.

To read the whole article, with illustrations and examples, click here.