My first computer was a Commodore VIC-20, which I bought in 1981. Mr. Ted Becker’s course on computer programming had given me an incredible urge to program in BASIC. I vaguely remember the leap from the painstaking process of programming with punch cards to writing code and watching the program run immediately, once all of the syntax errors were resolved, of course. It was thrilling and addictive! In hindsight, part of that addiction came from being able to code complex calculations involving products and sums of ridiculously large numbers.

Indeed, my first use of a home computer was defined by my vision of the ultimate calculator. I spent hours performing complex calculations that I would never have been able to perform with a calculator. But why was this the case? What choice would I make today if I were presented with a new technology so different that it leapfrogs everything currently available, even given all of the recent advances? What role did the scientific calculator play in defining how we view the modern computer? Finally, what would be the ideal device for today’s data analytics professional? These questions are the focus of this article.

History of Portable Calculators

The need to calculate on the fly with a portable device can be traced back to the earliest origins of mathematics. When early humans began trading goods, keeping track of amounts became vitally important in order to prevent losses. People first counted small quantities on their hands, then moved to counting on the joints of the entire body as the need for larger numbers arose. The first evidence of a shift to a dedicated device, the abacus, is found in Sumerian culture dating back to around 2,500 BC. These devices used a combination of beads and rods to keep track of much larger numbers.

As our lives became more complex and science and mathematics continued to develop, devices were created to meet the need. Napier’s Bones (1617), the slide rule (1622), Pascal’s calculator (1642), Colmar’s calculator (1850), the Curta calculator (1942), the digital calculator (1970) and the graphing calculator (1985) are all examples of major leaps, each arising from a major shift in mathematics or technology. John Napier’s development of the logarithm fundamentally changed how quickly large-scale multiplications and divisions could be performed. The slide rule that followed is well documented as a key component of the U.S. space program. In particular, the Mercury, Gemini and Apollo astronauts all had extensive training in processing rocket propulsion data, performing everyday calculations and calculating trajectories for re-entry and orbit. Computation was so prevalent in astronaut training in the 1960s that NASA made the Pickett slide rule a standard-issue item for Apollo program astronauts.

There was a tremendous amount of pride associated with owning a device that reflected the complex requirements of its owner’s scientific, engineering, mathematical or technological trade. If the pocket protector and its contents were the mark of a genius, the slide rule and, ultimately, the digital calculator signified how skilled that genius was at problem solving.

From Scientific Calculator to Smartphone

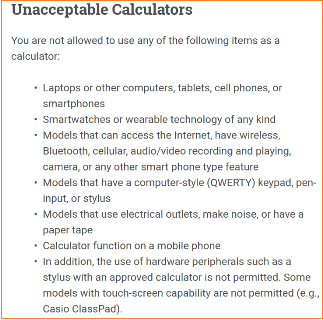

When HP developed the digital calculator in 1970, portability became the name of the game. From 1970 to 2008, several variants of the basic calculator appeared, including calculator watches, graphing calculators, scientific calculators and calculators capable of running computer algebra systems (CAS), so that solving problems, deriving solutions and simplifying equations can be done with the push of a button. Most notably, the TI-Nspire CX II, the HP Prime and the Casio fx-CG500 are all examples of calculators with these features. However, many calculator companies focus on supporting the educational testing industry rather than on creating a feature set geared to professionals who need to analyze data on the fly. A visit to the College Board website shows the list of permitted calculators by manufacturer. That list is long, but the list of features that make a calculator unacceptable is a lot simpler. The accompanying graphic is a screenshot of the details.

Source: here

With the advent of the buttonless, all-glass cellphone, users were liberated from devices confined to a single button configuration. The iPhone changed the game in many ways. A single device could now hold multiple calculators serving different needs. If you needed to graph functions, you could download the graphing calculator software of your choice. You are now limited only by the creativity of app developers. Even so, the hardware is not a dedicated solution for computation but merely an adaptation of telephone and mini-PC hardware. What if a device were designed from the ground up to meet the computing demands of today’s analytics professional? What would the hardware and software requirements be?

A Proposed Device

We can safely assume that the requirements I’m about to outline would require some major advances in hardware and networking. I’d also like to establish the premise that data professionals need hardware and software tools that are different from those required by students. Additionally, I propose to anchor our thinking in the notion that a handheld device on its own may not adequately power the large-scale computations required by today’s professional. With these ground rules established, I’d like to identify some of the tasks and roles that today’s data professionals perform regularly.

Tasks & Roles of a Data Analytics Professional

The following are tasks and roles that a data scientist performs regularly:

Mathematical Modeling — Modeling is the collection and analysis of data that results in a mathematical equation that best describes the observed phenomenon. Often, the modeling process involves four steps: creating the model, validating it, evaluating it and refining it. A device capable of observing real-world phenomena and constructing mathematical models that capture them would be extremely valuable. In practice, capturing photos or videos of phenomena would be useful but limited. Though image processing for a wide class of images would be valuable, the challenge would be to narrow the type of observable phenomena. For example, temporal texture recognition can distinguish between trees blowing in the wind and ripples of water in a pond. These phenomena are completely separate from the flow of water through a tube or the rate at which a hot air balloon’s radius changes as it ascends. Another challenge is to determine the form of the mathematical model. Should it be a graph, an equation or a description of the phenomenon? I would argue that all three representations provide insight into relationships that we take for granted.
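
As a rough illustration of the four modeling steps named above (create, validate, evaluate, refine), here is a minimal Python sketch. The measurements are made-up values for a balloon radius over time, and the straight-line form of the model is an assumption chosen only to keep the example short; a real phenomenon may need a different model.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def rmse(model, xs, ys):
    """Root-mean-square error of the line's predictions on (xs, ys)."""
    slope, intercept = model
    squared = [(slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)]
    return (sum(squared) / len(squared)) ** 0.5

# Hypothetical measurements: elapsed time (s) and balloon radius (m).
times = [0, 1, 2, 3, 4, 5, 6, 7]
radii = [2.0, 2.6, 2.9, 3.5, 4.1, 4.4, 5.0, 5.6]

# 1. Create: fit the model on the first six observations.
model = fit_line(times[:6], radii[:6])

# 2-3. Validate and evaluate: measure the error on the held-out observations.
holdout_error = rmse(model, times[6:], radii[6:])

# 4. Refine: refit using every observation now that the form looks reasonable.
refined = fit_line(times, radii)

print(f"initial slope={model[0]:.3f}, hold-out RMSE={holdout_error:.3f}")
print(f"refined slope={refined[0]:.3f}, intercept={refined[1]:.3f}")
```

The same loop applies whether the output is presented as an equation, a graph of the fitted line, or a sentence such as "the radius grows by about half a meter per second".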

Signal Processing / Time Series Analysis — The ability to create and analyze time series on the fly would be highly valued. A one-dimensional time series can already be generated easily from downloaded stock prices. Identifying trends in those prices, while detecting, both visually and analytically, false trend predictions caused by random walks, would provide an advantage. Similarly, facial recognition technology that lets one quickly take a photo and validate it against a database of people might provide an edge in social situations. We already have apps that combine GPS, an accelerometer and a camera to identify constellations in the night sky. What if we could observe the moon or a star through a network of synchronized devices, with data from a world-wide network of research telescopes enhancing the observation? This may require a more powerful lens on the local device or the leveraging of networked cameras. What if we used such a feature to observe events in real time? Multiple perspectives on a single story would change how news is covered and analyzed. For example, audio of a single gunshot recorded on multiple devices could be used to pinpoint the exact location of the source.
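
To make the random-walk concern concrete, here is a minimal sketch of one simple analytical check: compute the step-to-step changes of a series and ask whether their average (the drift) is distinguishable from zero. The simulated series, the seed and the helper names are all assumptions for illustration; real price data would need a more careful test.

```python
import math
import random
from statistics import mean, stdev

def step_t_statistic(series):
    """t-statistic for the hypothesis that the mean step (drift) is zero."""
    steps = [b - a for a, b in zip(series, series[1:])]
    return mean(steps) / (stdev(steps) / math.sqrt(len(steps)))

def simulate(n, drift, rng):
    """Cumulative sum of Gaussian steps; drift=0 gives a pure random walk."""
    level = 100.0
    series = [level]
    for _ in range(n):
        level += drift + rng.gauss(0.0, 1.0)
        series.append(level)
    return series

rng = random.Random(42)            # fixed seed so the run is repeatable
walk = simulate(500, 0.0, rng)     # no real trend, though a plot may suggest one
trend = simulate(500, 0.5, rng)    # genuine upward drift of 0.5 per step

t_walk = step_t_statistic(walk)
t_trend = step_t_statistic(trend)
print(f"random walk t={t_walk:.2f}, trending series t={t_trend:.2f}")
```

A statistic close to zero suggests that an apparent trend may be nothing more than accumulated noise, while a large value points to real drift.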

Machine Learning / Deep Learning — Supervised learning (regression, classification), clustering, dimensionality reduction, structured prediction, anomaly detection, artificial neural networks and reinforcement learning all require the ability to extract data from the real world and apply algorithms to determine relationships. In addition, having a device that can quickly identify relationships between variables with the ability to tap into regional, national or world-wide certified databases so that more complex studies may be performed on-the-fly, would be of tremendous value.

Statistics & Mathematics — The device should have the standard statistical functions found on most scientific calculators. In addition, it should support more advanced applications tied to statistical packages such as R, scikit-learn, mlpy and statsmodels. These packages provide various classification, regression and clustering algorithms, including support vector machines, logistic regression, random forests, gradient boosting and Naive Bayes. The device should also give access to numerical algorithms through the various libraries supporting C++, Python, Java and R.

Creating Data APIs — Application Programming Interfaces (APIs) are critical components when dealing with clients who need to access their data analyses via the web or who need a new application. Being able to develop APIs in a robust development environment such as Anaconda, R, SAS or one of many other data analysis platforms is critical. At the same time, be mindful of any regulations that may govern your APIs. For example, there are strict regulations regarding the handling of financial data that are often overlooked by new practitioners.

Data Simulations, Data Cleaning & Warehousing and Data Interrogation — Handling enormous datasets will require much more RAM than is available on current portable devices. Running simulations, cleaning, warehousing and querying will require a robust architecture. The device would need to handle the demands of distributed computing frameworks such as Hadoop MapReduce and Spark. As an alternative, graph databases could provide computational efficiencies that could be leveraged.
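
For readers unfamiliar with the MapReduce pattern mentioned above, here is a minimal single-machine sketch in plain Python. It does not use Hadoop or Spark; the function names and sample records are hypothetical and only illustrate the map, shuffle and reduce phases that those frameworks distribute across many machines.

```python
from collections import defaultdict
from functools import reduce

def map_phase(record):
    """Map: emit a (word, 1) pair for every word in one record."""
    return [(word.lower(), 1) for word in record.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single total."""
    return key, reduce(lambda a, b: a + b, values)

# Hypothetical records standing in for a much larger dataset.
records = ["clean the data", "query the data", "the model"]

mapped = [pair for record in records for pair in map_phase(record)]
counts = dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
print(counts)
```

Because each record is mapped independently and each key is reduced independently, both phases can be spread over many workers, which is why the pattern suits datasets that are too large for a single portable device.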

Operating System

The device will need to run multiple operating systems, depending on user requirements. A Unix-based operating system would be preferred. A multi-boot system giving access to iOS, Windows 10 and Unix applications would be ideal.

Hardware Requirements

The physical device should have an all-glass touchscreen, with a portion of the device dedicated to a host of sensors. The sensors should be modular, so that they can be chosen to suit the required application:

- Touchscreen Display with haptic feedback with the ability to toggle the feedback

- Proximity sensors — these sensors provide a quick way to toggle various modes of the device to prevent spurious input from a touchscreen.

- Cellular radio — although this device can serve as phone, the emphasis will be on collecting data in many forms.

- Multiple GPS antennae — passive with the option for connecting a powered amplifier to improve accuracy

- Microphones — High-quality microphones are not typically provided with smart devices. However, this device needs to have a high-quality lapel capacitor microphone as well as a portable condenser microphone that has a frontal cardioid pattern as well as two-capsule, two-directional patterns.

- Detachable Bluetooth accelerometers — to address device orientation and provide observational and experimental data depending on the application

- Infra-red emitter and sensor for detecting temperature fluctuations dynamically in real time. Seek Thermal and FLIR are two companies leading this effort. Having an optional sensor at the end of a cable for hard-to-reach places would also be of great value.

- Multi-camera array- wide angle, 35mm, 50mm, with attachment capability for 80mm and 200mm lenses.

- Bluetooth Sensor Array — An array of detachable Bluetooth sensors, each with a data rate of 96–128 Mbps and a range of at least 300 feet, with the ability to customize the sensor type and resolution.

- Bluetooth writing pen/pencil, keyboard and mouse

- Medical application suite of sensors to include: body position, body temperature, electrocardiography, spirometer, blood pressure, pulse oximeter, glucometer and electroencephalogram

A mobile network card capable of 100 Mbps upload and 200 Mbps download speeds would also be desirable, so that on-the-fly computations can be offloaded. This would in turn require a host computer with a graphics card capable of running machine learning algorithms.

RAM and Storage

The device should have enough memory to handle large-scale arrays: an octa-core processor with at least 32 GB of RAM and at least 2 TB of storage. Because of the processor demands and the concern for heat, the device need not take the form of a conventional cell phone or thin tablet; it can be slightly larger and thicker.

Mobile Software

The choice and performance of software applications will be critical to our proposed device. A robust and reliable mobile Integrated Development Environment (IDE) is needed. Commitment from, and cooperation between, the developers of MATLAB, RStudio, Spyder, MS Visual Studio, SPSS, SAS, MS Excel, Wolfram Alpha, MapReduce implementations and even data visualization applications such as Tableau will be needed. These applications will require hardware that can manage very large computational tasks while preserving the long battery life expected of a mobile device. In addition, macOS, iOS and Windows 10 need to evolve to meet the need for a robust mobile operating system geared toward the professional data scientist.

Conclusion

There is a need for a new class of device for professionals who want to perform demanding computational tasks on a mobile device. Testing companies have had too much influence on modern scientific and graphing calculators. This has led to a limited scope of functionality and a severe lack of innovation in design and applications. Mathematics is a subject that seems to be stuck in traditional computational tasks despite decades of technological advances. It’s time to make the shift to a device that brings us into a world of new possibilities. What are your thoughts? Would you be interested in such a device? What other hardware and software features do you see as vital to its success? Let’s work to make a new type of device a reality.

Originally posted here.