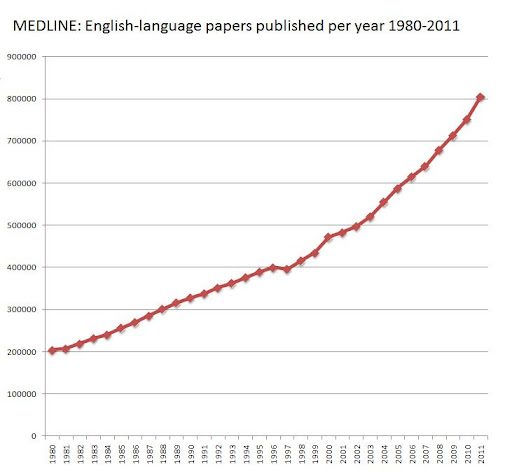

Remember the ASA statement on p-values from last year? The profession is getting together today and tomorrow (Oct. 11/12, 2017) for a “big think” on the problem. John Ioannidis, who has published widely on the reproducibility crisis in research, said this morning that “we are drowning in a sea of statistical significance” and “p-values have become a boring nuisance.” Too many researchers, under career pressure to produce publishable results, are chasing too much data with too much analysis in pursuit of significant results. The p-value has become a standard that can be gamed (“p-hacking”), opening the door to publication. P-hacking is quite common: the increasing availability of datasets, including big data, means the number of potentially “significant” relationships that can be hunted is increasing exponentially. At the conventional 0.05 threshold, roughly one in twenty tests of a true null hypothesis will come out “significant” by chance alone, so the more you look, the more you find. And researchers rarely report how much they looked at before finding something that rises to the level of (supposed) statistical significance. So more and more artefacts of random chance are getting passed off as something real.
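To make the “more looks, more false positives” point concrete, here is a minimal sketch (not from the original post) that simulates a researcher running many two-group comparisons on pure noise. It assumes normally distributed data with a known standard deviation of 1, so a simple two-sided z-test applies; the function names are hypothetical, chosen for illustration. It also computes the textbook family-wise error rate, `1 - (1 - alpha)^k`, for `k` independent tests when every null hypothesis is true.

```python
import math
import random


def z_test_p_value(a, b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming a known, common sigma."""
    n_a, n_b = len(a), len(b)
    diff = sum(a) / n_a - sum(b) / n_b
    se = sigma * math.sqrt(1.0 / n_a + 1.0 / n_b)
    z = diff / se
    # P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2.0))


def hunt_for_significance(n_comparisons, n_per_group=30, alpha=0.05, seed=None):
    """Run many comparisons on pure noise and return the p-values that clear alpha."""
    rng = random.Random(seed)
    hits = []
    for _ in range(n_comparisons):
        # Both groups come from the same N(0, 1) distribution: no real effect exists.
        a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        b = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        p = z_test_p_value(a, b)
        if p < alpha:
            hits.append(p)
    return hits


def prob_at_least_one_false_positive(k, alpha=0.05):
    """Family-wise error rate for k independent tests when every null is true."""
    return 1.0 - (1.0 - alpha) ** k


if __name__ == "__main__":
    hits = hunt_for_significance(n_comparisons=1000, seed=42)
    print(f"{len(hits)} of 1000 null comparisons were 'significant' at 0.05")
    for k in (1, 5, 20, 100):
        print(f"k={k:3d}: P(at least one false positive) = {prob_at_least_one_false_positive(k):.3f}")
```

With 1,000 comparisons and no real effect anywhere, roughly 50 come out “significant” at 0.05, and with just 20 independent looks the chance of at least one false positive is already about 64%. Real analyses are rarely independent tests, so the exact rate differs, but the direction of the problem is the same.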

Sometimes p-hacking is not deliberately deceptive; a classic example is the examination of multiple subgroups in an overall analysis. Subgroup effects can be of legitimate interest, but you also need to report how many subgroups you examined for possible effects, and apply an appropriate correction for multiplicity. However, standard multiple-comparison corrections are not necessarily flexible enough to account for all the looks you have taken at the data. So when you see a study reporting that its main effect was not statistically significant, but that statistically significant effects turned up in this or that subgroup, beware.
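As a hedged illustration of a multiplicity correction, the sketch below applies the Bonferroni and Holm adjustments to a set of subgroup p-values. The subgroup names and numbers are hypothetical, invented for this example. Both methods control the family-wise error rate, and Holm's step-down procedure is never less powerful than Bonferroni. Neither can correct for looks that were never counted, which is the limitation noted above.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply each by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, m * p) for p in p_values]


def holm(p_values):
    """Holm step-down adjusted p-values, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # rank is 0-based, so the smallest p-value is multiplied by m, the next by m - 1, ...
        adj = min(1.0, (m - rank) * p_values[i])
        # Adjusted p-values must not decrease as the raw p-values increase.
        running_max = max(running_max, adj)
        adjusted[i] = running_max
    return adjusted


# Hypothetical subgroup results from a trial whose overall effect was not significant.
subgroup_p = {
    "age<50": 0.03,
    "age>=50": 0.41,
    "male": 0.22,
    "female": 0.048,
    "diabetic": 0.012,
    "non-diabetic": 0.67,
    "smoker": 0.09,
    "non-smoker": 0.35,
}

raw = list(subgroup_p.values())
bonf = bonferroni(raw)
holm_adj = holm(raw)

for name, p, b, h in zip(subgroup_p, raw, bonf, holm_adj):
    print(f"{name:14s} raw={p:.3f}  bonferroni={b:.3f}  holm={h:.3f}")
```

In this made-up example three of the eight unadjusted p-values fall below 0.05, but after either adjustment none does: the smallest adjusted value is 0.096 for the “diabetic” subgroup. That is exactly the pattern to be wary of when a study's headline finding is a subgroup effect.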

This may sound heretical, but the basic problem is that there is too much research happening, and too many researchers. Bruce Alberts et al. alluded to this problem in their article discussing systemic flaws in biomedical research. As they put it,

“…most successful biomedical scientists train far more scientists than are needed to replace him- or herself; in the aggregate, the training pipeline produces more scientists than relevant positions in academia, government, and the private sector are capable of absorbing.”

The p-value is indeed a low bar to publication for the researcher who has lots of opportunities to hunt through available data for something interesting. However, merely replacing it with some different metric will not solve the supply-side problem of too many scientists chasing too few nuggets of real breakthroughs and innovations.