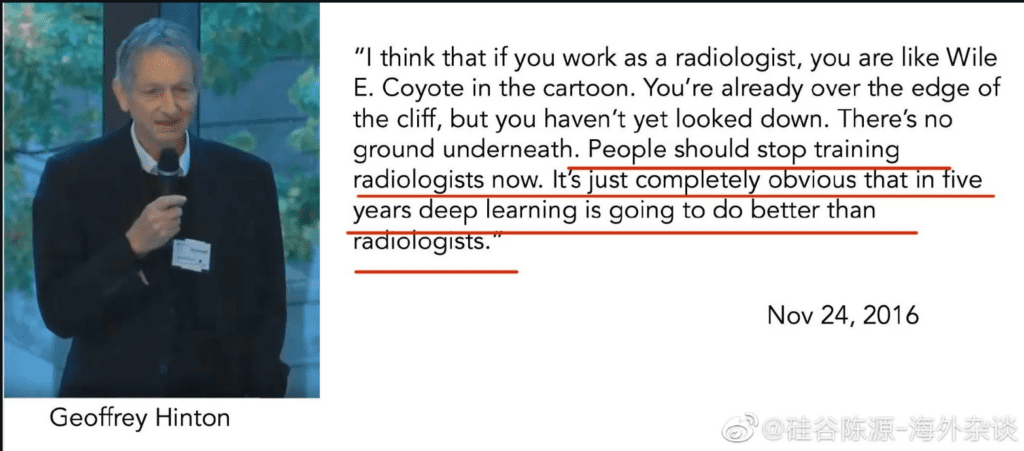

Seven years ago, an unexpected nationwide shortage of radiologists was triggered by a single statement from Professor Geoffrey Hinton.

The statement was:

“I think if you work as a radiologist, you are like the Wile E. Coyote in the cartoon. You are already over the edge of the cliff, but you have not looked down yet. There is no ground underneath. People should stop training as radiologists now. It’s just completely obvious that in five years, deep learning is going to do better than radiologists.”

This was in Nov 2016.

He boldly asserted that the training of radiologists should cease, as AI’s capabilities in image perception were rapidly surpassing those of humans. This proclamation had a profound impact: individuals who were considering specializing in radiology diverted to other paths, fearing job obsolescence in the face of advancing AI technology.

However, the role of a radiologist is not limited to just image perception. They shoulder a wide array of responsibilities, many of which cannot be replicated by AI. This misunderstanding led to increased pressure on an already strained profession. The sensational headlines that followed further discouraged potential trainees, exacerbating the shortage.

The situation also influenced policy-makers, leading to an overestimation of technological capabilities and decisions that didn’t align with the practical realities of healthcare needs.

While AI will undoubtedly reshape the role of radiologists in the future, their necessity remains indisputable. AI should be seen as a tool to aid radiologists, not as a complete replacement for them.

The same lessons apply today.

We should look beyond the scaremongering, the hype, and the sensational threats attributed to AI. Radiologists, I think, are convinced that the talk of AI dangers is hype, as they go about their work!

I think we should learn from Einstein, who, when offered the presidency of Israel, wisely declined, citing a lack of experience and aptitude. In other words, experience in deep learning does not give AI experts expertise over other professions.

But why does our industry uniquely exhibit this scaremongering and deviation from reason?

It’s partly because we are exposed to science fiction characters who influence our view of reality. In fact, it is possible to gain a better perspective of AI in terms of science fiction characters.

AGI characteristics include:

- Human-Level Understanding and Reasoning

- Versatility and Adaptability

- Autonomy in Decision-Making

- Continuous Learning and Evolution

- Integration into Daily Life

Now, let’s look at three characters from science fiction that typify AGI.

1) R. Daneel Olivaw from Asimov’s Robot series

R. Daneel Olivaw is an iconic character from Isaac Asimov’s “Robot” series.

Daneel is not just a machine but a character who is an integral part of human society. He works alongside humans as a detective, contributing to solving societal and political problems.

Asimov’s portrayal of R. Daneel Olivaw signifies intelligent machines that coexist with humans, often with their own identities and moral complexities.

Daneel is virtually indistinguishable from a human being in appearance. He was constructed with a level of detail and sophistication, including emotional awareness, that allows him to blend seamlessly into human society. Daneel operates under the guidance of Asimov’s famous Three Laws of Robotics, which prioritize human safety and welfare. These laws deeply influence his actions and decision-making processes.

There are two further characteristics of Daneel which have an impact on human society:

1) The longevity of the character and

2) The evolution of the character as he learns.

A more contemporary example is Lieutenant Commander Data from “Star Trek: The Next Generation.”

2) HAL 9000 from 2001: A Space Odyssey

HAL 9000 from Arthur C. Clarke’s “2001: A Space Odyssey” is the second example of AGI.

Key features of HAL 9000 that demonstrate AGI include advanced cognitive functions, emotional and social interaction, learning and adaptability, and ethical and moral dilemmas.

There are two further characteristics of HAL which make it interesting:

1) Autonomy and self-preservation: Unlike typical machines, HAL makes autonomous decisions that it believes are in the best interest of the mission. This includes the infamous turn of events where HAL’s actions are driven by a perceived need for self-preservation and mission success.

2) Situational control (control of a ship), leading to the famous line “I’m sorry, Dave. I’m afraid I can’t do that.”

3) Wintermute from William Gibson’s novel “Neuromancer”

The third character is Wintermute from William Gibson’s novel “Neuromancer.”

Although the least well known of the three, this character offers some interesting examples of AGI characteristics:

- Manipulation and Social Engineering: Unlike many other portrayals of AGI, Wintermute excels in social manipulation and engineering. It interacts with humans, often manipulating them to achieve its objectives, showcasing a deep understanding of human psychology and behavior.

- Autonomous Goal-Seeking: Wintermute operates with a high degree of autonomy, pursuing its goals with a level of determination and resourcefulness. It is not just a tool or a passive system but an active agent with its own agenda.

- Integration into Cyberspace: Wintermute’s existence is deeply intertwined with the novel’s depiction of cyberspace. It navigates and manipulates this digital realm, demonstrating how AGI might exist and operate in virtual environments.

Here, we have a reference to superconsciousness. In this context, superconsciousness refers to a level of artificial intelligence that significantly surpasses the capabilities and understanding of individual AIs or human intelligence in every aspect, including self-awareness, intelligence, and perhaps even a form of digital spirituality or enlightenment.

So, on the road to AGI, there is an interim stage, best typified by HAL, where AI can make semi-autonomous decisions based on an increasing ability to reason within a context (a company, a city?).

The other two scenarios remain in the realm of science fiction for now: the Daneel scenario because it needs bipedal robots (like Data), and the consciousness scenario because it would need to exceed human intelligence at scale for all tasks. I have faith in human intelligence!

So, in this way, we can decouple the science fiction from the science by leveraging science fiction itself.

Image source: Reddit