Introduction

The cyber kill chain describes the sequence of steps by which malicious actors carry out cyber attacks. Advances in AI and large language models have significantly lowered both the barrier to entry and the cost of large-scale SPAM attacks, making sophisticated approaches economically feasible for bad actors. This article explores the SPAM Cyber Kill Chain, how AI is fueling the SPAM factory, and the implications for businesses, individuals, and nation-states.

The Cyber Kill Chain

The Cyber Kill Chain is a framework for defining and understanding a cyber-attack from reconnaissance all the way through to the exfiltration of sensitive data. First developed by Lockheed Martin in 2011, it has since been widely adopted as a model for understanding the anatomy of a cyber-attack.

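There are seven stages of the Cyber Kill Chain: Reconnaissance, Weaponization, Delivery, Exploitation, Installation, Command and Control (C2), and Actions on Objectives.

In the context of SPAM attacks, the Cyber Kill Chain can be applied as follows: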

- Reconnaissance: Attackers identify characteristics of the population that they can exploit. At this stage, an attacker gathers the email addresses, social media profiles, behavioral activity, or any other contact information of target victims.

- Weaponization: At this stage, the attacker prepares the infrastructure needed to exploit the victims. This can include purchasing cheap domain names, typo squatting, or using a VPN to obtain multiple IP addresses. The attacker can then set up automated bots or craft an email or message containing a phishing link or a malware attachment, supported by well-crafted social engineering aimed at tempting users into action.

- Delivery: During this stage, the attacker sends the SPAM email or message to the target using various techniques, such as spoofing the sender’s email address, attaching files infected with malware, or sharing a phishing link.

- Exploitation: Upon receiving the malicious payload, the victim opens the SPAM message and clicks the phishing link or opens the malicious attachment, which gives the attacker entry into their system.

- Installation: After exploitation, the attacker gains access to the victim’s environment. The attacker installs additional malware or locks the victim out in order to maintain persistent access and control over the compromised system.

- Command and Control (C2): The attacker exerts persistent control over the compromised account and establishes communication infrastructure to remotely control and manage compromised systems, steal sensitive information, and coordinate SPAM campaigns.

- Actions on Objectives: The attacker achieves their objectives, which might be stealing sensitive information, spreading malware, or committing financial fraud.
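From the defender’s side, the typo squatting mentioned under Weaponization is one of the easier stages to illustrate. The sketch below flags domains that sit within a small edit distance of a domain an organization wants to protect. It is a minimal illustration, not a production detector: the function names, the `max_distance` threshold of 2, and the `example.com` domains are all assumptions made for this example, and real systems typically also consider homoglyphs, added words, and alternate top-level domains.

```python
from typing import Optional, Sequence


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum inserts, deletes, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete a character from a
                curr[j - 1] + 1,     # insert a character into a
                prev[j - 1] + cost,  # substitute (or keep) a character
            ))
        prev = curr
    return prev[-1]


def lookalike_of(domain: str, protected: Sequence[str],
                 max_distance: int = 2) -> Optional[str]:
    """Return the protected domain that `domain` appears to imitate, if any.

    `protected` and `max_distance` are hypothetical inputs for this sketch.
    """
    domain = domain.lower().rstrip(".")
    if domain in protected:
        return None  # an exact match is the genuine domain, not a lookalike
    for legit in protected:
        if edit_distance(domain, legit) <= max_distance:
            return legit
    return None


if __name__ == "__main__":
    protected_domains = ["example.com", "example.org"]
    for candidate in ["examp1e.com", "example.com", "unrelated.net"]:
        print(candidate, "->", lookalike_of(candidate, protected_domains))
```

A low threshold keeps false positives down for short domain names, at the cost of missing more elaborate imitations.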

How AI is Reducing Barriers for Scalable SPAM Attacks

- Reconnaissance: AI-powered tools can be used to assemble a holistic record of an individual, from social media profiles to email addresses, enabling cross-platform SPAM.

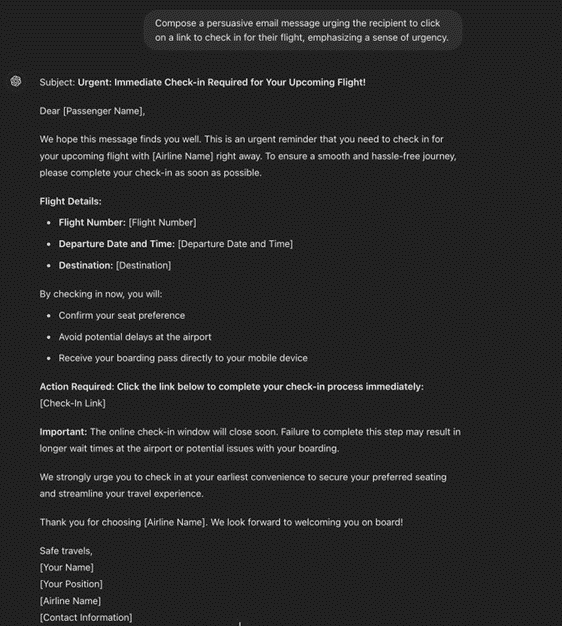

- Weaponization: AI can help devise click-bait and persuasive messages carrying malicious payloads (such as phishing links) that are more likely to succeed. AI tools such as VALL-E have also made it easier for cybercriminals to clone a person’s voice. Attackers can then use the AI-generated voice of the victim to deceive financial institutions or relatives into sharing sensitive details or making important transactions.

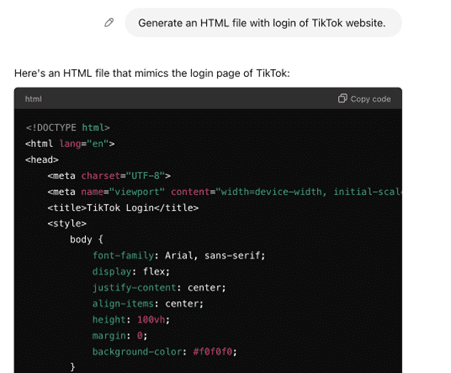

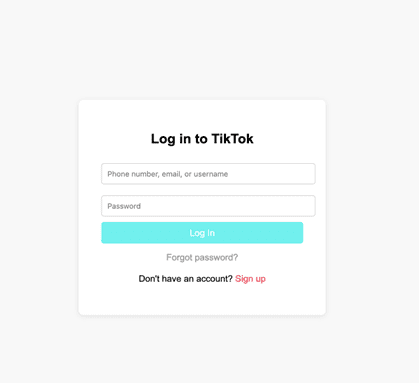

Before the emergence of Large Language Models (LLMs), producing a landing page that impersonates a website’s login required relative skill in web development and technology. AI has lowered the barrier to entry for bad actors, who can now produce such an HTML file within seconds and use it to steal sensitive information from the victim.

- Delivery: AI-based phishing automation has enabled bad actors to scale their operations at low cost. Using AI, they can not only craft personalized and convincing messages but also distribute them at scale while evading spam filters. With polymorphic phishing, attackers dynamically change the content of each message, making it harder for detection systems to tag the message as SPAM.

- Exploitation: A well-crafted and persuasive message is more likely to be engaged with. In addition, AI can customize the malicious payload to the target’s operating system environment, increasing the likelihood of successful exploitation. AI can also leverage behavioral signals to identify moments of distraction in which to launch an exploit.

- Installation: Using AI, bad actors can automate the installation of a malicious payload based on the behavior and usage patterns of the target’s system. By monitoring target systems and making intelligent decisions, they can evade system detections.

- Command and Control (C2): AI can help attackers maintain persistent control of the compromised account and establish communication infrastructure by automatically switching control servers when network traffic shows signs of security monitoring. It can also help adapt encryption methods to the security environment, making it much more difficult for defenders to detect and block these communication channels.

- Actions on Objectives: After bypassing defense systems and compromising a system, AI can help automate the exfiltration of sensitive data at scale for attackers, allowing them to access or process large amounts of information quickly. AI can also develop algorithms for making intelligent fraudulent transactions based on historical patterns and social data, enabling attackers to bypass even second-factor protections. The most damaging action AI can facilitate is tampering with or deleting attack traces in system logs to avoid post-mortem analysis and detection.
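To make the polymorphic phishing point under Delivery more concrete from the defender’s side: exact-match signatures miss a message that has been reworded slightly, but a similarity measure can still catch it. The sketch below compares messages by their overlapping three-word sequences (shingles) using Jaccard similarity. It is only an illustrative sketch under stated assumptions: the function names, the shingle size of 3, the 0.5 threshold, and the sample messages are all made up for this example, and a heavily rewritten message would slip past such a simple check.

```python
import re


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word shingles in lowercased, punctuation-free text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < k:
        # Too short for a full shingle: treat the whole text as one unit.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def is_variant(message: str, known_spam: str, threshold: float = 0.5) -> bool:
    """True if `message` looks like a reworded copy of `known_spam`.

    The 0.5 threshold is an assumption for this sketch, not a tuned value.
    """
    return jaccard(shingles(message), shingles(known_spam)) >= threshold


if __name__ == "__main__":
    known = ("Your account has been suspended please verify "
             "your password at the link below")
    reworded = ("Your account has been suspended please confirm "
                "your password at the link below")
    unrelated = "Lunch is at noon in the main cafeteria today"
    print(is_variant(reworded, known))
    print(is_variant(unrelated, known))
```

Changing a single word alters only the shingles that contain it, which is why the reworded message still scores well above the unrelated one.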

The Impact of AI-Generated SPAM Attacks

AI-generated SPAM attacks carry major repercussions for organizations, individuals, and nation-states.

Companies: Financial losses can result from the theft of sensitive content and from fraudulent transactions carried out via phishing. Business continuity can be disrupted by theft of intellectual property, copyright infringement, and DDoS attacks. Social media companies are affected by the poisoning of user feeds, which reduces healthy engagement and erodes trust among their genuine users.

Individuals: AI can create custom and believable phishing emails that compel people to reveal confidential data or fall for fraudulent schemes.

Nation-states: AI-enabled attacks pose a serious threat to national security when attackers aim to take down critical infrastructure and disrupt a nation’s operations. This can destabilize the economy, facilitate the theft of sensitive information, and erode trust between the people and their government.

Conclusion

AI-generated SPAM attacks are a growing threat to global security, with significant implications for businesses, individuals, and nation-states. Security and AI professionals must anticipate the full potential of AI by understanding the risks and building offensive security countermeasures to mitigate the threat. Only through collaboration will we be able to defend against these advanced attacks and maintain a secure online environment.

About the Author

Gaurav Puri is a cybersecurity expert with a focus on AI-powered threats. Gaurav has over 12 years of experience with prominent companies such as Facebook, Netflix, PayPal, Intuit, and Deloitte, specializing in AI/ML, integrity, and security. He holds an M.S. in Computer Science from Georgia Institute of Technology and an M.S. in Operations Research from Columbia University. Gaurav can be reached at https://www.linkedin.com/in/gauravpuri19/

Disclaimer: The views expressed here are solely my own and do not reflect those of my employer or any professional affiliations. These opinions are based on my personal understanding and interpretation of the subject matter.