The AI Edge Engineer: Extending the power of CI/CD to Edge devices using containers

Background

At the Artificial Intelligence – Cloud and Edge implementations course, I have been exploring the idea of extending CI/CD to Edge devices using containers. In this post, I present these ideas under the framework of the ‘AI Edge Engineer’. Note that the views presented here are personal. I welcome your comments if you are exploring similar ideas, especially if you are in academia or research. We are happy to share insights and code as we develop them. You can connect with me on LinkedIn.

AI Edge Engineer Use cases – beyond vision at the Edge

Most AI Edge applications today are based on Computer Vision and Voice. Typical examples are:

- What is in the image or video?

- Give me directions to the nearest local branch.

- Convert spoken audio to text.

- Natural Language Processing, etc.

These are all cognitive applications, often running on the edge, that do not need a connection to the Cloud as long as the trained model is deployed to the edge devices.

But we are now seeing other examples of AI at the Edge: Edge AI is going beyond voice and vision.

As applications for AI and Edge computing mature, we need more mature development models that unify the Cloud and the Edge devices.

In this post, I discuss development models for AI Edge Engineering that unify the Cloud and the Edge by deploying containers to Edge devices.

I call this role ‘AI Edge Engineer’.

The primary focus of the AI Edge Engineer role is to unify development between the Cloud and the Edge, using containers to deploy and maintain code on Edge devices. Note that in this case the device lifecycle is still the same, i.e. plan, provision, monitor and retire. The device also passes through other states such as reboot, factory reset, configuration, firmware update and status reporting.

The Role of the AI Edge Engineer

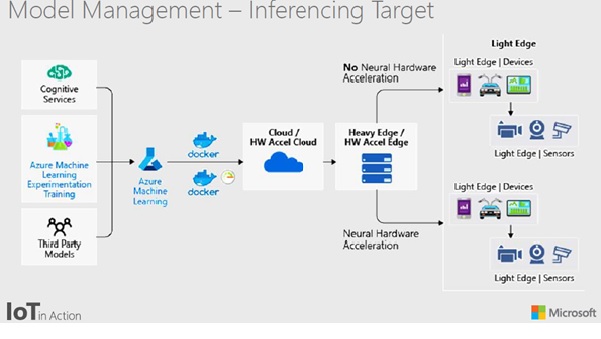

The AI Edge Engineer analyses, designs and implements cognitive AI solutions that span the Cloud and Edge devices. From a developer standpoint, AI Edge deployment implies the ability to move cloud and custom workloads to the edge, securely and seamlessly. It involves running advanced analytics on Edge devices, where the model is trained in the cloud and deployed on the edge devices. The AI Edge deployment is configured, updated and monitored from the cloud, with a secure solution deployed end-to-end from chipset to cloud.

Providing offline support is a key part of AI Edge Engineering. In practice, this means that once the Edge device has synced with the IoT Hub (cloud), it can run independently and indefinitely, with messages queued for deferred delivery to the cloud.
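To make the idea of deferred delivery concrete, here is a minimal store-and-forward sketch in Python. It is only an illustration of the queueing behaviour, not how the IoT Edge runtime implements it; the names `StoreAndForwardBuffer` and `FakeCloudLink` are hypothetical and stand in for a real cloud connection.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class StoreAndForwardBuffer:
    """Queue messages locally and deliver them in order when the cloud link is up."""

    def __init__(self, send: Callable[[Dict], bool], max_size: int = 1000) -> None:
        # `send` returns True only when the cloud accepted the message.
        self._send = send
        # A bounded deque silently drops the OLDEST message once it is full.
        self._queue: Deque[Dict] = deque(maxlen=max_size)

    def publish(self, message: Dict) -> None:
        """Enqueue first, then try to drain, so message order is preserved."""
        self._queue.append(message)
        self.flush()

    def flush(self) -> int:
        """Send queued messages until the queue is empty or a send fails."""
        delivered = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # still offline: keep the message for a later attempt
            self._queue.popleft()
            delivered += 1
        return delivered

    def pending(self) -> int:
        return len(self._queue)


class FakeCloudLink:
    """Hypothetical stand-in for a cloud connection that can go offline."""

    def __init__(self) -> None:
        self.online = False
        self.received: List[Dict] = []

    def send(self, message: Dict) -> bool:
        if not self.online:
            return False
        self.received.append(message)
        return True
```

While `FakeCloudLink.online` is `False`, calls to `publish` only grow the local queue; setting it to `True` and calling `flush` delivers the backlog in order. A real device would also persist the queue to disk and expire old messages, which this sketch leaves out.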

Implementing the AI Edge Engineer architecture

Implementing the AI Edge ecosystem is an engineering-led problem, i.e. it extends beyond the data science aspect (writing algorithms) because it requires an understanding of DevOps. We briefly describe the architecture of such a solution based on Azure (source: Azure documentation).

Terminology

- IoT Hub: A managed service, hosted in the cloud, that acts as a central message hub for bi-directional communication between your IoT application and the devices it manages.

- IoT Edge is an IoT service that builds on top of IoT Hub. This service is meant for customers who want to analyze data on devices, or “at the edge,” instead of in the cloud. By moving parts of your workload to the edge, your devices can spend less time sending messages to the cloud and react more quickly to events. An IoT Edge device needs to have the IoT Edge runtime installed on it.

- IoT Edge runtime: The IoT Edge runtime includes everything that Microsoft distributes to be installed on an IoT Edge device: the IoT Edge agent, the IoT Edge hub, and the IoT Edge security daemon. It is the collection of programs that turns a device into an IoT Edge device. Collectively, the runtime components enable IoT Edge devices to receive code to run at the edge and to communicate the results. The responsibilities of the runtime fall into two categories, communication and module management, and each is handled by one component: the IoT Edge hub is responsible for communication, while the IoT Edge agent deploys and monitors the modules. Both the IoT Edge hub and the IoT Edge agent are modules, just like any other module running on an IoT Edge device.

- IoT Edge hub: The part of the IoT Edge runtime responsible for module to module communications, upstream (toward IoT Hub) and downstream (away from IoT Hub) communications.

- IoT Edge agent: The part of the IoT Edge runtime responsible for instantiating modules, ensuring that they continue to run, and reporting the status of the modules back to IoT Hub.

- IoT Edge module: An IoT Edge module is a Docker container that you can deploy to IoT Edge devices. It performs a specific task, such as ingesting a message from a device, transforming a message, or sending a message to an IoT hub.

- IoT Edge module image: The Docker image that is used by the IoT Edge runtime to instantiate module instances.

- IoT Edge deployment manifest: A JSON document containing the information to be copied into the module twin(s) of one or more IoT Edge devices in order to deploy a set of modules, routes, and associated module desired properties.
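To show how these terms fit together, the following Python sketch builds an abbreviated deployment manifest as a dictionary and serialises it to JSON. The overall nesting (`modulesContent`, `$edgeAgent`, `$edgeHub`, `properties.desired`) follows the general shape of an IoT Edge deployment manifest as I understand it, but the helper name `build_manifest`, the image version tags and the time-to-live value are illustrative assumptions; check the Azure documentation for the authoritative schema.

```python
import json


def build_manifest(module_name: str, image: str) -> dict:
    """Return an abbreviated IoT Edge deployment manifest for one custom module."""
    return {
        "modulesContent": {
            # Desired properties of the IoT Edge agent: which modules to run.
            "$edgeAgent": {
                "properties.desired": {
                    "schemaVersion": "1.1",
                    "runtime": {"type": "docker", "settings": {}},
                    "systemModules": {
                        "edgeAgent": {
                            "type": "docker",
                            # Version tags below are illustrative assumptions.
                            "settings": {"image": "mcr.microsoft.com/azureiotedge-agent:1.4"},
                        },
                        "edgeHub": {
                            "type": "docker",
                            "status": "running",
                            "restartPolicy": "always",
                            "settings": {"image": "mcr.microsoft.com/azureiotedge-hub:1.4"},
                        },
                    },
                    "modules": {
                        module_name: {
                            "version": "1.0",
                            "type": "docker",
                            "status": "running",
                            "restartPolicy": "always",
                            # createOptions is a JSON string, not a nested object.
                            "settings": {"image": image, "createOptions": "{}"},
                        }
                    },
                }
            },
            # Desired properties of the IoT Edge hub: how messages are routed.
            "$edgeHub": {
                "properties.desired": {
                    "schemaVersion": "1.1",
                    "routes": {"upstream": "FROM /messages/* INTO $upstream"},
                    "storeAndForwardConfiguration": {"timeToLiveSecs": 7200},
                }
            },
        }
    }


manifest = build_manifest(
    "SimulatedTemperatureSensor",
    "mcr.microsoft.com/azureiotedge-simulated-temperature-sensor:1.0",
)
print(json.dumps(manifest, indent=2))
```

The single route sends every module message upstream to IoT Hub, and the store-and-forward setting controls how long the IoT Edge hub keeps undelivered messages while the device is offline.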

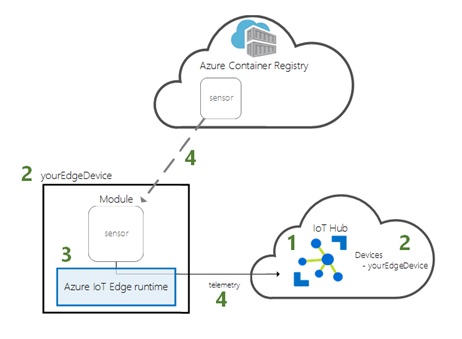

Deployment of code to Edge devices

Currently, most IoT applications use a simple architecture in which devices connect to the Cloud directly, i.e. they do not undertake edge computing. Increasingly, IoT applications will need to process data on edge devices, which in turn requires us to run and maintain code on those devices. Modules are run on devices using the container mechanism. Hence, the AI Edge Engineer role involves running modules on Edge devices through containers. We describe this mechanism below for a simple, pre-developed telemetry application.

Source: Azure quickstart, “Deploy your first IoT Edge module to a virtual Linux device”

The steps are:

- Create an IoT hub

- Register an IoT Edge device

- Configure your IoT Edge device

- Deploy a module
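The steps above can be sketched as a sequence of Azure CLI calls. The Python snippet below only assembles and prints the commands rather than running them, so it is safe to execute without an Azure subscription. It assumes the Azure CLI with the `azure-iot` extension; the function name `quickstart_commands` and the hub and device names are hypothetical placeholders. Step 3 (configuring the device) happens on the device itself using the connection string retrieved here, so it appears only as a lookup.

```python
import shlex
from typing import List


def quickstart_commands(
    resource_group: str, hub_name: str, device_id: str, manifest_path: str
) -> List[List[str]]:
    """Return the Azure CLI commands for the four quickstart steps, in order."""
    return [
        # 1. Create an IoT hub (F1 is the free tier).
        ["az", "iot", "hub", "create", "--resource-group", resource_group,
         "--name", hub_name, "--sku", "F1", "--partition-count", "2"],
        # 2. Register an IoT Edge device identity in the hub.
        ["az", "iot", "hub", "device-identity", "create",
         "--hub-name", hub_name, "--device-id", device_id, "--edge-enabled"],
        # 3. Fetch the connection string used to configure the device's runtime.
        ["az", "iot", "hub", "device-identity", "connection-string", "show",
         "--hub-name", hub_name, "--device-id", device_id],
        # 4. Deploy modules by applying a deployment manifest to the device.
        ["az", "iot", "edge", "set-modules",
         "--hub-name", hub_name, "--device-id", device_id, "--content", manifest_path],
    ]


for command in quickstart_commands(
    "IoTEdgeResources", "my-example-hub", "myEdgeDevice", "deployment.json"
):
    print(shlex.join(command))
```

Keeping each command as a list of arguments means it can later be passed to `subprocess.run` without shell quoting problems, should you want to automate the steps.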

You could expand this idea to:

- Train and deploy a module on an Edge device

- Continuous integration and continuous deployment to IoT Edge

The expanding role of containers with CI/CD

It is now possible to deploy a CI/CD-ready IoT Edge project. A CI/CD pipeline can help developers deliver value faster through rapid updates, i.e. it extends the power of CI/CD to Edge devices.
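One small but central step in such a pipeline is pointing the deployment at the container image that the build has just produced. The sketch below shows that step in Python, assuming the manifest nesting used by IoT Edge (`modulesContent` / `$edgeAgent` / `properties.desired` / `modules`). The function name `with_module_image`, the module name `telemetry` and the registry `myregistry.azurecr.io` are hypothetical.

```python
import copy


def with_module_image(manifest: dict, module: str, image: str) -> dict:
    """Return a copy of the manifest in which `module` runs the given image."""
    updated = copy.deepcopy(manifest)  # never mutate the checked-in template
    modules = updated["modulesContent"]["$edgeAgent"]["properties.desired"]["modules"]
    if module not in modules:
        raise KeyError(f"module {module!r} is not defined in the manifest")
    modules[module]["settings"]["image"] = image
    return updated


# Minimal template with only the fields this step touches (hypothetical values).
template = {
    "modulesContent": {
        "$edgeAgent": {
            "properties.desired": {
                "modules": {
                    "telemetry": {
                        "settings": {"image": "myregistry.azurecr.io/telemetry:1.0.0"}
                    }
                }
            }
        }
    }
}

# A pipeline would typically derive the tag from the build number or commit.
release = with_module_image(template, "telemetry", "myregistry.azurecr.io/telemetry:1.0.41")
```

Because the function returns a new dictionary, the template stays unchanged and each pipeline run produces its own manifest, which makes rollbacks a matter of redeploying an earlier one.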

This helps developers create use cases such as:

- Rapid software updates

- A/B testing for devices

- Security, e.g. CI/CD on MCU devices

- Parallelized builds

- Containers by vertical

- Containers for target devices (specific hardware)

- Smoke testing for IoT devices

Source: Azure/Microsoft
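As an illustration of the A/B testing use case, devices need to be split into stable cohorts so that the same device always receives the same variant. The Python sketch below does this by hashing the device ID. It is a generic technique rather than an Azure feature, and the function name `cohort` and the `salt` value are hypothetical.

```python
import hashlib


def cohort(device_id: str, percent_b: int, salt: str = "experiment-1") -> str:
    """Deterministically assign a device to cohort 'A' or 'B'.

    Roughly `percent_b` percent of devices land in 'B'. Changing the salt
    reshuffles the assignment for a new experiment.
    """
    digest = hashlib.sha256(f"{salt}:{device_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable value in 0..99
    return "B" if bucket < percent_b else "A"


devices = [f"device-{n}" for n in range(10)]
assignments = {device: cohort(device, percent_b=20) for device in devices}
```

The resulting label could then be recorded against each device (for example as a tag) and used to decide which devices receive the new module version first, with the remainder following once smoke tests pass.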

Implications for Telecoms

While the deployment of containers on Edge devices may seem a nascent application, I believe that soon, as intelligence starts to be embedded into devices, many large-scale IoT / Edge applications will use this architecture (for example, autonomous vehicles and drones). Today, only a few applications deploy AI to complex edge devices (e.g. Ocado), but the future may be different. This is broadly true of Telecoms in a post-5G world, as can be seen from the Azure and AT&T partnership and the Azure and Reliance Jio partnership.
