This article was written by James Le.

Neural networks are one type of model for machine learning; they have been around for at least 50 years. The fundamental unit of a neural network is a node, which is loosely based on the biological neuron in the mammalian brain. The connections between neurons are also modeled on biological brains, as is the way these connections develop over time (with “training”).

In the mid-1980s and early 1990s, many important architectural advancements were made in neural networks. However, the amount of time and data needed to get good results slowed adoption, and interest cooled. In the early 2000s, computational power expanded exponentially and the industry saw a “Cambrian explosion” of computational techniques that had not been feasible before. Deep learning emerged from that decade’s explosive computational growth as a serious contender in the field, winning many important machine learning competitions. The interest has not cooled as of 2017; today, we see deep learning mentioned in every corner of machine learning.

Most recently, I have started reading academic papers on the subject. From my research, here are several publications that have been hugely influential in the development of the field:

- NYU’s Gradient-Based Learning Applied to Document Recognition (1998), which introduces the Convolutional Neural Network to the machine learning world.

- Toronto’s Deep Boltzmann Machines (2009), which presents a new learning algorithm for Boltzmann machines that contain many layers of hidden variables.

- Stanford & Google’s Building High-Level Features Using Large-Scale Unsupervised Learning (2012), which addresses the problem of building high-level, class-specific feature detectors from only unlabeled data.

- Berkeley’s DeCAF: A Deep Convolutional Activation Feature for Generic Visual … (2013), which releases DeCAF, an open-source implementation of deep convolutional activation features, along with all associated network parameters, so that vision researchers can experiment with deep representations across a range of visual concept learning paradigms.

- DeepMind’s Playing Atari with Deep Reinforcement Learning (2013), which presents the first deep learning model to successfully learn control policies directly from high-dimensional sensory input using reinforcement learning.

The field of AI is broad and has been around for a long time. Deep learning is a subset of the field of machine learning, which is a subfield of AI. The facets that differentiate deep learning networks in general from “canonical” feed-forward multilayer networks are as follows:

- More neurons than previous networks

- More complex ways of connecting layers

- “Cambrian explosion” of computing power to train

- Automatic feature extraction

When I say “more neurons”, I mean that the neuron count has risen over the years to express more complex models. Layers have also evolved: from every layer being fully connected in multilayer networks, to locally connected patches of neurons between layers in Convolutional Neural Networks, to recurrent connections back to the same neuron in Recurrent Neural Networks (in addition to the connections from the previous layer).
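To make the difference between these connection patterns concrete, here is a rough sketch that counts trainable parameters for each kind of layer. The function names and the layer sizes are made up for illustration, and the convolutional count assumes a 1-D layer with a single input channel whose kernels are shared across positions.

```python
def dense_param_count(n_in, n_out):
    """Weights plus biases when every input feeds every output unit."""
    return n_in * n_out + n_out


def conv1d_param_count(kernel_size, n_filters):
    """Weights plus biases when each small kernel is reused at every position.

    Assumes a single input channel (an illustrative simplification).
    """
    return kernel_size * n_filters + n_filters


def recurrent_param_count(n_in, n_hidden):
    """Input weights, hidden-to-hidden (recurrent) weights, and biases."""
    return n_in * n_hidden + n_hidden * n_hidden + n_hidden


# Hypothetical sizes, chosen only to show the orders of magnitude.
print(dense_param_count(1000, 1000))      # 1001000
print(conv1d_param_count(5, 16))          # 96
print(recurrent_param_count(1000, 1000))  # 2001000
```

The convolutional count does not depend on the input length at all, which is one reason local connectivity scales to large inputs such as images.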

Deep learning then can be defined as neural networks with a large number of parameters and layers in one of four fundamental network architectures:

- Unsupervised Pre-trained Networks

- Convolutional Neural Networks

- Recurrent Neural Networks

- Recursive Neural Networks

In this post, I am mainly interested in the last three architectures. A Convolutional Neural Network (CNN) is essentially a standard neural network that has been extended across space using shared weights. It is designed for image recognition: its convolutions respond to local patterns, such as the edges of an object in the image. A Recurrent Neural Network (RNN) is essentially a standard neural network that has been extended across time, with edges that feed into the next time step instead of into the next layer in the same time step. It is designed to recognize sequences, for example a speech signal or a text, and the cycles inside it give the network a short-term memory. A Recursive Neural Network is more like a hierarchical network: the input sequence has no real time aspect, but it has to be processed hierarchically, in a tree fashion. The 10 methods below can be applied to all of these architectures.
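The three ideas above can be sketched in a few lines of plain Python. This is a toy illustration, not how any particular library implements them: the function names, the scalar weights, and the use of `tanh` are all assumptions made for the example, and real networks use vectors and matrices where this uses single numbers.

```python
import math


def conv1d(signal, kernel):
    """Slide one shared kernel across the signal ("valid" positions only).

    As in most deep learning libraries, the kernel is not flipped,
    so strictly speaking this is a cross-correlation.
    """
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def rnn_forward(inputs, w_in, w_rec, h0=0.0):
    """Scalar RNN: the hidden state of each step feeds the next time step."""
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


def recursive_encode(tree, w_left, w_right):
    """Combine a binary tree bottom-up; leaves are numbers, nodes are pairs."""
    if not isinstance(tree, tuple):
        return float(tree)
    left, right = tree
    return math.tanh(
        w_left * recursive_encode(left, w_left, w_right)
        + w_right * recursive_encode(right, w_left, w_right)
    )


# Shared weights across space: a difference kernel fires at the "edge".
print(conv1d([0, 0, 0, 1, 1, 1], [-1, 1]))  # [0, 0, 1, 0, 0]

# Shared weights across time: later states stay nonzero after the input stops.
print(rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5))

# Hierarchical processing: the same weights are applied at every tree node.
print(recursive_encode(((1.0, 2.0), 3.0), w_left=0.5, w_right=0.5))
```

In all three cases the same small set of weights is reused, across positions, across time steps, or across tree nodes, which is what distinguishes these architectures from a plain fully connected network.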

10 Deep Learning Methods

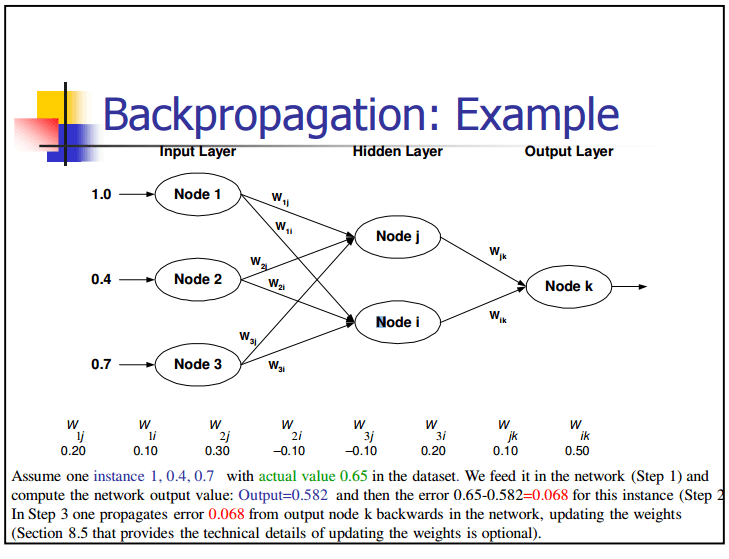

- Back-Propagation

- Stochastic Gradient Descent

- Learning Rate Decay

- Dropout

- Max Pooling

- Batch Normalization

- Long Short-Term Memory

- Skip-gram

- Continuous Bag Of Words

- Transfer Learning
