Generative AI is suddenly everywhere. Because of this, the future of AI looks very bright indeed. In the near future, generative AI will have many opportunities to affect life and business, in both positive and negative ways. Because the costs of negative impacts on people can easily far outweigh the benefits of positive ones, the future of AI looks very dark indeed.

Wait! What did I just say? If you are confused by the contradictory “bright and dark” statements in the previous paragraph, please realize that was my intention. It was a little trick of logic on my part, meant to demonstrate how a sequence of statements that starts with facts and continues with inferences and deductions logically argued from those facts can easily lead to a conclusion that conflicts with the initial premise of the argument.

Contradictory statements become more likely in long text, especially when the statements are far apart. This was seen when the GPT-3 autoregressive language model was first released. GPT-3 is a third-generation Generative Pre-trained Transformer model (hence the name). Articles written by GPT-3 occasionally showed this flaw, contradicting themselves after several paragraphs of AI-generated text.

Generative AI models produce outputs (text, images, videos, art, voice, code, math problem solutions, answers to homework questions, etc.) by drawing on patterns learned from the very large data sets used to train them. Language models such as GPT-3 are also autoregressive: they generate output one step (one token) at a time, predicting each next value from the values that came before it, namely the prompt plus everything generated so far.
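The term “autoregressive” comes from time-series statistics. Here is a minimal sketch of the idea using a noise-free numeric AR(p) rule rather than a language model; the function name `generate_ar` and the coefficients are illustrative assumptions. Each new value is a weighted sum of the previous p values and is then fed back in as input for the next step. A language model follows the same loop with tokens, except that the “weighted sum” is replaced by a large neural network that outputs a probability distribution over the next token.

```python
from typing import List


def generate_ar(history: List[float], coeffs: List[float], steps: int) -> List[float]:
    """Extend `history` by `steps` values using a noise-free AR(p) rule:

        x_t = coeffs[0] * x_{t-1} + coeffs[1] * x_{t-2} + ... + coeffs[p-1] * x_{t-p}
    """
    p = len(coeffs)
    if p == 0 or len(history) < p:
        raise ValueError("need at least one coefficient and p past values")
    seq = list(history)
    for _ in range(steps):
        # The p most recent values, newest first, so seq[-1] pairs with coeffs[0].
        recent = list(reversed(seq[-p:]))
        next_value = sum(c * x for c, x in zip(coeffs, recent))
        # Feed the prediction back in: it becomes "past" for the next step.
        seq.append(next_value)
    return seq


# Fibonacci is an AR(2) process with both coefficients equal to 1.
print(generate_ar([0.0, 1.0], [1.0, 1.0], 6))
# [0.0, 1.0, 1.0, 2.0, 3.0, 5.0, 8.0, 13.0]
```

Notice that the model never looks ahead: every value is computed only from values already produced, which is exactly why an early mistake can propagate into later output.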

Any decent Markov model can infer the most probable next state in a sequence of states, such as the most likely next word in a text message given the current word (a first-order Markov model), or the most likely next word or statement given several preceding words or statements (a higher-order Markov model). But unless the model bases each prediction on the combined logical sequence of a very large number of prior states, it may produce predicted “facts” downstream that contradict facts established upstream, whether earlier in the text or in the training data.
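A toy word-level Markov model makes the first-order versus higher-order distinction concrete. This is a minimal sketch, assuming a whitespace-tokenized corpus and simple frequency counts; the function names `train_markov` and `most_likely_next` and the tiny example corpus are hypothetical, chosen only for illustration. With one word of context, the model predicts “dark” after “look” because that is the most frequent follower overall; with two words of context, it predicts “bright” after “sales look”, which agrees with what the corpus actually says about sales.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional, Tuple


def train_markov(words, order: int) -> Dict[Tuple[str, ...], Counter]:
    """Count which word follows each run of `order` consecutive words."""
    counts: Dict[Tuple[str, ...], Counter] = defaultdict(Counter)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])  # the current state: the last `order` words
        counts[state][words[i + order]] += 1
    return counts


def most_likely_next(counts, state: Tuple[str, ...]) -> Optional[str]:
    """Return the most frequent successor of `state`, or None if the state was never seen."""
    followers = counts.get(state)  # .get does not insert a new key into the defaultdict
    if not followers:
        return None
    return followers.most_common(1)[0][0]


words = "sales look bright . risks look dark . costs look dark .".split()
first_order = train_markov(words, 1)
second_order = train_markov(words, 2)
print(most_likely_next(first_order, ("look",)))           # dark
print(most_likely_next(second_order, ("sales", "look")))  # bright
```

GPT-style transformers learn a neural network over a long context window rather than counting word tuples, but the example shows the same pressure: the more prior context a prediction can condition on, the less likely it is to contradict what came earlier.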

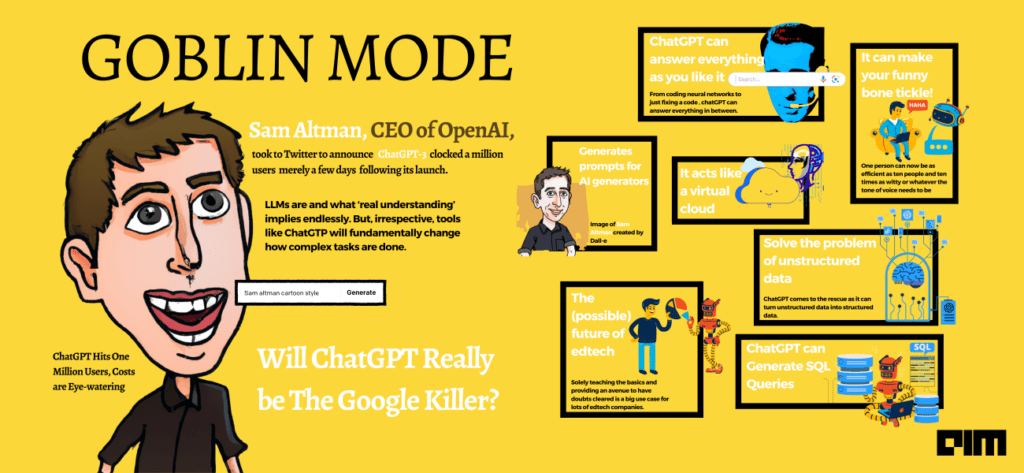

Many articles have been written recently about the latest and greatest generative AI language model application: ChatGPT (a product of OpenAI [2]), a conversational AI bot built on the GPT transformer-based language model. Some interesting failure cases of the type described above have been seen. It is very common for groundbreaking new technologies to have failure modes. One need only look at the early history of rocket launch tests in the 1950s to see some spectacular failures. In time, the technology was refined, safety “guardrails” were introduced, failure modes were understood and addressed, and risk factors were managed and mostly mitigated. We can and should hope that the same will be true of generative AI applications, including language models and the other generative AI model types (for images, videos, art, marketing copy, code writing, TL;DR summarization of articles, etc.).

And now for some productive fun. When search engines were first introduced for the World Wide Web, they were primarily keyword search engines: “find me pages containing these keywords”. Over time, keyword search gave way to searching metatags, then synonyms of the search words, then the context and semantics of the search words, and so on. Eventually, search engines could even list web pages that contain none of those search-word characteristics but are linked to by many other sites that do (i.e., “find me authoritative nodes in the knowledge graph associated with my search words”).
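Ranking pages by who links to them is the idea behind link-analysis algorithms such as Google's PageRank. The sketch below is a minimal, textbook-style power-iteration version, assuming a small in-memory link graph, the commonly cited damping factor of 0.85, and a fixed number of iterations; it is not how any production search engine works, and the function name and example graph are hypothetical.

```python
from typing import Dict, List


def pagerank(links: Dict[str, List[str]], damping: float = 0.85,
             iterations: int = 50) -> Dict[str, float]:
    """Score pages by power iteration; `links` maps each page to the pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    if n == 0:
        return {}
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page receives the "random jump" share of (1 - damping) / n.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current score evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical link graph: three pages link to "popular", which links back to "a".
graph = {"a": ["popular"], "b": ["popular"], "c": ["popular"], "popular": ["a"]}
scores = pagerank(graph)
print(max(scores, key=scores.get))  # popular
```

The page with the most inbound links scores highest even though nothing about its own content was examined, which is the “authoritative node” behavior described above. Page “a” also outranks “b” and “c”, because it is linked to by a high-scoring page.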

The magic pixie dust for getting the best search results is to type the best query into the search box. Well, ChatGPT is no different: giving it the best (or right) prompt is the key to getting good and useful results. Many articles (and even some e-books [4]) now offer lists of such “good prompts” (AKA ChatGPT Productivity Hacks) [5]. Instead of repeating those lists here, I provide this short list of 10 useful productivity hacks that good ChatGPT prompts can produce for you:

- Create content on any subject

- Write code for a specified task

- Review and comment on your code

- Write cold emails and personalize marketing copy to targeted customers

- Answer emails

- Create study guides and sample test questions from a large corpus of educational material

- Discover and summarize the important points from a collection of business or legal documents

- Prepare yourself for an interview or for a board meeting

- Translate and search foreign-language articles for specific topics and themes

- Let ChatGPT become your mentor to guide and instruct you in learning new things

Wishing you many productive prompt payoffs!

References:

[1] Cartoon: https://marketoonist.com/2023/01/ai-tidal-wave.html

[3] Graphic: https://analyticsindiamag.com/these-8-potential-use-cases-of-chatgpt-will-blow-your-mind/

[4] 50 Awesome ChatGPT Prompts: https://www.linkedin.com/posts/mengyaowang11_chatgpt-ugcPost-7017082919571619843-A9KB/

[5] The rise of the prompt engineer: https://www.datasciencecentral.com/the-rise-of-the-prompt-engineer/