Occasionally a novel neural network architecture comes along that enables a truly unique way of solving specific deep learning problems. This has certainly been the case with Generative Adversarial Networks (GANs), originally proposed by Ian Goodfellow et al. in a 2014 paper that has been cited more than 32,000 times since its publication. Among other applications, GANs have become the preferred method for synthetic image generation. The results of using GANs for creating realistic images of people who do not exist have raised many ethical issues along the way.

In this blog post we focus on using GANs to generate synthetic images of skin lesions for medical image analysis in dermatology.

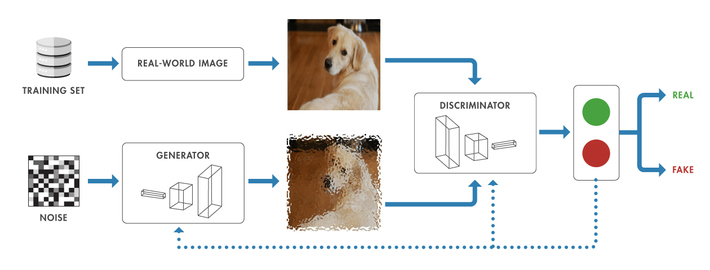

Figure 1 How a generative adversarial network (GAN) works.

A Quick GAN Lesson

Essentially, GANs consist of two neural network agents/models (called generator and discriminator) that compete with one another in a zero-sum game, where one agent’s gain is another agent’s loss. The generator is used to generate new plausible examples from the problem domain whereas the discriminator is used to classify examples as real (from the domain) or fake (generated). The discriminator is then updated to get better at discriminating real and fake samples in subsequent iterations, and the generator is updated based on how well the generated samples fooled the discriminator (Figure 1).
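To make the two competing objectives concrete, here is a minimal Python sketch of the loss values each network tries to minimize, computed from the discriminator's outputs on one mini-batch. It assumes the common binary cross-entropy formulation with the "non-saturating" generator loss; the function name `gan_losses` and the small `eps` guard are illustrative choices, not something taken from the example discussed in this post.

```python
import math

def gan_losses(real_scores, fake_scores, eps=1e-12):
    """Return (discriminator_loss, generator_loss) for one mini-batch.

    real_scores: discriminator outputs D(x) in (0, 1) for real samples.
    fake_scores: discriminator outputs D(G(z)) in (0, 1) for generated samples.
    eps is a hypothetical guard against log(0).
    """
    # The discriminator wants D(x) close to 1 on real data
    # and D(G(z)) close to 0 on generated data.
    d_loss_real = -sum(math.log(s + eps) for s in real_scores) / len(real_scores)
    d_loss_fake = -sum(math.log(1.0 - s + eps) for s in fake_scores) / len(fake_scores)
    # The generator wants the discriminator to output 1 on its samples
    # (the "non-saturating" variant of the generator loss).
    g_loss = -sum(math.log(s + eps) for s in fake_scores) / len(fake_scores)
    return d_loss_real + d_loss_fake, g_loss

# A confident, mostly correct discriminator: its own loss is low,
# while the generator's loss is high.
d_loss, g_loss = gan_losses(real_scores=[0.9, 0.8], fake_scores=[0.1, 0.2])
print(f"discriminator loss = {d_loss:.3f}, generator loss = {g_loss:.3f}")
```

When the discriminator outputs 0.5 for every sample, it can no longer tell real from fake, and neither network has an advantage in the game.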

Since then, numerous architectural variations on, and improvements to, the original GAN idea have been proposed in the literature. Most GANs today are at least loosely based on the DCGAN (Deep Convolutional Generative Adversarial Network) architecture, formalized by Alec Radford, Luke Metz, and Soumith Chintala in their 2015 paper.

You're likely to see DCGAN, LAPGAN, and PGAN used for unsupervised techniques like image synthesis, and CycleGAN and Pix2Pix used for cross-modality image-to-image translation.

GANs for Medical Images

The use of GANs to create synthetic medical images is motivated by the following aspects:

- Medical (imaging) datasets are heavily unbalanced, i.e., they contain many more images of healthy patients than of patients with any given pathology. The ability to create synthetic images (in different modalities) of specific pathologies could help alleviate the problem and provide more and better samples for a deep learning model to learn from.

- Manual annotation of medical images is a costly process (compared to similar tasks for generic everyday images, which could be handled using crowdsourcing or smart image labeling tools). If a GAN-based solution were reliable enough to produce appropriate images requiring minimal labeling/annotation/validation by a medical expert, the time and cost savings would be appealing.

- Because the images are synthetically generated, there are no patient data or privacy concerns.

Some of the main challenges for using GANs to create synthetic medical images, however, are:

- Domain experts would still be needed to assess the quality of synthetic images while the model is being refined, adding significant time to the process before a reliable synthetic medical image generator can be deployed.

- Since we are ultimately dealing with patient health, the stakes involved in training (or fine-tuning) predictive models using synthetic images are higher than using similar techniques for non-critical AI applications. Essentially, if models learn from data, we must trust the data that these models are trained on.

The popularity of using GANs for medical applications has been growing at a fast pace in the past few years. In addition to synthetic image generation in a variety of medical domains, specialties, and image modalities, other applications of GANs such as cross-modality image-to-image translation (usually among MRI, PET, CT, and MRA) are also being researched in prominent labs, universities, and research centers worldwide.

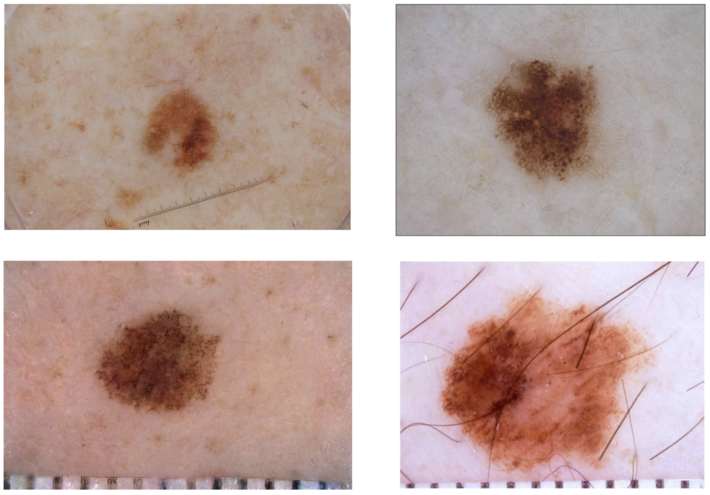

In the field of dermatology, unsupervised synthetic image generation methods have been used to create high-resolution synthetic skin lesion samples, which have also been successfully used in the training of skin lesion classifiers. State-of-the-art (SOTA) algorithms have been able to synthesize high-resolution images of skin lesions which expert dermatologists could not reliably tell apart from real samples. Figure 2 shows examples of synthetic images generated by a recently published solution as well as real images from the training dataset.

Figure 2 (L) synthetically generated images using state-of-the-art techniques; (R) actual skin lesion images from a typical training dataset.

An example

Here is an example of how to use MATLAB to generate synthetic images of skin lesions.

The training dataset consists of annotated images from the ISIC 2016 challenge, Task 3 (Lesion classification) data set, containing 900 dermoscopic lesion images in JPEG format.

The code is based on an example that uses a more generic dataset and has been customized for medical images. It highlights MATLAB's recently added capabilities for handling more complex deep learning tasks, including the ability to:

- Create deep neural networks with custom layers, in addition to commonly used built-in layers.

- Train deep neural networks with a custom training loop and automatic differentiation.

- Process and manage mini-batches of images using custom mini-batch processing functions.

- Evaluate the model gradients for each mini-batch and update the generator and discriminator parameters accordingly.
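The last three capabilities (a custom training loop, mini-batch handling, and per-batch gradient evaluation with alternating updates) can be illustrated with a deliberately tiny sketch. This is not the MATLAB code from the example: it is a toy 1-D GAN in plain Python, with hand-derived gradients standing in for automatic differentiation and plain gradient descent standing in for whatever optimizer the real example uses. All names (`train_toy_gan`, `disc_grads`, `gen_grads`) and hyperparameter values are hypothetical.

```python
import math
import random

def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))

def disc_loss(w, c, real, fake):
    """Mean of -log D(x) - log(1 - D(G(z))), with D(x) = sigmoid(w*x + c)."""
    return sum(-math.log(sigmoid(w * x + c)) - math.log(1.0 - sigmoid(w * g + c))
               for x, g in zip(real, fake)) / len(real)

def disc_grads(w, c, real, fake):
    """Hand-derived gradient of disc_loss with respect to (w, c)."""
    gw = gc = 0.0
    for x, g in zip(real, fake):
        s_real, s_fake = sigmoid(w * x + c), sigmoid(w * g + c)
        gw += -(1.0 - s_real) * x + s_fake * g
        gc += -(1.0 - s_real) + s_fake
    return gw / len(real), gc / len(real)

def gen_loss(a, b, w, c, noise):
    """Mean of -log D(G(z)), with G(z) = a*z + b."""
    return sum(-math.log(sigmoid(w * (a * z + b) + c)) for z in noise) / len(noise)

def gen_grads(a, b, w, c, noise):
    """Hand-derived gradient of gen_loss with respect to (a, b)."""
    ga = gb = 0.0
    for z in noise:
        s_fake = sigmoid(w * (a * z + b) + c)
        ga += -(1.0 - s_fake) * w * z
        gb += -(1.0 - s_fake) * w
    return ga / len(noise), gb / len(noise)

def train_toy_gan(data, epochs=5, batch_size=8, lr=0.05, seed=0):
    """Alternate discriminator and generator updates over shuffled mini-batches."""
    rng = random.Random(seed)
    a, b, w, c = 1.0, 0.0, 0.1, 0.0  # arbitrary starting parameters
    for _ in range(epochs):
        shuffled = list(data)
        rng.shuffle(shuffled)
        # Any partial mini-batch at the end of the epoch is dropped.
        for i in range(len(shuffled) // batch_size):
            real = shuffled[i * batch_size:(i + 1) * batch_size]
            noise = [rng.gauss(0.0, 1.0) for _ in real]
            fake = [a * z + b for z in noise]
            # 1) Update the discriminator on real and generated samples.
            gw, gc = disc_grads(w, c, real, fake)
            w, c = w - lr * gw, c - lr * gc
            # 2) Update the generator against the updated discriminator.
            ga, gb = gen_grads(a, b, w, c, noise)
            a, b = a - lr * ga, b - lr * gb
    return a, b, w, c

# "Real" data clustered around 3.0; the generator starts out centered at 0.0.
data_rng = random.Random(1)
data = [data_rng.gauss(3.0, 0.5) for _ in range(64)]
a, b, w, c = train_toy_gan(data)
print(f"generator: a = {a:.3f}, b = {b:.3f}; discriminator: w = {w:.3f}, c = {c:.3f}")
```

The point of the sketch is the shape of the loop, not the quality of the result: a model this small is not expected to settle at a stable solution, and a real image GAN replaces the scalar parameters with convolutional networks and the hand-written gradients with automatic differentiation.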

The code walks through creating synthetic images using GANs from start (loading and augmenting the dataset) to finish (training the model and generating new images).

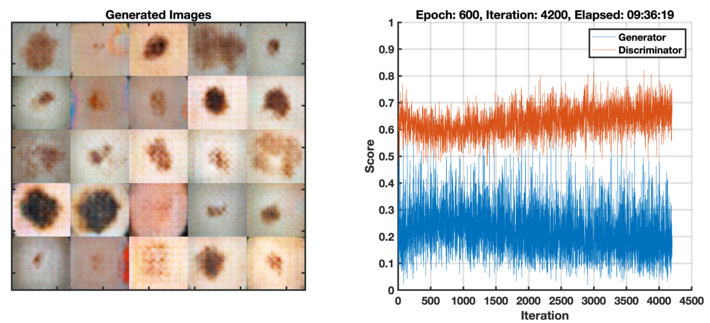

One of the nicest features of using MATLAB to create synthetic images is the ability to visualize the generated images and score plots as the networks are trained (and, at the end of training, rewind and watch the entire process in a movie-player-style interface embedded into the Live Script). Figure 3 shows a screenshot of the process after 600 epochs / 4200 iterations. The total training time on a 2021 M1 Mac mini with 16 GB of RAM and no GPU was close to 10 hours.

Figure 3 Snapshot of the GAN after training for 600 epochs / 4200 iterations. On the left: 25 randomly selected generated images; on the right: generator (blue) and discriminator (red) curves showing score (between 0 and 1, where 0.5 is best) for each iteration.
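To see why 0.5 is the ideal value for both curves, it helps to write down how such scores can be computed. The post does not spell out the formula, so the sketch below assumes one common convention: the generator score is the mean probability the discriminator assigns to generated images being real, and the discriminator score is the average of its mean probability on real images and one minus its mean probability on generated images. The function name `gan_scores` is hypothetical.

```python
def gan_scores(prob_real, prob_generated):
    """Return (generator_score, discriminator_score), both in [0, 1].

    prob_real:      discriminator probabilities of "real" for real images.
    prob_generated: discriminator probabilities of "real" for generated images.
    The formulas are an assumed convention, not taken from the post.
    """
    mean_real = sum(prob_real) / len(prob_real)
    mean_generated = sum(prob_generated) / len(prob_generated)
    # High when generated images are mistaken for real ones.
    generator_score = mean_generated
    # High when real images are accepted and generated images are rejected.
    discriminator_score = (mean_real + (1.0 - mean_generated)) / 2.0
    return generator_score, discriminator_score

# A discriminator that is clearly winning the game in this mini-batch.
g_score, d_score = gan_scores(prob_real=[0.9, 0.8, 0.95], prob_generated=[0.1, 0.2, 0.05])
print(f"generator score = {g_score:.2f}, discriminator score = {d_score:.2f}")
```

Under this convention, a generator score near 0 with a discriminator score near 1 means the discriminator is overpowering the generator, while both scores hovering around 0.5 means neither network dominates.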

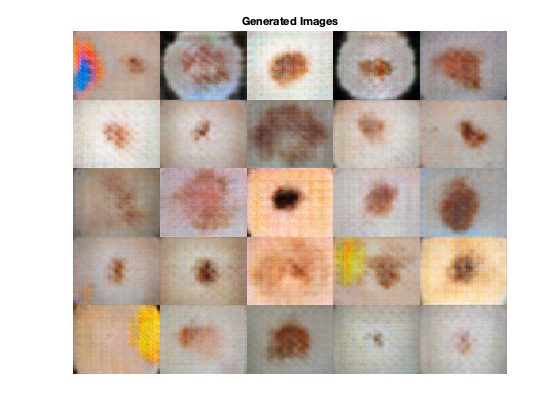

Figure 4 shows additional examples of 25 randomly selected synthetically generated images after training has completed. The resulting images resemble skin lesions but are not realistic enough to fool a layperson, much less a dermatologist. They indicate that the solution works (notice how the images are very diverse in nature, capturing the diversity of the training set used by the discriminator), but they display several imperfections, among them: a noisy periodic pattern (in what appears to be an 8×8 grid of blocks across the image) and other visible artifacts. It is worth mentioning that the network has also learned a few meaningful artifacts (such as colorful stickers) that are actually present in a significant number of images from the training set.

Figure 4 Examples of synthetically generated images.

Practical hints and tips

If you choose to go down the path of improving, expanding, and adapting the example to your needs, keep in mind that:

- Image synthesis using GANs is a very time-consuming process (as are most deep learning solutions). Be sure to secure as many computational resources as you can.

- Several things can go wrong, and they can be detected by inspecting the training progress. Among them are convergence failure (when the generator and discriminator do not reach a balance during training, with one of them overpowering the other) and mode collapse (when the GAN produces a small variety of images with many duplicates and little diversity in the output). Our example doesn't suffer from either problem.

- Your results may not look great (contrast Figure 4 with Figure 2), but that is to be expected. After all, in this example we are basically using the standard DCGAN (deep convolutional generative adversarial network). Specialized work in synthetic skin lesion image generation has moved significantly beyond DCGAN; SOTA solutions (such as the one by Bissoto et al. and the one by Baur et al.) use more sophisticated architectures, normalization options, and validation strategies.
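Visual inspection is the usual way to spot mode collapse, but a rough numeric check can complement it. The sketch below is one simple heuristic, not an established metric: it treats each generated image as a flattened vector of pixel values and reports the fraction of image pairs that are nearly identical. The function name `near_duplicate_fraction` and the distance threshold are hypothetical and would need tuning for real images.

```python
import math
from itertools import combinations

def near_duplicate_fraction(samples, threshold):
    """Fraction of sample pairs whose Euclidean distance is below threshold.

    samples: list of equal-length sequences of floats (flattened images).
    A value close to 1.0 suggests that most generated samples look alike.
    """
    pairs = list(combinations(samples, 2))
    if not pairs:
        return 0.0
    close = sum(1 for u, v in pairs if math.dist(u, v) < threshold)
    return close / len(pairs)

# Tiny two-pixel "images" for illustration only.
diverse = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
collapsed = [[0.5, 0.5], [0.5, 0.51], [0.5, 0.5], [0.9, 0.1]]
print("diverse batch:  ", near_duplicate_fraction(diverse, threshold=0.1))
print("collapsed batch:", near_duplicate_fraction(collapsed, threshold=0.1))
```

Tracking a number like this across training snapshots can flag a sudden loss of diversity, although raw pixel distance is a crude proxy for perceptual similarity.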

Key takeaways

GANs (and their numerous variations) are here to stay. They are, according to Yann LeCun, the coolest thing since sliced bread. Many different GAN architectures have been successfully used for generating realistic (i.e., semantically meaningful) synthetic images, which may help train deep learning models in cases where real images are rare, difficult to find, and expensive to annotate.

In this blog post we have used MATLAB to show how to generate synthetic images of skin lesions using a simple DCGAN and training images from the ISIC archive.

Medical image synthesis is a very active research area, and new examples of successful applications of GANs in different medical domains, specialties, and image modalities are likely to emerge in the near future. If you're interested in learning more about it, check out this review paper and use our example as a starting point for further experimentation.