For statistical models, selecting predictors is what tests the mettle of data scientists. It is hard to lay out a fixed set of steps, because at every step analysts need to evaluate the situation and decide what to do next. Predictive models are a different story: if the relationships among the variables are not the main focus, things get easier. Analysts can run stepwise regression models and let the data produce the best predictions. However, if the main focus is on answering research questions that describe relationships, predictor selection can give analysts a really tough time.
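
For the predictive case, stepwise selection is easy to automate. The sketch below is a minimal forward-selection loop in Python with NumPy. It assumes AIC as the criterion for adding a variable and stopping. Many stepwise procedures use p-value thresholds instead, and some also drop variables along the way. The function names and the simulated data are illustrative only and do not come from any particular package.

```python
import numpy as np


def ols_rss(X, y):
    """Residual sum of squares of an OLS fit of y on X plus an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return float(resid @ resid)


def aic(rss, n, k):
    """Gaussian AIC up to an additive constant; k counts the intercept too."""
    return n * np.log(rss / n) + 2 * k


def forward_select(X, y):
    """Greedy forward selection: add the column that lowers AIC most, stop when none does."""
    n, p = X.shape
    selected = []
    best = aic(float(((y - y.mean()) ** 2).sum()), n, 1)  # intercept-only model
    while len(selected) < p:
        # candidate model = selected columns + one new column + intercept
        scores = [
            (aic(ols_rss(X[:, selected + [j]], y), n, len(selected) + 2), j)
            for j in range(p)
            if j not in selected
        ]
        score, j = min(scores)
        if score >= best:
            break  # no remaining column improves AIC
        best = score
        selected.append(j)
    return selected


# Simulated data: only columns 0 and 2 actually drive y
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 5))
y = 3 * X[:, 0] - 2 * X[:, 2] + rng.normal(size=500)
print(forward_select(X, y))
```

On this simulated data, the first two columns chosen should be 0 and 2, the only ones that generate y. Whether any noise columns slip in after them depends on the random draw.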

It is easy to say “use theory” or “test your research question,” but that advice ignores many practical issues. For example, say there are 10 different variables that all measure the same theoretical construct, and it is not clear which one to use. Or analysts could, in theory, make a case for including all 40 demographic control variables, but when all of them are put in together, all of their coefficients become non-significant.

This is one way of looking at things. Cook and Weisberg in Residuals and Influence in Regression and Draper and Smith in Applied Regression Analysis, 3rd Edition, to name a few, hold that stepwise procedures generally do not produce the best prediction model. In their view, users seeking the best prediction model should use a branch-and-bound algorithm instead; stepwise algorithms have gone the way of the dodo and are only of historical interest. Branch-and-bound methods are not a perfect solution either: although they are much faster than fitting all possible regressions, they remain exponential-time algorithms.
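
Branch-and-bound finds the best subset without fitting every candidate. The sketch below does not implement branch-and-bound. Instead, it is the brute-force all-possible-regressions search that branch-and-bound is designed to prune, written in Python with NumPy on simulated data. It makes the cost visible: with p candidate predictors there are 2**p - 1 non-empty subsets to fit.

```python
import itertools

import numpy as np


def ols_rss(X, y):
    """Residual sum of squares of an OLS fit of y on X plus an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return float(resid @ resid)


def best_subsets(X, y):
    """Fit every non-empty subset of columns and keep the lowest-RSS subset of each size."""
    p = X.shape[1]
    best = {}
    n_fits = 0
    for k in range(1, p + 1):
        for cols in itertools.combinations(range(p), k):
            rss = ols_rss(X[:, list(cols)], y)
            n_fits += 1
            if k not in best or rss < best[k][0]:
                best[k] = (rss, cols)
    return best, n_fits


# Simulated data: columns 1 and 4 generate y
rng = np.random.default_rng(1)
X = rng.normal(size=(300, 6))
y = 2 * X[:, 1] + 1.5 * X[:, 4] + rng.normal(size=300)

best, n_fits = best_subsets(X, y)
print(n_fits)       # 63, i.e. 2**6 - 1 models
print(best[2][1])   # (1, 4): the two columns that actually generate y
```

Adding a seventh candidate roughly doubles the work to 127 fits. Branch-and-bound avoids many of these fits by using bounds on the residual sum of squares to skip subsets that cannot beat the current best, but its worst case is still exponential.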

So how should it be done? The following guidelines, if followed carefully, can help you arrive at a model that is, if not the “best,” at least “near-best.”

1. Regression coefficients are marginal results

This means that the coefficient for each predictor reflects that predictor's unique effect on the response variable. That is not its full effect unless the predictors are all independent of each other. It is the effect that remains after controlling for the other variables in the model, so it always depends on what else is in the model.

Coefficients can change quite a bit depending on what else is in the model. Interestingly, if two or more predictors overlap in the way they explain the outcome, that overlap is not reflected in either of their regression coefficients. It shows up in the overall model F statistic and the R-squared, but not in the coefficients.
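
A small simulation in Python with NumPy illustrates both points. The data are made up. The coefficient on x1 changes depending on whether the correlated predictor x2 is in the model. The variance the two predictors share is counted in the model R-squared but in neither coefficient.

```python
import numpy as np


def fit(X, y):
    """Return (slopes without the intercept, R-squared) for an OLS fit with an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    r2 = 1 - (resid @ resid) / ((y - y.mean()) @ (y - y.mean()))
    return beta[1:], float(r2)


rng = np.random.default_rng(42)
n = 20_000
x1 = rng.normal(size=n)
x2 = 0.8 * x1 + 0.6 * rng.normal(size=n)   # corr(x1, x2) is about 0.8
y = 1.0 * x1 + 1.0 * x2 + rng.normal(size=n)

b_x1_alone, r2_x1 = fit(x1[:, None], y)
b_x2_alone, r2_x2 = fit(x2[:, None], y)
b_both, r2_both = fit(np.column_stack([x1, x2]), y)

print(b_x1_alone)             # about 1.8: x1 also picks up the effect it shares with x2
print(b_both)                 # about [1.0, 1.0]: each coefficient is now a unique effect
print(r2_x1, r2_x2, r2_both)  # about 0.70, 0.70 and 0.78
```

Each predictor's unique contribution (r2_both - r2_x2 for x1, r2_both - r2_x1 for x2) is only about 0.08 here. Most of the explained variance is therefore shared and is attributed to neither coefficient.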

2. Instead of bell curves, look out for interesting breaks in the middle of the distribution

It is no exaggeration to say that you should always start with descriptive statistics. They will help you find errors you may have missed during data cleaning, but more importantly, they show you what you are working with. Start with univariate descriptive graphs. Remember, the goal here is not to look for bell curves but for interesting breaks in the middle of the distribution.
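
Histograms are the usual tool here, but breaks in the middle of a distribution can also be flagged programmatically. The sketch below assumes NumPy and uses a hypothetical helper, interior_gaps. It lists empty histogram bins that sit between the lowest and highest non-empty bins. The result depends on the number of bins, so treat it as a prompt to look at the graph rather than a replacement for it.

```python
import numpy as np


def interior_gaps(values, bins=20):
    """Return (left, right) edges of empty bins between the first and last non-empty bins."""
    counts, edges = np.histogram(values, bins=bins)
    nonempty = np.flatnonzero(counts)
    if nonempty.size == 0:
        return []
    first, last = nonempty[0], nonempty[-1]
    return [
        (float(edges[i]), float(edges[i + 1]))
        for i in range(first, last + 1)
        if counts[i] == 0
    ]


# Made-up data with a hole between 10 and 19
data = np.concatenate([np.arange(0, 10), np.arange(20, 30)])
print(interior_gaps(data, bins=6))  # two empty bins, spanning roughly 9.7 to 19.3
```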

You need to know what you are working with, such as variables with a huge number of points at a single value, or with more or less variation than expected. Once you plug these variables into the model, they may behave strangely, so knowing what they look like in advance will help you understand why.
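
One quick check for variables with a huge number of points at a single value is to compute the share of observations taken by each distinct value. The helper below is a hypothetical sketch that uses only the Python standard library. The 10% threshold and the example data are arbitrary assumptions.

```python
from collections import Counter


def find_spikes(values, threshold=0.10):
    """Return {value: share} for values that account for more than `threshold` of observations."""
    n = len(values)
    counts = Counter(values)
    return {v: c / n for v, c in counts.items() if c / n > threshold}


# Made-up example: half of 100 respondents report exactly 0
hours = [0] * 50 + list(range(1, 51))
print(find_spikes(hours))  # {0: 0.5}
```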

3. Running bivariate descriptives, including graphs, should be the next step

Understanding how each potential predictor relates to the outcome and to the other predictors is a great help. As mentioned earlier, regression coefficients are marginal results, and knowing the bivariate relationships among the variables helps analysts understand why certain variables lose significance in bigger models.
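
As a complement to a correlation table, the sketch below lists the pairs of variables whose Pearson correlation is strong in either direction. It uses Python with NumPy. The helper name, the 0.7 threshold and the toy data are assumptions for illustration. Highly correlated pairs of predictors are the ones most likely to trade significance when both go into a bigger model.

```python
import itertools

import numpy as np


def correlated_pairs(data, threshold=0.7):
    """Return (name_a, name_b, r) for every pair of variables with |Pearson r| >= threshold."""
    names = list(data)
    # np.corrcoef treats each row as one variable
    r = np.corrcoef(np.array([data[name] for name in names], dtype=float))
    return [
        (names[i], names[j], float(r[i, j]))
        for i, j in itertools.combinations(range(len(names)), 2)
        if abs(r[i, j]) >= threshold
    ]


# Toy data: x2 is an exact multiple of x1, x3 is uncorrelated with both
data = {
    "outcome": [2.0, 3.9, 6.1, 8.0, 9.9],
    "x1": [1, 2, 3, 4, 5],
    "x2": [2, 4, 6, 8, 10],
    "x3": [1, -1, 1, -1, 1],
}
for a, b, r in correlated_pairs(data):
    print(f"{a} / {b}: r = {r:.3f}")
```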

In addition to correlations or crosstabs, scatterplots of each relationship are extremely informative. They show whether a linear relationship is plausible or whether you will need to deal with nonlinearity in some way.
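
Scatterplots should remain the main tool. A rough numeric companion is to compare the R-squared of a straight-line fit with that of a low-order polynomial for each predictor. Below is a minimal sketch using NumPy's polyfit on made-up U-shaped data, where a linear fit explains essentially nothing.

```python
import numpy as np


def r2_poly(x, y, degree):
    """R-squared of a least-squares polynomial fit of y on x of the given degree."""
    coefs = np.polyfit(x, y, degree)
    resid = y - np.polyval(coefs, x)
    return float(1 - (resid @ resid) / ((y - y.mean()) @ (y - y.mean())))


x = np.linspace(-3, 3, 61)
y = x ** 2  # a U-shaped relationship
print(r2_poly(x, y, 1), r2_poly(x, y, 2))  # about 0.0 and 1.0
```

A large jump from the linear to the quadratic R-squared is a hint to look closely at the scatterplot and decide how to handle the nonlinearity.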

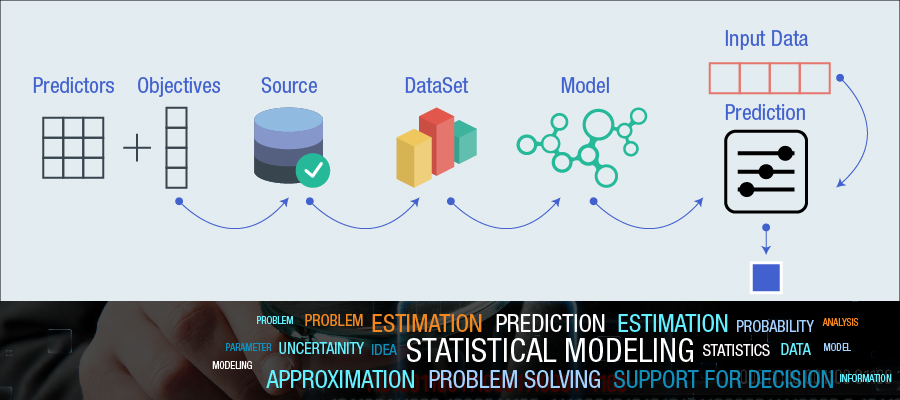

4. Think of predictors in sets

Grouping predictors into theoretically distinct sets lets you see how related variables work together, and then what happens once all the sets are put together. Taking binge drinking among young people as an example, potential sets of variables may include:

- Demographics (age, year in school, socio-economic status)

- Mental health history (diagnoses of mental illness, family history of alcoholism)

- Current psychological health (stress, depression)

- Social issues (feelings of isolation, connection to family, number of friends)

Variables within a set are usually more strongly correlated with each other than with variables in other sets. Putting everything in at once makes it hard to see the relationships, and the result is usually a huge mess. It makes much more sense to build a model for each set separately and then combine them into a theoretically meaningful model, with a solid understanding of how the pieces fit together.
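
Building each set separately and then combining them can be sketched in Python with NumPy. The three sets, their variables and the outcome are simulated stand-ins for the binge-drinking example, not real data. The hypothetical function blockwise_r2 reports the R-squared of each set on its own. It then reports the cumulative R-squared as the sets are added in order.

```python
import numpy as np


def r_squared(X, y):
    """R-squared of an OLS fit of y on the columns of X plus an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return float(1 - (resid @ resid) / ((y - y.mean()) @ (y - y.mean())))


def blockwise_r2(sets, y):
    """R-squared of each set alone, then cumulative R-squared as sets are added in order."""
    alone = {name: r_squared(X, y) for name, X in sets.items()}
    cumulative, blocks = [], []
    for name, X in sets.items():
        blocks.append(X)
        cumulative.append((name, r_squared(np.column_stack(blocks), y)))
    return alone, cumulative


# Simulated stand-ins for three sets of predictors (not real data)
rng = np.random.default_rng(7)
n = 1_000
demographics = rng.normal(size=(n, 3))
psychological = rng.normal(size=(n, 2)) + 0.5 * demographics[:, :1]  # overlaps with demographics
social = rng.normal(size=(n, 3))
y = demographics[:, 0] + psychological[:, 1] + 0.5 * social[:, 2] + rng.normal(size=n)

sets = {"demographics": demographics, "psychological": psychological, "social": social}
alone, cumulative = blockwise_r2(sets, y)
print(alone)
print(cumulative)
```

Comparing a set's R-squared on its own with its increment when added after the earlier sets shows how much of its explanatory power overlaps with them.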

5. Interpret the model while you build it, and vice versa

Data scientists should stop and listen to the story that each model they run has to tell.

Did the R-squared change? Take time to look at the coefficients: how much did they change between the model with the control variables and the one without?
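
These questions can be answered side by side for any pair of nested models. The sketch below uses Python with NumPy. The function name and the simulated exposure and control data are hypothetical. It reports the change in R-squared, the partial F statistic for the added variables, and how much each shared coefficient moved.

```python
import numpy as np


def ols(X, y):
    """OLS with an intercept; returns (coefficients, residual sum of squares)."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return beta, float(resid @ resid)


def compare_nested(X_small, X_extra, y):
    """Compare y ~ X_small with y ~ X_small + X_extra; both X arguments are 2-D arrays."""
    n, k = X_small.shape
    q = X_extra.shape[1]                       # number of added predictors
    tss = float(((y - y.mean()) ** 2).sum())
    b_small, rss_small = ols(X_small, y)
    b_big, rss_big = ols(np.column_stack([X_small, X_extra]), y)
    df_resid = n - (k + q) - 1                 # residual df of the larger model
    return {
        "delta_r2": (rss_small - rss_big) / tss,
        "partial_F": ((rss_small - rss_big) / q) / (rss_big / df_resid),
        # how much each coefficient of X_small moved (intercept excluded)
        "coef_change": b_big[1:k + 1] - b_small[1:],
    }


# Simulated exposure x and control c (hypothetical data)
rng = np.random.default_rng(11)
n = 5_000
c = rng.normal(size=n)
x = 0.7 * c + 0.714 * rng.normal(size=n)
y = 0.5 * x + 1.0 * c + rng.normal(size=n)

result = compare_nested(x[:, None], c[:, None], y)
print(result)
```

Here, the coefficient on x drops by about 0.7 once the control c is added, because c is correlated with both x and y. A p-value for the partial F statistic can be taken from the F distribution with q and n - (k + q) - 1 degrees of freedom, for example with scipy.stats.f.sf.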

Pausing to do all this helps you make better decisions about which model to run next.

6. Include every variable used in an interaction in the model

When deciding what to leave in the model and what to boot, it is easiest to start by getting rid of terms that are unwanted or not significant.

Eliminating non-significant interactions first is always a good idea. The only exception is when the interaction is central to the research question and it is important to show that it was not significant.

If the interaction is significant, however, you cannot take out the terms for its component variables (the variables that make up the interaction). The interaction can only be interpreted if its component terms are in the model.
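
A simulated example in Python with NumPy shows what goes wrong when a component is dropped. The data are made up, and neither component is centered at zero. Without its component, the interaction coefficient absorbs part of the missing main effect and no longer means what it did in the full model.

```python
import numpy as np


def ols_coefs(columns, y):
    """OLS coefficients (intercept first) of y on the given list of 1-D columns."""
    design = np.column_stack([np.ones(len(y))] + list(columns))
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta


rng = np.random.default_rng(3)
n = 50_000
a = rng.normal(2.0, 1.0, size=n)   # component a, mean 2
b = rng.normal(5.0, 1.0, size=n)   # component b, mean 5
y = 2.0 * a + 3.0 * b + 1.5 * a * b + rng.normal(size=n)

full = ols_coefs([a, b, a * b], y)   # intercept, a, b, a*b
reduced = ols_coefs([a, a * b], y)   # component b dropped: intercept, a, a*b

print(full[3])     # about 1.5, the true interaction
print(reduced[2])  # about 2.7: the "interaction" now also carries part of b's main effect
```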

7. Stay focused on the research question

With ever more data coming in, you will have large data sets to work on, and it is very easy to step off the yellow brick road and straight into the poppies. You may discover many interesting relationships, and they will certainly be shiny. But a few months down the line, you may find yourself testing every possible predictor, categorized in every possible way, without making any real progress.

Stay focused on your destination: the research question. Writing it down and taping it to the wall can help.

Focus on appropriate interpretation, not “correctness”

The steps above apply to any type of model: linear regression, ANOVA, logistic regression, or mixed models. The suggestion is to consider them, or at least give them priority, whenever you do a statistical analysis.

The point about automated selection methods is that they are more useful for prediction models than for theoretical ones. Branch-and-bound methods, though they may seem easy to adapt, may not prove that helpful in such situations. Also, allowing interactions without main effects is not always an error. It remains true, however, that the meaning of several model parameters changes whenever other parameters are added or removed. Researchers or data scientists who assume a certain meaning for their interaction coefficient should realize that this meaning has likely changed. What matters here is appropriate interpretation rather than “correctness”.