In the evolving landscape of large language models (LLMs), it is increasingly evident that their immense size and broad applicability present several limitations. Despite the significant advancements made in natural language processing, it is crucial to acknowledge the inefficiencies, high costs, and privacy concerns associated with LLMs.

In September 2023, I authored a two-part blog series to delve into the potential of small language models:

- “Use Case Language Models: Taming the LLM Beast – Part 1” discusses the challenges and opportunities associated with LLMs, focusing on how organizations can effectively manage and leverage these models for specific use cases.

- “Entity Language Models: Monetizing Language Models – Part 2” explores the monetization aspects of language models, emphasizing the potential of domain-centric, entity-based models to drive business value and highlighting strategies for deploying these models to optimize operations and generate insights.

While LLMs play a pivotal role in generative analytics, only a few companies have the capabilities and resources to develop and maintain them. Additionally, approaches such as Retrieval-Augmented Generation (RAG) and fine-tuning public LLMs do not fully leverage an organization’s proprietary knowledge while safeguarding sensitive data and intellectual property. Small language models (SLMs) tailored to specific domains provide a more effective solution, offering enhanced precision, relevance, and security.

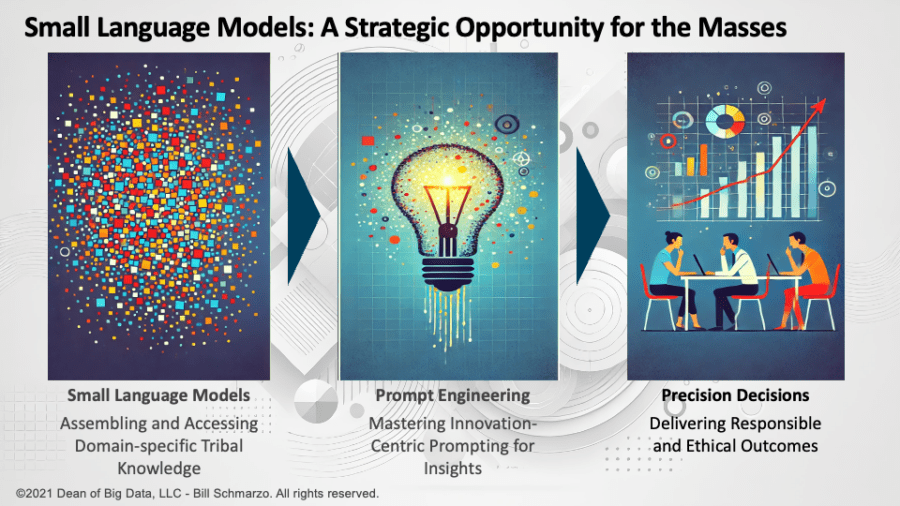

This is where small language models come in. Designed to collate, analyze, and categorize an organization’s proprietary information sources, SLMs represent a logical step toward democratizing access to advanced language model capabilities. By building SLMs around domain-specific business requirements, organizations can ultimately unlock substantial business value.

The Limitations of Public Large Language Models

Recent industry research and publications have increasingly underscored the relative ineffectiveness of public LLMs in delivering specialized, context-specific insights. While LLMs excel at general tasks, their performance often falters when applied to niche domains or specific organizational needs. This is particularly problematic for businesses that require precision and relevance in their AI applications[1].

Moreover, the data privacy and security concerns associated with public LLMs are non-trivial. Utilizing these models often exposes sensitive organizational data to external entities, increasing the risk of data breaches and intellectual property theft. In an era where data is critical, safeguarding this asset is paramount.

From an operational standpoint, training and deploying LLMs involves exorbitant financial and computational costs. These models require vast amounts of data and computing power, which puts them out of reach for many organizations. In contrast, SLMs, with their lower resource requirements, offer a more sustainable and scalable alternative.
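To put the cost gap in rough numbers, the sketch below uses two standard back-of-the-envelope estimates. The first is weight memory, calculated as parameter count times bytes per parameter. The second is the widely cited approximation that training a dense transformer takes about 6 × N × D floating-point operations, where N is the number of parameters and D is the number of training tokens. The 70-billion and 3-billion parameter sizes and the one-trillion-token budget are hypothetical illustrations, not measurements of any particular model.

```python
# Back-of-the-envelope resource estimates for a large vs. a small model.
# Model sizes and token budget are hypothetical, not figures for real models.

BYTES_PER_PARAM_FP16 = 2  # each 16-bit weight occupies two bytes


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Memory to hold the weights alone, in gigabytes (10**9 bytes).

    Excludes activations, KV cache, and optimizer state, which add more.
    """
    return num_params * bytes_per_param / 1e9


def training_flops(num_params: float, num_tokens: float) -> float:
    """Rule-of-thumb training compute for a dense transformer: C ~= 6 * N * D."""
    return 6 * num_params * num_tokens


if __name__ == "__main__":
    tokens = 1e12  # hypothetical budget: one trillion training tokens
    for name, params in [("hypothetical LLM", 70e9), ("hypothetical SLM", 3e9)]:
        print(
            f"{name}: {weight_memory_gb(params):.0f} GB of fp16 weights, "
            f"{training_flops(params, tokens):.2e} training FLOPs"
        )
    # hypothetical LLM: 140 GB of fp16 weights, 4.20e+23 training FLOPs
    # hypothetical SLM: 6 GB of fp16 weights, 1.80e+22 training FLOPs
```

Both estimates scale linearly with parameter count. Under these assumptions, a model roughly 23 times smaller therefore needs roughly 23 times less memory and training compute. Treat this as an order-of-magnitude illustration rather than a budget.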

The Strategic Advantage of Small Language Models

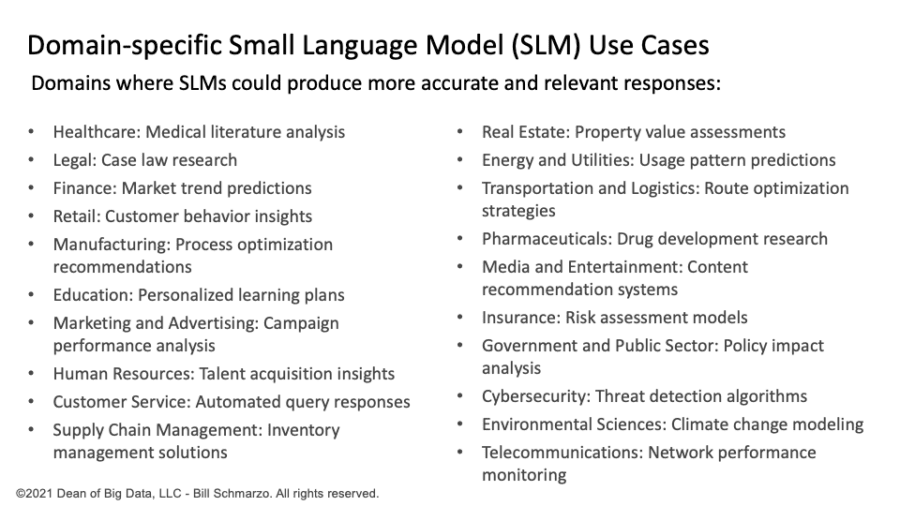

SLMs tailored to specific domains provide a level of precision and relevance that general-purpose LLMs cannot match. By focusing on domain-specific data and objectives, SLMs can deliver highly targeted insights that drive business value. For instance, a healthcare organization might develop an SLM trained exclusively on medical literature and patient data, ensuring the model’s outputs are relevant and actionable. Similarly, a law firm might use an SLM trained on legal documents, case law, and regulatory texts to provide precise legal research and contract analysis, improving the efficiency and accuracy of its legal advice. In the financial sector, a bank might implement an SLM trained on market data, financial reports, and economic indicators to generate targeted investment insights and risk assessments that strengthen decision-making and strategy development. Figure 1 shows other business and operational areas where domain-specific SLMs could deliver more value at a lower cost.

Figure 1: Domain-specific SLM Use Cases

Another significant advantage of SLMs is enhanced data privacy. Organizations can train these models on proprietary datasets within their own secure environments, mitigating the risks associated with data exposure. This supports compliance with data protection regulations and builds trust with stakeholders. Operational efficiency is another area where SLMs shine: their lower computational requirements translate into faster deployment and reduced costs, making it feasible for organizations to iterate quickly and refine their models as needs evolve.

Building and Expanding Intellectual Property with Small Language Models

Developing SLM capabilities allows organizations to build on and significantly expand their intellectual property. By integrating SLMs with existing data systems, businesses can create a feedback loop that continuously improves the model’s performance. This incremental learning helps keep the model relevant and effective over time.
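A feedback loop of this kind can be illustrated with a deliberately tiny stand-in. The sketch below assumes a hypothetical `IncrementalTextClassifier`, which is a multinomial naive Bayes model over word counts, not a language model. It shows the core idea: each corrected or newly labeled example updates the model's counts in place, so the model improves without being retrained from scratch. The ticket texts and labels are invented for illustration.

```python
import math
from collections import Counter, defaultdict


class IncrementalTextClassifier:
    """Hypothetical toy stand-in for an SLM refined through a feedback loop.

    A multinomial naive Bayes classifier over whitespace-separated words.
    Because it only stores counts, new labeled examples can be folded in one
    at a time without retraining from scratch.
    """

    def __init__(self) -> None:
        self.word_counts: defaultdict[str, Counter] = defaultdict(Counter)  # label -> word counts
        self.label_counts: Counter = Counter()  # label -> number of examples seen
        self.vocab: set[str] = set()

    def update(self, text: str, label: str) -> None:
        """Incorporate one labeled example (e.g., a user correction)."""
        words = text.lower().split()
        self.word_counts[label].update(words)
        self.label_counts[label] += 1
        self.vocab.update(words)

    def predict(self, text: str) -> str:
        """Return the label with the highest Laplace-smoothed log posterior."""
        if not self.label_counts:
            raise ValueError("model has not seen any labeled examples yet")
        words = text.lower().split()
        total_examples = sum(self.label_counts.values())
        best_label, best_score = "", -math.inf
        for label, count in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(count / total_examples)  # log prior
            for w in words:
                score += math.log((counts[w] + 1) / denom)  # smoothed log likelihood
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    model = IncrementalTextClassifier()
    model.update("invoice overdue payment", "billing")
    model.update("password reset login", "account")
    # A user corrects a misrouted ticket; the correction is fed straight back in.
    model.update("refund for duplicate charge", "billing")
    print(model.predict("duplicate charge on invoice"))  # billing
```

A real SLM would be updated through fine-tuning or other incremental training procedures rather than count updates. The loop structure is the same: predict, collect feedback, fold it back in.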

SLMs offer a clear advantage over LLMs in relevance and value creation. Their narrow domain focus ensures direct applicability to the business context, and SLM adoption can contribute to improved operational efficiency, customer satisfaction, and decision-making, driving tangible business outcomes.

Compared with techniques such as RAG and LLM fine-tuning, SLMs demonstrate superior performance on specialized tasks. While RAG and fine-tuning can improve LLMs to a degree, they often fall short of the precision and relevance offered by SLMs. By focusing on a specific set of objectives and data, SLMs provide more consistent and valuable outputs.

| Dimension | Public LLM RAG | Public LLM Fine-Tuning | Small Language Models |
|---|---|---|---|
| Relevance | Moderate: Can be tailored with relevant documents but still relies on broad base LLM. | Moderate-High: Fine-tuning improves relevance for specific tasks but is limited by the base model’s architecture and training data. | High: Tailored to specific use cases, providing highly relevant insights. |
| Security | Low-Moderate: Uses external sources, increasing the risk of data exposure. | Moderate: Fine-tuning requires sharing data with model providers. | High: Trained on proprietary datasets within secure environments, minimizing data exposure. |
| Costs | Moderate: Lower initial costs but can become expensive with high retrieval usage. | High: Significant computational resources required for fine-tuning and maintaining models. | Low-Moderate: Lower computational and operational costs compared to fine-tuning LLMs. |
| Accuracy | Moderate: Dependent on the quality of the retrieved documents and base LLM. | High: Fine-tuning improves accuracy for specific tasks but may overfit to the training data. | High: Tailored training ensures high accuracy for specific tasks and use cases. |
| Management | Moderate: Requires ongoing management of the retrieval process and document database. | High: Requires continuous fine-tuning and monitoring to maintain performance. | Low-Moderate: Easier to manage once set up, with less frequent updates needed compared to fine-tuning. |

Table 1: Comparing RAG, Fine-tuning, and SLMs

Your SLM Self-Help Guide

Below is a self-help guide that any organization, regardless of size, can use to build its own domain-specific small language models.

- Design Phase: Align the project with your organization’s business initiatives. Start by identifying the business problems the SLM will address, such as enhancing customer support, optimizing supply chain management, or providing precise medical diagnostics. Then collect and curate high-quality, domain-specific data for these use cases, ensuring it is diverse and representative enough to support strong model performance.

- Development Phase: Choose a model architecture that fits your needs, such as a transformer or a recurrent neural network, and consider starting from a pre-trained model to save computational resources. Clean and preprocess your data for consistency and quality through tokenization, normalization, and handling of missing values. Then train the model on this curated data, using transfer learning to adapt the pre-trained model to your domain-specific data. Validate the model on a separate, held-out dataset to confirm that it generalizes well, and evaluate its performance using metrics such as accuracy, precision, recall, and F1-score.

- Deployment Phase: Set up a robust infrastructure that supports scalability and low-latency requirements. Integrate the SLM with your existing software and workflows using APIs to ensure seamless communication between the model and other systems. Implement strong security protocols to protect sensitive data and ensure compliance with relevant regulations, using techniques like encryption, access controls, and regular security audits.

- Management / Learning Phase: Managing the SLM requires continuous monitoring, using automated alerts and dashboards for real-time performance tracking, so that issues are detected and addressed quickly. Regularly update the model with new data to maintain its relevance and accuracy; incremental learning keeps the model current without a full retrain. Orchestrate the SLM to keep learning and adapting from new data inputs and feedback. Train users and stakeholders to use the SLM effectively, and establish a robust support system to address issues and questions. Together, these practices keep the SLM a dynamic, evolving tool that consistently meets the organization’s needs.
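The validation and monitoring steps in the guide can be sketched in plain Python. Assuming a classification-style SLM task, such as routing support tickets, the hypothetical `evaluate` function computes accuracy on a held-out set. It also computes precision, recall, and F1-score for one class of interest. The hypothetical `f1_dropped` function flags an alert when F1 falls below a baseline by more than a chosen margin. The 0.05 margin and the label names are illustrative assumptions. Libraries such as scikit-learn offer equivalent, well-tested metric functions.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    precision: float
    recall: float
    f1: float


def evaluate(y_true: list[str], y_pred: list[str], positive: str) -> Metrics:
    """Accuracy, plus precision/recall/F1 for one class of interest, on a held-out set."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return Metrics(accuracy, precision, recall, f1)


def f1_dropped(current: Metrics, baseline: Metrics, max_drop: float = 0.05) -> bool:
    """True if F1 fell more than max_drop below the baseline (i.e., raise an alert)."""
    return baseline.f1 - current.f1 > max_drop


if __name__ == "__main__":
    labels = ["urgent", "routine", "urgent", "routine"]
    baseline = evaluate(labels, ["urgent", "routine", "urgent", "routine"], positive="urgent")
    this_week = evaluate(labels, ["urgent", "urgent", "routine", "routine"], positive="urgent")
    print(this_week)  # Metrics(accuracy=0.5, precision=0.5, recall=0.5, f1=0.5)
    print("alert" if f1_dropped(this_week, baseline) else "ok")  # alert
```

In practice, the baseline would come from the validation run at deployment time. The check would then run on each fresh batch of labeled feedback and feed the dashboards and alerts described above.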

Additional considerations include adhering to ethical AI practices by ensuring fairness, accountability, and transparency in your SLM. Conduct regular audits to identify and mitigate biases, and stay current with regulations to ensure compliance with legal standards such as the GDPR for data protection in Europe or HIPAA for healthcare data in the U.S.
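One simple starting point for a bias audit is to compare a performance metric across groups. The sketch below uses hypothetical function names and invented data. It computes accuracy per group, such as region or customer segment, and then finds the largest gap between any two groups. Accuracy parity is only one of several fairness measures. What counts as an acceptable gap is a policy decision, not something the code can settle.

```python
from collections import defaultdict


def accuracy_by_group(y_true: list[str], y_pred: list[str], groups: list[str]) -> dict[str, float]:
    """Accuracy computed separately for each group (e.g., region or customer segment)."""
    correct: defaultdict[str, int] = defaultdict(int)
    total: defaultdict[str, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group: dict[str, float]) -> float:
    """Largest accuracy difference between any two groups: a simple disparity signal."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    y_true = ["approve", "deny", "approve", "deny", "approve", "deny"]
    y_pred = ["approve", "deny", "approve", "approve", "deny", "deny"]
    groups = ["A", "A", "A", "B", "B", "B"]  # hypothetical group labels
    per_group = accuracy_by_group(y_true, y_pred, groups)
    print(per_group)  # {'A': 1.0, 'B': 0.3333333333333333}
    print(round(max_accuracy_gap(per_group), 3))  # 0.667
```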

Small Language Models Conclusion

The strategic imperative for modern enterprises is clear: developing small language model capabilities is not just an option but a necessity. SLMs offer a unique blend of relevance, efficiency, and security that public LLMs cannot match. By investing in SLMs, organizations can protect their intellectual property, enhance operational efficiency, and drive significant business value.

The journey towards leveraging SLMs begins with understanding their potential and taking actionable steps to integrate them into your organization’s AI strategy. The time to act is now – embrace the power of small language models and unlock the full potential of your data assets.

[1] “The Working Limitations of Large Language Models” (https://sloanreview.mit.edu/article/the-working-limitations-of-large-language-models/)

“Understanding the Limitations of Large Language Models (LLMs)” (https://www.marktechpost.com/2024/07/02/understanding-the-limit)