Editor’s Note: It has come to our attention that several statements in this article were based on sources that have since been recanted, and those statements are factually incorrect. Court documents from the case show that ShotSpotter accurately showed the location of the gunfire in both the real-time alert and the forensic report. The initial alert was classified as a possible firework, but ShotSpotter’s standard human-review procedure determined within one minute that it was gunfire. The evidence that ShotSpotter provided was later withdrawn by the prosecution and had no bearing on the outcome of the case.

- The gunshot detection system has been widely criticized for eroding civil rights.

- ShotSpotter’s many problems include a false positive rate of up to 90%.

Sixty-five-year-old Michael Williams was released last month after spending almost a year in jail on a murder charge. The initial evidence against him wasn’t eyewitness testimony or forensics, but an audio recording from ShotSpotter, the most popular acoustic gunshot detection technology in the United States.

The “gunshot” sound that pointed the finger at Williams was initially classified as a firework by the AI and sent for human review. After the charges were dropped due to insufficient evidence, it was revealed that one of ShotSpotter’s human “reviewers” had manually classified the sound as a gunshot instead of a firework [1]. The case calls into question how much power we should give to AI “witnesses”, especially those whose output can be tampered with.

What is ShotSpotter?

ShotSpotter is a patented acoustic gunshot detection system, made up of microphones, algorithms, and human reviewers, that alerts police to potential gunfire [2]. Once an “explosive type sound” [3] is detected, the sensors switch on and create a three-second audio recording. If three sensors capture the same sound, the recording is sent for further verification at ShotSpotter’s Incident Review Center. After noise filters remove sounds from construction, fireworks, and other gunshot-like sources, the remaining potential gunshots are sent to human reviewers, who decide whether the police should be alerted.
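The steps above can be sketched as a simple routing function. This is a minimal sketch under stated assumptions, not ShotSpotter’s code: the class `Incident`, the function `route_incident`, the label set `NON_GUNFIRE_LABELS`, and the order of the checks are all hypothetical, reconstructed only from the description in this section.

```python
from dataclasses import dataclass
from typing import Callable, List

# Escalation threshold from the description above: the same sound must be
# captured by at least three sensors.
MIN_SENSORS = 3

# Hypothetical labels that this sketch's noise filter treats as non-gunfire.
NON_GUNFIRE_LABELS = {"construction", "firework", "vehicle"}


@dataclass
class Incident:
    sensor_ids: List[str]  # sensors that recorded the three-second clip
    filter_label: str      # hypothetical output of the automated noise filter


def route_incident(incident: Incident, reviewer: Callable[[Incident], bool]) -> str:
    """Return what this sketch of the pipeline does with one incident."""
    # Step 1: fewer than three distinct sensors, so the clip is not escalated.
    if len(set(incident.sensor_ids)) < MIN_SENSORS:
        return "discarded: too few sensors"
    # Step 2: the noise filter drops sounds attributed to non-gunfire sources.
    if incident.filter_label in NON_GUNFIRE_LABELS:
        return "discarded: filtered as noise"
    # Step 3: a human reviewer makes the final call on alerting police.
    return "alert police" if reviewer(incident) else "discarded: reviewer"


# Usage: three distinct sensors, a clip the filter did not reject, and a
# stand-in reviewer (a lambda) who judges the sound to be gunfire.
print(route_incident(Incident(["s1", "s2", "s3"], "gunshot"), lambda inc: True))
# prints "alert police"
```

Because the filter runs before review in this sketch, a sound labeled as a firework never reaches a reviewer. The Williams case, in which a sound first classified as a firework was sent on for human review, suggests the real system forwards at least some filtered sounds to reviewers, so the ordering here is an assumption.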

ShotSpotter’s claim that the system has a 97% accuracy rate is not supported by any independent evidence. But that isn’t stopping cities from paying a subscription of between $65,000 and $90,000 per square mile per year to install the technology [4]; Chicago’s three-year contract with ShotSpotter cost $33 million [5].
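To put the subscription pricing in concrete terms: the cost scales linearly with the area covered and the length of the term. The sketch below simply multiplies a coverage area by the $65,000 and $90,000 per-square-mile rates cited above; the function name `subscription_cost_range` and the 10-square-mile example city are hypothetical, and real contracts may include other fees.

```python
# Subscription rates cited above, in US dollars per square mile per year [4].
LOW_RATE_USD = 65_000
HIGH_RATE_USD = 90_000


def subscription_cost_range(square_miles: float, years: int = 1) -> tuple:
    """Return the (low, high) subscription cost for a coverage area and term."""
    if square_miles < 0 or years < 0:
        raise ValueError("area and term must be non-negative")
    return (LOW_RATE_USD * square_miles * years,
            HIGH_RATE_USD * square_miles * years)


# Usage: a hypothetical city covering 10 square miles on a three-year term.
low, high = subscription_cost_range(10, years=3)
print(f"${low:,} to ${high:,}")  # prints "$1,950,000 to $2,700,000"
```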

How Does The Algorithm Work?

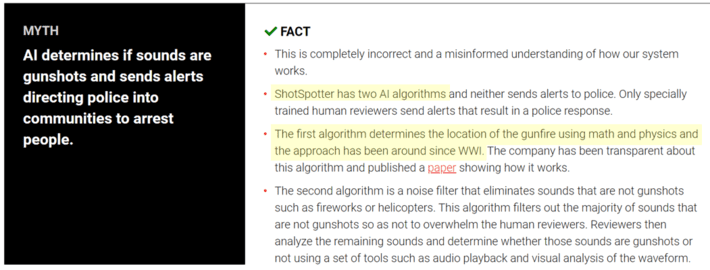

What’s under the hood? No one outside of ShotSpotter knows; the “deep learning” classifier at the heart of the gunshot detection system has been neither independently assessed nor peer-reviewed. The company states that it uses “two AI algorithms,” one to determine the location of the gunfire and one to filter noise. The following “fact” from the ShotSpotter website provides a few hints [6]:

However, the algorithm that determines the location of the gunfire [7] is a simple triangulation algorithm that is definitely not AI, despite the company’s claims, highlighted above in yellow. The details of the second algorithm, the noise filter, are kept tightly under wraps. Cities using the system are expressly forbidden from sharing its data with any outside party, even research institutions [8], which makes it impossible to validate the system’s effectiveness or true positive rate. Although ShotSpotter has never been independently evaluated, its output has been used as evidence in many court cases [1].
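For readers unfamiliar with acoustic location, the sketch below shows one textbook approach: estimating the source position from the differences in arrival time at several sensors (time-difference-of-arrival multilateration), solved here by a brute-force least-squares grid search. It illustrates the general technique, not ShotSpotter’s implementation; the function `locate_source`, the constant speed of sound, and the search-grid parameters are assumptions.

```python
import math
from itertools import product

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C, assumed constant here


def locate_source(sensors, arrival_times, extent=1000.0, step=5.0):
    """Grid-search least-squares estimate of a sound source position.

    sensors: list of (x, y) sensor positions in metres.
    arrival_times: arrival time in seconds at each sensor, same order.
    Searches the square [0, extent] x [0, extent] in increments of `step`.
    """
    best, best_err = None, math.inf
    n = int(extent / step) + 1
    for i, j in product(range(n), repeat=2):
        x, y = i * step, j * step
        # Travel time from this candidate point to each sensor.
        travel = [math.hypot(x - sx, y - sy) / SPEED_OF_SOUND for sx, sy in sensors]
        # Best-fitting (unknown) emission time: mean of the implied times.
        t0 = sum(t - d for t, d in zip(arrival_times, travel)) / len(sensors)
        # Sum of squared timing residuals for this candidate.
        err = sum((t - t0 - d) ** 2 for t, d in zip(arrival_times, travel))
        if err < best_err:
            best, best_err = (x, y), err
    return best


# Usage: four hypothetical sensors at the corners of a 1 km square and a
# simulated shot at (300, 400) metres fired at t = 2.0 s.
sensors = [(0.0, 0.0), (1000.0, 0.0), (0.0, 1000.0), (1000.0, 1000.0)]
times = [2.0 + math.hypot(300 - sx, 400 - sy) / SPEED_OF_SOUND for sx, sy in sensors]
print(locate_source(sensors, times))  # prints (300.0, 400.0)
```

The usage example uses four sensors because, with only three, the two independent time differences can in some geometries be satisfied by two different points. No learning is involved: the location follows from geometry and the speed of sound alone.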

False Positive Rate May Be as High as 90%

Although we know nothing about the “AI,” we do know that the system’s false positive rate is somewhere between 33% and 90%, depending on who commissioned the report: the ACLU puts the rate at the higher end [9], while a report commissioned by ShotSpotter puts it at the lower end.

Instead of alerting police to actual gunfire, the system was alerting them to “dumpsters, trucks, motorcycles, helicopters, fireworks, construction, vehicles traveling over expansion plates on bridges or into potholes, trash pickup, church bells, and other loud, concussive sounds common to urban life” [1].

So even if the classifier is a novel AI, it isn’t a particularly good one. A whopping 86% of “gunshot” reports to the police lead to no report of any crime at all [3]. Chicago’s Office of Inspector General (OIG) concluded from its analysis that Chicago Police Department (CPD) responses to ShotSpotter alerts rarely produce documented evidence of a gun-related crime, an investigatory stop, or the recovery of a firearm [10].
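It helps to be precise about what these percentages measure. The share of alerts that turn out not to involve gunfire is, in standard terminology, the false discovery rate (one minus precision); the textbook false positive rate would instead divide by every non-gunfire sound the sensors heard, which nobody counts. The minimal sketch below computes both alert-level ratios from hypothetical counts; `alert_outcome_rates` is an illustrative name, and it treats every alert without documented evidence as unfounded, the same proxy behind figures like the 86% above.

```python
def alert_outcome_rates(total_alerts: int, alerts_with_evidence: int) -> dict:
    """Split dispatched alerts by whether police documented a gun-related crime.

    Alerts without documented evidence are counted as unfounded. This is a
    proxy: some real gunfire may go unrecorded.
    """
    if total_alerts <= 0 or not 0 <= alerts_with_evidence <= total_alerts:
        raise ValueError("need total_alerts > 0 and 0 <= alerts_with_evidence <= total_alerts")
    unfounded = total_alerts - alerts_with_evidence
    return {
        "precision": alerts_with_evidence / total_alerts,
        "false_discovery_rate": unfounded / total_alerts,
    }


# Usage: hypothetical counts in which 14 of 100 alerts led to a documented crime.
rates = alert_outcome_rates(100, 14)
print(f"{rates['false_discovery_rate']:.0%} of alerts unfounded")  # prints "86% of alerts unfounded"
```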

Changing the System

Considering that the system has multiple issues, it may be time for cities to redirect their million-dollar budgets toward more effective ways of preventing crime. While ShotSpotter does have the potential to identify gun violence hotspots within cities [1], the technology shouldn’t be used in its current guise: an unreliable “anonymous tipster” that sends even more unwarranted police responses to minority neighborhoods that are already over-policed.

References

Police Image: Adobe Creative Cloud (Licensed)

[1] ShotSpotter – The New Tool to Degrade What is Left of the Fourth Am…

[2] ShotSpotter Precision Policing Platform

[3] Leaders Weigh Pros and Cons of ShotSpotter

[4] High Tech Ears Listen for Shots

[5] Chicago Police Department’s Use of ShotSpotter Technology

[6] ShotSpotter responds to false claims

[7] Precision and accuracy of acoustic gunshot location in an urban env…

[8] Jason Tashea, “Should the Public Have Access to Data Police Acquire Through Private Companies?”, A.B.A. J. 6 (Dec. 1, 2016).

[9] Four problems with the ShotSpotter gunshot detection system

[10] The Chicago Police Department’s Use of ShotSpotter Technology