The Retrieval Augmented Generation (RAG) paradigm has popularized the practice of supplementing prompts for generative AI (GenAI) models with trusted enterprise data. Many organizations use this approach to ground model outputs in their own data, improve the accuracy of responses, and reduce models’ tendency to produce inaccurate or fabricated outputs (hallucinations).

But other forms of prompt augmentation can achieve these objectives, and more, by integrating complex workflows that inform machine-based decisions and initiate actions within next-generation AI applications.

Talentica Software CTO and Co-founder Manjusha Madabushi described “an agent-based architecture” as one such method, notable for the complexity of the tasks it enables organizations to complete. In addition to answering questions, summarizing content, and supporting semantic search, it allows organizations to devise entire business strategies, meet mission-critical objectives, and carry out actions typically reserved for humans. These gains are amplified when the architecture is implemented with frameworks like LangGraph, part of the LangChain ecosystem, for building and managing AI agents.

Madabushi said this approach is part of a broader shift in AI implementations, emphasizing the need for a new mindset in application development that considers both human and machine users. “As more machines interact with these systems, the information and interfaces must be redefined with AI agents in mind,” she explained.

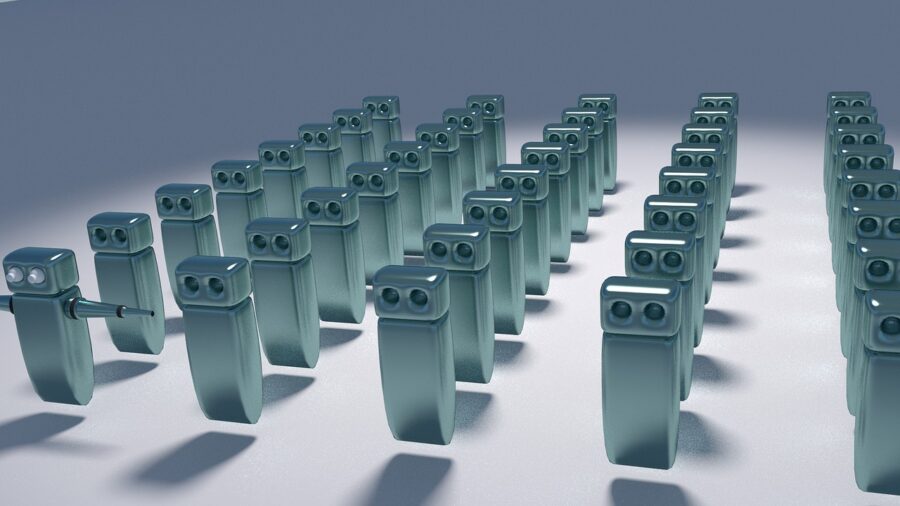

AI agents work together

These AI agents are integral to the success of this distributed architecture. In certain implementations, they can query any number of enterprise data sources, including popular external platforms such as Databricks, Salesforce, and cloud data warehouses, to gather information relevant to a task. According to Opaque Systems CEO Aaron Fulkerson, those agents “call to the different sources” to retrieve data relevant to a user’s prompt before sending both the prompt and the retrieved data to a Large Language Model (LLM). Organizations can assign a different agent to each source.
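The retrieval pattern Fulkerson describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor’s implementation: the `SourceAgent` class, the in-memory document lists, and the keyword matching are all hypothetical stand-ins for real connectors to systems such as Databricks or Salesforce, and the code only builds the augmented prompt that would then be sent to an LLM.

```python
from dataclasses import dataclass

# Hypothetical stop words ignored during naive keyword matching.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "for", "in", "when", "what", "how"}


def terms(text: str) -> set[str]:
    """Lowercase, strip trailing punctuation, and drop stop words."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}


@dataclass
class SourceAgent:
    """Hypothetical agent responsible for one data source."""
    name: str
    documents: list[str]

    def retrieve(self, query: str) -> list[str]:
        # Keyword overlap stands in for a real connector or vector search.
        q = terms(query)
        return [doc for doc in self.documents if q & terms(doc)]


def build_augmented_prompt(query: str, agents: list[SourceAgent]) -> str:
    """Collect context from every source agent and prepend it to the user's prompt."""
    context = [f"[{a.name}] {doc}" for a in agents for doc in a.retrieve(query)]
    body = "\n".join(context) or "(no relevant context found)"
    # The returned string is what would be sent to the LLM.
    return f"Context:\n{body}\n\nQuestion: {query}"


if __name__ == "__main__":
    agents = [
        SourceAgent("crm", ["Acme renewal is due in March"]),
        SourceAgent("warehouse", ["Q1 revenue for Acme was 1.2M", "Shipping costs rose"]),
    ]
    print(build_augmented_prompt("When is the Acme renewal?", agents))
```

Because each source has its own agent, connecting another system means adding another `SourceAgent` rather than changing the prompt-building logic.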

Still, it’s the coordination of these agents, particularly in use cases where a separate agent is assigned to each component of a single task, that makes this approach so useful. Organizations can “achieve end-to-end objectives by creating a chain or a workflow with AI agents,” Madabushi said. “You can start by saying, ‘I want to reduce the time to resolve support tickets,’ then all the agents perform tasks to achieve this single objective.”

Machines become the users of applications

In certain implementations of this architecture, AI agents retrieve data for prompt augmentation while performing roles typically filled by human employees. For example, a customer success organization usually includes a support engineer, a lead support engineer, a customer success manager, and other such roles. When a new support ticket is raised, it’s received by the front-line support engineer, who then looks up past tickets, figures out the playbook to run, and executes it. “Using a multi-agent architecture approach, a user would prompt the support engineer agent to look up the category of the ticket, its priority, and all past tickets that are related, and then recommend the playbook to run and collect the data needed to run it,” Madabushi explained.

Frameworks such as LangChain, and LangGraph in particular, are valuable in such implementations because they let agents communicate with one another and pass along the results of their work toward an overarching goal. “Now, agents can talk to each other,” Madabushi said. “So, if the support engineer agent cannot find a playbook suitable for the ticket, it hands the task over to the lead support engineer agent, who can request more information from the customer.”
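The support-ticket scenario and the handoff Madabushi describes can be illustrated with a small plain-Python sketch. Everything here is hypothetical: the keyword tables stand in for the LLM reasoning and ticketing-system lookups a real agent would perform, and the functions are not LangChain or LangGraph APIs. The point is the control flow: the support engineer agent categorizes the ticket, finds related past tickets, and recommends a playbook, and if no playbook fits, it hands the ticket to the lead support engineer agent.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    id: int
    text: str
    priority: str = "normal"


# Hypothetical stand-ins for a ticketing system and a playbook library.
CATEGORY_KEYWORDS = {"login": "authentication", "password": "authentication", "invoice": "billing"}
PLAYBOOKS = {"authentication": "reset-credentials"}  # no billing playbook yet
PAST_TICKETS = [Ticket(1, "Cannot login after update"), Ticket(2, "Invoice shows wrong amount")]


def categorize(text: str) -> str:
    """Map a ticket to a category using the first matching keyword."""
    for word in text.lower().split():
        category = CATEGORY_KEYWORDS.get(word.strip(".,!?"))
        if category:
            return category
    return "unknown"


def lead_support_agent(ticket: Ticket, category: str) -> dict:
    """Escalation target: requests more information from the customer."""
    return {"handled_by": "lead_support_engineer", "ticket": ticket.id,
            "category": category, "action": "request_more_info"}


def support_engineer_agent(ticket: Ticket) -> dict:
    """Front-line agent: categorize, find related tickets, recommend a playbook."""
    category = categorize(ticket.text)
    related = [t.id for t in PAST_TICKETS if t.id != ticket.id and categorize(t.text) == category]
    playbook = PLAYBOOKS.get(category)
    if playbook is None:
        # No suitable playbook: hand the task to the lead support engineer agent.
        return lead_support_agent(ticket, category)
    return {"handled_by": "support_engineer", "ticket": ticket.id, "category": category,
            "priority": ticket.priority, "related": related, "action": f"run:{playbook}"}
```

In a framework such as LangGraph, each of these functions would typically become an LLM-backed node, and the `if playbook is None` branch would become a conditional edge between nodes.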

Interactivity in LangGraph

By combining agents in different roles under human oversight, this multi-agent architecture can accomplish highly sophisticated tasks while reducing overhead. LangGraph represents the workflow as a graph that “connects all these agents,” Madabushi said, allowing them to interact with one another. Even so, humans can still oversee each part of these implementations, approve or deny steps, and re-engineer prompts until they’re satisfied with the bot’s response.

By inserting what Madabushi called “interrupts” at specific points among the multiple steps required to complete an overarching task in LangGraph, humans can review the agents’ work before the workflow continues. This approach creates a human-in-the-loop framework to validate and verify the actions performed by the AI agents.
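To make the idea of a graph with human-in-the-loop interrupts concrete, here is a toy graph runner in plain Python. It is a hypothetical sketch that borrows LangGraph’s vocabulary (nodes, edges, interrupts) but not its API; LangGraph provides its own `StateGraph` class, checkpointing, and interrupt mechanism. Here, `AgentGraph`, the `interrupt=True` flag, and the `approve` callback are illustrative names, and the callback plays the role of the human reviewer who approves or rejects a step before it runs.

```python
from typing import Callable

State = dict
END = "__end__"


class AgentGraph:
    """Toy graph runner: nodes are agent functions, edges choose the next node."""

    def __init__(self) -> None:
        self.nodes: dict[str, Callable[[State], State]] = {}
        self.edges: dict[str, Callable[[State], str]] = {}
        self.interrupt_before: set[str] = set()

    def add_node(self, name: str, fn: Callable[[State], State], interrupt: bool = False) -> None:
        self.nodes[name] = fn
        if interrupt:
            # Pause for human review before this node executes.
            self.interrupt_before.add(name)

    def add_edge(self, src: str, router: Callable[[State], str]) -> None:
        # The router inspects the state, so an edge can be conditional.
        self.edges[src] = router

    def run(self, start: str, state: State, approve: Callable[[str, State], bool]) -> State:
        node = start
        while node != END:
            if node in self.interrupt_before and not approve(node, state):
                state["halted_at"] = node  # the human reviewer rejected this step
                return state
            state = self.nodes[node](state)
            state.setdefault("trace", []).append(node)
            # Nodes without an outgoing edge end the run.
            node = self.edges.get(node, lambda s: END)(state)
        return state


# Hypothetical agents for the support-ticket example.
def triage(state: State) -> State:
    playbook = "reset-credentials" if "password" in state["ticket"] else None
    return {**state, "playbook": playbook}


def run_playbook(state: State) -> State:
    return {**state, "resolved": True}


def escalate(state: State) -> State:
    return {**state, "escalated": True}


graph = AgentGraph()
graph.add_node("triage", triage)
graph.add_node("run_playbook", run_playbook, interrupt=True)  # a human approves actions
graph.add_node("escalate", escalate)
graph.add_edge("triage", lambda s: "run_playbook" if s["playbook"] else "escalate")
```

Rejecting a step here simply stops the run and records where it halted. A production system could instead let the reviewer edit the state or revise a prompt and then resume, which is roughly what LangGraph’s checkpointing is designed to support.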

The broader significance

Prompt augmentation is quickly becoming indispensable for obtaining trustworthy, competent results from LLMs and generative AI models. However, augmenting prompts within a multi-agent paradigm can move the needle further by broadening the scope of what individual models can do. When assessing prompt augmentation methods, Fulkerson noted that “RAG is still more common, but we’re increasingly seeing these agent-based ones.”