Imagine you’re completing a mission in a computer game. Maybe you’re going through a military depot to find a secret weapon. You get points for the right actions (killing an enemy) and lose them for the wrong ones (falling into a pit or getting hit). If you’re playing on high difficulty, you might not complete this task in just one attempt. Attempt after attempt, you learn which sequence of actions gets you out of a location safe, armed, and equipped with bonuses like extra health points or small artifacts in your bag. Every time you challenge yourself and compete with other gamers in the virtual world, you act as a reinforcement learning agent.

In this article, we’ll talk about the core principles of reinforcement learning and discuss how industries can benefit from implementing it.

What is reinforcement learning?

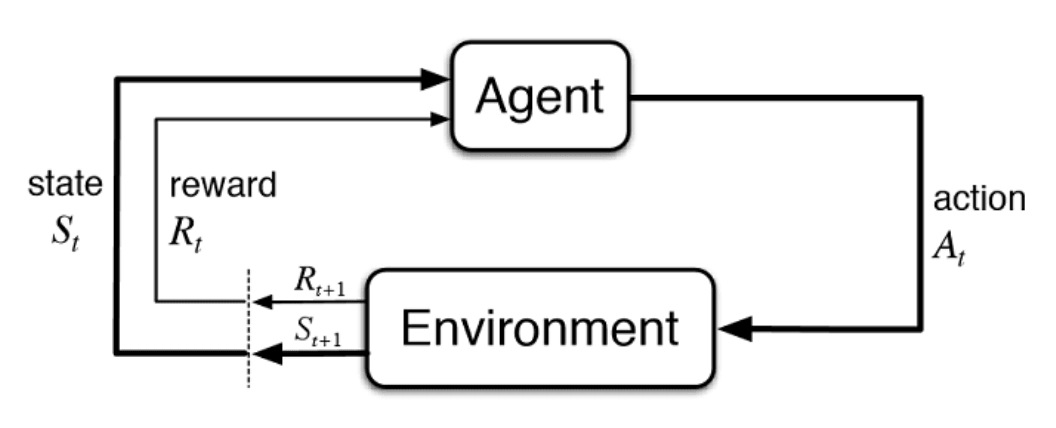

Reinforcement learning (RL) is a machine learning technique that trains an algorithm through trial and error. The algorithm (agent) evaluates the current situation (state), takes an action, and receives feedback (reward) from the environment after each action. Positive feedback is a reward in the everyday sense of the word, and negative feedback is a punishment for making a mistake.

How reinforcement learning works.

An RL algorithm learns how to act best through many attempts and failures. Trial-and-error learning is tied to the so-called long-term reward: the ultimate goal the agent learns to pursue while interacting with an environment over numerous trials. Along the way, the algorithm collects short-term rewards that together add up to the cumulative, long-term one.
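One common way to make the link between short-term rewards and the long-term reward concrete is a discounted sum, where later rewards count a little less than earlier ones. The sketch below is only an illustration: the discount factor `gamma`, the function name `discounted_return`, and the sample rewards are assumptions, not something defined in this article.

```python
def discounted_return(rewards, gamma=0.9):
    """Combine short-term rewards into one long-term value.

    gamma (an assumed discount factor) controls how much later rewards count.
    """
    total = 0.0
    # Walk backwards so each step adds its own reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical episode: two small penalties, then a large reward at the end.
episode_rewards = [-1.0, -1.0, 10.0]
print(discounted_return(episode_rewards, gamma=1.0))  # 8.0 (plain sum)
print(discounted_return(episode_rewards, gamma=0.5))  # 1.0 (future counts less)
```

With `gamma=1.0` the long-term reward is simply the sum of the short-term ones; smaller values make the agent care more about what happens soon.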

So, the key goal of reinforcement learning is to find the best sequence of decisions that allows the agent to solve a problem while maximizing the long-term reward. That sequence of actions is learned by interacting with the environment and observing the reward received in each state.
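To illustrate how a sequence of decisions can be learned from rewards alone, here is a minimal sketch of tabular Q-learning, one classic RL algorithm, on a made-up five-cell corridor. The environment, the reward values, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all assumptions chosen for the example.

```python
import random

N_STATES = 5         # positions 0..4 in a corridor; position 4 is the goal
ACTIONS = (-1, +1)   # step left or step right


def step(state, action):
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 10.0, True   # reaching the goal pays off
    return next_state, -1.0, False      # every other move costs a little


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[state][action_index] estimates the long-term reward of that choice.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore occasionally; otherwise exploit the best-known action.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: current reward plus discounted best future value.
            future = 0.0 if done else max(q[next_state])
            q[state][a] += alpha * (reward + gamma * future - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy: the highest-valued action in each non-goal position.
policy = [
    ACTIONS[max(range(len(ACTIONS)), key=lambda i: q_table[s][i])]
    for s in range(N_STATES - 1)
]
print(policy)
```

After training, the highest-valued action in every non-goal cell should be to step right, toward the goal, even though each individual step costs a point: the agent trades short-term penalties for the larger long-term reward.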

Difference between reinforcement learning, supervised learning, and unsupervised learning

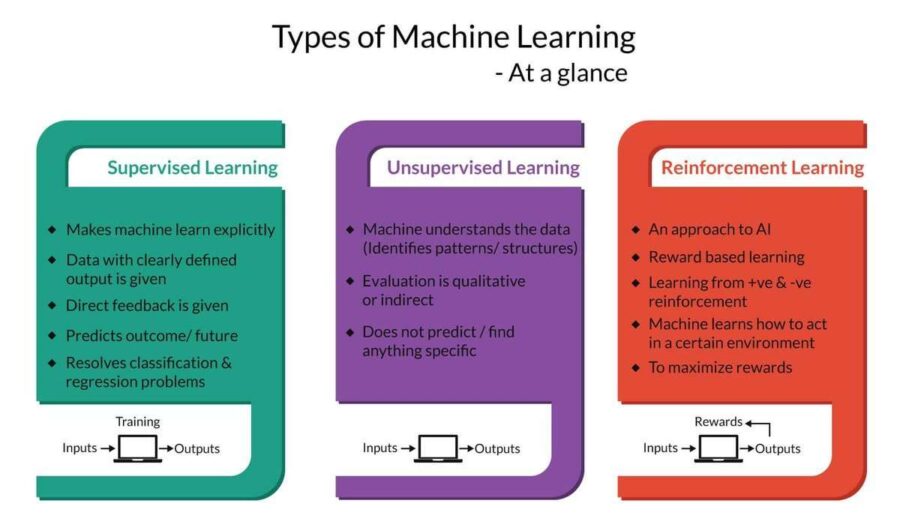

Reinforcement learning is distinguished from other training styles, including supervised and unsupervised learning, by its goal and, consequently, the learning approach.

Three ML training styles compared

Reinforcement learning vs supervised learning. In supervised learning, an algorithm is shown what task to perform and which answers are correct. Data scientists train it on historical data with target variables (the desired answers), also known as labeled data, so the algorithm receives direct feedback. After training, it can predict the target variable for new data. Supervised learning is used to solve classification and regression tasks.

Reinforcement learning doesn’t rely on labeled datasets: The agent isn’t told which actions to take or the optimal way of performing a task. Instead of labels attached to each decision in a dataset, RL uses rewards and penalties to signal whether an action was good or bad. This feedback can also be delayed: The agent may only learn whether a decision was good several steps later, or once it completes the task. That’s how time-delayed feedback and the trial-and-error principle differentiate reinforcement learning from supervised learning.

Since one of the goals of RL is to find a sequence of actions that maximizes a reward, sequential decision making is another significant difference between these training styles. Each decision the agent makes can affect its future actions.

Reinforcement learning vs unsupervised learning. In unsupervised learning, the algorithm analyzes unlabeled data to find hidden relationships between data points and groups them by similarities or differences. RL, in contrast, aims to find the course of action that yields the largest long-term reward, so the two differ in their key goal.

Reinforcement and deep learning. Many reinforcement learning implementations employ deep learning models, using deep neural networks as the core method for agent training. Compared with other machine learning methods, deep learning is particularly well suited to recognizing complex patterns in images, sounds, and texts. Additionally, neural networks allow data scientists to fit all processes into a single model without breaking down the agent’s architecture into multiple modules.

Reinforcement learning use cases

Reinforcement learning is applicable in numerous industries, including internet advertising and eCommerce, finance, robotics, and manufacturing. Let’s take a closer look at these use cases.

Personalization

News recommendation. Machine learning has made it possible for businesses to personalize customer interactions at scale through the analysis of data on their preferences, background, and online behavior patterns.

However, recommending content such as online news is still a complex task. News features are dynamic by nature and quickly become irrelevant. User preferences in topics change as well.

The authors of the research paper DRN: A Deep Reinforcement Learning Framework for News Recommendation discuss three main challenges related to news recommendation methods. First, these methods only try to model the current (short-term) reward, e.g., the click-through rate, which is the share of page, ad, or email viewers who click on a link. Second, current recommendation methods usually consider only click/no-click labels or ratings as user feedback. And third, these methods typically keep suggesting similar news to readers, so users can get bored.

The researchers proposed a Deep Q-learning based recommendation framework that considers current and future reward simultaneously and uses user return as feedback to supplement click/no-click data.
