All machine intelligence is powered by data. This isn’t ground-breaking, or even news – we’ve known about data’s value for decades now. However, not all data are created equal, and we’ll be looking at executing machine learning products from a standpoint that prioritizes the quality of the data streaming into them.

Machine Learning (ML) is a specific subset of Artificial Intelligence that plays a key role in a wide range of critical applications such as image recognition, natural language processing, and expert systems to name a few.

ML solves problems that can’t be solved by numerical means alone, mainly through supervised or unsupervised learning:

- In Supervised Learning, an algorithm is trained using a labeled set of input data and known responses to the data (output). It learns by comparing its actual output with correct output to find errors.

- In Unsupervised Learning, the algorithm uses a set of unlabeled input data to explore the data and find some structure within.

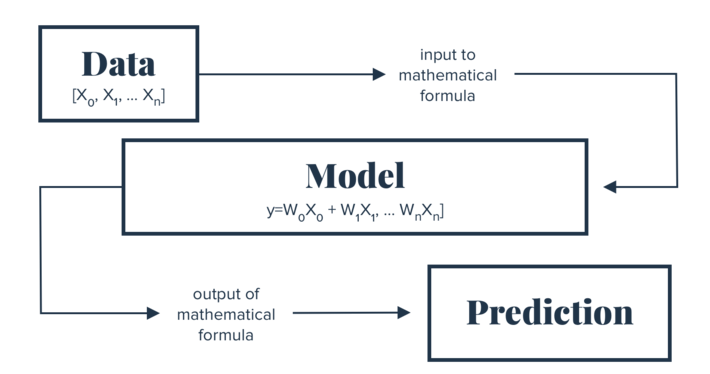

1. Overview of machine learning workflow:

Example machine learning workflows, from raw data to predictions

The first step involves data collection, but this is just one part of the equation in building predictive solutions – the next, and often overlooked, step is the data prep.

2. Stages of Machine Learning development include:

- Data collection

- Data preparation

- Developing the hypothesis

- Model construction

- Model deployment

- Model evaluation

3. The current state of Machine Learning

IDC published their AI in 2020 and beyond predictions. in which they posit that the effective use of intelligent automation will require significant effort in data cleansing, integration, and management that IT will need to support.

Data cleansing is a manual and laborious task that continues to handcuff data professionals. Without effective methods to cleanse data efficiently using automation, organizations will not be able to achieve their digital transformation goals. The IDC FutureScape report states that resolving past data issues in legacy systems can be a substantial barrier to entry, particularly for larger enterprises, showcasing the difficulties endemic in the adoption of digital strategies.

Last year alone, organizations were expected to invest $1.3 trillion (USD) in digital transformation projects, according to Morningstar. McKinsey later announced that 70% of these projects fail. For those of you keeping score at home, those failures are costing businesses over $900B. No matter the size of lost investment, organizations cannot afford to continuously fail when it comes to digital transformation. Clean, standardized data is critical in unlocking the value of digital transformation efforts, but getting the data you want in the state you need is tedious, time-consuming, and expensive.

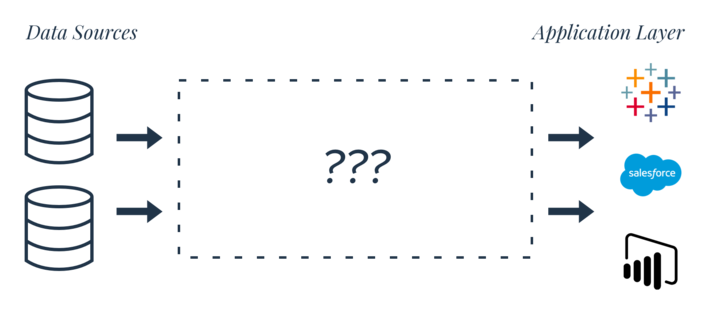

A common misconception, highlighted through the above diagram, is that once all of the data is in a common place, we can plug it into whatever we want. But the process isn’t as simple as that. The data coming from different sources lack a common standard, and the applications sitting on top of the data can’t be fully implemented because there’s a massive barrier sitting between the data (lake or no lake) and the enterprise apps. Refined data needs to come in a reliable, repeatable, predictable format.

At the ground level, the operational hurdles that need to be overcome in order to unlock this value have slowed innovation to a crawl. In many organizations, data professionals are spending 80% of their time cleansing and prepping data instead of spending that time on analysis and modelling. Data professionals should not be burdened with the custodial tasks of data prep. Without a layer of automation in place, some tasks are hard, others are harder, and the rest are practically impossible.

“Data professionals should not be burdened with the custodial tasks of data prep.”

Predictive models are only as good as the data on which they’re built, so they need to be fuelled by refined, standardized data in order to produce results that are both practical and representative of reality.

4. The typical data refining process is complicated

When we see the process of refining data found in the wild, it’s not as simple as plugging data into a BI layer or visualization tool. Often, there are many steps, something like this image:

Once the data is finally clean and in useable format, the next step is to pick an algorithm. There are trade-offs between several characteristics of algorithms, such as speed of training, predictive accuracy, and memory usage. With AutoML on the rise, algorithm selection is trending towards automation as well. If you are not familiar with Automated Machine Learning Systems, generally speaking, with a refined dataset on hand, the AutoML system can design and optimize a machine learning pipeline for you.

Now that we’ve covered the ML workflow, let’s try to apply some of the concepts discussed by building a simple Product Recommender System powered by refined data and AutoML.

4.1. Strategy must catch up with talent

Many organizations are worried about a talent shortage when it comes to data, but the root of the problem is most often a strategy shortage. Data variety is an obstacle for everyone, no matter how large the team. There are a lot of platforms where you can find sources for building machine learning systems, and plenty more places to find some of the most frequently used datasets. A data management platform allows for faster model-ready deployment (among other advantages) by automating most of the custodial work, including the dataset refinement.

4.2. Data prep & processing, simplified

Feeding data through Namara handles the custodial tasks associated with turning raw data into usable assets. The workflow is simplified again, like in the first image:

In this example, we’ll examine an Amazon Customer Reviews Dataset sample that was refined using Namara. For collaborative filtering methods, the refined historical data is sufficient enough to make a prediction. The steps highlighted in the earlier image, ETL, Enrichment, Search, Role Management, and API, are handled by the platform.

Now that we have the ready-to-use data, let’s pick an algorithm. There are any number of ways we could go about this; however, let’s keep it simple for now, and use Turi Create to simplify the development of machine learning models in Python.

4.3. AutoML – The Code

Once this has been built, it’s ready for model evaluation and deployment. We could also plot charts and visualizations to describe the data – as you can see, we’re looking at just the tip of the iceberg when it comes to the analysis.

To sum it up

As mentioned earlier, 70% of digital transformation efforts fail, demonstrating the serious misalignment issue when it comes to predictive solutions and data strategies. Predictive models are only as good as the data fuelling them, so they need refined, standardized data in order to achieve meaningful results. Organizations must adopt and leverage data management strategies as well as AutoML systems to unlock the value of data. This will lead to being able to deliver insights more efficiently, accurately, and at scale.