This article was written by Andrej Karpathy. Andrej, a PhD student at Stanford, is a Research Scientist at OpenAI working on Deep Learning, Generative Models and Reinforcement Learning.

There’s something magical about Recurrent Neural Networks (RNNs). I still remember when I trained my first recurrent network for Image Captioning. Within a few dozen minutes of training, my first baby model (with rather arbitrarily chosen hyperparameters) started to generate very nice-looking descriptions of images that were on the edge of making sense. Sometimes the ratio of how simple your model is to the quality of the results you get out of it blows past your expectations, and this was one of those times. What made this result so shocking at the time was the common wisdom that RNNs were supposed to be difficult to train (with more experience I’ve in fact reached the opposite conclusion). Fast forward about a year: I’m training RNNs all the time and I’ve witnessed their power and robustness many times, and yet their magical outputs still find ways of amusing me. This post is about sharing some of that magic with you.

We’ll train RNNs to generate text character by character and ponder the question “how is that even possible?”

By the way, together with this post I am also releasing code on GitHub that allows you to train character-level language models based on multi-layer LSTMs. You give it a large chunk of text and it will learn to generate similar text one character at a time. You can also use it to reproduce my experiments below. But we’re getting ahead of ourselves. What are RNNs anyway?

Recurrent Neural Networks

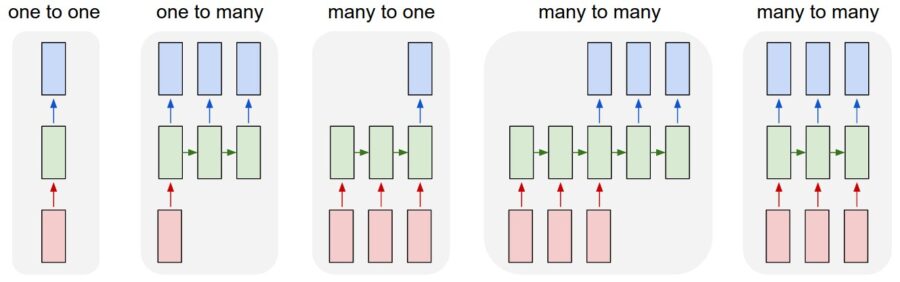

Sequences. Depending on your background you might be wondering: what makes Recurrent Networks so special? A glaring limitation of Vanilla Neural Networks (and also Convolutional Networks) is that their API is too constrained: they accept a fixed-sized vector as input (e.g. an image) and produce a fixed-sized vector as output (e.g. probabilities of different classes). Not only that: these models perform this mapping using a fixed number of computational steps (e.g. the number of layers in the model). The core reason that recurrent nets are more exciting is that they allow us to operate over sequences of vectors: sequences in the input, the output, or in the most general case both. A few examples may make this more concrete:

As you might expect, the sequence regime of operation is much more powerful than fixed networks, which are doomed from the get-go by a fixed number of computational steps, and hence also much more appealing for those of us who aspire to build more intelligent systems. Moreover, as we’ll see in a bit, RNNs combine the input vector with their state vector using a fixed (but learned) function to produce a new state vector. In programming terms, this can be interpreted as running a fixed program with certain inputs and some internal variables. Viewed this way, RNNs essentially describe programs. In fact, it is known that RNNs are Turing-Complete in the sense that they can simulate arbitrary programs (with proper weights). But similar to universal approximation theorems for neural nets you shouldn’t read too much into this. In fact, forget I said anything.
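To make the "fixed program with inputs and internal variables" view a little more tangible, here is a minimal sketch in plain Python. It assumes the common vanilla form of the update, new_state = tanh(W_hh · state + W_xh · x); the excerpt above only says the function is fixed and learned, so the tanh form, the class name `TinyRNN`, the helper `one_hot`, and the random (untrained) weights are all illustrative assumptions rather than the code released with the post.

```python
import math
import random


class TinyRNN:
    """Illustrative vanilla RNN cell (assumed form): h = tanh(W_hh h + W_xh x)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        # Hypothetical small random weights; a real model would learn these.
        self.W_xh = [[rng.uniform(-0.1, 0.1) for _ in range(input_size)]
                     for _ in range(hidden_size)]
        self.W_hh = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size)]
                     for _ in range(hidden_size)]
        self.h = [0.0] * hidden_size  # the internal state vector

    def step(self, x):
        """Combine input x with the current state to produce the new state."""
        new_h = []
        for row_x, row_h in zip(self.W_xh, self.W_hh):
            total = sum(w * xi for w, xi in zip(row_x, x))
            total += sum(w * hi for w, hi in zip(row_h, self.h))
            new_h.append(math.tanh(total))
        self.h = new_h
        return self.h


def one_hot(index, size):
    """Encode a character index as a vector with a single 1.0 entry."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


# Feed the characters of "hello" through the same step function, one at a time.
vocab = sorted(set("hello"))
rnn = TinyRNN(input_size=len(vocab), hidden_size=3)
states = [list(rnn.step(one_hot(vocab.index(ch), len(vocab)))) for ch in "hello"]
```

The same `step` function is applied at every position, yet the two "l" characters produce different states because the state carried over from earlier inputs differs. That carried-over state is what lets a fixed function process a sequence.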

What you will find in this article:

Recurrent Neural Networks

– Sequences

– Sequential processing in absence of sequences

– RNN computation

– Going deep

– Getting fancy

Character-Level Language Models

Fun with RNNs

– Paul Graham generator

– Temperature

– Shakespeare

– Wikipedia

– Algebraic Geometry (Latex)

– Linux Source Code

– Generating Baby Names

Understanding what’s going on

– The evolution of samples while training

– Visualizing the predictions and the “neuron” firings in the RNN

Source Code

Further Reading

– Computer Vision.

– Inductive Reasoning, Memories and Attention.

– People

– Code

Conclusion

To check out all this information, click here.
