Data modeling has been a fixture of enterprise architecture since the 1970s, with ANSI-defined conceptual, logical, and physical data schemas. As data models matured, templates for business use became widely available. Retail banks use similar data models, as do firms in other industries. A shared approach to data modeling advanced the discussion and planning of solutions.

Growth in unstructured data has led to tools that search data to identify context or bring structure to data analysis. Elasticsearch, for example, is used to identify contextual data in an unstructured data store. Just as structured data models brought greater standardization, templated solutions that combine structured and unstructured data are becoming more common, enabling more efficient solution delivery.

Growth in streaming data (real-time events) raises the need for a shared ontology for streaming event modeling. Streaming event processing, commonly referred to as Streaming Analytics, focuses on discrete events that are processed and combined in real time to drive real-time customer engagement. A shared model would benefit users and data science professionals by increasing collaboration and providing templates for solution delivery.

Discrete Simple Streaming Event Model:

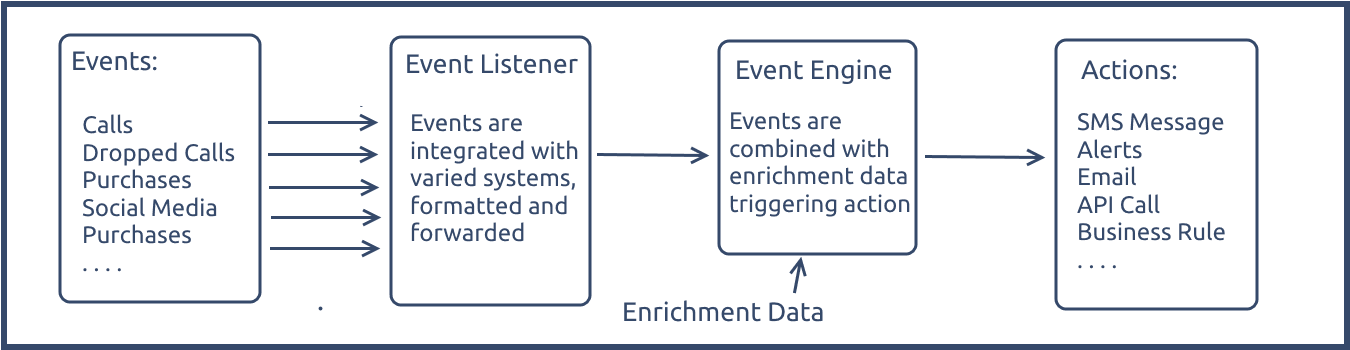

The event model described here reflects solutions implemented by leading Streaming Analytics systems today. The attributes of a Streaming Event Model are:

Discrete: events are separate and distinct, as opposed to a continuous process. The model is not aimed at real-time event management in a nuclear power plant, where processes are continuous. Rather, our focus is tracking meaningful business events during a customer’s digital journey.

Simple: events are defined in terms meaningful to a business or customer. A dropped cellular call, a purchase transaction, and a web browsing session are examples. Simple events are abstracted from technical details, which vary across businesses and infrastructures. Purchases are recorded in different databases, but the “purchase event” is the same whether it originates in Oracle or SQL Server.
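As an illustration of this abstraction, the minimal Python sketch below maps rows from two differently shaped sources into the same business-level purchase event. The `PurchaseEvent` type and all column names are hypothetical; they stand in for whatever schemas an Oracle or SQL Server system actually uses.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class PurchaseEvent:
    """Business-level purchase event, independent of the source database."""
    customer_id: str
    order_id: str
    amount: Decimal


def from_source_a(row: dict) -> PurchaseEvent:
    # Hypothetical source A: upper-case columns, amount as a decimal string.
    return PurchaseEvent(row["CUST_ID"], row["ORDER_ID"], Decimal(row["AMT"]))


def from_source_b(row: dict) -> PurchaseEvent:
    # Hypothetical source B: snake_case columns, amount in integer cents.
    return PurchaseEvent(
        row["customer_id"], row["order_id"], Decimal(row["total_cents"]) / 100
    )


a = from_source_a({"CUST_ID": "c42", "ORDER_ID": "o-1", "AMT": "19.99"})
b = from_source_b({"customer_id": "c42", "order_id": "o-1", "total_cents": 1999})
print(a == b)  # True: both rows describe the same purchase event
```

Downstream logic only ever sees `PurchaseEvent`, so it does not need to know which database produced the row.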

Streaming: events are recognized and processed in real time, enabling real-time customer engagement and real-time actions.

Event: the model focuses on a point-in-time occurrence that marks a meaningful change in the state of an actor. Streaming data systems typically process only selected events, which provides a focused way to deal with rapidly growing big data.

The preceding elements are recognized and recorded as an immutable fact, or what can be called “atomic” data. A user visits a web page on a particular domain, at a point in time, with a specific browser, and remains on the page for seconds or minutes. This core event data is immutable: once recorded, it does not change.
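A minimal sketch of an atomic event, assuming Python's frozen dataclasses as the representation: once the page-view record is created, any attempt to modify it raises an error. The `PageView` type and its field names are hypothetical.

```python
from dataclasses import dataclass, FrozenInstanceError
from datetime import datetime, timezone


@dataclass(frozen=True)
class PageView:
    """Atomic event: an immutable record of what happened, when, and where."""
    url: str
    domain: str
    occurred_at: datetime
    browser: str
    dwell_seconds: float


view = PageView(
    url="/products/t-shirt",
    domain="shop.example.com",
    occurred_at=datetime(2024, 5, 1, 9, 30, tzinfo=timezone.utc),
    browser="Firefox",
    dwell_seconds=42.0,
)

try:
    view.dwell_seconds = 60.0  # attempting to rewrite a recorded fact
except FrozenInstanceError:
    print("atomic events cannot be modified")
```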

Model: atomic events are aggregated over time and enriched with contextual data and business logic to form a modeled event. A web page visit may be viewed as the first page in a session that leads to an online purchase. The identity of the user is inferred from a browser cookie, and the intention of the web session (browsing or purchasing) is inferred as well. Adding context and business logic to events yields a “modeled event.” The model, however, is mutable, and changes in business logic can change how we interpret past events.
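The sketch below illustrates the distinction under simple assumptions: atomic page views are aggregated into a session, the user is resolved through a hypothetical cookie-to-customer table, and session intent is assigned by a rule set. Swapping in a new rule set reinterprets the same past events, which is what makes the modeled layer mutable. `COOKIE_TO_CUSTOMER`, `classify_intent`, and the URL prefixes are illustrative, not part of any particular product.

```python
from dataclasses import dataclass

# Hypothetical enrichment table: browser cookie -> known customer.
COOKIE_TO_CUSTOMER = {"ck-123": "customer-42"}


@dataclass(frozen=True)
class AtomicView:
    cookie: str
    page: str


def classify_intent(pages: list[str], rules: dict[str, str]) -> str:
    """Business logic: label the session by the first page matching a rule prefix."""
    for page in pages:
        for prefix, intent in rules.items():
            if page.startswith(prefix):
                return intent
    return "browsing"


def model_session(views: list[AtomicView], rules: dict[str, str]) -> dict:
    """Aggregate atomic views into a modeled session event."""
    cookie = views[0].cookie
    return {
        "customer": COOKIE_TO_CUSTOMER.get(cookie, "anonymous"),
        "first_page": views[0].page,
        "intent": classify_intent([v.page for v in views], rules),
    }


session = [AtomicView("ck-123", "/home"), AtomicView("ck-123", "/cart")]

# The atomic events stay the same; only the business rules change.
rules_v1 = {"/checkout": "purchasing"}
rules_v2 = {"/checkout": "purchasing", "/cart": "purchasing"}
print(model_session(session, rules_v1)["intent"])  # browsing
print(model_session(session, rules_v2)["intent"])  # purchasing
```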

A dropped wireless call may be aggregated with other dropped calls to indicate a problem for network operations, generating an alert for the operations team. Alternatively, a series of dropped calls for a VIP customer could trigger a real-time SMS message from a team focused on customer engagement. The same events take on different meanings, depending on the event model employed. Events are cataloged and made available to different users within an organization.
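To make the point concrete, here is a hedged sketch in which the same list of dropped-call events feeds two different models: one counts drops per cell for network operations, the other counts drops per customer and checks a VIP list for customer engagement. The identifiers, the threshold of three, and the `VIP_CUSTOMERS` set (standing in for CRM data) are all hypothetical.

```python
from collections import Counter

# Atomic dropped-call events: (customer_id, cell_id). Identifiers are hypothetical.
dropped_calls = [
    ("cust-1", "cell-A"),
    ("cust-2", "cell-A"),
    ("cust-3", "cell-A"),
    ("cust-1", "cell-B"),
    ("cust-1", "cell-B"),
]

VIP_CUSTOMERS = {"cust-1"}  # stands in for a CRM lookup


def network_ops_model(events, threshold=3):
    """Operations view: alert on any cell with `threshold` or more drops."""
    per_cell = Counter(cell for _, cell in events)
    return sorted(cell for cell, n in per_cell.items() if n >= threshold)


def engagement_model(events, threshold=3):
    """Engagement view: contact any VIP customer with `threshold` or more drops."""
    per_customer = Counter(cust for cust, _ in events)
    return sorted(
        c for c, n in per_customer.items() if n >= threshold and c in VIP_CUSTOMERS
    )


print(network_ops_model(dropped_calls))  # ['cell-A']
print(engagement_model(dropped_calls))   # ['cust-1']
```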

Event models typically aggregate and evaluate events over a time window and enhance them with enrichment data. In the example above, dropped calls are associated with a VIP customer based on enrichment data from the CRM system.
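A time window is often implemented as a sliding window per key. The sketch below assumes a 10-minute window, a threshold of three drops, and a small dictionary standing in for the CRM; all of these values and names are hypothetical. Each incoming event evicts timestamps older than the window before the count is checked.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 600  # hypothetical 10-minute window
THRESHOLD = 3         # hypothetical: three drops within the window

# Hypothetical enrichment data, standing in for a CRM lookup.
CRM = {"cust-1": {"tier": "VIP"}, "cust-2": {"tier": "standard"}}


class DroppedCallWindow:
    """Counts each customer's dropped calls over a sliding time window."""

    def __init__(self):
        self.recent = defaultdict(deque)  # customer_id -> event timestamps

    def on_event(self, customer_id: str, ts: float) -> bool:
        """Return True when a VIP customer is at or over the threshold."""
        window = self.recent[customer_id]
        window.append(ts)
        # Evict timestamps that have fallen out of the window.
        while window and ts - window[0] > WINDOW_SECONDS:
            window.popleft()
        is_vip = CRM.get(customer_id, {}).get("tier") == "VIP"
        return is_vip and len(window) >= THRESHOLD


w = DroppedCallWindow()
stream = [("cust-1", 0), ("cust-2", 10), ("cust-1", 100), ("cust-1", 400), ("cust-1", 1200)]
for cust, ts in stream:
    if w.on_event(cust, ts):
        print(f"send SMS to {cust} at t={ts}")
```

With this sample stream, only the third drop for `cust-1` (at t=400) crosses the threshold; by t=1200 the earlier drops have aged out. A production system would also suppress repeat notifications while the customer stays over the threshold, which this sketch does not do.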

The goal of streaming event processing is to recognize combinations of events, enhanced with enrichment data, to allow informed real-time actions. Real-time customer engagement should enhance the customer experience, deliver personalized offers, and improve revenue and bottom-line results.

The approach described here is widely used today in a number of open source frameworks, as well as in solutions from IBM, Software AG, Tibco, EVAM, and other leaders in streaming event systems. It is applied in the financial and insurance industries to detect and manage fraud, by wireless network operators for remote device management and service, and by retailers and others for many forms of real-time customer engagement.

Streaming Event Model contrasted with Markov and Monte Carlo Models:

The streaming event model recognizes business events and combines them with enrichment data to support real-time business action. It is a simple model that complements other well-known discrete event models, such as Markov and Monte Carlo models.

Markov: Markov models are widely used to describe expected medical outcomes for patients, or the throughput of repairs in a repair facility. The underlying principle is that the next state of a system depends only on its current state, irrespective of history. For example, a Markov model would represent a cancer patient in remission as having an X% probability of relapse. The model is widely used to predict likely outcomes from historically observed data, but it is not oriented to real-time event processing.
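As a small illustration of the Markov property, the sketch below propagates a two-state chain (remission, relapse) forward one year at a time. The transition probabilities are placeholders, not clinical data; in practice they would be estimated from historically observed outcomes. Note that `step` uses only the current state distribution, never earlier history.

```python
# Placeholder annual transition probabilities (not clinical data).
STATES = ["remission", "relapse"]
P = {
    "remission": {"remission": 0.9, "relapse": 0.1},
    "relapse": {"remission": 0.3, "relapse": 0.7},
}


def step(dist: dict[str, float]) -> dict[str, float]:
    """Advance one year: each next-state probability depends only on the
    current state distribution, not on how the patient got there."""
    return {nxt: sum(dist[cur] * P[cur][nxt] for cur in STATES) for nxt in STATES}


dist = {"remission": 1.0, "relapse": 0.0}  # patient starts in remission
for year in range(1, 4):
    dist = step(dist)
    print(f"year {year}: P(relapse) = {dist['relapse']:.3f}")
```

With these placeholder values, the probability of relapse grows from 0.1 after one year to about 0.196 after three years.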

Monte Carlo: Monte Carlo methods are heavily used for simulation, relying on probability distributions and repeated random sampling to model complex systems. Wireless networks, for example, are modeled with varying numbers of users, in varying locations, using a mix of services. Monte Carlo simulation is also widely used to estimate the costs of large-scale construction projects. Like the Markov model, the Monte Carlo model focuses on simulation for planning purposes and is not designed for real-time streaming data processing.
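The construction-cost use case can be sketched in a few lines: each task's cost is drawn from a triangular distribution defined by low, most-likely, and high estimates, and the sampling is repeated many times to build a distribution of total cost. `random.triangular` is from Python's standard library; the task list and cost figures are invented for illustration.

```python
import random
import statistics

# Hypothetical (low, most likely, high) cost estimates per task, in $M.
TASKS = {
    "foundation": (2.0, 3.0, 5.0),
    "structure": (8.0, 10.0, 15.0),
    "fit-out": (4.0, 5.0, 8.0),
}


def simulate_total(rng: random.Random) -> float:
    """Draw one possible total project cost by sampling every task once."""
    return sum(rng.triangular(low, high, mode) for low, mode, high in TASKS.values())


rng = random.Random(7)  # fixed seed so the run is repeatable
totals = sorted(simulate_total(rng) for _ in range(10_000))
p50 = totals[len(totals) // 2]
p90 = totals[int(len(totals) * 0.9)]
print(f"mean {statistics.mean(totals):.1f}, P50 {p50:.1f}, P90 {p90:.1f}")
```

Reading off percentiles such as P50 and P90 shows the spread of plausible outcomes rather than a single point estimate.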

Streaming Event Model

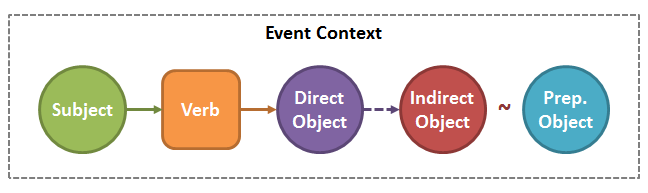

Fortunately, an ontology for streaming event modeling is straightforward. The following is derived from existing streaming event systems and based in part on earlier work by SnowPlow Analytics. The model is based on English grammar and is neutral with respect to programming language and data store. The elements of the model are simple:

Subject, or noun in the nominative case. This is the entity carrying out the action: “I sent an email.”

Verb. This describes the action being done by the Subject: “I sent an email.”

Direct Object, or noun in the accusative case. This is the entity to which the action is being done: “I sent an email.”

Indirect Object, or noun in the dative case. This is the entity indirectly affected by the action: “I sent the email to Frank.”

Context. Not a grammatical term, but used here to describe the phrases of time, manner, and place that further describe the action: “I posted the letter on Tuesday from Boston.”
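One possible encoding of these grammatical roles, sketched as a Python dataclass. The field names are assumptions, and because the model is language- and store-neutral, this is only one of many reasonable representations. The example combines the two sample sentences above into a single event.

```python
from dataclasses import dataclass, field, asdict
from typing import Optional


@dataclass
class GrammarEvent:
    """An event expressed with the grammatical roles of the model."""
    subject: str                           # who acts (nominative)
    verb: str                              # the action
    direct_object: str                     # what is acted on (accusative)
    indirect_object: Optional[str] = None  # who is indirectly affected (dative)
    context: dict = field(default_factory=dict)  # time, manner, place


email = GrammarEvent(
    subject="I",
    verb="send",
    direct_object="email",
    indirect_object="Frank",
    context={"day": "Tuesday", "place": "Boston"},
)
print(asdict(email))
```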

Applying the Event Model to Ecommerce

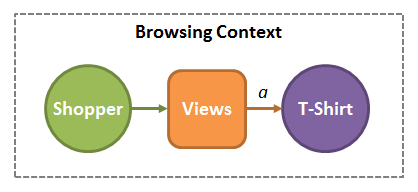

Here we illustrate how the event model is applied to a simple ecommerce example.

We begin with a shopper (Subject) who views (Verb) a T-shirt (Direct Object) while browsing an online store (Context). This event could be cataloged as a “View Product” event.
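Because the model is data-store neutral, a cataloged event can be carried as a plain record. The sketch below serializes the “View Product” event as JSON; the identifiers (`shopper-17`, the SKU, the session ID) and the nested layout are hypothetical.

```python
import json

# The "View Product" event as a plain, store-neutral record.
# Field names and values are illustrative assumptions.
view_product = {
    "subject": {"type": "shopper", "id": "shopper-17"},
    "verb": "view",
    "direct_object": {"type": "product", "sku": "TSHIRT-RED-M"},
    "indirect_object": None,
    "context": {"channel": "online store", "session_id": "s-001"},
}

payload = json.dumps(view_product, sort_keys=True)
print(payload)
assert json.loads(payload) == view_product  # round-trips without loss
```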

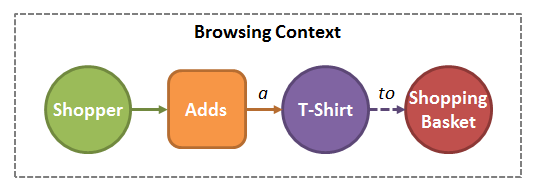

In a second step, the shopper (Subject) adds (Verb) the T-shirt (Direct Object) to a shopping basket (Indirect Object). The context is still browsing the online store. This is also an event that could be cataloged in our event modeling system, as “Select Product for Purchase.”

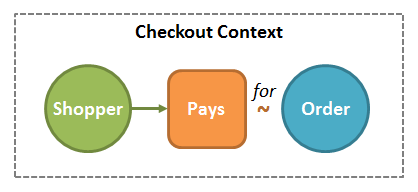

In the next step, the shopper (Subject) purchases (Verb) the order (Direct Object). This event is viewed in a “Checkout Context.” Just as the preceding events are cataloged (standardized) for repeated use, this step could be cataloged as an “Online Purchase” event.
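Cataloging can be as simple as a lookup from grammatical roles to a standard event name. This sketch assumes a hypothetical catalog keyed on (verb, context) and classifies the three steps of the journey described above; anything not in the catalog is flagged rather than guessed.

```python
# Hypothetical catalog: (verb, context) pairs mapped to cataloged event names.
CATALOG = {
    ("view", "browsing"): "View Product",
    ("add", "browsing"): "Select Product for Purchase",
    ("purchase", "checkout"): "Online Purchase",
}


def catalog_name(event: dict) -> str:
    """Look up the cataloged name for an event, or flag it as uncataloged."""
    return CATALOG.get((event["verb"], event["context"]), "Uncataloged")


journey = [
    {"subject": "shopper-17", "verb": "view", "direct_object": "T-shirt",
     "indirect_object": None, "context": "browsing"},
    {"subject": "shopper-17", "verb": "add", "direct_object": "T-shirt",
     "indirect_object": "basket", "context": "browsing"},
    {"subject": "shopper-17", "verb": "purchase", "direct_object": "order",
     "indirect_object": None, "context": "checkout"},
]

for event in journey:
    print(catalog_name(event))
# View Product
# Select Product for Purchase
# Online Purchase
```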

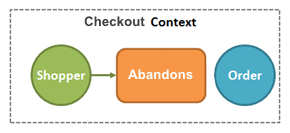

It’s also likely that a shopper (Subject) abandons (Verb) the order (Direct Object). The ability to model “negative events” is critical to practical real-time event systems. This event would be cataloged as “Abandoned Purchase.”
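A negative event cannot be observed directly; it has to be inferred from the absence of an expected event within some period. A minimal sketch, assuming a hypothetical 30-minute timeout: any shopper who added an item to a basket and has not purchased within the timeout is reported as an “Abandoned Purchase.” For clarity it scans a buffered list of events; a streaming engine would more likely keep a per-shopper timer.

```python
ABANDON_AFTER_SECONDS = 1800  # hypothetical 30-minute timeout


def find_abandoned(events: list[dict], now: float) -> list[str]:
    """Return shoppers whose latest basket addition has gone unpurchased
    for longer than the timeout."""
    open_baskets: dict[str, float] = {}  # shopper -> time of latest "add"
    for e in sorted(events, key=lambda e: e["ts"]):
        if e["verb"] == "add":
            open_baskets[e["subject"]] = e["ts"]
        elif e["verb"] == "purchase":
            open_baskets.pop(e["subject"], None)  # basket closed by purchase
    return sorted(
        shopper
        for shopper, ts in open_baskets.items()
        if now - ts > ABANDON_AFTER_SECONDS
    )


events = [
    {"subject": "s1", "verb": "add", "ts": 0},
    {"subject": "s1", "verb": "purchase", "ts": 300},
    {"subject": "s2", "verb": "add", "ts": 100},
    {"subject": "s3", "verb": "add", "ts": 1900},
]
for shopper in find_abandoned(events, now=2000):
    print(f"Abandoned Purchase: {shopper}")  # only s2 has timed out
```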

Events are cataloged so that different users and groups within a business can use them. Metadata associated with the event, such as geography, customer segment, and shopper demographics, is important for linking events to different channels and campaigns. The item viewed is relevant for merchandisers, and other session metrics are valuable for website design and operation.
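The sketch below illustrates one way catalog metadata might drive distribution: each team registers a filter over event metadata, and a cataloged event is routed to every team whose filter matches. The team names, metadata fields, and filters are hypothetical.

```python
# Hypothetical subscriptions: each team receives the cataloged events
# whose metadata matches its filter.
SUBSCRIPTIONS = {
    "merchandising": lambda e: e["name"] == "View Product",
    "retention": lambda e: e["name"] == "Abandoned Purchase" and e["segment"] == "loyal",
    "emea_campaigns": lambda e: e["geography"] == "EMEA",
}


def route(event: dict) -> list[str]:
    """Return the teams whose filters match this cataloged event."""
    return [team for team, matches in SUBSCRIPTIONS.items() if matches(event)]


event = {"name": "Abandoned Purchase", "segment": "loyal", "geography": "EMEA", "item": "T-shirt"}
print(route(event))  # ['retention', 'emea_campaigns']
```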

Benefits of a Standard Streaming Event Model

It’s time for data science professionals to engage in the design of a shared model for real-time event systems. The language (or ontology) is a simple, practical framework for defining events in a format that doesn’t require advanced programming skills. A simple event model also enables managerial review of real-time event systems and more manageable “release management” of updated event scenarios. In the same way that data models facilitated the development of solutions, a shared event model simplifies the discussion and implementation of streaming event solutions.