Summary: Unless you’re involved in anomaly detection you may never have heard of Unsupervised Decision Trees. It’s a very interesting approach to decision trees that on the surface doesn’t sound possible but in practice is the backbone of modern intrusion detection.

I was at a presentation recently that focused on stream processing but the use case presented was about anomaly detection. When they started talking about unsupervised decision trees my antenna went up. What do you mean unsupervised decision trees? What would they split on?

It turns out that if you’re in the anomaly detection world unsupervised decision trees are pretty common. Since I’m not in that world and I suspect few of us are, I thought I’d share what I found.

Anomaly versus Sparse Data

A few weeks back we featured techniques for dealing with imbalanced data sets. We observed that there’s no standard for when the imbalance becomes so great that it ought to be considered an anomaly. Like great art, you’ll know it when you see it.

The anomalies that we’re going to use as illustrations here are about intrusion detection. Although these may occur many times during a day they are extremely rare compared to the torrent of data being produced by regular web logs and system packets. On a more human scale, anomalies could be events of credit card fraud or the detection of cancer from among normal CT scans.

If you think your use case is on the borderline between just being sparse and being an anomaly, do what we all do, try it both ways and see what works best.

Supervised Versus Unsupervised

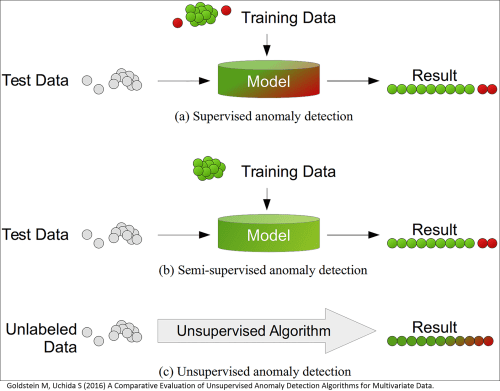

In anomaly detection we are attempting to identify items or events that don’t match the expected pattern in the data set and are by definition rare. The traditional ‘signature based’ approach widely used in intrusion detection systems creates training data that can be used in normal supervised techniques. When an attack is detected the associated traffic pattern is recorded and marked and classified as an intrusion by humans. That data then combined with normal data creates the supervised training set.

In both supervised and unsupervised cases decision trees, now in the form of random forests are the weapon of choice. Decision trees are nonparametric; they don’t make an assumption about the distribution of the data. They’re great at combining numeric and categoricals, and handle missing data like a champ. All types of anomaly data tend to be highly dimensional and decision trees can take it all in and offer a reasonably clear guide for pruning back to just what’s important.

To be complete, there is also category of Semi-Supervised anomaly detection in which the training data consists only of normal transactions without any anomalies. This is also known as ‘One Class Classification’ and uses one class SVMs or autoencoders in a slightly different way not discussed here.

The fact is that supervised methods continue to be somewhat more accurate than unsupervised in intrusion detection but they are completely unable to identify new zero-day attacks which are perhaps an even more serious threat.

Unsupervised Decision Trees

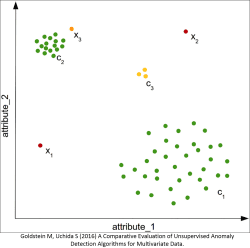

The concept of unsupervised decision trees is only slightly misleading since it is the combination of an unsupervised clustering algorithm that creates the first guess about what’s good and what’s bad on which the decision tree then splits.

Step 1: Run a clustering algorithm on your data. Pretty much all the clustering techniques have been tried and good old k-NN still seems to work best. It might be tempting to set K=2 but it would be unrealistic to expect a good result given the different types of intrusion that may be present. Practical guidance is to set K at a minimum of 10 and experiment with values up to 50. Since the data is unlabeled there is no objective function to determine an optimum clustering.

Step 1: Run a clustering algorithm on your data. Pretty much all the clustering techniques have been tried and good old k-NN still seems to work best. It might be tempting to set K=2 but it would be unrealistic to expect a good result given the different types of intrusion that may be present. Practical guidance is to set K at a minimum of 10 and experiment with values up to 50. Since the data is unlabeled there is no objective function to determine an optimum clustering.

It’s not covered in the literature but this might be a good point to try SMOTE (Synthetic Minority Oversampling Technique) discussed in the earlier article on imbalanced data sets since its objective is to clarify the boundaries among clusters.

Step 2: Having recorded the cluster on your data, run the decision tree in the normal manner. Clearly if K is large this will be a multi-class problem where you will need to interpret the threshold values to spot the anomalies.

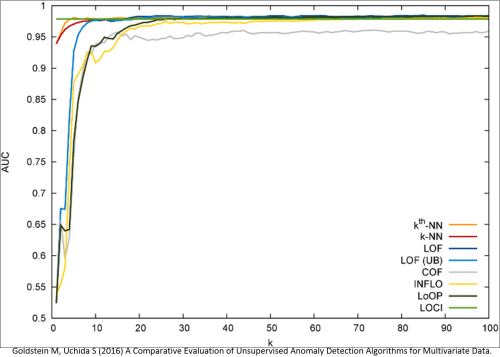

Focusing on the k-NN methods the results are quite good as you can see from this ROC curve run against breast cancer detection benchmark data. (Note the AUC axis is cutoff at 0.5).

The real implementation of anomaly detection unsupervised decision trees is somewhat more complex and there are issue of different types of anomalies, procedures for data normalization, and variations in the benchmark data sets themselves. If you want to deep dive this I suggest the article from which these graphics were derived which compares eight different techniques across a variety of data sets.

In Best Practice

There are Python scripts for Unsupervised Random Forests that will make your life much easier if you’d like to explore this. Boni Bruno, Chief Solutions Architect of Analytics from Dell EMC’s Emerging Technologies Division gave the presentation I attended. The scenario he described was monitoring for intrusion detection in a worldwide geographically distributed group of data centers and doing it from a single location.

The core of the technology architecture was Spark Streaming where an operator in the stream contained the detection algorithm built with the Python Unsupervised Random Forests script. Alert notifications are sent out in less than 5 seconds of the intrusion detection.

Of equal interest was that the system was designed to gather a new (and truly Big data) data set every 24 hours to retrain the model so that any new Zero-day exploits could be caught in near real time.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at: