Summary: Count yourself lucky if you’re not in one of the regulated industries where regulation requires you to value interpretability over accuracy. This has been a serious financial weight on the economy but innovations in Deep Learning point a way out.

As Data Scientists we tend to take as gospel that more accuracy is better. There are some practical limits to this. It may not be profitable to continue to work a model for many days or weeks when the improvement to be had is minor. Specifically when the cost of the accuracy exceeds the financial gain. It is at the core of our beliefs that decreasing cost or increasing profits of the organization is the sole appropriate goal and is the direct result of better, more accurate models. We create financial value.

If you are in an environment where you can live by this rule then you are fortunate. There is a pretty significant percentage of our fellow practitioners who are denied this. They are the ones who work in the so-called regulated industries, mostly banking, financial services, and insurance, but increasingly other areas like real estate and any other industry that can designate individuals as winners or losers.

The guiding regulatory rules, which are by no means consistent, are basically that if your algorithm can create negative financial impact on an individual you need to be able to explain why that particular individual was so rated.

If your credit rating algorithm says an individual is not eligible for the most preferred terms, your underwriting algorithm says they are not eligible for this mortgage rate (or at all), life insurance under the most beneficial terms, or any of dozens of similar outcomes you are only allowed to use predictive techniques that are transparent. This is the classic battle between accuracy and interpretability written as a regulatory mandate.

Increasingly though this is lapping over into other fields. If a rental agency uses an algorithm that denies the ability to rent, that may increasingly be covered. In the not too distant future when the availability of certain high cost medical procedures must be paid from the public pocketbook, then the decision about who receives the treatment and who does not may be determined by algorithm and also fall to this scrutiny.

Issues Raised – Social versus Financial

Now that algorithmic decision making is becoming so ingrained in our society, we increasingly hear voices raising a warning against its unregulated use. This isn’t new, but the volume is getting louder. The Fair Credit Reporting Act dates back to the 70s. HIPPA is a reflection of this. Regulatory restrictions and reporting requirements have been a fact of life in banking, financial services, and insurance for many years with very uneven interpretation and implementation.

Now that algorithmic decision making is becoming so ingrained in our society, we increasingly hear voices raising a warning against its unregulated use. This isn’t new, but the volume is getting louder. The Fair Credit Reporting Act dates back to the 70s. HIPPA is a reflection of this. Regulatory restrictions and reporting requirements have been a fact of life in banking, financial services, and insurance for many years with very uneven interpretation and implementation.

Basel II and Dodd-Frank place a great deal of emphasis on financial institutions constantly evaluating and adjusting their capital requirements for risk of all sorts. This has become so important that larger institutions have had to establish independent Model Validation Groups (MVGs) separate from their operational predictive analytics operation whose sole role is to constantly challenge whether the models in use are consistent with regulations.

There has been an on-going threat of even more intrusive regulation which might even be based on such unscientific theories as ‘disparate impact’ where the government assumes that correlation means causation.

Increasing regulatory oversight is not going away. In fact, as our data science capabilities accelerate with deep learning, AI, even bigger data sets, and more unstructured data there is a very real concern that regulation will not keep up and outmoded standards will be applied to new technologies and techniques.

It’s likely that this will result in a continuing dynamic tension between well intentioned individuals seeking to protect against perceived economic misdeeds, and very real but hidden costs imposed on all of our economy by sub-optimized processes. Very small changes in model accuracy can leverage into much larger increases in the success of different types of campaigns and decision criteria. Intentionally forgoing the benefit of that incremental accuracy imposes a cost on all of society.

How Does Data Science Adapt

The ability of Data Scientists to explain their work is not a new requirement. The tension between accuracy and interpretability isn’t just the result of regulation. Many times it’s the need to explain a business decision based on predictive analytics to a group of executives who are not familiar with these techniques at all. In many of these cases interpretability can be achieved even with black box models with the use of data visualization techniques and good story telling that allows management to have some confidence in the outcome you promise.

In regulated industries however, the distinction is much more severe. If the man in the street who has been impacted by your decision cannot understand why he specifically was rated in a certain way, your technique is not allowed.

Essentially this throws our predictive analysts back on only two techniques, simple linear models and simple decision trees. Techniques of any complexity including Random Forests, pretty much any multi-model ensemble, GBM, and all the flavors of SVM and Neural Nets are clearly out of bounds.

These Data Scientists are not insensitive to the dilemma this presents and some techniques have been developed as work arounds. In many cases this involves convincing the individual regulator that your new technique is compliant with existing regs. Not an easy task.

Specialized Regression Techniques:

There are a few specialized techniques that can give better accuracy while maintaining interpretability. These include Penalized Regression techniques that work well where there are more columns than rows by selecting a small number of variables for the final model. Generalized Additive Models fit linear terms to subsets of the data dividing nonlinear data into segments that may be represented as a group of separate linear sub-models. Quantile Regression achieves a similar result of dividing non-linear data into subsets or customer segments that can be addressed with linear regression.

Surrogate Modeling With Black Box Methods:

Make no mistake; the Data Scientists in regulated industries are exploring their data with the more accurate, less interpretable black box methods, just not putting them into production. Since techniques like Neural Nets naturally take into account a large number of variable interactions, then when linear models are seen to dramatically underperform it’s a tip off that some additional feature engineering may be necessary.

Another technique is to first train a more accurate black box model, and to use the output of that model (not the original training data) to train a linear model or decision tree. It’s likely that the newly trained surrogate decision tree will be more accurate than the original linear mode and serve as an interpretable proxy.

Input Impact to Explain Performance:

GBM, random forests, and neural nets can provide tables that show the importance of each variable to the final outcome. If your regulator will allow this, explanation of input impacts may meet the requirement for interpretability.

Regulated Industries Innovate and Push Back with Deep Learning

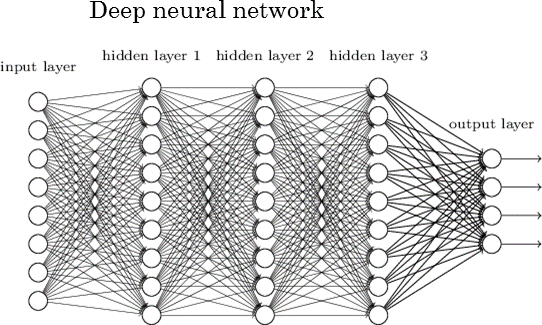

We tend to think of Deep Learning as applicable mostly to image processing (Convolutional Neural Nets) and natural language processing (Recurrent Neural Nets), both major components of AI. However, Neural Nets in their simpler forms of perceptrons and feed forwards have long been a regular part of the predictive analytics tool box.

We tend to think of Deep Learning as applicable mostly to image processing (Convolutional Neural Nets) and natural language processing (Recurrent Neural Nets), both major components of AI. However, Neural Nets in their simpler forms of perceptrons and feed forwards have long been a regular part of the predictive analytics tool box.

The fact is that Deep Neural Nets with many hidden layers can as easily be applied to traditional row and column predictive modeling problems and the increases in accuracy can be remarkable.

Equifax, the credit rating company recently decided the restrictions on modeling were too severe and decided to work with Deep Neural Nets. Taking advantage of MPP and the other techniques associated with Deep Learning they were able to look at the massive data file of the last 72 months across hundreds of thousands of attributes. This also wholly eliminated the requirement for manual down sampling of the data into different homogenous segments since each hidden layer can effectively emulate a different, not previously defined, customer segment.

Peter Maynard, Senior Vice President of Global Analytics at Equifax says the experiment improved model accuracy 15% and reduced manual data science time by 20%. The real magic however was that they were able to reverse engineer the DNN to make it interpretable. Maynard says in a Forbes interview:

“My team decided to challenge that and find a way to make neural nets interpretable. We developed a mathematical proof that shows that we could generate a neural net solution that can be completely interpretable for regulatory purposes. Each of the inputs can map into the hidden layer of the neural network and we imposed a set of criteria that enable us to interpret the attributes coming into the final model. We stripped apart the black box so we can have an interpretable outcome. That was revolutionary; no one has ever done that before.”

Here’s the social payoff. Maynard says that after reviewing the last two years of data in light of the new model they found many declined loans that could have been made safely. Better accuracy will pay off in allaying the concerns about disadvantages to individuals.

The work was done jointly by Equifax and SAS. Equifax has applied for a patent on its ability to interpret DNNs and isn’t sharing for now. They have however pointed a way forward out of the costly restrictive barriers of low accuracy high-interpretability modeling that has straight jacketed regulated industries for years.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at: