The use of formal statistical methods to analyse quantitative data in data science has increased considerably over the last few years. One such approach, Bayesian Decision Theory (BDT), also known as Bayesian Hypothesis Testing and closely related to Bayesian inference, is a fundamental statistical approach that quantifies the tradeoffs between various decisions using the probability distributions and costs that accompany those decisions. In pattern recognition it is used to design classifiers under the assumption that the problem is posed in probabilistic terms and that all of the relevant probability values are known. Generally, we don’t have such perfect information, but it is a good place to start when studying machine learning, statistical inference, and detection theory in signal processing. BDT also has many applications in science, engineering, and medicine.

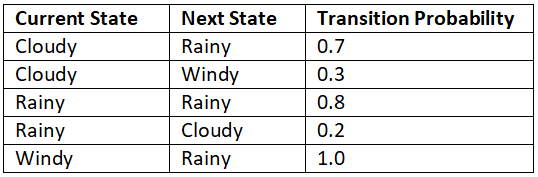

From the perspective of BDT, any kind of probability distribution – such as the distribution for tomorrow’s weather – represents a prior distribution. That is, it represents what we expect today about tomorrow’s weather. This contrasts with frequentist inference, the classical interpretation of probability, where conclusions about an experiment are drawn from a set of repetitions of that experiment, each producing statistically independent results. For a frequentist, a probability function would be a simple distribution function with no special meaning.

Consider two hypotheses, $H_0$ (null hypothesis) and $H_1$ (alternate hypothesis), corresponding to two possible probability distributions $P_0$ and $P_1$ on the observation space $\Gamma$. We write this problem as $H_0: Y \sim P_0$ versus $H_1: Y \sim P_1$. A decision rule $\delta$ for $H_0$ versus $H_1$ is any partition of the observation set $\Gamma$ into sets $\Gamma_1$ and $\Gamma_0 = \Gamma_1^c$. We think of the decision rule as such:

$$
\delta(y) =
\begin{cases}
1 & \text{if } y \in \Gamma_1 \\
0 & \text{if } y \in \Gamma_0
\end{cases}
$$

We would like to choose this partition in an optimal way, so to do so we assign costs to our decisions, which are some non-negative numbers. $C_{ij}$ is the cost incurred by choosing hypothesis $H_i$ when hypothesis $H_j$ is true. The decision rule is alternatively written in terms of the likelihood ratio $L(y)$: it computes $L(y)$ for the observed value of $Y$ and then makes its decision by comparing this ratio to the threshold $\tau$:

$$
\delta(y) =
\begin{cases}
1 & \text{if } L(y) \geq \tau \\
0 & \text{if } L(y) < \tau
\end{cases}
$$

where $p_0$ and $p_1$ are the densities of $P_0$ and $P_1$, $\pi_0$ and $\pi_1$ are the prior probabilities introduced below, and, assuming $C_{01} > C_{11}$,

$$
L(y) = \frac{p_1(y)}{p_0(y)}
$$

and

$$
\tau = \frac{\pi_0 (C_{10} - C_{00})}{\pi_1 (C_{01} - C_{11})}
$$

The conditional risk for each hypothesis is the expected cost incurred by the decision rule when that hypothesis is true:

$$
R_j(\delta) = C_{1j} P_j(\Gamma_1) + C_{0j} P_j(\Gamma_0), \quad j = 0, 1
$$

That is, $R_j(\delta)$ is the cost of choosing $H_1$ when $H_j$ is true multiplied by the probability of this decision, plus the cost of choosing $H_0$ when $H_j$ is true multiplied by the probability of doing this. Next we assign a prior probability $\pi_0$ that $H_0$ is true, unconditioned on the observation, and a prior probability $\pi_1 = 1 - \pi_0$ that $H_1$ is true. Given the risks and prior probabilities we can then define the Bayes risk, which is the overall average cost of the decision rule:

$$
r(\delta) = \pi_0 R_0(\delta) + \pi_1 R_1(\delta)
$$
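To make the threshold test and the Bayes risk concrete, here is a minimal Python sketch on a toy discrete observation space. The distributions `p0` and `p1`, the prior `pi0`, and the helper names `bayes_threshold` and `bayes_risk` are hypothetical choices for illustration and do not come from the text. The sketch assumes `p0[y] > 0` for every observation and that a wrong decision costs more than a correct one. It compares the likelihood ratio rule against a brute-force search over every possible partition of the observation set.

```python
from itertools import product


def bayes_threshold(pi0, costs):
    """Threshold tau = pi0 (C10 - C00) / (pi1 (C01 - C11)).

    costs[i][j] is the cost of choosing H_i when H_j is true.
    Assumes C01 > C11 so the denominator is positive.
    """
    pi1 = 1.0 - pi0
    return pi0 * (costs[1][0] - costs[0][0]) / (pi1 * (costs[0][1] - costs[1][1]))


def bayes_risk(gamma1, p0, p1, pi0, costs):
    """Bayes risk of the rule that chooses H1 exactly on the set gamma1."""
    pi1 = 1.0 - pi0
    total = 0.0
    for prior, p, j in ((pi0, p0, 0), (pi1, p1, 1)):
        prob_choose_1 = sum(p[y] for y in gamma1)  # P_j(Gamma_1)
        # Conditional risk R_j = C_1j P_j(Gamma_1) + C_0j P_j(Gamma_0)
        r_j = costs[1][j] * prob_choose_1 + costs[0][j] * (1.0 - prob_choose_1)
        total += prior * r_j
    return total


# Hypothetical toy problem: three possible observations.
p0 = {0: 0.6, 1: 0.3, 2: 0.1}     # distribution of Y under H0
p1 = {0: 0.1, 1: 0.4, 2: 0.5}     # distribution of Y under H1
pi0 = 0.5                         # equal priors
costs = [[0.0, 1.0], [1.0, 0.0]]  # uniform costs: 0 if correct, 1 if wrong

tau = bayes_threshold(pi0, costs)

# Likelihood ratio test: choose H1 wherever L(y) = p1(y) / p0(y) >= tau.
lrt_region = {y for y in p0 if p1[y] / p0[y] >= tau}
lrt_risk = bayes_risk(lrt_region, p0, p1, pi0, costs)

# Brute force: evaluate the Bayes risk of every partition of the observation set.
all_regions = [
    {y for y, keep in zip(p0, mask) if keep}
    for mask in product([False, True], repeat=len(p0))
]
best_risk = min(bayes_risk(g, p0, p1, pi0, costs) for g in all_regions)

print(f"tau = {tau}, Gamma_1 = {sorted(lrt_region)}")
print(f"LRT risk = {lrt_risk:.4f}, best risk over all rules = {best_risk:.4f}")
```

In this toy problem the likelihood ratio rule attains the same Bayes risk as the best partition found by exhaustive search, which is what the theory predicts. The exhaustive search is only feasible because the observation space is tiny.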

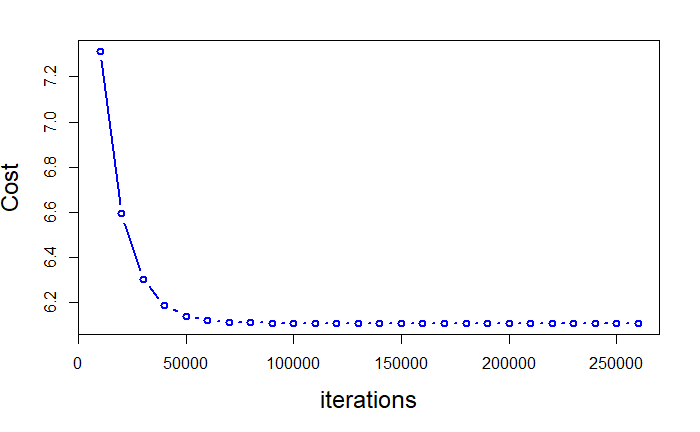

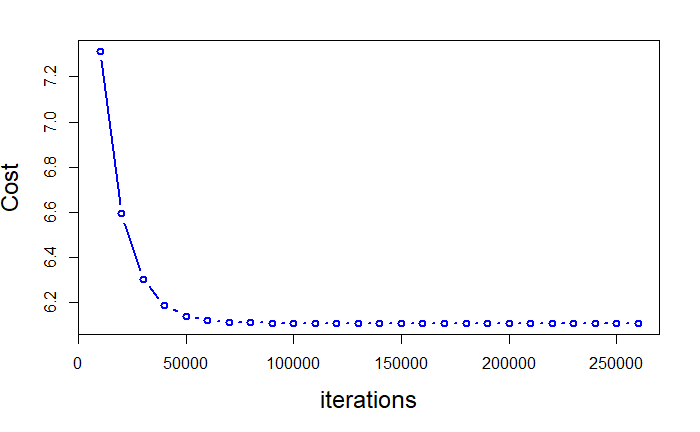

The optimal decision rule for $H_0$ versus $H_1$ is one that minimizes the Bayes risk over all decision rules. Such a rule is called the Bayes rule. Below is a simple illustrative example of the decision boundary where $P_0$ and $P_1$ are Gaussian, with uniform costs and equal priors.
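The Gaussian case can also be checked numerically. The sketch below is a rough illustration and not the code behind the figure. It assumes two Gaussians with hypothetical means `mu0` and `mu1` and a shared standard deviation `sigma`; the equal-variance assumption is mine and is not stated in the text. With uniform costs and equal priors the threshold is 1, so the rule simply picks the hypothesis with the larger density. Under the equal-variance assumption the decision boundary is the midpoint of the two means.

```python
import math


def gaussian_pdf(y, mu, sigma):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    z = (y - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def q_function(x):
    """Upper-tail probability of the standard normal, P(Z > x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


# Hypothetical parameters, chosen only for illustration.
mu0, mu1, sigma = 0.0, 2.0, 1.0


def decide(y):
    """Bayes rule for uniform costs and equal priors: tau = 1, so compare densities."""
    return 1 if gaussian_pdf(y, mu1, sigma) >= gaussian_pdf(y, mu0, sigma) else 0


# With equal variances the densities cross at the midpoint of the means.
boundary = 0.5 * (mu0 + mu1)

# With uniform costs the Bayes risk is the probability of error:
# 0.5 * P0(Y >= boundary) + 0.5 * P1(Y < boundary) = Q((mu1 - mu0) / (2 sigma)).
error_probability = q_function((mu1 - mu0) / (2.0 * sigma))

print(f"decision boundary at y = {boundary}")
print(f"decide(0.9) = {decide(0.9)}, decide(1.1) = {decide(1.1)}")
print(f"Bayes risk (probability of error) = {error_probability:.4f}")
```

If the two variances differ, the densities can cross at two points, and the midpoint formula no longer applies, although comparing the two densities directly, as `decide` does, remains valid.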