At Grakn Labs we love technology. Here is our December 15th edition by Filipe Pinto Teixeira, where he looked back at Predictive Analytics.

*****

Today’s techie topic is . . . Predictive Analytics. Great! Analytics is quite a large field, so today let’s focus on a common goal of the field: making predictions based on past observations.

Can I predict the future?

Predictive analytics is an umbrella term used to describe the process of applying various computational techniques with the objective of making some predictions about the future based on past data. This encompasses a variety of techniques including data mining, modelling, pattern recognition, and even graph analytics.

Does this mean we can predict future lottery numbers based on past lottery numbers? Sadly no, but, if anyone wants to prove us wrong, we will require at least 3 successful live demonstrations before we are convinced.

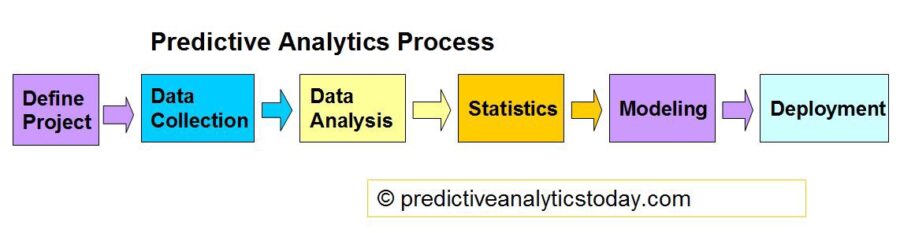

We’re not going to get into too many details in this article, as the field is quite large and we are far from experts. We are just going to touch on the general process used when trying to make predictions from historical data. Then we are going to poke our heads into some cool tech within this field.

Step 1: Get the Data

The first step in the process is usually all about data mining and filtering. Data sources are often large and unstructured, so this step is all about extracting structured data from them. On the topic of sources, be sure to select relevant and trusted ones. If we were trying to predict election results we would probably avoid using The Onion, although given political outcomes this year we may be wrong.

Step 2: Analyse the Data

Here we need to start focusing on the contents of the data. This alone can prove to be quite a challenge. For example, if you are trying to make predictions about your own health, what information should you take into account? Do you smoke? What is your favourite colour? Where do you work? Often determining what is relevant and what is not is its own challenge. Proper pre-processing and filtering techniques are a must when cleaning up your data.

You should also ensure your data is of good quality. A reliable source alone does not ensure quality. What if you scraped your data from Wikipedia on the day someone thought it would be fun to vandalise the articles you were mining? Running your data through existing analysis pipelines can be quite informative and is a simple way of spotting questionable data. More formally, you can use confirmatory factor analysis to check that your extracted data will at least fit your model. It is also recommended that you apply other statistical techniques to check how your data copes with variance, false positives, and the other issues which often crop up in real-world data.
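As a rough illustration of spotting questionable data, here is a minimal sketch that flags values sitting far from the rest of a sample using the modified z-score, which is built on the median and the median absolute deviation. The function name, the sample readings, and the 3.5 cut-off (a common rule of thumb) are our own assumptions for the example, not part of any particular pipeline.

```python
from statistics import median


def flag_questionable(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds threshold."""
    med = median(values)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # No spread to measure against, so flag nothing.
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - med) / mad) > threshold
    ]


# Hypothetical article lengths from a scrape; the 9000 mimics a vandalised page.
readings = [102, 98, 101, 97, 9000, 100, 99]
suspects = flag_questionable(readings)
print(suspects)
```

A flagged value is only a candidate for a closer look; whether it is vandalism or a genuine extreme still needs a human, or a domain rule, to decide.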

Step 3: Model the Data

This step is fundamental as it allows you to structure your data in such a way that you can start recognising patterns that potentially allow you to extract future trends. Models also allow you to formally describe your data. This is helpful in understanding the results you get from your data analysis but is also a good starting point when it comes time to visualise your results.

As with data extraction, your models should undergo careful scrutiny. You should ensure that your models are valid representations of the issue you are trying to predict. Consulting with domain experts is often a good idea. Trying to predict inflation for the next few years? Well, you should probably speak to an economist as a first step when defining the model. When modelling ontologies at Grakn Labs, we cannot count the times an expert on hand would have saved us from hours of deliberation.

Step 4: Predicting the Future

This is where the massive field of machine learning can come into play. There are a multitude of ways to start recognizing patterns in your data and exploiting those patterns. Neural Networks, Linear Regression, Bayesian Networks, Deep Learning: all of these and many more can help you to start making predictions. Personally, Filipe would recommend Graph Based Analytics, but he may be a bit biased here.
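To make one of these techniques concrete, here is a minimal sketch of linear regression in plain Python: fit a straight line to past observations by ordinary least squares, then extend the line one step into the future. The sign-up figures and the `fit_line` helper are hypothetical and exist only for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x around its mean, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical past observations: sign-ups recorded for months 1 to 5.
months = [1, 2, 3, 4, 5]
signups = [12, 15, 19, 22, 26]

slope, intercept = fit_line(months, signups)
forecast = slope * 6 + intercept  # Extrapolate the trend to month 6.
print(f"Forecast for month 6: {forecast:.1f}")
```

A straight line is about the simplest crystal ball there is. It only works if the trend in the past data actually continues, which is exactly the kind of assumption the modelling step is meant to check.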

Luckily, data analytics is becoming so desirable these days that many of these tools are available as simple applications. This means that it is now much easier to start analyzing your data without the need to understand how each crystal ball works.

If I can’t predict the lottery then why bother?

You can’t predict the lottery because it is purely random but there are many things in life which are not random and can be predicted to some extent. Predictive analytics affects anyone with insurance. The premiums you pay are not set by a random number generator but are set by predicting the risk in your life and that risk can be estimated based on your past.
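As a deliberately simplified sketch of estimating risk from the past, the snippet below computes an expected yearly loss as claim frequency multiplied by average claim size. Real insurers use far richer models; the claim history and the `pure_premium` helper here are hypothetical.

```python
def pure_premium(claims_by_year):
    """Estimate expected yearly loss as claim frequency times average claim size."""
    years = len(claims_by_year)
    all_claims = [amount for year in claims_by_year for amount in year]
    if not all_claims:
        return 0.0  # No claims on record, so no loss to estimate from.
    frequency = len(all_claims) / years           # average claims per year
    severity = sum(all_claims) / len(all_claims)  # average cost per claim
    return frequency * severity


# Hypothetical five-year history: each inner list holds that year's claim amounts.
history = [[], [400.0], [], [250.0, 350.0], []]
expected_loss = pure_premium(history)
print(f"Expected yearly loss: {expected_loss:.2f}")
```

The lottery offers no equivalent: past draws carry no information about future ones, so there is nothing for a calculation like this to learn from.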

Predictive analytics also plays a big role in project management. Any large project can fail and estimating the likelihood of that failure is an important part of deciding if it should be attempted or not. Before the days of predictive analytics, we would rely on experience and instinct on these matters. Now we can formally measure the chances of success of a project using these techniques. Although anyone working in a startup has probably learned to ignore these risks.

The applications are countless, but one of the reasons we think this field is worth pursuing is a more fundamental one. Warning: we are going to become slightly idealistic here. Imagine if we could make predictions on a truly large and accurate scale. What mistakes could we have avoided? The subprime mortgage crisis in 2007? Terror attacks? The Ebola outbreak? World wars? We’d like to think that these mistakes could have been avoided with the right tools in place.

Predicting Predictive Analytics

The recent trends in Big Data have put more pressure on predictive analytics to be more efficient and robust. Interestingly, this has led to more database vendors incorporating analytics into their feature set, for example Oracle and IBM.

This provides us with more easily accessible tools which enable us to perform analytics. This also means that we are likely to become more accurate in our estimations as we consume more data in making these estimations.

What is interesting to see is that a process which was formerly reserved exclusively for data scientists is now becoming accessible to everyone. Furthermore, we are seeing open source APIs incorporate these features too. Apache TinkerPop’s GraphComputer is a perfect example of something which has the potential to perform predictive analytics.

We believe that we will see continued adoption of these technologies as demand keeps growing. We are particularly interested to see how these features will be made more accessible and easier to work with.