This article was written by Koustubh.

Unless you have been living under a rock, you have probably heard of the revolution that deep learning and convolutional neural networks (CNNs) have brought to computer vision. Computers have achieved near-human accuracy on many vision tasks. However, most of the top-performing neural networks for state-of-the-art image recognition suffer from three problems:

1. Low speed:

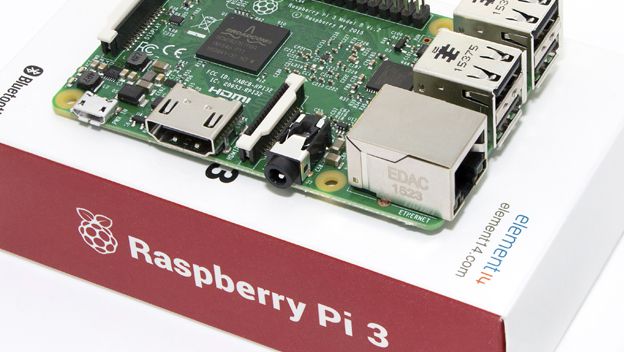

Low speed deters these networks from being deployed in real-time applications on embedded devices such as the Raspberry Pi and Nvidia Jetson. The problem gets worse for an application like object detection, where multiple windows at different locations and scales need to be processed.

2. Very large model size:

Models that achieve state-of-the-art accuracy are too large to fit on mobile devices or small boards like the Raspberry Pi.

3. Large memory footprint:

Even if you somehow manage to live with the large size of the models, the amount of run-time memory (RAM) required to run them is too high and limits their usage.

Hence, the current trend is to deploy these models on servers with large graphics processing units (GPUs). However, issues like data privacy and internet connectivity call for deep learning on embedded devices. So considerable effort from people all around the world is geared towards accelerating the inference run time of these networks, decreasing the size of the models, and decreasing their run-time memory requirements.
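To get a rough feel for the model-size problem, here is a minimal sketch that counts the parameters of two layers and converts the count to megabytes. The layer shapes and the helper names (`conv_params`, `dense_params`, `size_in_mb`) are hypothetical and chosen only for illustration; the sketch also assumes 32-bit floating-point weights, which is common but not universal.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus one bias per output channel for a square-kernel conv layer."""
    return out_channels * (in_channels * kernel_size * kernel_size + 1)


def dense_params(in_features, out_features):
    """Weights plus one bias per output unit for a fully connected layer."""
    return out_features * (in_features + 1)


def size_in_mb(num_params, bytes_per_param=4):
    """Storage in MiB, assuming float32 (4 bytes) per parameter by default."""
    return num_params * bytes_per_param / (1024 ** 2)


# Hypothetical layer shapes, not taken from any particular network in the article.
layers = {
    "conv 3x3, 256 -> 512 channels": conv_params(256, 512, 3),
    "dense, 25088 -> 4096 units": dense_params(25088, 4096),
}

for name, count in layers.items():
    print(f"{name}: {count:,} parameters, about {size_in_mb(count):.1f} MB as float32")
```

Under these assumptions, a single large fully connected layer can account for hundreds of megabytes, which is one reason such models are hard to fit on small devices.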

Why are CNNs slow?

This long article has the following sections:

- Decomposition of Response Map

- Convolutional Layer

- Lower Rank Subspace

- Decomposition

The full article covers each of these sections.
