Summary: More data means better models, but we may be crossing a line into what the public will tolerate, both in the types of data we collect and in how we use them. The public seems divided: targeted advertising is welcome, but the increasing invasion of privacy is not.

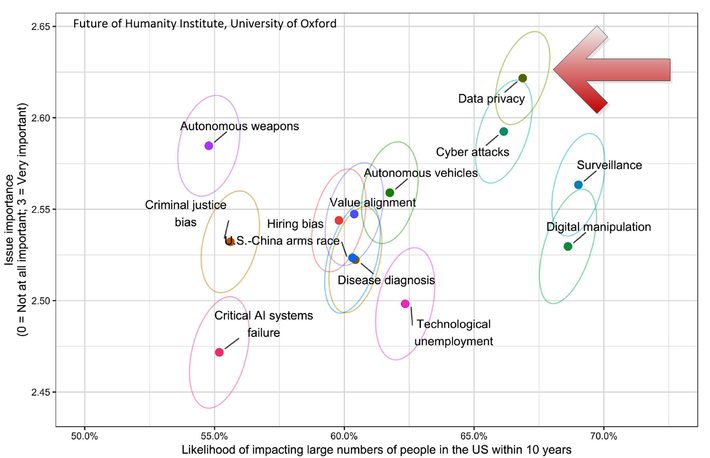

Headlines are full of alarm. The public is up in arms. The internet is stealing their privacy. Indeed, the Future of Humanity Institute at Oxford rates this as the most severe problem we will face over the next 10 years.

As data scientists, how much should we care? Well, more data means better models, and less data means less accurate ones. The value we bring to the table will be directly affected if government regulation takes many of our data sources off the table, so the answer is that we should probably care a lot.

However, “privacy” has become what Marvin Minsky called a ‘suitcase word’. That is, it can carry so many meanings that it refers to many different experiences. After all, who doesn’t want more privacy? Give me more. Similarly, ‘freedom’, ‘democracy’, and ‘safety’ are used so broadly that, when asked, the public will answer from its own personal point of view, not necessarily about what a survey is trying to discover.

Some Obvious Exceptions – Some Benefits

So is the public really alarmed about data privacy? Let’s carve out a few obvious issues:

Government: The government doesn’t sell us anything, and we’re probably right to be suspicious of government gathering too much of our personal data. Will it be used for us or against us?

Children: We can probably all agree that children’s data should be off limits for commercial use. Children don’t yet have the judgment needed to keep from being manipulated by targeted advertising. It’s hard enough for some adults.

Really Personal Stuff: For example, I don’t think making my personal healthcare data available for targeted advertising is going to benefit me. We can probably draw up a short list of the kinds of information that should remain private.

But aside from these limited carve-outs, you’d think the public would welcome targeted advertising driven by our increasingly accurate models. Thanks to us, ecommerce has dramatically reduced advertising and distribution costs.

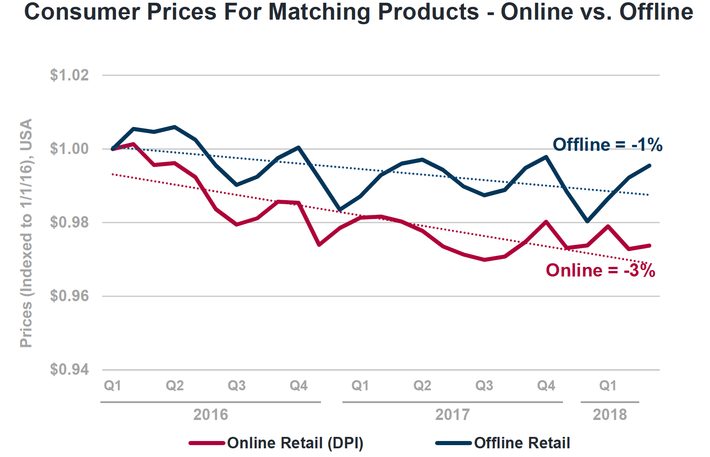

Mary Meeker, in her well-known annual review of the internet, concluded in 2018 that ecommerce (and our targeted advertising) had actually driven down the online cost of goods by 3% in 2¼ years and was probably responsible for another 1% reduction in the cost of offline goods through competition.

So What Does the Public Think About Targeted Advertising?

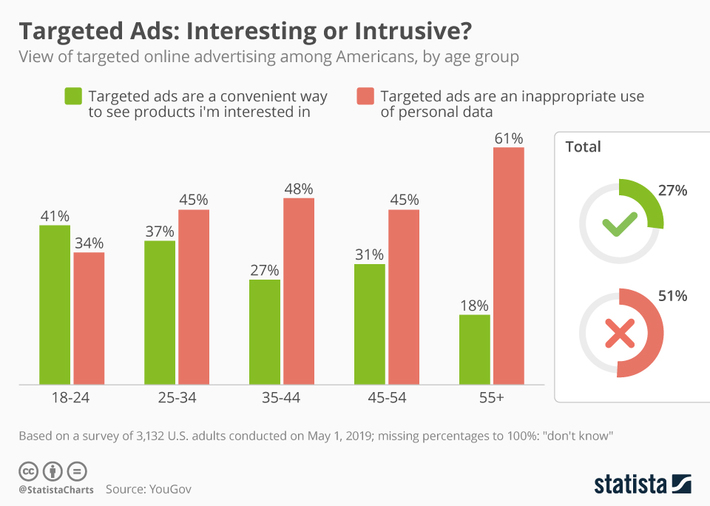

Let’s separate the issue of data privacy from the perceived value of targeted advertising driven by our models. The answer seems to depend a lot on who’s asking the question and, just as important, on how it is asked. Here’s a survey conducted by YouGov representing the negative point of view.

OK, data scientists. This looks bad, but you should have spotted two problems. First, on average 22% of responses are missing, falling in the ‘don’t know’ (or more likely ‘don’t care’) category. Second, whenever you have an opt-in survey, you are much more likely to get strongly opinionated responses that are not representative of the average Jack and Jill.

Here’s a sampling of findings from other surveys.

Mary Meeker’s 2019 Internet Review Study:

- 91% prefer brands that provide personalized offers / recommendations.

- 83% willing to passively share data in exchange for personalized experiences.

- 74% willing to actively share data in return for personalized experiences.

eMarketer.com did a meta-analysis of other recent surveys and reported:

- Adlucent found that 7 out of 10 customers yearn for personalized ads.

- A survey by Epsilon found that 80% of customers were more likely to make a purchase when brands offered a more personalized experience.

Adobe backs this up with compelling stats:

- 67% of respondents said it’s important for brands to automatically adjust content based on their current context.

- 42% get annoyed when their content isn’t personalized.

So if you come at the question from the standpoint of value, the public seems overwhelmingly to say yes. When you come at it from the standpoint of privacy, the result is more likely to be 50/50 or even negative.

What’s the Disconnect?

From a quick survey of other studies on the topic, it seems we’re doing our job too well, or at least our advertising departments are when they make use of our models. A few customer responses:

- Digital ads are starting to feel psychic.

- Too many, too intrusive, too creepy (especially retargeting ads).

Is the public simply of two minds on this topic? Is it possible to push too far in gathering more data?

For starters, it’s clear that the public wants to know exactly how it is being tracked. An apocryphal story making the rounds on the internet claims that Facebook may be monitoring our conversations through our phones, even when they’re not in use.

The story involves a casual conversation between old friends at a bar, in which it comes to light that friend 1 has been working out and friend 2 has not. Upon leaving the bar, friend 2 suddenly receives an ad for a gym membership with 20% off if he signs up today. True? Unconfirmed. It could just have been a coincidence driven by equally creepy location-based analysis.

Too many targeted ads are the other half of this story, and likely the reason ad blockers are on the rise. HubSpot reports these as the top six reasons people use ad blockers:

- annoying/intrusive (64%)

- disrupt what I’m doing (54%)

- create security concerns (39%)

- better page load time/reduced bandwidth use (36%)

- offensive/inappropriate ad content (33%)

- privacy concerns (32%)

These responses tend to support the idea that it’s frequency and intrusiveness that drive resistance, not necessarily the privacy of the data used.

How is Privacy Legislation Progressing?

GDPR is now just over a year old, and by all reports it’s not going well. A recent review by the Center for Data Innovation reported a long list of unintended consequences. According to the review, GDPR:

- Negatively affects the EU economy and businesses.

- Drains company resources.

- Hurts European tech startups.

- Reduces competition in digital advertising.

- Is too complicated for businesses to implement.

- Fails to increase trust among users.

- Negatively impacts users’ online access.

- Is too complicated for consumers to understand.

- Is not consistently implemented across member states.

- Strains resources of regulators.

The specifics include companies spending an average of $1.8 million each on compliance; competitors weaponizing GDPR requests, which must be handled within 30 days, to attack rivals by wasting their time; a 30% falloff in tech company formation; and as many as 30% of previously available news and information services withdrawn from the market because they could not comply.

Not a good track record and not getting better.

Our own Congress has also recognized the complexity of the task, and the Commerce Committee charged with drafting legislation has paused for now. That doesn’t mean this good news will last indefinitely.

What Can We Conclude From All This?

As data scientists, we should care about potential privacy restrictions, since the withdrawal of data will add cost and reduce model accuracy. That will reflect directly on us.

The American people seem legitimately divided on the topic. In general, targeted advertising is seen as good, except:

- When it’s too frequent.

- When it’s too creepy.

To the extent that you can guide the conversation in your own company, give your executives this balanced view and take a common-sense approach to crossing that line. To paraphrase a famous mission statement: “Don’t be creepy.”

Other articles by Bill Vorhies

About the author: Bill is Contributing Editor for Data Science Central. Bill is also President & Chief Data Scientist at Data-Magnum and has practiced as a data scientist since 2001. His articles have been read more than 2 million times.
