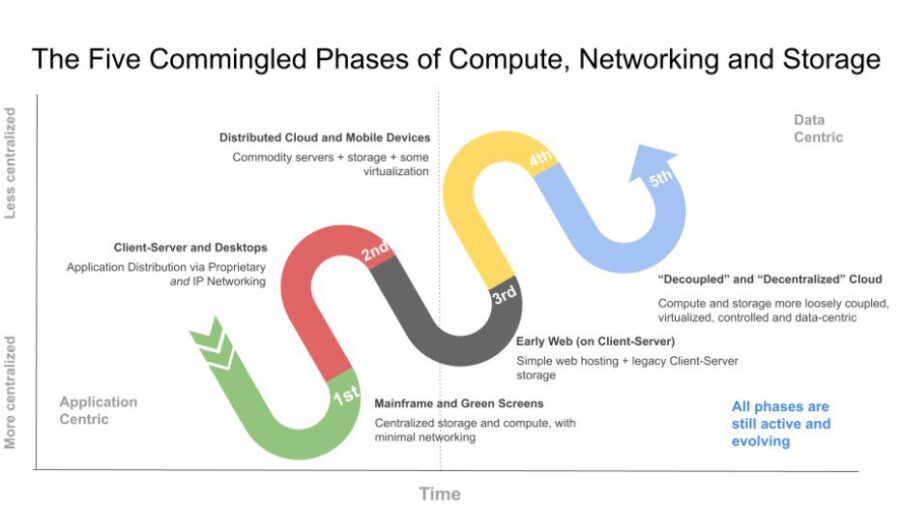

During a presentation at the TechTarget/BrightTALK Accelerating Cloud Innovation event this past December, I named the fifth phase of compute, networking and storage that we’ve entered the “Decoupled” and “Decentralized” Cloud. The quotation marks emphasized that what we’ve been experiencing is neither truly decoupled nor truly decentralized. Even so, the direction we’re headed in is toward the less centralized end of the shared systems continuum.

Decoupling implies a component connection capability with greater independence than loose coupling. The term “loose coupling” gained popularity in the early 2000s with the rise of the RESTful web (inspired by Roy Fielding’s 2000 UC Irvine dissertation on Representational State Transfer, or REST). Instead of conforming to a monolithic, rigid method of data transfer (known as “tight coupling,” which implies more inherent brittleness), a service-oriented architecture (SOA) relies on messaging protocols relaying “representations” to establish communications service links between a customer and a service provider.

SOA as an architecture philosophy in the 2000s accompanied the move toward RESTful websites and the enterprise use of messaging or enterprise service buses (ESBs) to connect services. ESBs, which had a single point of failure and significant complexity because the applications were all integrated in a single bundle via a bus, preceded the widespread use of web application programming interfaces (APIs). Both ESBs and APIs left Fielding’s vision of service discoverability and ease of use unfulfilled.

APIs gained critical momentum and focus with Jeff Bezos’ infamous 2002 API mandate memo, a company-wide imperative to use “service interfaces” and design them to be “externalizable.” This mandate opened the door to Amazon’s launch of Amazon Web Services (AWS) and then a broader public cloud services offering later in the 2000s.

APIs have proven quite useful, but they require developers to learn each API owner’s data model and each API’s quirks, one by one. Decoupling in a broader, more complete sense implies more of an automated, any-to-any, plug-and-play capability. That’s where digital twins and agents enter the picture.

The next phase of decoupling evolution: Digital twins and autonomous agents

In January 2023, IOTICS Cofounder Mark Wharton and Elly Howe, ESG Coordinator at Portsmouth International Port (PIP), presented at the monthly Semantic Arts meetup. They described how PIP’s smart IoT ecosystem was helping to support environmental and sustainability compliance across the transportation organizations serving the port. IOTICS describes the key elements of the approach as digital twin + autonomous agent. The semantics make the twins discoverable and interoperable.

“APIs are routinely developed for a single purpose and rarely designed to be discovered and consumed by other machines,” said Wharton in a LinkedIn posting. “This single-purpose nature is unfortunate when you want to consume the API for something other than its original intention and good luck trying to do it in any automated way.”

Wharton advocates “allowing ecosystems of discoverable assets to share data, interoperate and turn insights into action across the cyber-physical infrastructure. Humans are welcome, but this is not our world. This is the world of the autonomous agent.”

Numerous providers have used agents over the decades, but this is one of the few I’ve come across that has semantically modeled digital twins in a standards-based way so that agents can more or less autonomously act on the users’ behalf within an ecosystem full of digital twins. I think of IOTICS’ twins as microtwins, with enough smarts per twin to measure air quality, in this case.

PIP is working with one of its best shipping customers, which uses the port daily. The port is now able to monitor air quality at the berths this customer is using. Howe and others on the project then correlate that data with CCTV footage of ships coming and going to pinpoint which ships are causing spikes in particulate and CO2 emissions during particular timeframes.
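The correlation step described above can be sketched roughly as follows. This is only an illustration, not PIP’s or IOTICS’ actual pipeline: the threshold, the readings, the ship names and the visit times are all made-up placeholder values, and the arrival and departure times are assumed to have already been extracted from the CCTV footage.

```python
from datetime import datetime

# Hypothetical PM2.5 spike threshold in µg/m³ (an assumption for this sketch).
SPIKE_THRESHOLD = 35.0

# Made-up air quality readings at one berth: (timestamp, PM2.5 value).
readings = [
    (datetime(2023, 1, 10, 8, 0), 11.0),
    (datetime(2023, 1, 10, 9, 0), 48.0),
    (datetime(2023, 1, 10, 10, 0), 12.0),
    (datetime(2023, 1, 10, 14, 0), 52.0),
]

# Made-up berth visits, assumed to come from CCTV: (ship, arrival, departure).
berth_visits = [
    ("Ship A", datetime(2023, 1, 10, 8, 30), datetime(2023, 1, 10, 9, 30)),
    ("Ship B", datetime(2023, 1, 10, 13, 30), datetime(2023, 1, 10, 15, 0)),
]


def ships_at_spikes(readings, visits, threshold):
    """Map each spike timestamp to the ships at the berth at that moment."""
    result = {}
    for timestamp, value in readings:
        if value > threshold:
            result[timestamp] = [
                ship
                for ship, arrival, departure in visits
                if arrival <= timestamp <= departure
            ]
    return result


for when, ships in ships_at_spikes(readings, berth_visits, SPIKE_THRESHOLD).items():
    print(when.isoformat(), ships)
```

A real analysis would also need to account for wind, neighboring berths and sensor lag, so a time overlap like this is only a first filter.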

Howe’s vision is that PIP will develop its own data and analytics ecosystem for various kinds of ESG monitoring, including energy usage. Armed with PIP’s detailed emissions observations, the port’s users are then responsible for reducing the emissions their own vehicles produce.

Decentralization and self-sovereign data

“Governance is the kind of thing you want to keep your own hands on,” says Wharton. He argues that “people don’t want to share their data into the same central database.” The data architecture of the IOTICS system at PIP leaves the data management and governance for each ship to its fleet. IOTICS uses decentralized identifiers (DIDs, a W3C standard) to establish provenance and prove that a digital twin of a ship, for example, is actually the twin for that ship.
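For readers unfamiliar with DIDs, the sketch below shows the basic identifier syntax from the W3C DID Core specification: `did:<method-name>:<method-specific-id>`. It only checks that a string is well formed. The `example` method and the ship identifiers are hypothetical, and actually proving provenance would also require resolving the DID to its DID document and verifying a cryptographic proof, which this sketch does not attempt.

```python
import re

# One identifier character per DID Core: letters, digits, ".", "-", "_",
# or a percent-encoded byte.
_IDCHAR = r"(?:[A-Za-z0-9._-]|%[0-9A-Fa-f]{2})"

# did:<method-name>:<method-specific-id>, where the method name is lowercase
# letters and digits and the id may contain colon-separated segments.
DID_PATTERN = re.compile(
    rf"did:(?P<method>[a-z0-9]+):(?P<id>(?:{_IDCHAR}*:)*{_IDCHAR}+)"
)


def parse_did(did):
    """Split a DID into (method, method-specific id), or raise ValueError."""
    match = DID_PATTERN.fullmatch(did)
    if match is None:
        raise ValueError(f"not a valid DID: {did!r}")
    return match.group("method"), match.group("id")


# Hypothetical identifier for a ship's digital twin.
print(parse_did("did:example:fleet:ship-123"))
```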

As you can see, not everything is decentralized in this scheme, nor should it be. Hosts still act as hosts, for example. But resources are more distributed and agent-enabled than they have been. Speaking of the self-describing knowledge graph, “You want agents to access this kind of data soup,” Wharton says.

With such a method, the twins are documented in ways that APIs and relational databases are not. RDF (the Resource Description Framework, a W3C standard for describing data as subject-predicate-object triples) enables a self-describing graph in a uniform format, what Wharton calls a “lingua franca.” You can do things like share a bundle of 20 triples in this environment, and they can be plug and play with the entity you’re sharing with.
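To make the idea of a shareable bundle of triples concrete, here is a minimal sketch in plain Python. It is not IOTICS’ API: the `example.org` namespace, the property names and the reading value are all hypothetical, and a production system would more likely use an RDF library and a published ontology. The bundle is serialized as N-Triples, one of the standard RDF text formats.

```python
# Hypothetical namespace for this sketch; none of these names come from IOTICS.
EX = "http://example.org/port/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
XSD_DECIMAL = "http://www.w3.org/2001/XMLSchema#decimal"


def uri(value):
    """Format an IRI as an N-Triples term."""
    return f"<{value}>"


def literal(value, datatype):
    """Format a typed literal as an N-Triples term."""
    return f'"{value}"^^<{datatype}>'


# A small bundle of (subject, predicate, object) triples describing one twin.
twin = uri(EX + "twin/berth-2-air-quality")
bundle = [
    (twin, uri(RDF_TYPE), uri(EX + "AirQualitySensorTwin")),
    (twin, uri(EX + "locatedAt"), uri(EX + "berth/2")),
    (twin, uri(EX + "pm25"), literal("12.5", XSD_DECIMAL)),
]


def to_ntriples(triples):
    """Serialize triples as N-Triples text, one statement per line."""
    return "\n".join(f"{s} {p} {o} ." for s, p, o in triples)


def describe(triples, subject):
    """Collect the predicate-to-object pairs stated about one subject."""
    return {p: o for s, p, o in triples if s == subject}


print(to_ntriples(bundle))
```

Because every statement carries its own subject, predicate and object, a recipient can merge the bundle into its own graph or query it with `describe` without first learning a provider-specific schema.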

That’s a little bit of ad hoc, contextualized data sharing that could make all the difference between reusable and single-purpose data. In that sense, there’s enough intelligence at the node and in each agent to interact in a loosely coupled, less centrally controlled way. That means easier scaling and fewer headaches from trying to grow and manage a large system.