Generative AI (GenAI) products like OpenAI ChatGPT, Microsoft Bing, and Google Bard are disrupting the roles of data engineers and data scientists. According to a recent report by McKinsey, these GenAI products could potentially automate up to 40% of the tasks performed by data science teams by 2025. And Emad Mostaque, founder and CEO of Stability AI, recently stated that he believes that AI will transform the software development world to the point that “There will be no programmers in five years.”

These GenAI products can generate data pipelines, recommend machine learning algorithms, and optimize hyper-parameter tuning with comparable or higher accuracy than human experts. In contrast to the Harvard Business Review’s 2012 declared “Data Scientist: the sexiest job of the 21st Century”, some data professionals may now feel a wee bit concerned about their future prospects in the face of generative AI products.

But there’s no need to not fear, Underdog is here! There is much that GenAI products can do to not only make the Data Science team more effective and productive today but provides new opportunities to acquire new skill sets that make the Data Science team more valuable tomorrow. Let’s explore this evolution.

Data Science Today: GenAI to Become More Productive

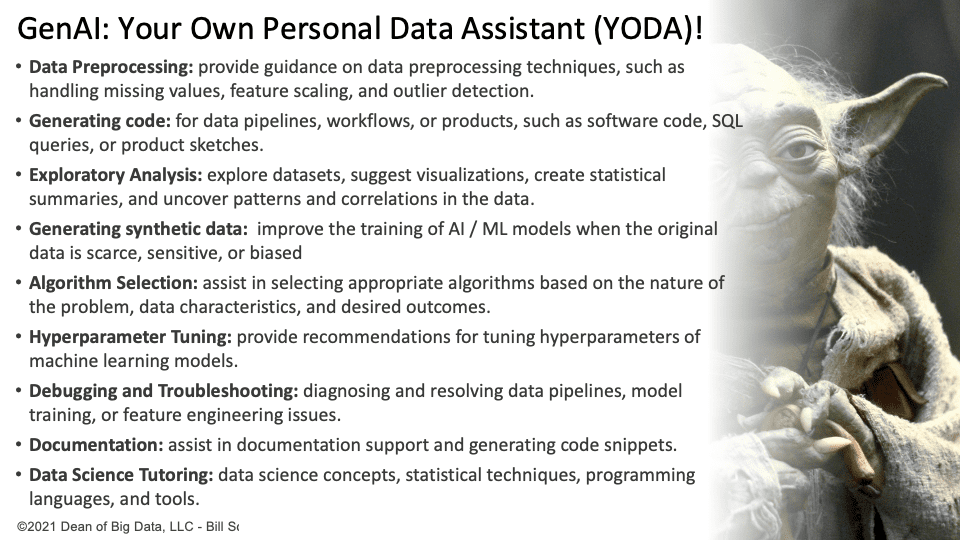

Instead of seeking to compete with these GenAI technologies, maybe one should think about how these GenAI products can become Your Own Data Assistant (YODA) in helping you become more effective and productive data scientists and data engineers. By mastering these products, you can leverage their extensive knowledge base to enhance your own skills, knowledge, effectiveness, and productivity. These GenAI products are already proving to be effective in many data science tasks, including:

- Data Preprocessing: provide guidance on data preprocessing techniques, such as handling missing values, feature scaling, and outlier detection. For example, a GenAI product can suggest different methods to impute missing values based on the type and distribution of the data, such as mean, median, mode, or k-nearest neighbors.

- Generating Code: for data pipelines, workflows, or products, such as software code, SQL queries, or product sketches. For example, a GenAI product can generate code snippets based on natural language descriptions or pseudocode.

- Exploratory Analysis: explore datasets, suggest visualizations, create statistical summaries, and uncover patterns and correlations in the data. For example, a GenAI product can create interactive dashboards or charts based on the type and purpose of the data.

- Generating Synthetic Data: improve the training of AI / ML models when the original data is scarce, sensitive, or biased. For example, a GenAI product can create realistic but fake data that preserves the statistical properties and relationships of the original data.

- Algorithm Selection: assist in selecting appropriate algorithms based on the nature of the problem, data characteristics, and desired outcomes. For example, a GenAI product can recommend different types of algorithms for classification, regression, clustering, or dimensionality reduction problems.

- Hyperparameter Tuning: provide recommendations for tuning hyperparameters of machine learning models. For example, a GenAI product can use techniques such as grid search, random search, or Bayesian optimization to find the optimal values for hyperparameters such as learning rate, regularization parameter, or number of hidden layers.

- Debugging and Troubleshooting: diagnosing and resolving data pipelines, model training, or feature engineering issues. For example, a GenAI product can identify and fix errors in code syntax, logic, or performance.

- Documentation (Yay!!): assist in writing documentation and generating code snippets. For example, a GenAI product can create comments or annotations for code blocks that explain what the code blocks do and how they work.

- Data Science Tutoring: teach data science concepts, statistical techniques, programming languages, and tools. For example, a GenAI product can provide interactive lessons or exercises that cover topics such as probability theory, linear algebra, Python programming, or the TensorFlow framework.

Figure 1: GenAI: Creating Your Own Data Assistant (YODA)

It’s important to remember that the more that these GenAI products are used, the more effective they will become in performing these data science tasks. Attempting to outlearn them is not the right strategy. Personally, I’ve already abdicated my ability to calculate square root to my $1.99 calculator. While it’s uncertain if GenAI products will ever fully solve the “80% of data science time is spent on data preparation” challenge, it’s worth considering how you can use the extra time to improve your personal and professional development, making you a more valuable asset.

Data Science Tomorrow: GenAI to Become More Valuable

Becoming a more productive data scientist or data engineer is great. But it’s like paving the cowpath. To be successful and reach your full potential, don’t become the best cowpath paver. Instead, develop the skills and competencies to reinvent the cowpath.

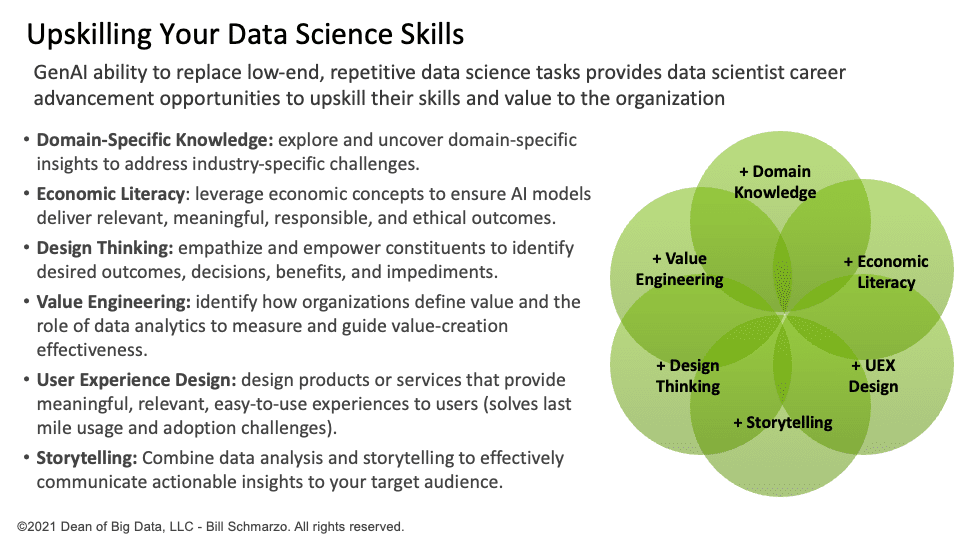

If you really want to transform yourself, invest in areas that enhance the value and applicability of your data science skills and competencies. Here are a few areas where you can reinvest that time freed up by the GenAI products to increase your personal and professional value (Figure 2):

- Domain-specific Knowledge: Explore and uncover domain-specific insights (predicted behavioral and performance propensities) to address industry-specific challenges. For example, a data professional in the healthcare industry can use data and analytics to identify the factors that influence patient outcomes, satisfaction, or loyalty.

- Economic Literacy: Leverage economic techniques and concepts to ensure AI models deliver relevant, meaningful, responsible, and ethical outcomes. For example, a data professional in the banking industry can use cost-benefit analysis, risk assessment, or social welfare functions to evaluate the trade-offs and impacts of different AI models on customers, stakeholders, or society.

- Design Thinking: Empathize and empower key constituents in identifying desired outcomes, key decisions, benefits, and impediments on their journeys. For example, a data professional in the education industry can use design thinking methods such as personas, empathy maps, or journey maps to understand the needs, goals, pain points, or emotions of students, teachers, or administrators.

- Value Engineering: Identify how organizations define and measure value effectiveness and the role of data and analytics to power their value-creation processes and use cases. For example, a data professional in the retail industry can use value engineering techniques such as value stream mapping to identify the sources of value creation or waste reduction for customers or suppliers.

- User Experience Design: Design products or services that provide meaningful, relevant, easy-to-use experiences to users (solve the last mile usage and adoption challenges). For example, a data professional in the entertainment industry can use user experience design principles such as usability testing, feedback loops, or gamification to enhance the engagement, enjoyment, or retention of users.

- Storytelling: Combine data analysis and storytelling to effectively communicate insights to your target audience. The goal is to create engaging narratives that help the audience understand key insights, motivating action or decision-making based on the data. By bridging the gap between data analysis and communication, storytelling with data analysis enhances accessibility and impact, making data-driven stories more memorable and actionable.

Figure 2: Upskilling Your Data Science Skills

Data professionals should embrace this opportunity to update their skill sets. Shifting from algorithmic models to economic models will increase their value to the organization and society overall.

Next-Gen Data Scientist: Thinking Like an Economist

The similarities between AI (and the AI Utility Function) and economics are astounding. Both AI and economic models are based on data and algorithms as well as operational assumptions and “value” projections, where the definition of value, and the dimensions against which value is defined, are critical for driving model effectiveness.

AI models, like economic models, should account for the trade-offs, externalities, or ethical issues that arise from their actions or outcomes. Unfortunately, many AI models are developed without considering the broader economic implications or consequences of their AI-driven decisions and recommendations. This is where learning to “think like an economist” can have a material impact on the effectiveness of your data science teams and their AI models.

Economic models are explicitly concerned with the assumptions and values that underlie their theories and policies. Economic models try to account for the costs and benefits, incentives and constraints, and moral and social implications of their actions or outcomes. The AI Utility Function can be enhanced from an economic mindset by incorporating more human and social factors into their utility functions. The result: AI models that deliver more meaningful, relevant, responsible, and ethical outcomes.

Yea, to advance your data science career, begin to “Think Like an Economist.”