Summary: Here’s some background on how 3rd generation Spiking Neural Nets are progressing and news about a first commercial rollout.

Recently we wrote about the development of AI and neural nets beyond the second generation Convolutional and Recurrent Neural Nets (CNNs / RNNs) which have come on so strong and dominate the current conversation about deep learning. Our research shows that the next generation of neural nets is most likely to be led by Spiking Neural Nets (SNNs) that are a return to the ‘strong’ AI tradition and closely mimic actual brain function.

Basics of Spiking Neural Nets

Unlike CNNs that fire signals to every one of their deep layer connections every time, SNNs are modeled after the fact that in the brain neurons do not constantly communicate with one another. Rather they communicate in spikes of signals or more correctly short trains of spiking signals.

As each spike in the train arrives at a neuron it raises the potential of that neuron until finally a spike arrives that tips it over its potential threshold and it in turn fires, propelling the signal onward, but only to selected neurons within the whole array.
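The accumulate-then-fire behavior described above can be sketched in a few lines of Python. This is only a toy illustration, not any particular research model or chip implementation: the function name `simulate_neuron`, the `weight` and `threshold` values, and the reset-to-zero rule after firing are all assumptions made for the example.

```python
def simulate_neuron(spike_train, weight=0.25, threshold=1.0):
    """Return the time steps at which a toy integrate-and-fire neuron fires.

    spike_train: sequence of 0/1 values, one per time step (1 = incoming spike).
    weight and threshold are illustrative values, not taken from a real model.
    """
    potential = 0.0
    fired_at = []
    for t, spike in enumerate(spike_train):
        if spike:
            potential += weight       # each arriving spike raises the potential
        if potential >= threshold:    # this spike tipped it over the threshold
            fired_at.append(t)        # the neuron fires, sending the signal onward
            potential = 0.0           # assumed rule: reset to zero after firing
    return fired_at


incoming = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 1]
print(simulate_neuron(incoming))  # → [5, 11]
```

With these values the neuron needs four incoming spikes before it fires once, so most time steps produce no output at all. That sparseness is the property the rest of this article builds on.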

Research is showing that information is encoded somehow in the rate, amplitude, or even latency between spikes.
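To make two of those ideas concrete, here is a hedged sketch of rate coding (a stronger stimulus produces more spikes in a time window) and latency coding (a stronger stimulus produces an earlier spike). The function names, the 10-step window, and the linear mappings are assumptions chosen for illustration. They are not claims about how the brain or any specific SNN actually encodes information.

```python
def rate_encode(intensity, window=10):
    """Rate coding sketch: the number of spikes in the window grows with intensity.

    intensity is assumed to be a value between 0.0 and 1.0.
    """
    intensity = min(max(intensity, 0.0), 1.0)
    n = round(intensity * window)  # how many spikes to emit
    # Spread the n spikes roughly evenly across the window using integer math.
    return [1 if (t * n) // window != ((t + 1) * n) // window else 0
            for t in range(window)]


def latency_encode(intensity, window=10):
    """Latency coding sketch: a single spike that arrives earlier for stronger input."""
    intensity = min(max(intensity, 0.0), 1.0)
    spike_time = round((1.0 - intensity) * (window - 1))
    return [1 if t == spike_time else 0 for t in range(window)]


strong, weak = 0.8, 0.2
print(sum(rate_encode(strong)), sum(rate_encode(weak)))                   # spike counts
print(latency_encode(strong).index(1), latency_encode(weak).index(1))    # spike times
```

In the first scheme the information is in how many spikes arrive. In the second it is in when a single spike arrives.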

There is general agreement that there are about 86 billion neurons in the human brain and each neuron may have in the range of 2,000 to 10,000 connections to other neurons. This implies a system with at least 100 trillion connections, with each neuron firing 5 to 50 times a second.
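Multiplying out the figures quoted above gives a quick sense of scale. The product lands in the hundreds of trillions of connections, the same order of magnitude as the round number usually quoted. The variable names are just labels for this back-of-the-envelope check, and the spikes-per-second line is a simple product of the quoted ranges rather than a measured figure.

```python
NEURONS = 86_000_000_000                   # about 86 billion neurons
CONNECTIONS_PER_NEURON = (2_000, 10_000)   # quoted range per neuron
FIRING_RATE_HZ = (5, 50)                   # quoted firing rate per neuron


def scale(count, value_range):
    """Multiply a count by the low and high ends of a range."""
    low, high = value_range
    return count * low, count * high


low, high = scale(NEURONS, CONNECTIONS_PER_NEURON)
print(f"{low / 1e12:.0f} to {high / 1e12:.0f} trillion connections")

slow, fast = scale(NEURONS, FIRING_RATE_HZ)
print(f"{slow / 1e12:.2f} to {fast / 1e12:.2f} trillion spikes per second")
```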

Today’s supercomputers, and even not-so-super computers, are approaching this volume of interconnection, and that firing rate is fairly leisurely compared to most computer chips. Nor do we necessarily need a full brain-sized SNN to achieve some useful results.

What to Expect from SNNs

Once these algorithms reach the same industrial strength we enjoy today in CNNs, researchers expect a number of improvements.

- Since not all ‘neurons’ fire each time, a single SNN neuron could replace hundreds in a sigmoidal NN, yielding much greater efficiency in both power and size.

- Early examples show they can learn from their environment using only unsupervised techniques (no tagged examples).

- They can generalize about their environment by learning from one environment and applying it to another. They can remember and generalize, a truly breakthrough capability.

In the Beginning – DARPA and IBM

The path to SNNs has not been a straight line. Government funding through DARPA, starting in about 2008, attempted to kick-start the effort to model the neuron-to-synapse architecture for computation. Keep in mind that this was in the earliest days of NoSQL and massive parallel processing, and well before the advancement of Convolutional Neural Nets. It was only a year after Hadoop was first commercialized.

In addition to the raw problem solving power that researchers envisioned then, there was also the promise that it could be accomplished with a fraction of the power requirements of existing hardware.

The application of both Convolutional and Spiking neural net algorithms has been constrained by existing chip architecture. Not only were chips required with orders of magnitude more transistors, but those switches needed to be nano-scale. So the advancement of both CNNs and SNNs has marched in lock step with advancements in chip architecture.

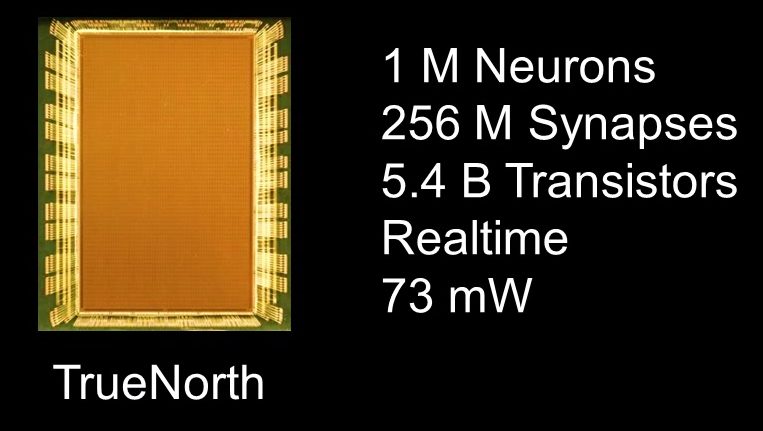

Today, CNNs are rapidly moving off of conventional chip architecture onto speedier GPUs (video game processing), FPGAs, memristors, and even more exotic types. From 2008 to about 2011 however it was IBM that led the way using only about $1 Million in initial DARPA funding to create a chip called TrueNorth.

TrueNorth and Others

TrueNorth was a breakthrough but not one that was initially appreciated. At that time attention was beginning to be diverted to CNNs. TrueNorth, however, was designed to support computation with signal spiking, which is at the core of SNNs but is actually inefficient for CNNs. As a result there were several years when TrueNorth languished as a project, but in its most modern form it is one of the leading chip architectures for SNNs.

Too few people are aware of the resource we possess in the form of the 17 US National Laboratories and the larger group of Federally Funded Research and Development Centers (FFRDCs). The original charge by DARPA’s SyNAPSE program has spread the work among many of these labs including Sandia, Oak Ridge, and Lawrence Livermore. In addition there are significant independent programs at virtually all the great research universities including MIT, Caltech, Stanford, and UCSD. IBM of course remains a central player.

The military and security implications of this race are not lost on other countries, and many well-funded programs exist outside of the US. The Human Brain Project is a consortium of 116 organizations from around the world with primary funding from the European Union. Their creation is the SpiNNaker neuromorphic device.

There is still no agreement about a general purpose approach to either chip design or computation. Some organizations are focusing on one or the other of these, and a few on both at the same time.

“Neuromorphic computing is still in its beginning stages,” says Dr. Catherine Schuman, a researcher working on such architectures at Oak Ridge National Laboratory. “We haven’t nailed down a particular architecture that we are going to run with. TrueNorth is an important one, but there are other projects looking at different ways to model a neuron or synapse. And there are also a lot of questions about how to actually use these devices as well, so the programming side of things is just as important.”

The programming approach varies from device to device, as Schuman explains. “With TrueNorth, for example, the best results come from training a deep learning network offline and moving that program onto the chip. Others that are biologically inspired implementations like Neurogrid (Stanford University’s project), for instance, are based on spike timing dependent plasticity.”

Dr. Schuman sees primarily two ways forward. The first is SNNs as embedded processors on sensors and other devices, enabled by their low power consumption and high-performance processing capability. The second is SNNs as co-processors on large-scale supercomputers, which Oak Ridge uses in its work to develop and safeguard our nuclear arsenal.

State of the art on the hardware side appears to still belong to IBM which recently delivered a supercomputing platform based on TrueNorth to Lawrence Livermore Lab with the equivalent of 16 million neurons and 4 billion synapses. (See above, human brain with 86 billion neurons.) Importantly, it works with the power consumption of about 2.5 watts, about the same as a hearing aid battery.

If you want to follow advancements in this field, keep in mind that advancements in chip hardware and in computational strategies are not necessarily coordinated, and you will have to track each separately.

First Commercial Rollout of a SNN-Based Application

What better way to test theory than in the marketplace? Tech entrepreneurs aren’t the type to wait until the theory is fully locked down. While SNN computation is not yet enterprise strength, just last month the first SNN-based application that I’ve seen was rolled out in the marketplace.

BrainChip Holdings (Aliso Viejo, CA) announced that it is launching a Phase 1 trial of its security monitoring system at one of Las Vegas’ largest casinos, with full rollout to follow the trial. Branded as ‘Game Outcome’ and using patented SNAPvision technology, it will first focus on baccarat tables, followed by blackjack and poker.

Its function is to visually and automatically detect dealer errors by monitoring the video streams from standard surveillance cameras. “Game Outcome is very smart, and can recognize the cards that are played, winning hands, the rules of the game and the payout.”

BrainChip is apparently already publicly traded in Australia (ASX: BRN) and claims significant patent protection of its SNN technology. It’s rolling out a series of its own gambling-monitoring products and pursuing licensing agreements for its IP.

Welcome to 3rd generation brains-on-a-chip.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at: