Summary: Just how much should you trust your AI systems? Best practice points to constant review, strong governance, and the willingness to override results that seem illogical.

Just how much do you trust your AI? This is not meant to be the skeptical consumer’s view about bias or black-box outcomes. Rather, it’s meant to find out whether leaders who are actually implementing AI see it through the proverbial rose-colored glasses, or whether, and how much, skepticism they think is warranted.

As a source, I love the professionally done surveys of AI adoption published by many different vendors. Typically they cover a lot of territory in support of just how wonderful AI can or will be. But not infrequently, an important message can be teased out by looking at just a narrow set of responses. The source for this article is a joint SAS, Intel, Accenture, and Forbes whitepaper called “AI Momentum, Maturity & Models for Success”.

What is particularly laudable about this study of responses from 300+ executives is that all of them are deeply involved in implementing AI at large companies, at the C-level or near it (CDOs, CAOs, CTOs, EVPs of Analytics, and the like). They represent a good worldwide cross section by geography and by business type. They also appear to have been candid in assessing how fully their organizations have embraced AI, and they are well positioned to judge the degree of success or failure so far.

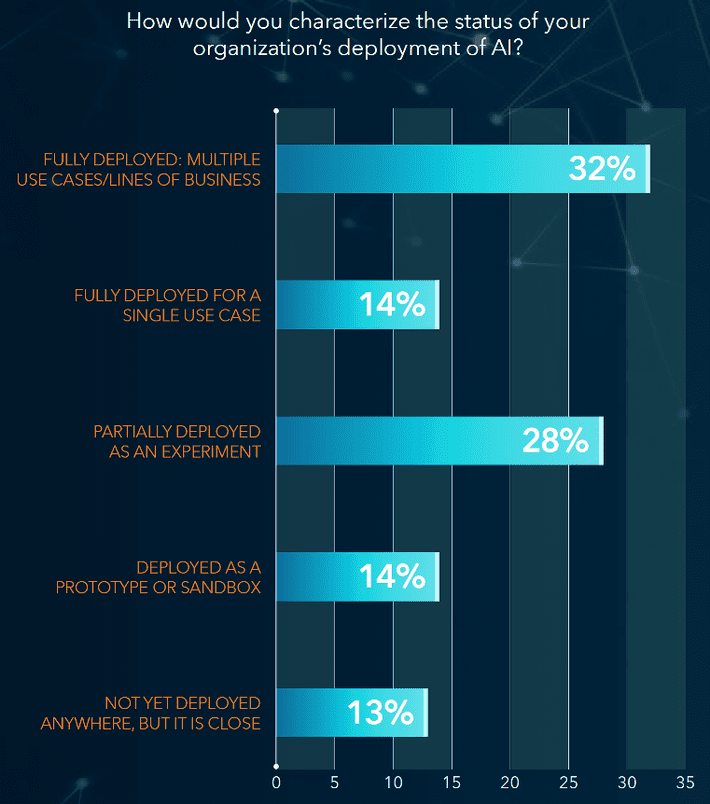

As this chart from the study shows, there is a full spectrum of implementation maturity.

The study authors have helped us out greatly by contrasting the responses of the most successful implementers with those of the less successful. The hope is that the responses that differ most widely between these two groups point to best practices that should be widely adopted.

Here’s the Surprise

The leaders of the most successful AI implementations are prepared to be the most skeptical of results.

This is not to say that they discount the economic benefit they’ve achieved from these implementations or that they are less than enthusiastic about their journey. Rather, they have implemented governance procedures that constantly review AI results for accuracy and benefit.

In an earlier article we focused on the fact that improved model performance does not necessarily mean improved business impact. Thanks to detailed data shared by Booking.com, we know that in a regular review of 26 recent models, 42% showed improved model performance but actually resulted in diminished business outcomes. It’s clear that skepticism is warranted and governance is needed.
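To make the Booking.com lesson concrete, here is a minimal sketch of the kind of check a governance review might run. It assumes each reviewed model has two recorded numbers: the change in its offline performance metric and the change in a business KPI measured in an online experiment. The `ModelReview` class, its field names, the function names, and the sample values are all hypothetical illustrations, not Booking.com’s actual process or data.

```python
from dataclasses import dataclass


@dataclass
class ModelReview:
    """One row of a periodic model review (all names here are hypothetical)."""
    name: str
    offline_metric_delta: float  # change in the offline metric vs. the current model
    business_kpi_delta: float    # change in the business KPI seen in an online test


def flag_for_review(reviews: list[ModelReview]) -> list[str]:
    """Return models whose offline metric improved while the business KPI declined."""
    return [
        r.name
        for r in reviews
        if r.offline_metric_delta > 0 and r.business_kpi_delta < 0
    ]


def divergence_rate(reviews: list[ModelReview]) -> float:
    """Fraction of reviewed models flagged by flag_for_review (0.0 if none reviewed)."""
    if not reviews:
        return 0.0
    return len(flag_for_review(reviews)) / len(reviews)


if __name__ == "__main__":
    # Illustrative numbers only; not data from Booking.com or the survey.
    reviews = [
        ModelReview("ranker_v2", 0.031, 0.004),
        ModelReview("ranker_v3", 0.012, -0.002),
        ModelReview("recs_v5", -0.005, 0.001),
        ModelReview("pricing_v1", 0.020, -0.010),
    ]
    print(flag_for_review(reviews))           # ['ranker_v3', 'pricing_v1']
    print(f"{divergence_rate(reviews):.0%}")  # 50%
```

The rule is deliberately simple: a model is flagged only when its offline metric improves while the business KPI declines, which is exactly the case a purely metric-driven review would wave through.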

Leaving out the undecided responses, 30% of respondents said they have had to rethink, redesign, or override an entire AI-based system due to questionable or unsatisfactory results.

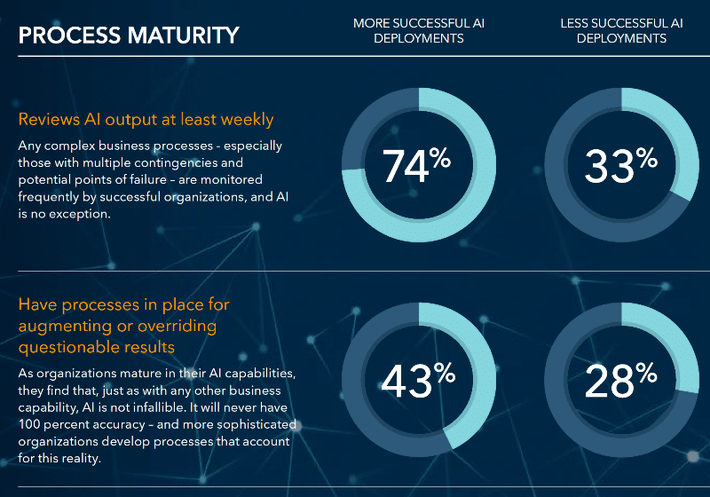

At a rate of almost 2 to 1, the most advanced and successful AI implementers said they had governance practices in place to override questionable results from AI systems and conducted those reviews at least weekly.

Perhaps even more telling, the majority of respondents said that they themselves, the senior executives, oversaw the review process.

The frequency of review among all respondents was also notable:

5% reviewed results hourly

18% reviewed results daily

31% reviewed results weekly

Note in the chart above that 74% of the most successful implementers reviewed at least weekly, compared with 54% (5% + 18% + 31%) for the study as a whole.

And only 4% of all respondents said they almost never reviewed the results of their AI systems.

Kimberly Nevala, Director of Business Strategies for SAS, observes in the study, “If AI is directly influencing your customers or automating critical operational decisions, you can’t take it for granted that the data will be just right, the models will just work and resultant outcomes will meet expectations. As organizations advance down the AI path and see these very real impacts – both positive and negative – they are moving quickly to put that next level of oversight in place.”

Best Practice

As the results of this study and the work shared by Booking.com show, organizations need to be cautious about accepting the results of AI systems. Formal governance procedures need to be in place, and that review needs to be pushed to the highest levels of management. In the immortal words of the Gipper, trust but verify.

About the author: Bill is Contributing Editor for Data Science Central. Bill is also President & Chief Data Scientist at Data-Magnum and has practiced as a data scientist since 2001. His articles have been read more than 2.1 million times.

He can be reached at: