Outliers are one of those issues we come across almost every day in machine learning modelling. Wikipedia defines outliers as “an observation point that is distant from other observations.” In other words, a minority of cases in the data set differ from the majority of the data. I would like to classify outliers into two main categories: non-natural and natural.

Non-natural outliers are those caused by measurement errors, faulty data collection, or wrong data entry. Natural outliers, by contrast, are genuine observations, such as fraudulent transactions in banking data or High Net Worth Individual (HNI) customers in loan data. Let’s limit the scope of this blog to natural outliers only.

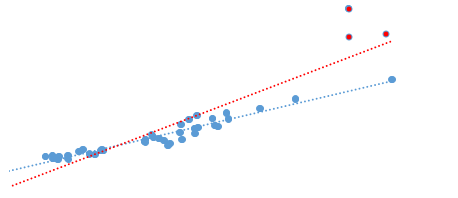

Depending on the data, we generally treat outliers by removing the data points, binning the data, creating a separate model, or transforming the data. The general thinking behind this treatment is that your model could be biased towards the outliers and will therefore be less accurate.
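Three of these treatments (removing, binning, and transforming) can be sketched in a few lines of Python. This is only a minimal illustration on made-up CLV figures: the 1.5 × IQR rule used to flag outliers, the bin edges, and all function names are my assumptions, not something the data dictates.

```python
import math
import statistics

def iqr_bounds(values, k=1.5):
    """Return (low, high) fences using the k * IQR rule (an assumed convention)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def remove_outliers(values, k=1.5):
    """Treatment 1: drop every point that falls outside the IQR fences."""
    low, high = iqr_bounds(values, k)
    return [v for v in values if low <= v <= high]

def bin_values(values, edges):
    """Treatment 2: replace each value with its bin index.

    `edges` are ascending upper bounds; a value equal to an edge
    stays in the lower bin.
    """
    return [sum(v > e for e in edges) for v in values]

def log_transform(values):
    """Treatment 3: compress the scale with log(1 + x)."""
    return [math.log1p(v) for v in values]

# Hypothetical CLV values in dollars: six typical customers and one outlier.
clv = [900, 950, 1000, 1050, 1100, 1200, 10000]

print(remove_outliers(clv))           # the 10000 customer is dropped
print(bin_values(clv, [1000, 5000]))  # low / medium / high buckets
print(log_transform(clv))             # outlier is pulled much closer to the rest
```

Each treatment shrinks the influence of the $10,000 customer on the model, which is exactly the effect the rest of this post questions.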

But is it ‘always’ necessary to treat outliers? What if we do not treat the outlier data at all?

Suppose you are working on Customer Lifetime Value (CLV) prediction. After analyzing the data, you figure out that the outliers are your best customers, with a CLV of $10,000 compared to $1,000 for typical customers. In this case, you have to check whether the dollar cost of missing these best customers is higher than the dollar benefit of accurately forecasting the customers with a CLV of $1,000. If it is, it is OK to have a model biased towards high CLV (the outliers), as long as the business value delivered by the model is in line with the business objective.
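To make that trade-off concrete, here is a rough sketch that scores two hypothetical models on both an accuracy metric and a business metric. Everything in it is assumed for illustration: the CLV figures and predictions are invented, and the business objective (target the `k` customers the model ranks highest, then count the actual CLV captured) is just one possible way to put a dollar value on a model.

```python
import statistics

def median_abs_error(actual, predicted):
    """Typical per-customer error in dollars; robust to a single large miss."""
    return statistics.median(abs(a - p) for a, p in zip(actual, predicted))

def value_captured(actual, predicted, k):
    """Total actual CLV of the k customers the model ranks highest."""
    ranked = sorted(range(len(actual)), key=lambda i: predicted[i], reverse=True)
    return sum(actual[i] for i in ranked[:k])

# Hypothetical actual CLV: seven typical customers and one $10,000 outlier.
actual = [1000, 1100, 900, 1050, 950, 10000, 1000, 980]

# Model A: outliers treated; very accurate on typical customers,
# but it predicts the best customer as ordinary.
pred_a = [1000, 1090, 910, 1060, 940, 1000, 1010, 980]

# Model B: outliers kept; noisier on typical customers,
# but it ranks the best customer first.
pred_b = [1200, 1250, 700, 1300, 800, 7000, 1150, 1100]

for name, pred in (("A", pred_a), ("B", pred_b)):
    err = median_abs_error(actual, pred)
    value = value_captured(actual, pred, k=2)
    print(f"Model {name}: median abs error = {err}, value captured = {value}")
```

With these made-up numbers, model A has the smaller typical error while model B captures far more customer value, which is the situation where leaving the outliers untreated can be the better business decision.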

Models should first be evaluated on the business objective, alongside other performance metrics. The treatment of outliers then becomes a balance between the type of outlier, the business objective, and the accuracy of the model. In my opinion, it is OK to have a less accurate model with high business value rather than a model that is merely highly accurate.

Please feel free to share your views!