In many of my presentations and lectures, I have made the following declaration:

In Big Data, it isn’t the volume of data that’s interesting, it’s the granularity; it’s the ability to build detailed analytic or behavioral profiles on every human and every device that ultimately drives monetization.

Ever-larger volumes of aggregated data enable organizations to spot trends – what products are hot, what movies or TV shows are trendy, what restaurants or destinations are popular, etc. This is all interesting information, but how do I monetize these trends? I can build more products or create more TV episodes or promote select destinations, but to make those trends actionable – to monetize these trends – I need to get down to the granularity of the individual.

I need detailed, individual insights with regard to who is interested and the conditions (price, location, time of day/day of week, weather, season, etc.) that attract them. I need to understand each individual’s behaviors (tendencies, propensities, inclinations, patterns, trends, associations, relationships) in order to target my efforts to drive the most value at the least cost. I need to be able to codify (turn into math) customer, product and operational behaviors such as individual patterns, trends, associations, and relationships.

I need Analytic Profiles.

Role of Analytic Profiles

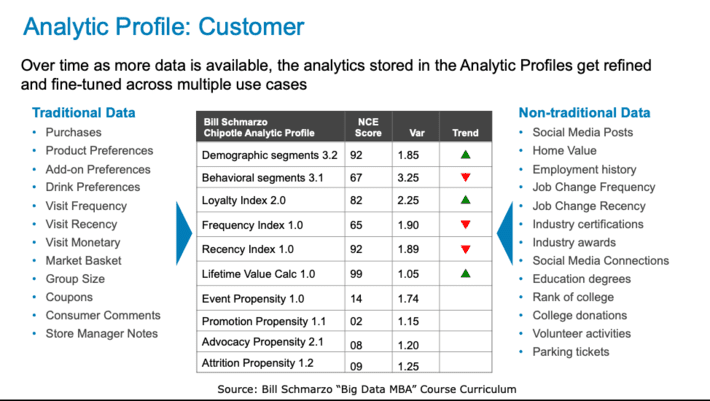

Analytic Profiles provide a structure for capturing an individual’s behaviors (tendencies, propensities, inclinations, patterns, trends, associations, relationships) in a way that facilitates the refinement and sharing of these digital assets across multiple business and operational use cases. Analytic Profiles work for any human, including customers, prospects, students, teachers, patients, physicians, nurses, engineers, technicians, mechanics, athletes, coaches, accountants, lawyers, managers, etc. Figure 1 shows an example of a customer analytic profile.

Figure 1: Customer Analytic Profile
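To make the idea of a shareable profile structure a little more concrete, here is a minimal sketch in Python. The class name `AnalyticProfile`, its fields, the score names and the 0-100 scale are all assumptions made for illustration; they are not taken from Figure 1.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class AnalyticProfile:
    """Hypothetical container for one individual's codified behaviors."""
    entity_id: str
    entity_type: str  # e.g. "customer", "technician", "patient"
    # Score name -> value; the names and the 0-100 scale are illustrative.
    scores: Dict[str, float] = field(default_factory=dict)

    def update_score(self, name: str, value: float) -> None:
        """Record or refine a score so that other use cases can reuse it."""
        self.scores[name] = value

    def get_score(self, name: str, default: float = 0.0) -> float:
        """Look up a score, falling back to a default if it is not yet known."""
        return self.scores.get(name, default)


profile = AnalyticProfile(entity_id="cust-001", entity_type="customer")
profile.update_score("retention", 72.0)  # e.g. produced by a retention use case
profile.update_score("advocacy", 55.0)   # e.g. produced by a marketing use case
print(profile.get_score("retention"))
```

The point of the sketch is only that each use case writes its scores back to one shared record, so the next use case can build on them rather than start from scratch.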

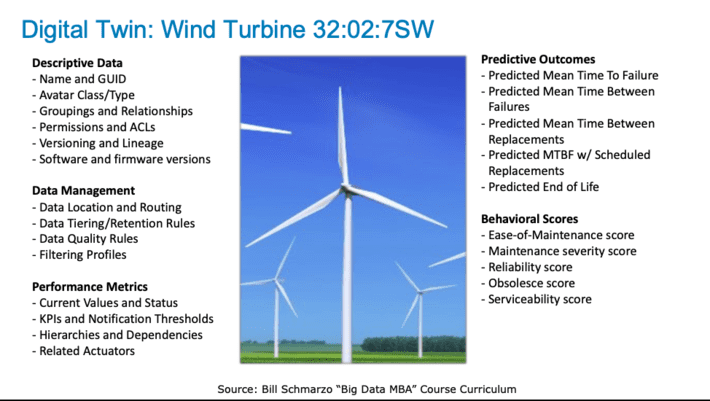

In the same way that we can capture individual (human) behaviors with Analytic Profiles, we can leverage Digital Twins to capture the behaviors of individual assets. A Digital Twin is a digital representation of an asset (device, product, thing) that enables companies to better understand and predict the asset’s performance, uncover new revenue opportunities, and optimize their operations (see Figure 2).

Figure 2: Digital Twin

Analytic Profiles are much more than just a “360-degree view of customer” (an unactionable phrase that I loathe). Analytic Profiles possess the ability to capture and ultimately act upon an individual’s propensities, tendencies, inclinations, patterns, relationships and behaviors. And a Digital Twin is much more than just demographic and performance data about a device. To fully monetize the Digital Twin concept, one must also capture predictions about likely performance and behaviors that can lead to prescriptive and preventative actions.

Again, it’s the granularity of humans and devices that leads to monetization opportunities. And I can monetize those individual humans and assets using common analytic techniques.

Analytic Technique: Cohort Analysis

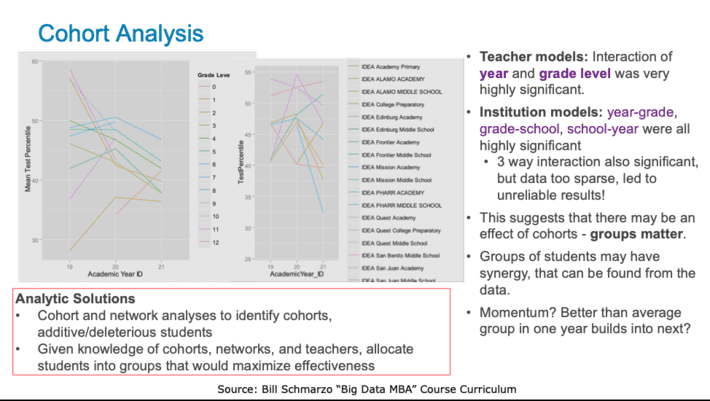

Cohort Analysis is a subset of human behavioral analytics that takes purchase and engagement data and breaks down the performance assessments into related (or “like”) groups of humans. Cohort Analysis can predict the products groups of customers might want to buy and the actions they are likely to take. Cohort analysis allows a company to see patterns and trends across the lifecycle of a group of humans. By quantifying those patterns and trends, a company can adapt and tailor its service to meet the unique needs of those specific cohorts.

While cohort analysis is typically done for groups or clusters of humans, it can also work for clusters of devices. Organizations can use cohort analysis to understand the trends and patterns of related or “like” devices over time, and then use those insights to optimize their maintenance, repair, upgrade and end-of-line decisions (see Figure 3).

Figure 3: Cohort Analysis
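As a rough illustration of cohort analysis applied to devices, the sketch below groups devices by install year and compares an average metric across those cohorts. The device records, the choice of install year as the cohort key, and the "months to first failure" metric are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical device records: (device_id, install_year, months_to_first_failure)
devices = [
    ("press-1", 2015, 30),
    ("press-2", 2015, 34),
    ("lathe-1", 2018, 48),
    ("lathe-2", 2018, 52),
]


def cohort_averages(records):
    """Group records by cohort key (install year) and average the metric."""
    cohorts = defaultdict(list)
    for _device_id, install_year, months in records:
        cohorts[install_year].append(months)
    return {year: mean(values) for year, values in cohorts.items()}


averages = cohort_averages(devices)
print(averages)
```

In practice the cohort key could be anything that makes devices "like" each other (model, operating environment, duty cycle); install year is just the simplest stand-in.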

See the blog “Cohort Analysis – Cohort Analysis in the Age of Digital Twins” for more details on the application of cohort analysis in the world of IoT.

Analytic Technique: Micro-to-Macro Analytics

Population health focuses on understanding the overall health and the potential health outcomes of groups of individuals. For example, population health may predict a 25% uptick in influenza outbreaks in the Midwest in January. Typically, population health organizations would then advise their patients to get flu shots immediately.

But maybe there are some important questions that need to be answered before issuing a blanket flu shot recommendation, such as:

- Should everyone get a shot or only those who are most likely to contract the influenza?

- Should everyone get a shot immediately, or is there a way to delay some of the shots in order to optimize the current vaccine supply while more is being manufactured?

- Are there some patients (e.g., elderly, infants, cancer patients) where the side effects of the shot are riskier than the influenza itself?

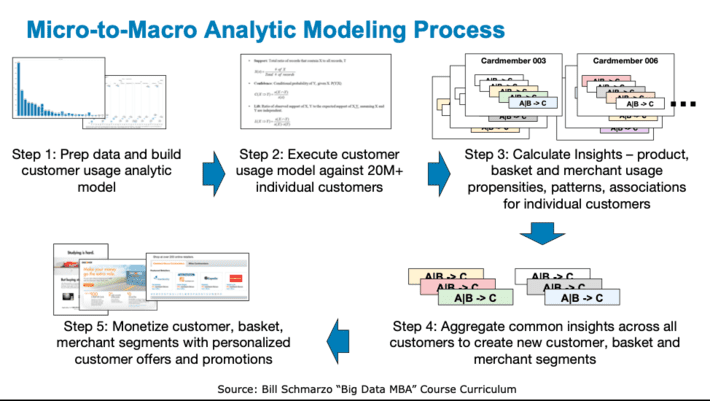

At the macro or aggregated level, it is nearly impossible to make those decisions. However, at the granularity of the individual patient, we can leverage patient analytic profiles (with detailed care and treatment history coupled with relevant healthcare and wellness scores) that allow healthcare providers to make specific decisions about who should get the shots and when, given the potential benefits of the shots weighed against the potential side effects.

Those individual recommendations can then be aggregated into a group level to yield more insights and recommendations about who else should be getting shots using analytic techniques such as cohort analysis (see Figure 4).

Figure 4: Micro-to-Macro Analytic Modeling Process
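The micro-to-macro flow can be sketched as two steps: decide per individual, then roll the decisions up to the group. Everything in the sketch below is assumed for illustration: the patient records, the two risk scores, the 0.7 threshold and the decision rule itself are placeholders, not clinical logic.

```python
from collections import Counter

# Hypothetical patient profiles; scores in [0, 1] are made up for illustration.
patients = [
    {"id": "p1", "region": "Midwest", "infection_risk": 0.8, "side_effect_risk": 0.1},
    {"id": "p2", "region": "Midwest", "infection_risk": 0.2, "side_effect_risk": 0.4},
    {"id": "p3", "region": "Midwest", "infection_risk": 0.6, "side_effect_risk": 0.2},
]


def recommend(patient):
    """Micro step: weigh benefit against side-effect risk for one patient."""
    if patient["side_effect_risk"] >= patient["infection_risk"]:
        return "defer"
    # Placeholder threshold: higher-risk patients are scheduled first.
    return "now" if patient["infection_risk"] >= 0.7 else "later"


def aggregate(patient_list):
    """Macro step: roll individual recommendations up by region."""
    summary = {}
    for p in patient_list:
        summary.setdefault(p["region"], Counter())[recommend(p)] += 1
    return summary


print(aggregate(patients))
```

The aggregated counts are what a planner would use to stage vaccine supply, while each individual recommendation stays attached to the patient it was made for.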

See the blog “Micro-to-Macro Analytics – The Challenge of Macro Analytics” for more details on the Micro-to-Macro Analytics challenge.

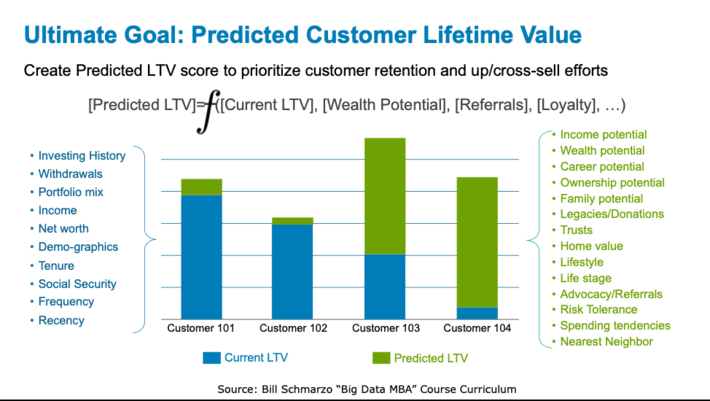

Analytic Technique: Predicted Customer Lifetime Value

The traditional Customer Lifetime Value (LTV) score is based upon historical transactions, engagements and interactions. For example, you would leverage historical purchases, returns, market baskets, product margins and sales and support engagement data to create a “Customer LTV” score. This score is a great starting point for optimizing the organization’s financial and people resources around the organization’s “most valuable” customers.

What if instead of using this “current” Customer LTV, we leveraged new data sources and new analytic techniques to create a predicted Customer LTV score that predicts a customer’s value to the organization? This predicted Customer LTV would leverage second level metrics or scores – Credit score, Retention Score, Fraud Score, Advocacy Score, Frequency Score, Likelihood to Recommend score – to create this Predicted Customer LTV (see Figure 5).

Figure 5: Predicted Customer Lifetime Value

For example, the “Predicted Customer LTV” score in Figure 5 highlights the following:

- Customers 101 and 102 are tapped out and probably should not be the focus of heavy sales and marketing efforts (you don’t want to ignore Customers 101 and 102, but they should not be the primary focus of future sales and marketing initiatives).

- On the other hand, Customers 103 and 104 have significant untapped potential; these are the types of customers at which to target sales, marketing, support and product development investments in order to capture that untapped potential.

This “Predicted Customer LTV” score would be much more effective in helping organizations optimize their sales, marketing, service, support and product development resources.
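One simple way to picture how second-level scores might roll up into a predicted LTV is a weighted sum, compared against the current LTV to estimate untapped potential. This is only a sketch under stated assumptions: the weights, the 0-100 scale, the clamping and the sample customer are invented here, and a real model would more likely be learned from data than hand-weighted.

```python
# Hypothetical weights for the second-level scores (each score on a 0-100 scale).
# Fraud risk lowers predicted value, so its weight is negative.
WEIGHTS = {
    "credit": 0.2,
    "retention": 0.3,
    "advocacy": 0.2,
    "frequency": 0.3,
    "fraud": -0.2,
}


def predicted_ltv(scores):
    """Weighted sum of second-level scores, clamped to the 0-100 range."""
    raw = sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)
    return max(0.0, min(100.0, raw))


def untapped_potential(current_ltv, scores):
    """Gap between predicted and current LTV; positive means room to grow."""
    return predicted_ltv(scores) - current_ltv


# Illustrative customer, not one of the customers shown in Figure 5.
customer_a = {"credit": 80, "retention": 90, "advocacy": 70, "frequency": 60, "fraud": 10}
print(untapped_potential(current_ltv=40, scores=customer_a))
```

A large positive gap would flag a customer worth further investment, while a gap near zero would suggest the customer is close to tapped out.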

See the blog “What Defines Your ‘Most Valuable’ Customers?” for more details on the concept of the Predicted Customer Lifetime Value; it’s a game changer for re-framing how you value your customers!!

IoT: Bringing It All Together

IoT offers the potential to blend analytic profiles and digital twins to yield new sources of customer, product and operational value. In particular, Edge Analytics provides the opportunity to bring a real-time or near real-time dimension into our monetization discussions; to improve our ability to catch either humans or devices in the act of doing something where augmented intelligence can make that human or device interaction more effective.

We can leverage IoT sensors and edge analytics to transform “connected” entities into “smart” entities. For example, with smart factories, integrating Analytic Profiles (for technicians, maintenance engineers, facilities managers) and Digital Twins (for milling machines, power presses, grinding machines, lathes, cranes, compressors, forklifts) can add real-time, low-latency analytics and decision optimization capabilities across each “smart” factory use case (see Table 1).

| Potential “Smart Factory” Use Cases | |
|---|---|
| Demand Forecasting | Worker Scheduling Optimization |
| Production Planning | Time-to-market Reduction |
| Factory Yield Optimization | Plant Safety and Security |
| Facility Management | Quality Cost Reduction |
| Production Flow Monitoring | Asset Utilization Optimization (OEE) |
| Predictive Maintenance | Packaging Optimization |
| Inventory Reduction | Logistics and Supply Chain Optimization |
| Energy Efficiency | Sustainability |
| Lead Time Reduction | … |

Table 1: Potential “Smart Factory” Use Cases

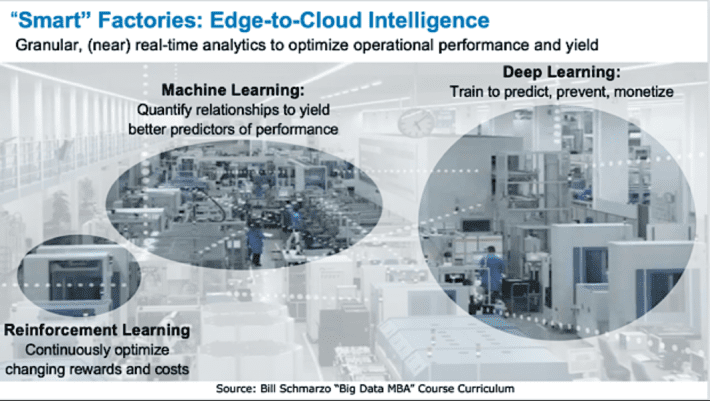

To exploit the granularity and real-time/near real-time benefits offered by IoT and Edge Analytics, we will need an IoT Analytics architecture that puts the right advanced analytic capabilities at the right points in the IoT architecture, for example (see Figure 6):

- Reinforcement Learning at the edge to balance the ever-changing sets of rewards and costs to achieve optimal results by maximizing rewards (energy usage, production, cooling, heating) while minimizing costs (wear & tear, depletion, overtime costs, energy consumption).

- Machine Learning to uncover and quantify trends, patterns, associations and relationships buried in data that might yield better predictors of performance including clustering and classifying of similar activities and outcomes, association and correlation of related activities and outcomes, and regression analysis to quantify cause-and-effect.

- Deep Learning to uncover dangerous or advantageous states in order to predict, prevent and monetize via determination of image contents, discovering detailed nuances of causation, supporting natural language conversations, and video surveillance to identify, count, measure and alert.

Figure 6: “Smart” Factories: Edge-to-Cloud Intelligence
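To give a feel for the reward-versus-cost balancing described for Reinforcement Learning at the edge, here is a minimal sketch using an epsilon-greedy multi-armed bandit, one of the simplest reinforcement-learning settings (it has no notion of state, unlike a full RL agent). The speed settings, output values and wear costs are hypothetical, and the simulated reward is noise-free to keep the example easy to follow.

```python
import random


def epsilon_greedy(actions, net_reward, steps=200, epsilon=0.1, seed=0):
    """Estimate the average net reward (reward minus cost) of each action.

    Tries every action once, then mostly exploits the best estimate while
    exploring a random action with probability `epsilon`.
    """
    rng = random.Random(seed)
    counts = {a: 0 for a in actions}
    values = {a: 0.0 for a in actions}
    for step in range(steps):
        if step < len(actions):
            action = actions[step]                 # initial pass over all actions
        elif rng.random() < epsilon:
            action = rng.choice(actions)           # explore
        else:
            action = max(actions, key=values.get)  # exploit
        observed = net_reward(action)
        counts[action] += 1
        # Incremental mean update of the value estimate.
        values[action] += (observed - values[action]) / counts[action]
    return values


def simulated_net_reward(speed):
    """Hypothetical machine: output gained minus wear cost at each speed."""
    output = {"low": 5.0, "medium": 9.0, "high": 11.0}[speed]
    wear = {"low": 1.0, "medium": 2.0, "high": 6.0}[speed]
    return output - wear


estimates = epsilon_greedy(["low", "medium", "high"], simulated_net_reward)
best = max(estimates, key=estimates.get)
print(best)
```

In this toy setup the highest output setting is not the best choice once wear is counted, which is the kind of trade-off an edge agent would keep re-evaluating as rewards and costs shift.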

Summary

As IT and OT professionals seek to deploy IoT, don’t get seduced into starting with the sensors. Start by identifying, validating, valuing and prioritizing the use cases that can exploit real-time data generation and edge analytics to derive and drive new sources of customer, product and operational value. And in the end, it isn’t the volume of data that’s valuable, it’s the granularity.