Image by Eleanor Smith from Pixabay

Networking technologists and social scientists alike know what Metcalfe’s Law is. It’s popularly known as the Network Effect, which describes the outsized value networks can bring to resources: by Metcalfe’s reckoning, a network’s value grows roughly with the square of the number of its connected users, not merely in proportion to it. The more users who are able to discover and point to a given resource, the larger the Network Effect.
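
Metcalfe’s Law is usually written as value proportional to n², because n users can form n(n−1)/2 distinct pairwise connections. The short Python sketch below simply counts those possible links; treating link count as a stand-in for “value” is the simplifying assumption behind the law, not a measurement of any real network.

```python
def possible_links(n: int) -> int:
    """Number of distinct pairwise connections among n users: n(n-1)/2."""
    return n * (n - 1) // 2

# Compare linear growth in users with roughly quadratic growth in possible links.
for users in (2, 10, 100, 1000):
    print(f"{users:>5} users -> {possible_links(users):>7} possible links")
```

Going from 10 users to 100 (a 10x increase) takes the number of possible links from 45 to 4,950 (a 110x increase), which is the intuition behind the Network Effect.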

What’s an example of the Network Effect in practice? Well, the Interactive Advertising Bureau (IAB) commissioned a study in 2021 that found the internet economy was worth $2.45 trillion in 2020, accounting for 12 percent of US gross domestic product for that year. Between 2008 and 2020, the internet economy doubled four times, according to the IAB study.

Without the broad adoption of scalable, extensible open networking standards like TCP/IP and HTTP, we can be sure that the internet economy would not have kept doubling every few years through 2020. Before TCP/IP, the norm was proprietary, purpose-specific networks like XNS (Xerox), DECnet (Digital Equipment Corp.) and SNA (IBM), each with a comparatively small adoption footprint.

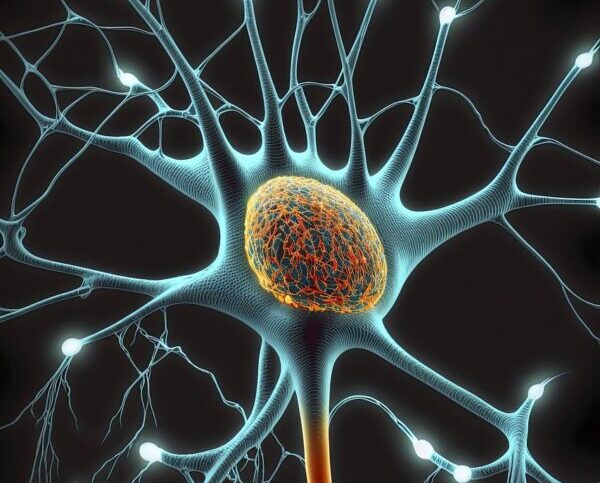

Neural networks leverage the power of networking in another way: as a means of generating scaled-out, N-dimensional models with predictive power. Machine learning helps fit the shape of a suitable predictive model to a use case, such as estimating which word comes next in a string of words.
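
To make “estimating which word comes next” concrete, here is a deliberately tiny Python sketch. It swaps in a bigram frequency count for a neural network: from a toy corpus (invented for illustration), it learns which word most often follows another. Neural language models learn far richer, N-dimensional representations, but the prediction task has the same basic shape.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional

def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if `word` is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Toy corpus, invented for illustration.
corpus = "the graph links data and the graph links people and the model reads data"
model = train_bigrams(corpus)
print(predict_next(model, "the"))    # "graph" follows "the" twice, "model" once
print(predict_next(model, "graph"))  # "links" is the only word seen after "graph"
```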

Unfortunately, the ecosystem surrounding neural networks isn’t networked at the data layer. In fact, the pervasive form of data management in today’s AI environments functions much less like a smooth-running system (i.e., one continually and efficiently connected to resources) and much more like the software industry as a whole (i.e., fragmented, duplicative and wasteful).

Metcalfe’s Second Law and how to tap its value

Ethernet pioneer, Xerox PARC luminary and 3Com founder Bob Metcalfe coined the term “Network Effect.” He describes the connected contexts in a knowledge graph and a less centralized architecture as a key means of delivering the “Network Effect to the Nth power.” (See my 2023 DSC post at https://www.datasciencecentral.com/collaborative-visual-knowledge-graph-modeling-at-the-system-level/ for more information.) This set of effects constitutes Metcalfe’s Second Law.

The Second Law underscores the extent of network power that can be tapped into when the data layer itself is networked in a scalable, logically consistent way.

A knowledge graph is a contextually smart, self-describing network for desiloing and sharing data efficiently at scale. Well-designed knowledge graphs reuse, rather than duplicate, resources and optimize their distribution. They do this by representing people, places, things and ideas in a uniquely identified way with the help of web address identifiers (URIs), and by connecting those entities through any-to-any relationships.
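
A minimal Python sketch of that idea, assuming an RDF-style model in which every fact is a subject-predicate-object triple. The `example.org` identifiers and predicate names are hypothetical. Because each entity has exactly one identifier, a second statement about Berlin points at the same node instead of creating a duplicate record, and any entity can be related to any other.

```python
from collections import namedtuple

# A triple states one relationship: subject --predicate--> object.
Triple = namedtuple("Triple", ["subject", "predicate", "object"])

# Hypothetical web-address identifiers; any stable URIs would do.
EX = "https://example.org/id/"
alice = EX + "person/alice"
acme = EX + "org/acme"
berlin = EX + "place/berlin"

graph = {
    Triple(alice, EX + "worksFor", acme),
    Triple(acme, EX + "locatedIn", berlin),
    Triple(alice, EX + "livesIn", berlin),
}

# Re-asserting a known fact adds nothing: the set keeps one copy per triple,
# and every statement about Berlin reuses the same identifier.
graph.add(Triple(alice, EX + "livesIn", berlin))

def relationships(graph, node):
    """Return every triple that mentions `node` as subject or object."""
    return sorted(t for t in graph if node in (t.subject, t.object))

for t in relationships(graph, berlin):
    print(t.subject, t.predicate, t.object)
```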

In this Tinkertoy-style way (as in the photo below), one knowledge graph can easily connect to another so the two can share resources, and those two can connect to others, and so on.
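
The Tinkertoy idea can be sketched in a few lines of Python. The assumption here is that two separately maintained graphs (hypothetically, a sales graph and a logistics graph) use the same identifier for the same supplier. Connecting them is then a plain set union, and a breadth-first traversal can follow relationships across what used to be a boundary. All identifiers and predicate names are invented for illustration.

```python
from collections import deque

# Two independently maintained graphs of (subject, predicate, object) triples.
# What matters is that both use the SAME identifier for the same supplier.
SUPPLIER = "https://example.org/id/org/acme"
ORDER = "https://example.org/id/order/42"
PORT = "https://example.org/id/place/rotterdam"
COUNTRY = "https://example.org/id/country/nl"

sales_graph = {(ORDER, "placedWith", SUPPLIER)}
logistics_graph = {
    (SUPPLIER, "shipsFrom", PORT),
    (PORT, "inCountry", COUNTRY),
}

# Connecting the graphs is a set union; shared identifiers become join points.
combined = sales_graph | logistics_graph

def reachable(triples, start):
    """Breadth-first search over outgoing edges; returns every node reachable from start."""
    adjacency = {}
    for s, _, o in triples:
        adjacency.setdefault(s, []).append(o)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adjacency.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

print(len(reachable(sales_graph, ORDER)))  # the order and its supplier only
print(len(reachable(combined, ORDER)))     # now also the port and the country
```

Neither graph had to copy the other’s data; the shared identifier alone is enough to link them, and a third graph that reuses any of these identifiers would join in the same way.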

Such an architecture, combined with consistent, contextualized graph data modeling, can make a single representation findable, accessible, interoperable and reusable (FAIR) across enterprise boundaries.

Children in Laos playing with Tinkertoy sets (Blue Plover on Wikimedia Commons, 2012).

And so in this scalable way, organizations can create their own mini versions of continually evolving mirrorworlds: digital twins of the business that can interact and grow symbiotically.

AI needs contiguous, contextualized, organically evolving FAIR data

Back to the brain/body metaphor and how to boost the potential of today’s neural nets with appropriate, data-centric architecture and design. Right now, most enterprises are struggling with their own data because the accepted architecture, in which each application gets its own dedicated database, tolerates or even encourages duplication and fragmentation.

Moreover, stranding data and under-resourcing efforts to access and reuse it are sure ways to make its quality, limited to begin with, erode quickly.

The benefits of creating, maintaining and growing a data resource that is findable, accessible, interoperable and reusable (FAIR) are quite clear at this point. The pharmaceutical industry, which has to manage data at the molecular level for drug discovery purposes, first identified and embraced FAIR principles almost a decade ago. Other industries have been following pharma’s lead.

In a 2020 blog post, enterprise knowledge graph platform provider Stardog articulated how a semantic standards-based solution like theirs makes it possible to establish and organically grow an organization’s FAIR data capabilities. Highlights include these:

- Findability: Users begin their data layer journeys at different starting points. Because semantic graphs are built on a web of relationships, newly ingested sources are easy to map and model, so they can be found from any other part of the connected graph.

- Accessibility: A query language such as SPARQL allows complex, virtualized explorations of the graph. Stardog supports other standard query languages such as GraphQL and Gremlin, as well as SQL and RESTful API access.

- Interoperability: Unique IDs as mentioned above, an adaptive graph data model and support for linked data standards mean that both interoperability and control become feasible at scale.

- Reusability: Standard semantic metadata makes zero-copy analytics possible, Stardog points out. The standard Shapes Constraint Language (SHACL) makes it possible to find and correct inconsistencies. Both features support data quality and enrichment, and therefore reusability.
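
SHACL itself is a W3C standard with its own RDF vocabulary and dedicated validators. The Python sketch below is not SHACL; it is a hypothetical, much-simplified analogue that shows the idea behind a shape: declare what a well-formed node looks like (here, a person needs at least one string `name` and, if present, an integer `birthYear`), then report every node that falls short. The rule names, properties and data are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PropertyRule:
    """Simplified analogue of a SHACL property shape: a path, a minimum count, a value type."""
    path: str
    min_count: int = 0
    value_type: Optional[type] = None

# Hypothetical shape for person nodes.
PERSON_SHAPE = [
    PropertyRule("name", min_count=1, value_type=str),
    PropertyRule("birthYear", value_type=int),
]

def validate(node_id, triples, rules):
    """Return human-readable violations for one node, in the spirit of a validation report."""
    violations = []
    for rule in rules:
        values = [o for s, p, o in triples if s == node_id and p == rule.path]
        if len(values) < rule.min_count:
            violations.append(
                f"{node_id}: expected at least {rule.min_count} '{rule.path}', found {len(values)}"
            )
        if rule.value_type is not None:
            for v in values:
                if not isinstance(v, rule.value_type):
                    violations.append(
                        f"{node_id}: '{rule.path}' value {v!r} is not {rule.value_type.__name__}"
                    )
    return violations

triples = [
    ("ex:alice", "name", "Alice"),
    ("ex:alice", "birthYear", 1990),
    ("ex:bob", "birthYear", "nineteen-eighty"),
]

for person in ("ex:alice", "ex:bob"):
    print(person, validate(person, triples, PERSON_SHAPE) or "conforms")
```

Here `ex:alice` conforms, while `ex:bob` is flagged twice: once for the missing name and once for a birth year stored as text. Catching such inconsistencies where the data lives, rather than in each consuming application, is what makes the data safer to reuse.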

A network of networks: A systems approach to smarter AI

For decades, Metcalfe has underscored the power of connections. The more connected the environment, the more useful and popular it becomes. Is there any reason the methods data scientists and engineers are using aren’t more connected and cohesive at the data layer, where it matters most?

Given today’s automation, is there any reason data isn’t shared with its associated context so that it can be more accurate and less duplicative? Old habits, inertia and ignorance just aren’t good enough reasons to stick with methods that are so clearly inferior.