Image by Andrew Martin from Pixabay

Data governance expert Malcolm Chisholm asked a rhetorical question on LinkedIn in June 2024: “Is the Cloud the biggest mistake in the history of IT?”

For some, he says, it is. “The execs in these companies are in shock at the invoices they are seeing from their cloud providers.”

“The next stage,” Chisholm thinks, “is denial, and after a few months of that, anger. Expect CIO/CTO ‘turnover’ at that point. Beyond that, bargaining, depression, and acceptance are unlikely as something will have to be done… Expect a backlash.”

Cloud computing helps scale the sharing of a resource, whether that resource is data, logic or a combination of the two. The basic technology is a key enabler. But what could make all the difference is whether a future iteration of cloud computing is used in a boundary-crossing way.

That’s why the direction of the next generation of cloud services matters. To be suitable and efficient for AI, next-generation cloud services should feature a deduplicated contextual computing capability: a contiguous, contextualized data space that eliminates duplication. Such a space can capture how your business operates as a system and articulate how your relevant business identities and contexts relate to one another. Both are critical for accuracy and efficiency.

Beyond the challenges with pricing and spending visibility, cloud services have fallen short because they reinforce the tendency toward fragmented, application-centric architecture, massive duplication and siloing. Large language models (LLMs) used indiscriminately exacerbate this tendency toward unnecessary complexity and inefficiency. No wonder we’re seeing so many media reports about the massive amounts of energy being consumed by data centers designed for AI purposes.

When LLMs aren’t appropriate to the task at hand

A year ago, Denny Vrandečić of the Wikimedia Foundation, one of the key people behind Wikidata, made some telling remarks about when not to use LLMs during a keynote at the Knowledge Graph Conference:

“Why would you ever use a 96 layer LLM with 175 billion parameters to generate a multiplication, which is a single operation with a CPU? Just because an LLM can do these kinds of things doesn’t mean they should be. Why should you be generating knowledge again and again when you can just look it up in a confident way?…. It’s just not very efficient.”

“The way forward,” Vrandečić said, “is augmented language models.”

Vrandečić pointed to Toolformer as an example of an augmented language model. Meta AI designed Toolformer as an LLM that learns how to “use search engines, calculators and calendars, without sacrificing its core language modeling abilities,” according to Benj Edwards in an early 2023 Ars Technica article describing the language bot.

Language models that are a fraction of the size of LLMs can be quite useful as tool users at much lower cost. “Knowledge graphs and functions should be tools that LLMs can use,” Vrandečić concluded. “Do we really want to internalize 1 billion Wikidata statements? We could instead use LLMs as the world’s best knowledge extraction mechanisms.”
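To make the tool-use idea concrete, here is a minimal Python sketch. It assumes a language model has already produced a draft containing inline tool-call markers such as `[Calculator(12*34)]`. The marker syntax, the function names and the one-entry fact table are all hypothetical illustrations, not Toolformer's or Wikidata's actual interfaces. The point is only that arithmetic and fact retrieval get handed to cheap, exact tools instead of being regenerated by the model.

```python
import ast
import operator
import re

# Hypothetical stand-in for a knowledge graph: (subject, property) -> value.
FACTS = {
    ("Douglas Adams", "place of birth"): "Cambridge",
}

# Arithmetic operators the calculator tool is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str):
    """Evaluate +, -, *, / over numeric literals without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError(f"unsupported expression: {expression!r}")
    return walk(ast.parse(expression, mode="eval").body)


def lookup(subject: str, prop: str) -> str:
    """Look a fact up instead of asking a model to regenerate it."""
    return FACTS.get((subject, prop), "unknown")


# Assumed marker format: [Calculator(<expr>)] or [Lookup(<subject>, <property>)].
TOOL_CALL = re.compile(r"\[(Calculator|Lookup)\((.*?)\)\]")


def resolve_tool_calls(draft: str) -> str:
    """Replace each tool-call marker in the draft with the tool's result."""
    def run(match):
        name, raw_args = match.group(1), match.group(2)
        if name == "Calculator":
            return str(calculator(raw_args))
        subject, prop = [part.strip() for part in raw_args.split(",", 1)]
        return lookup(subject, prop)
    return TOOL_CALL.sub(run, draft)
```

Calling `resolve_tool_calls("12 x 34 = [Calculator(12*34)]")` returns `"12 x 34 = 408"`. The multiplication costs a single CPU operation, and the model's only job is deciding when to ask for it.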

The Enterprise Language Model: Fluree’s approach to LLMs + enterprise knowledge graphs

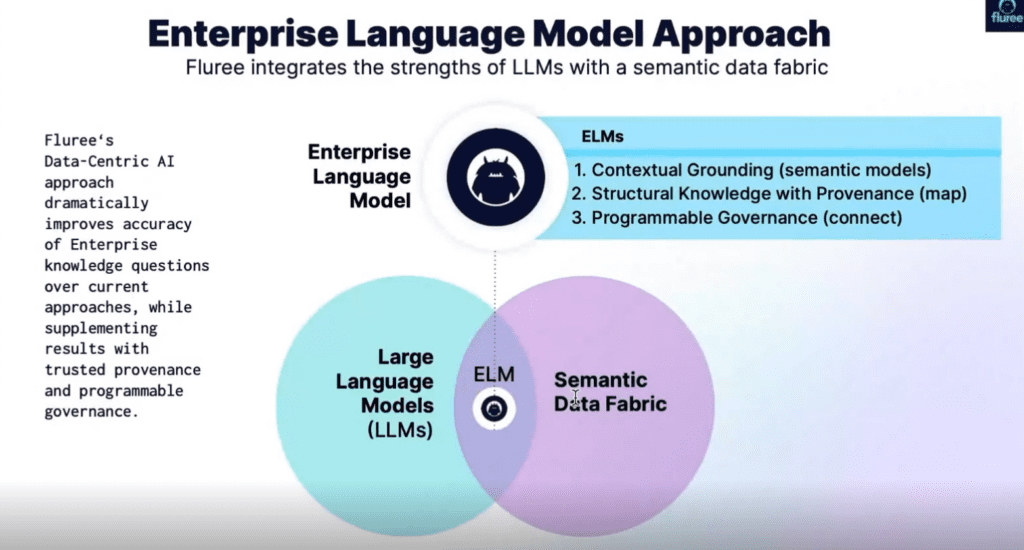

Fast forward to 2024, and savvy graph database platform providers such as Fluree are bringing the strengths of LLMs and knowledge graphs (KGs) together in complementary ways. Brian Platz, CEO and co-founder of Fluree, described his company’s Enterprise Language Model (ELM) approach to making an enterprise knowledge graph accessible by an LLM in a June 2024 webinar.

“Instead of just trying to get an LLM to know everything,” Platz said, “what we want to do is build this bridge. And then when we build the bridge, we get other benefits. We get access to real-time data. Instead of a large language model having to get constantly retrained, we have this LLM going through this bridge to get to real-time data.” The ELM becomes the means of communication between an LLM and a KG, as in this diagram.

Fluree PBC, 2024
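The bridge idea can be sketched in a few lines of Python. This is not Fluree's ELM or any Fluree API. `GraphBridge`, `stub_translate` and the `order:42` record are hypothetical names invented for illustration, and the translation step that a language model would perform is replaced by a hard-coded stub. What the sketch shows is the property Platz describes: answers are read from the graph at question time, so fresh data shows up without retraining anything.

```python
from typing import Callable, Dict, Tuple

# (entity, attribute): a deliberately tiny stand-in for a real graph query.
Query = Tuple[str, str]


class GraphBridge:
    """Hypothetical bridge: translate a question, then read live graph data."""

    def __init__(self, translate: Callable[[str], Query]):
        self._translate = translate          # in practice, a language model
        self._graph: Dict[Query, str] = {}   # in practice, a knowledge graph

    def upsert(self, entity: str, attribute: str, value: str) -> None:
        """Record or overwrite a fact, as an operational system would."""
        self._graph[(entity, attribute)] = value

    def answer(self, question: str) -> str:
        """Resolve the question against whatever the graph holds right now."""
        query = self._translate(question)
        return self._graph.get(query, "no data")


def stub_translate(question: str) -> Query:
    """Stand-in for the model: always maps to one fixed query."""
    return ("order:42", "status")


bridge = GraphBridge(stub_translate)
bridge.upsert("order:42", "status", "packed")
first = bridge.answer("Where is order 42?")

# The data changes; the "model" (stub_translate) is untouched.
bridge.upsert("order:42", "status", "shipped")
second = bridge.answer("Where is order 42?")
```

Here `first` is `"packed"` and `second` is `"shipped"`, even though the translation function never changed between the two calls.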

Other benefits of a bridge to existing enterprise data made available via a knowledge graph

Enterprise data is sensitive, but the policies that govern enterprise data are trapped in application logic that’s not easily accessible or reusable across domains. With a knowledge graph approach, Platz points out, there’s “a way to simply describe the policies and have them live with the data itself, instead of being hard-coded into these applications.” This way, a KG can make policies and other rules a more easily reusable asset.
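As a rough illustration of policies that “live with the data,” the Python sketch below stores a read policy as plain data next to the records it governs and applies it in one shared query function. The `Triple` type, the role names and the sample records are assumptions made up for this example. They do not reflect Fluree's policy syntax or any real product's access model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str


# Hypothetical records in a small graph.
TRIPLES = [
    Triple("emp:1", "name", "Ada"),
    Triple("emp:1", "salary", "120000"),
    Triple("emp:2", "name", "Grace"),
    Triple("emp:2", "salary", "135000"),
]

# The policy is data too: predicate -> roles allowed to read it.
READ_POLICY = {
    "name": {"employee", "hr"},
    "salary": {"hr"},
}


def visible_triples(role, triples=TRIPLES, policy=READ_POLICY):
    """Return only the triples the given role may read under the policy."""
    return [t for t in triples if role in policy.get(t.predicate, set())]
```

With this data, `visible_triples("employee")` returns only the two `name` triples, while `visible_triples("hr")` returns all four. Because every consumer goes through the same function and the same policy table, changing who may read salaries means editing one data entry instead of patching each application.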

The KG in that sense becomes a scalable distribution mechanism for contextualized, real-time data governance across a contiguous, de-siloed data environment. In 2023, LLMs were clearly not enterprise-ready. But in 2024 – if you know where to look – a more trustworthy means of harnessing the potential of LLMs with existing enterprise data to make better decisions is becoming available. Watch this space for more developments along these lines.