Much of the ongoing data center redesign is driven by AI’s massive, complex workloads and the need to add many more graphics processing units (GPUs), tensor processing units (TPUs) or other accelerators to the mix.

The power these units require and the heat they generate have forced designers to rethink what constitutes a feasible, optimal layout. And the cost of redesign is ratcheting up.

As a result, according to Tirias Research, owners could be spending $76 billion annually by 2028 on new AI data center infrastructure.

Current challenge for data centers: Today’s dense, GPU-based clusters

Anton Shilov in Tom’s Hardware recently sized up the considerable AI workload demand for GPUs:

“Market research firm Omdia says that Nvidia literally sold 900 tons of H100 processors in the second quarter of calendar 2023.

“Omdia estimates that Nvidia shipped over 900 tons (1.8 million pounds) of H100 compute GPUs for artificial intelligence (AI) and high-performance computing (HPC) applications in the second quarter. Omdia believes that the average weight of one Nvidia H100 compute GPU with the heatsink is over 3 kilograms (6.6 pounds), so Nvidia shipped over 300 thousand H100s in the second quarter.”
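
As a rough check on the figures in that quote, the sketch below divides total shipped weight by per-unit weight. It assumes “tons” means metric tonnes and treats Omdia’s “over 3 kilograms” as exactly 3 kg. The constant and function names are illustrative, not taken from Omdia.

```python
# Back-of-the-envelope check on the Omdia figures quoted above.
# Assumptions: "tons" are metric tonnes (1,000 kg) unless stated otherwise,
# and each H100 with heatsink weighs exactly 3 kg (Omdia says "over 3 kilograms").

KG_PER_METRIC_TON = 1_000.0
KG_PER_SHORT_TON = 907.18474   # US short ton (2,000 lb)
KG_PER_LB = 0.45359237


def implied_units(total_tons: float, kg_per_ton: float, kg_per_unit: float) -> int:
    """Whole units implied by a total shipped weight and a per-unit weight."""
    return int(total_tons * kg_per_ton // kg_per_unit)


def tons_to_pounds(total_tons: float, kg_per_ton: float) -> float:
    """Convert a tonnage to pounds."""
    return total_tons * kg_per_ton / KG_PER_LB


H100_KG = 3.0

metric = implied_units(900, KG_PER_METRIC_TON, H100_KG)
short = implied_units(900, KG_PER_SHORT_TON, H100_KG)

print(f"Metric tonnes: {metric:,} GPUs, {tons_to_pounds(900, KG_PER_METRIC_TON):,.0f} lb")
print(f"Short tons:    {short:,} GPUs, {tons_to_pounds(900, KG_PER_SHORT_TON):,.0f} lb")
```

Under those assumptions, 900 metric tonnes works out to 300,000 GPUs, matching Omdia’s figure. The quoted “1.8 million pounds,” however, corresponds to 900 US short tons, which at 3 kg per unit would imply roughly 272,000 GPUs, so the pound conversion and the unit count appear to use different tons.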

So a single Nvidia H100 with its heatsink weighs about as much as a light bowling ball. Omdia’s weight estimate doesn’t include the associated cabling or the liquid cooling.

Steven Carlini, VP of data center innovation at power management equipment provider Schneider Electric, told me recently that racks for AI have had to be redesigned to accommodate the extra weight and heat. He contrasted the “nicely spread out” rows of ordinary server racks that were common before current-generation AI began to ramp up in earnest with today’s dense, hot-running AI server clusters.

These AI clusters, Carlini said, draw up to 100 kilowatts (kW) per rack, compared with up to 20 kW for a conventional, non-AI data center rack. Carlini’s colleague Victor Avelar, a senior research analyst at Schneider Electric’s Energy Management Research Center, noted that each Nvidia H100 draws 700 watts, up from 400 watts for the older A100, which is also still in high demand. Both GPU types require liquid cooling.
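
To put those per-rack budgets in perspective, here is a minimal sketch that asks how many GPUs’ worth of board power fits in each rack, using the figures Carlini and Avelar cited. It deliberately counts GPU power only, so the results are loose upper bounds. The dictionary and function names are hypothetical.

```python
# Illustrative power-budget arithmetic using the rack and GPU figures cited above.
# Simplifying assumption: only GPU board power counts against the rack budget;
# real racks also power CPUs, memory, networking, fans or pumps, and conversion losses.

RACK_BUDGET_W = {"conventional rack": 20_000, "AI rack": 100_000}
GPU_POWER_W = {"A100": 400, "H100": 700}


def max_gpus(rack_budget_w: int, gpu_w: int) -> int:
    """Upper bound on GPUs whose power alone fits within a rack's power budget."""
    return rack_budget_w // gpu_w


for rack, budget in RACK_BUDGET_W.items():
    for gpu, watts in GPU_POWER_W.items():
        print(f"{rack:>17}: at most {max_gpus(budget, watts):3d} x {gpu}")

rack_ratio = RACK_BUDGET_W["AI rack"] / RACK_BUDGET_W["conventional rack"]
gpu_ratio = GPU_POWER_W["H100"] / GPU_POWER_W["A100"]
print(f"AI rack vs conventional budget: {rack_ratio:.0f}x")
print(f"H100 vs A100 per-GPU draw: {gpu_ratio:.2f}x")
```

The output shows the fivefold difference in rack budget alongside the 1.75x jump in per-GPU draw from the A100 to the H100.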

The dense, 80-billion-transistor die in each GPU generates most of the heat. One AI server of the type that companies like Amazon and Google are installing includes eight of these GPUs. Properly designed AI server clusters run at 100 percent utilization continuously, whereas servers for non-AI applications see much lower utilization.
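
The GPU arithmetic for one such eight-GPU server can be sketched as follows, using the 700 W H100 figure. This is an illustration under stated assumptions: it counts GPU power only and scales energy linearly with utilization, which ignores idle draw and cooling overhead. The function names are hypothetical.

```python
# Sketch: GPU energy for one eight-GPU AI server, using the 700 W H100 figure above.
# Assumptions: GPU board power only (no CPUs, networking, or cooling overhead),
# and energy scaling linearly with average utilization, which ignores idle draw.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years


def server_gpu_power_kw(gpus_per_server: int, watts_per_gpu: float) -> float:
    """Combined GPU board power for one server, in kilowatts."""
    return gpus_per_server * watts_per_gpu / 1_000


def annual_energy_kwh(power_kw: float, utilization: float) -> float:
    """Yearly energy in kWh at a given average utilization (0.0 to 1.0)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return power_kw * HOURS_PER_YEAR * utilization


power = server_gpu_power_kw(8, 700)
print(f"GPU power per server: {power:.1f} kW")
print(f"Annual GPU energy at 100% utilization: {annual_energy_kwh(power, 1.0):,.0f} kWh")
```

Passing a utilization below 1.0 gives a rough comparison with less heavily used servers. The article does not give a specific utilization figure for non-AI workloads, so none is assumed here.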

The long view of data center energy management

The owners of the major data centers carrying today’s AI workloads have long been focused on mitigating environmental impact, and they tend to take the long view when it comes to energy management. Yes, energy consumption is higher than ever, but much of the top-tier data center capacity is now powered by renewable energy, and owners are seeking out other zero-emission alternatives. Microsoft, for example, signed a contract in May 2023 to buy a minimum of 50 megawatts of power from fusion energy startup Helion starting in 2028.

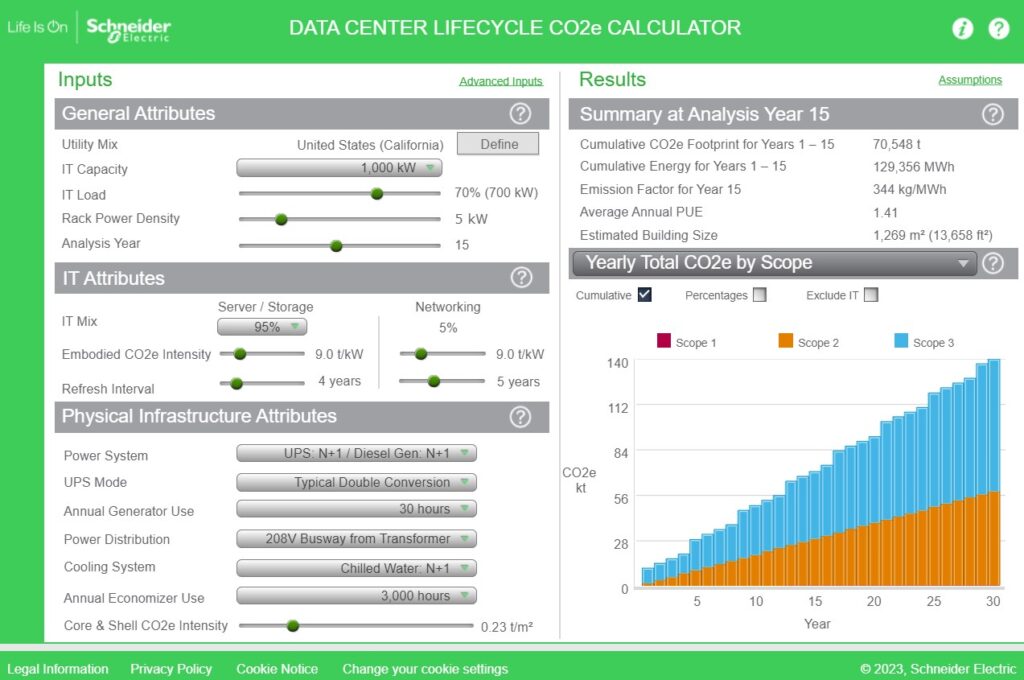

Schneider’s Victor Avelar is behind the company’s effort to quantify the carbon footprint of data centers over their lifecycle and to help optimize future data center layout and design. Avelar demoed the company’s free Data Center Lifecycle CO2e Calculator, which accounts for both embodied carbon (such as the carbon emitted in sourcing, making and pouring the concrete used in data center construction) and the carbon generated during data center operations.

The calculator helps planners weigh alternatives and choose optimal design criteria. For example, we looked at power sources: Avelar contrasted a West Virginia location (mostly coal-fired power plants) with one in France (where nuclear power makes up more of the mix).

In the calculator’s Yearly Total CO2e by Scope breakdown, we saw that Scope 2 emissions (from power purchased from local utilities) made up a much larger share of the total for the West Virginia option. The France option, by contrast, showed a larger share of Scope 3 emissions (other indirect emissions, such as the embodied carbon in the new data center’s concrete). Scope 1 and 2 emissions are more within the planners’ control.
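
The scope split Avelar showed can be illustrated with a hypothetical model; this is not Schneider Electric’s actual calculator. It assumes location-based Scope 2 accounting (annual energy times grid carbon intensity), and every input below is a placeholder chosen only to contrast a high-carbon grid with a low-carbon one, not measured data for either site.

```python
# Hypothetical sketch of a "yearly total CO2e by scope" breakdown.
# Not Schneider Electric's calculator: the structure and all inputs are assumptions.

from dataclasses import dataclass


@dataclass
class SiteEmissions:
    """Yearly emissions for one candidate site, in metric tons of CO2e."""
    scope1: float  # direct emissions, e.g. on-site backup generators
    scope2: float  # purchased electricity
    scope3: float  # other indirect emissions, e.g. amortized embodied carbon

    def shares(self) -> dict[str, float]:
        """Fraction of the yearly total contributed by each scope."""
        total = self.scope1 + self.scope2 + self.scope3
        return {
            "Scope 1": self.scope1 / total,
            "Scope 2": self.scope2 / total,
            "Scope 3": self.scope3 / total,
        }


def scope2_tco2e(annual_mwh: float, grid_kg_per_mwh: float) -> float:
    """Location-based Scope 2 estimate: energy times grid intensity, in tCO2e."""
    return annual_mwh * grid_kg_per_mwh / 1_000


# Placeholder inputs: same load and embodied carbon, different grid intensity.
ANNUAL_MWH = 10_000
coal_heavy = SiteEmissions(scope1=50, scope2=scope2_tco2e(ANNUAL_MWH, 800), scope3=1_000)
low_carbon = SiteEmissions(scope1=50, scope2=scope2_tco2e(ANNUAL_MWH, 60), scope3=1_000)

for name, site in {"coal-heavy grid": coal_heavy, "low-carbon grid": low_carbon}.items():
    pretty = ", ".join(f"{scope} {share:.0%}" for scope, share in site.shares().items())
    print(f"{name}: {pretty}")
```

With identical embodied carbon at both sites, the cleaner grid shrinks Scope 2, so Scope 3 becomes the larger share, mirroring the pattern described for the France option.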

Shifts in data center ownership

Historically, Carlini pointed out, data centers tended to follow a shopping-center model of anchor tenants and boutiques, with owners focused solely on building to match local demand and managing the space leases.

But lately, large cloud, media and SaaS providers have accounted for an even larger share of the new data centers being built. For these owner-operators, there is no standard data center design. “Every Microsoft data center is different,” Carlini said. “It’s amazing.” The main challenge in the current environment is simply keeping pace with all the changes afoot.