Generative AI (GenAI) has revolutionized content generation, information processing, and decision-making. However, as AI tools like ChatGPT and Bard integrate into daily workflows, a crucial question emerges: How is GenAI affecting critical thinking?

A recent research paper published by Microsoft, The Impact of Generative AI on Critical Thinking, explored this issue by surveying 319 knowledge workers and analyzing 936 first-hand examples of AI-assisted tasks. While the study’s sample size is relatively small, its insights provide an early warning about how AI is reshaping our thinking—and where we need to intervene to ensure AI augments human intelligence rather than replaces it.

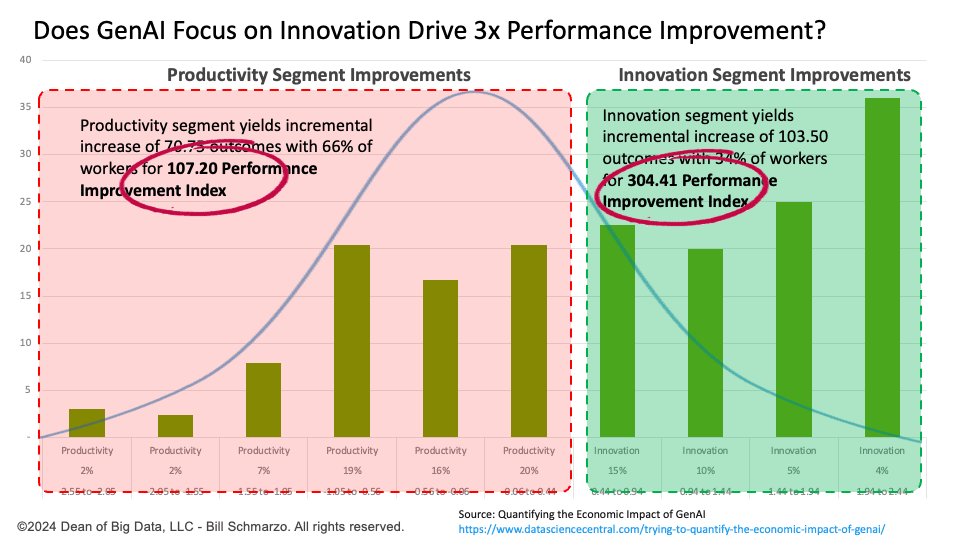

The key takeaway? AI is shifting cognitive effort rather than eliminating it. Knowledge workers spend less time on direct task execution and more time verifying, ideating, and building upon AI-generated outputs. This is consistent with the research that Mark Stouse and I discussed in the blog “Quantifying the Economic Impact of GenAI.” Our blog explored how Generative AI affects different performance groups within an organization. The findings highlighted a critical distinction: While AI can enhance the productivity of below-average and average workers, its most profound impact is unlocking innovation among top performers (Figure 1).

Figure 1: Source: (Trying to) Quantify the Economic Impact of GenAI

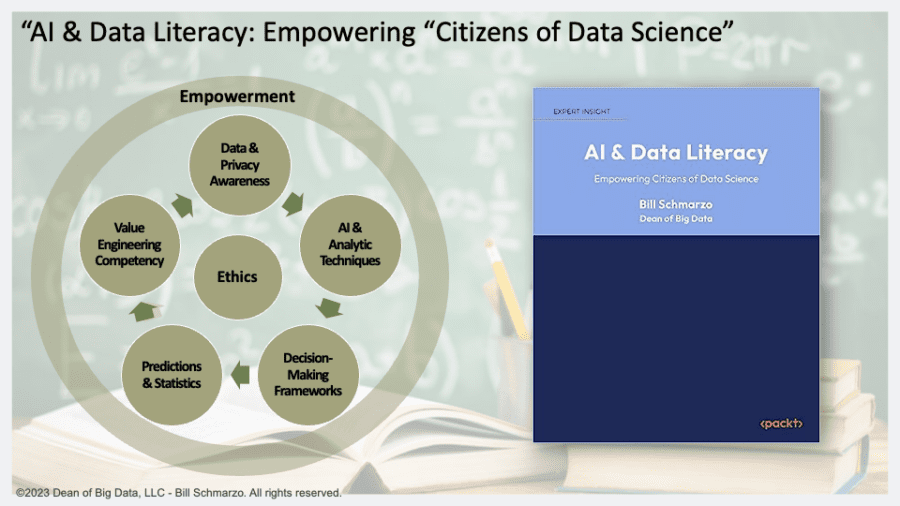

So, how do we ensure that Generative AI becomes a thinking partner rather than a thinking replacement? The answer lies in AI and data literacy. Fortunately, my book AI & Data Literacy: Empowering Citizens of Data Science provides a roadmap for navigating these challenges.

Let’s examine the research findings and explore how AI and data literacy can equip us to thrive in an AI-driven world.

The Research Findings: Generative AI’s Impact on Critical Thinking

The research paper identified several concerns that arise when knowledge workers interact with Generative AI:

1. Reduced Cognitive Effort & Over-Reliance on AI. Workers reported that AI reduced the effort required to perform tasks, especially for information retrieval and content generation. While this sounds positive, it also led to blind trust in AI-generated outputs, with many users failing to critically evaluate the accuracy of the AI’s results.

The Risk: If AI-generated content is taken at face value without verification, errors and biases may go unnoticed, leading to flawed and potentially dangerous decisions.

2. Shifts in Cognitive Roles: From Execution to Oversight. Rather than executing tasks directly, users now take on a supervisory role—refining AI prompts, reviewing AI-generated responses, and verifying accuracy. This shift demands new cognitive skills, particularly in quality assessment.

The Risk: If users are not trained to properly and critically oversee AI, they may unknowingly approve biased content produced by AI rather than actively refining it.

3. Confidence Effects: AI Trust vs. Self-Trust. The study found that confidence in AI and confidence in one’s own abilities pull critical thinking in opposite directions. Workers who trusted AI more tended to apply less critical thinking, whereas those with higher self-confidence were more likely to scrutinize AI-generated outputs.

The Risk: As AI becomes more sophisticated, workers may depend on AI rather than developing their own problem-solving skills.

4. Risk of Homogenization: AI’s Impact on Creativity. Since AI models are trained on existing data, they often converge toward similar outputs when given similar prompts. This could narrow perspectives rather than foster diverse, creative problem-solving.

The Risk: Over time, excessive reliance on AI-generated solutions could stifle original thinking and reduce innovation.

How AI & Data Literacy Can Help Address These Concerns

Although the study had a relatively small sample size, its findings highlight emerging trends in AI-driven decision-making. By understanding these challenges, we can proactively ensure that AI literacy and critical thinking skills become essential competencies in the era of artificial intelligence. This is precisely where the book AI & Data Literacy: Empowering Citizens of Data Science comes into play (Figure 2).

Figure 2: “AI & Data Literacy: Empowering Citizens of Data Science”

The book is designed to empower individuals and organizations with the essential knowledge and practical skills needed to harness AI effectively, responsibly, and critically. It provides a comprehensive roadmap for navigating the complexities of AI, ensuring users can maximize its benefits while mitigating risks, making informed decisions, and fostering a culture of ethical and strategic AI adoption.

Let’s examine how key chapters directly address the research’s concerns (Table 1).

| Topic | Chapters | Summary | Action Items |
| --- | --- | --- | --- |
| Raising Awareness: Understanding AI’s Influence on Decision-Making | Chapter 1 (Why AI and Data Literacy?), Chapter 2 (Data and Privacy Awareness) | AI subtly influences decisions through personalized news feeds, product recommendations, and auto-generated content. Understanding how AI models use personal data is the first step in becoming more intentional and critical in AI interactions. | – Recognize when AI is shaping your decisions. – Understand how AI models influence daily interactions. – Be proactive in verifying AI-generated content. |
| Preventing Over-Reliance on AI Through Critical Thinking | Chapter 5 (Making Informed Decisions), Chapter 6 (Prediction and Statistics) | AI can speed up decision-making, but over-reliance may lead to passive thinking. These chapters introduce decision-making frameworks like Decision Matrices and the OODA Loop to help users critically evaluate AI-generated insights. | – Probabilities are not facts: critically assess AI-driven conclusions. – Use decision frameworks to refine AI outputs. – Engage actively rather than passively accepting AI recommendations. |
| Encouraging Confidence in Human Judgment | Chapter 7 (Value Engineering Competency), Chapter 8 (Ethics of AI Adoption) | As AI becomes more advanced, there’s a risk of people undervaluing their own judgment. These chapters reinforce the importance of human oversight and ethical AI frameworks, ensuring accountability, fairness, and transparency in AI-driven decisions. | – AI should support human expertise, not replace it. – Evaluate AI recommendations ethically and align them with real-world objectives. – Trust human intuition in AI-assisted decision-making. |
| Promoting Diversity of Thought & AI Oversight | Chapter 9 (Cultural Empowerment), Chapter 10 (ChatGPT Changes Everything) | AI models can reinforce prevailing narratives rather than foster creativity. These chapters emphasize the importance of human oversight and diverse perspectives to ensure AI is an enabler of creativity rather than a gatekeeper of ideas. | – Keep humans in an oversight role over AI-generated ideas. – Bring diverse perspectives into AI-assisted work. – Use AI as an enabler of creativity, not a gatekeeper of ideas. |

Table 1: AI & Data Literacy

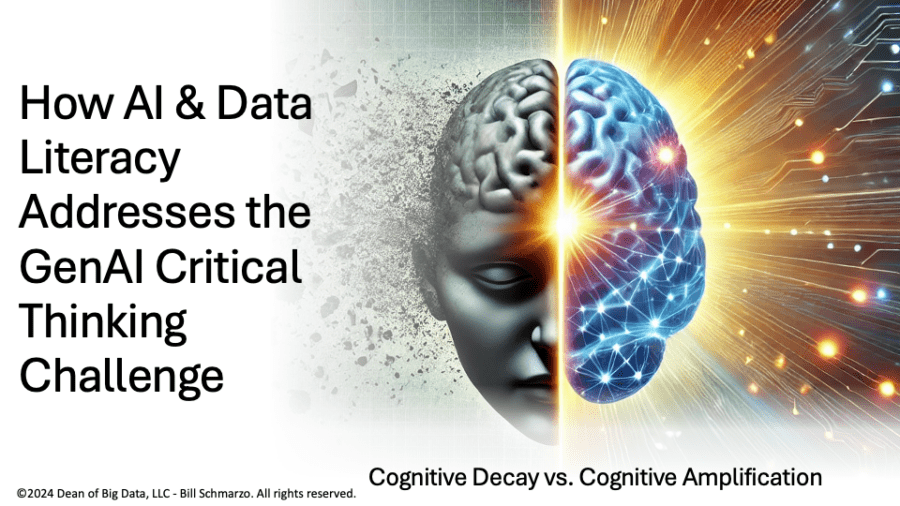
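To make the Decision Matrix idea from Table 1 concrete, here is a minimal sketch of a weighted decision matrix in Python. The criteria, weights, options, and ratings are hypothetical values chosen for illustration; they do not come from the Microsoft study or from the book. The point is only to show how writing down your own criteria forces you to evaluate an AI output actively rather than accept it by default.

```python
from typing import Dict


def score_options(
    weights: Dict[str, float],
    ratings: Dict[str, Dict[str, float]],
) -> Dict[str, float]:
    """Return the weighted-average score for each option.

    weights: importance of each criterion (any positive numbers).
    ratings: for each option, a rating per criterion (here on a 1-5 scale).
    """
    total_weight = sum(weights.values())
    scores = {}
    for option, option_ratings in ratings.items():
        weighted_sum = sum(weights[c] * option_ratings[c] for c in weights)
        scores[option] = weighted_sum / total_weight
    return scores


# Hypothetical criteria and weights (assumptions for illustration only).
weights = {"accuracy": 0.5, "originality": 0.25, "effort_saved": 0.25}

# Hypothetical 1-5 ratings for three ways of handling an AI-generated draft.
ratings = {
    "accept_ai_draft_as_is": {"accuracy": 2, "originality": 2, "effort_saved": 5},
    "verify_and_refine_ai_draft": {"accuracy": 4, "originality": 4, "effort_saved": 3},
    "write_from_scratch": {"accuracy": 4, "originality": 5, "effort_saved": 1},
}

scores = score_options(weights, ratings)
best = max(scores, key=scores.get)

for option, score in scores.items():
    print(f"{option}: {score:.2f}")
print(f"Highest-scoring option: {best}")
```

With these illustrative numbers, verifying and refining the AI draft scores highest, but the result depends entirely on the weights and ratings you supply. The value of the exercise lies in making those judgments explicit, not in the arithmetic.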

Cognitive Decay vs. Cognitive Amplification: The AI Divide

As AI tools like ChatGPT become increasingly accessible, they offer equal opportunity for everyone—from students and professionals to the average layperson. However, the true impact of these tools isn’t determined by their availability but by how individuals choose to use them. This is giving rise to a growing divide between two distinct groups of AI users:

- Cognitive Decay: This group includes individuals who passively accept AI-generated outputs without questioning or refining them. Over time, their reliance on AI as a substitute for thinking can lead to declining critical thinking skills, reduced problem-solving abilities, and, ultimately, intellectual stagnation. Instead of enhancing their skills, these users risk fully outsourcing their cognitive effort to AI.

- Cognitive Amplification: In contrast, this group actively engages with AI, seeing it as a thinking partner rather than a replacement for their reasoning. They employ AI to fuel natural curiosity, push creative boundaries, and stimulate a deeper exploration of ideas. These individuals view AI as a tool to enhance their cognitive abilities, allowing them to generate innovative solutions, challenge assumptions, and explore new possibilities more quickly and effectively.

The divide doesn’t stem from the tool itself but from intentionality and engagement. AI is neither inherently beneficial nor harmful; its value is shaped by how we interact with it. For those who approach AI with curiosity and a desire to expand their thinking, these tools become catalysts for intellectual growth and innovation. For those who use them to shortcut the thinking process, the result is often diminished cognitive skills over time.

Conclusion: AI as a Thinking Partner, Not a Thinking Replacement

Generative AI can enhance critical thinking if we use it correctly. But without AI and data literacy, we risk falling into passive dependence, trusting AI blindly, and limiting our cognitive growth. The key to thriving in the AI era is to adopt a mindset of active engagement. That means:

- Understanding AI’s strengths and limitations.

- Developing structured decision-making skills.

- Unleashing human expertise and curiosity while leveraging AI insights.

- Ensuring AI nurtures creativity rather than constraining it.

The book AI & Data Literacy: Empowering Citizens of Data Science provides a roadmap for this transformation. It helps us embrace AI as a thinking partner, not a thinking replacement.