New & Notable

Leveraging GenAI and LLMs in the insurance and reinsurance domains

J. Joseph Rusnak | March 12, 2025 at 10:29 am

Top Webinar

Recently Added

Leveraging GenAI and LLMs in the insurance and reinsurance domains

J. Joseph Rusnak | March 12, 2025 at 10:29 am

Abstract: Large Language Models (LLMs) are a natural language processing tool under the category of generative artificial...

Beyond the Box Score: Insights from Play-by-Play Announcers Enhance Entity Propensity Models

Bill Schmarzo | March 10, 2025 at 7:02 am

In the blog “Economic Power of Entity Propensity Models Are Transforming the Game”, I talked about how my childhood ...

Using FinOps to optimize AI and maximize ROI

Eric Ethridge | March 6, 2025 at 2:37 pm

The rapid development and adoption of artificial intelligence (AI) has been incredible. When it comes to generative AI a...

Economic Power of Entity Propensity Models Are Transforming the Game

Bill Schmarzo | March 2, 2025 at 8:45 am

Growing up, I was fascinated by the Strat-O-Matic baseball game. This strategy-driven baseball simulation board game use...

Contemporary applications of ChatGPT

Jelani Harper | February 26, 2025 at 10:23 am

Not so long ago, ChatGPT almost single-handedly broadened the adoption rates and general awareness of LLMs. Shortly ther...

Crafting an effective link-building strategy for small business success

Dan Wilson | February 25, 2025 at 1:01 pm

In today’s digital age, having a strong online presence is crucial for small businesses seeking to grow and thrive. On...

The secret to Deepgram’s speech-to-text model: Synthetic data generation

Jelani Harper | February 24, 2025 at 1:49 pm

Nova-3, Deepgram’s most effectual speech-to-text model to date, is equipped with a plethora of capabilities. It suppor...

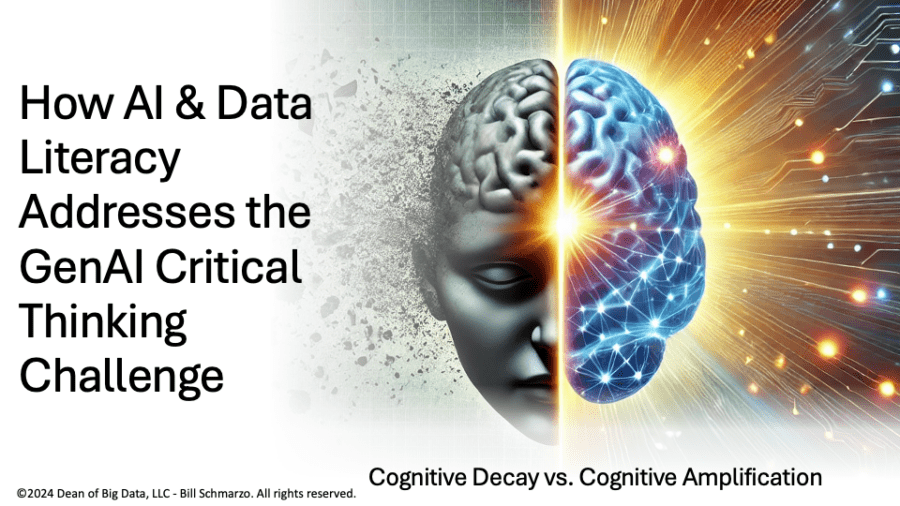

How AI & Data Literacy Addresses the GenAI Critical Thinking Challenge

Bill Schmarzo | February 23, 2025 at 9:01 am

Generative AI (GenAI) has revolutionized content generation, information processing, and decision-making. However, as AI...

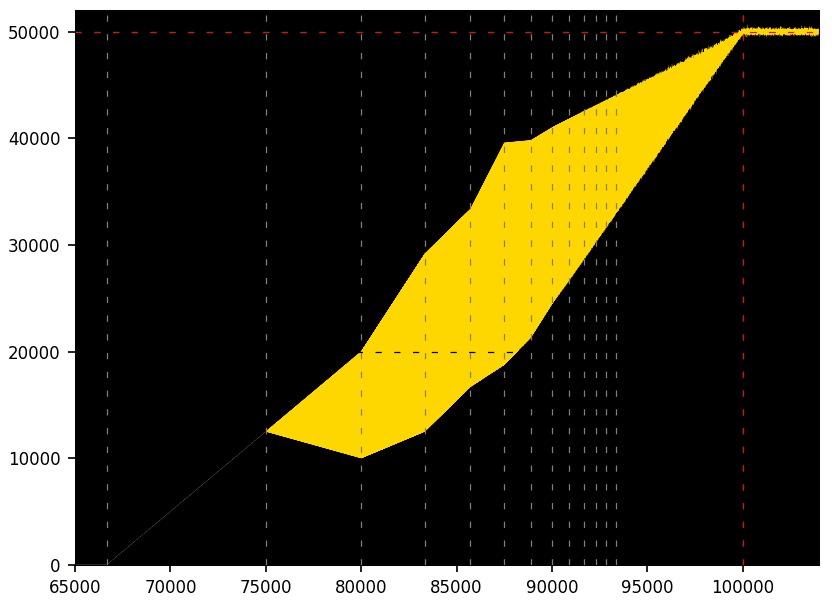

Spectacular Connection Between LLMs, Quantum Systems, and Number Theory

Vincent Granville | February 22, 2025 at 1:44 pm

In my ground-breaking paper 51 available here, I paved the way to solve a famous multi-century old math conjecture. The ...

How data integration can streamline healthcare operations

Rob Turner | February 20, 2025 at 11:12 am

Data integration optimizes how healthcare operations typically function. As a result, healthcare facilities benefit from...

New Videos

Davos World Economic Forum Annual Meeting Highlights 2025

Interview w/ Egle B. Thomas

Each January, the serene snow-covered landscapes of Davos, Switzerland, transform into a global epicenter for dialogue on economics, technology, and…

A vision for the future of AI: Guest appearance on Think Future 1039

As someone who has spent years navigating the exciting and unpredictable currents of innovation, I recently had the privilege of joining Chris Kalaboukis on his show, Think Future.…

Critical Decision Making for Enterprise AI

Interview with Vincent Koc, MIT Program Mentor

Introduction: Bridging AI and Enterprise

On this episode of the AI Think Tank podcast, I had the pleasure…