We discuss a new approach for selecting features from a large set of features, in an unsupervised machine learning framework. In supervised learning such as linear regression or supervised clustering, it is possible to test the predicting power of a set of features (also called independent variables by statisticians, or predictors) using metrics such as goodness of fit with the response (the dependent variable), for instance using the R-squared coefficient. This makes the process of feature selection rather easy.

Here this is not feasible. The context could be pure clustering, with no training sets available, for instance in a fraud detection problem. We are also dealing with discrete and continuous variables, possibly including dummy variables that represent categories, such as gender. We assume that no simple statistical model explains the data, so the framework here is model-free, data-driven. In this context, traditional methods are based on information theory metrics to determine which subset of features brings the largest amount of information.

A classic approach consists of identifying the most information-rich feature, and then grow the set of selected features by adding new ones that maximize some criterion. There are many variants to this approach, for instance adding more than one feature at a time, or removing some features during the iterative feature selection algorithm. The search for an optimal solution to this combinatorial problem is not computationally feasible if the number of features is large, so an approximate solution (local optimum) is usually acceptable, and accurate enough for business purposes.

Review of popular methods

We focus here on the metric used to assess how information-rich a feature (or a set of features) is, as this is the key to find the best features in your data set. Features may be be correlated, or redundant. The same is true with observations.

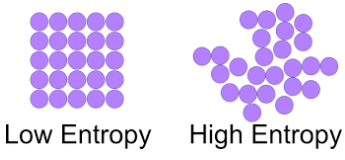

A fairly comprehensive review on this topic can be found here. The simplest, probably oldest metric to measure the quantity of information associated with a feature, is the Shannon entropy, see here. It can be extended to measure the quantity of information associated with a set of features, see this article on joint entropy. However, this applies to discrete features only. It has also been generalized to continuous features: it is then called differential entropy. However this metric is scale-dependent, and model-dependent. Though in practice, in a model-free context, any statistical distribution can be replaced by the empirical distribution computed on the observations, or using the observed empirical percentiles.

Another popular metric is the Akaide information criterion. It was introduced in 1973, in what became one of the most popular scientific articles of all times — in the top 100 citation index as of 2014. However, it is related to the likelihood function, and thus model-dependent. Related and somewhat equivalent to this criterion is the Kullback-Leibler divergence, but again having the same issue of being model-dependent.

In my more recent article on fast combinatorial feature selection (see here and in my Wiley book, page 224) I propose a data-driven, synthetic metric, called the predictive power of a feature.

New idea for feature selection

The idea is to add an artificial dependent variable (the response) to your data set, and perform feature selection as if you were dealing with a linear regression problem. That is, the criterion to select the features, would be based on a metric such as the residual error or R-squared, rather than using some kind of entropy metric. In short, you turn your problem into a problem of model fitting in a predictive analytics setting, which is easier. Another benefit is that the residual error or R-squared is not sensitive to changes in scale (re-scaling some variables) in your data set. Categorical variables such as gender can be replaced by dummy variables taking two values: 0 and 1. It also easily allows for cross-validation, selecting the features based on a subset of observations (the training set) and testing performance on the remaining data (the control set.)

All the regression coefficients could be set to 1 in the full model (involving all the features) to build the artificial response. Goodness-of-fit (e.g. R-square) is measured when an actual regression is performed on a subset of features. Features can be added one at a time as long as the goodness-of-fit metric continue to improve significantly when adding new features (by selecting features most efficiently accomplishing this goal), until you reach a pre-set, usually small number of “optimal” features.

Or you could test a large number of randomly generated regression coefficients for the response (via Monte Carlo simulations), and focus on those sets of regression coefficients that provides the best (or good enough) fit on a small set of features, still using the same goodness-of-fit criterion at each iteration, when selecting a new feature.

Testing on a dataset with known theoretical entropy

We illustrate here the concept explained in the previous section, on an artificial data set with known theoretical entropy attached to each feature. For simplicity, the data set has only two features. The data set consists of the first n = 47 digits of two numbers X(1) and X(2), expressed in two different bases: the digits of X(1) in base b(1), and the digits of X(2) in base b(2). The theoretical entropy attached to each feature is proportional to the logarithm of the base used for the feature in question. Using a number of digits (the number of observations) n larger than 50 causes accuracy issues (wrong digits) unless one uses high precision computing. This is discussed in details in my book Applied Stochastic Processes, Chaos Modeling, and Probabilistic Properties of Numeration Systems: see chapter 11.

We selected two numbers and bases causing some noticeable correlation between the two features, in order to better simulate a realistic data set. Auto-correlations within each feature were also strong. Our parameters are:

X(1) = log(3/2) , X(2) = SQRT(1/2), b(1) = 1.7, b(2) = 2.0.

Note that by using very large bases, you could produce observations (digits) that are very long, simulating actual continuous data, as opposed to binary data in this example. But then, you face again the accuracy issue (correctly computing the digits) described above.

Even with this small dataset, the classical Shannon entropy computed on the dataset, is equivalent to the theoretical entropy, in terms of deciding which feature is best. We also created an artificial response Y as discussed in the previous section, namely

Y = a(1) * Feature(1) + a(2) * Feature(2)

with the regression coefficients a(1) and a(2) set to 1.

We then computed the correlations c(1) between Y and Feature(1), and c(2) between Y and Feature(2). In most cases (we tested with various numbers and various bases) the highest correlation corresponds to the feature with the highest entropy, thus proving compatibility with an entropy-based approach on a data set with no dependent variable. In the few cases where this was not true, it was because the bases b(1) and b(2) were very close to each other, and the entropy values almost identical for the two features. Even in that case, the two correlations were also very close to each other. In that case, picking one feature over the other does not make a difference. Moreover, the approach discussed here is model-free, data-driven. Also unlike data reduction techniques such as PCA (principal component analysis) or data compression, this approach preserves the original features: it does not transform and recombine them, making it easier for interpretation purposes.

You can download the spreadsheet with the simulated data set and all computations, here.

For related articles from the same author, click here or visit www.VincentGranville.com. Follow me on on LinkedIn.

DSC Resources

- Free Book: Applied Stochastic Processes

- Comprehensive Repository of Data Science and ML Resources

- Advanced Machine Learning with Basic Excel

- Difference between ML, Data Science, AI, Deep Learning, and Statistics

- Selected Business Analytics, Data Science and ML articles

- Hire a Data Scientist | Search DSC | Classifieds | Find a Job

- Post a Blog | Forum Questions