In this blog post, I will discuss feature engineering using the Tidyverse collection of libraries. Feature engineering is often crucial for model performance, but it requires some care to produce useful features. In this post, I will consider a dataset containing descriptions of crimes in San Francisco between 2003 and 2015. The data can be downloaded from Kaggle. One issue with this data is that only a few of its columns are readily usable for modelling. It is therefore important in this case to construct new features from the given data to improve the accuracy of predictions.

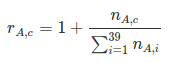

Among the given features in this data, the Address column (which is plain text) will be used to engineer new features. Because there are far fewer unique addresses than training examples, one could turn the Address column into a categorical variable by one-hot encoding or feature hashing. This is possible in principle, but it creates around 20,000 new features, which makes training very slow given the size of the dataset. Instead, we can engineer features based on the crime counts at each address. While looking through the Kaggle kernels, I came across the idea of computing crime ratios by Address: for each address, a numeric feature is built from the ratio of the number of crimes in a given category to the total number of crimes (of all categories) recorded at that address. Namely, I use the following numeric feature:

$$
x_{Ac} = 1 + \frac{n_{Ac}}{\sum_{c'=1}^{39} n_{Ac'}}
$$

where $n_{Ac}$ is the number of crimes of category $c$ at address $A$. There are 39 different crime categories, which explains the limits of the sum in the denominator. The 1 is added to the ratio because I will eventually take the log of this feature. The ratio itself is 0 whenever no crime of category $c$ was recorded at $A$, and the log would be undefined there.
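The post builds this feature with Tidyverse. As a language-neutral illustration, here is a minimal sketch in plain Python (standard library only) that computes the feature above with the log already applied. The input format (a list of `(address, category)` tuples) and the function name `crime_ratio_features` are hypothetical and are not the post's code.

```python
import math
from collections import Counter, defaultdict


def crime_ratio_features(records, categories):
    """Return {address: {category: log(1 + n_Ac / sum over c' of n_Ac')}}.

    records:    iterable of (address, category) pairs (hypothetical format).
    categories: all crime categories to emit (39 in the San Francisco data).
    """
    # n_Ac: number of crimes of category c at address A.
    counts = defaultdict(Counter)
    for address, category in records:
        counts[address][category] += 1

    features = {}
    for address, by_category in counts.items():
        total = sum(by_category.values())  # denominator: all crimes at A
        # Counter returns 0 for unseen categories, giving log(1 + 0) = 0.
        features[address] = {
            c: math.log(1 + by_category[c] / total) for c in categories
        }
    return features


# Usage on a few toy rows (2 categories instead of 39).
records = [("A", "ARSON"), ("A", "ARSON"), ("A", "BURGLARY"), ("B", "ARSON")]
features = crime_ratio_features(records, ["ARSON", "BURGLARY"])
print(features["A"])  # ARSON: log(1 + 2/3), BURGLARY: log(1 + 1/3)
```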

This idea is easy to implement (especially with Tidyverse functions), but it requires some care. In a naive implementation, one would compute the crime-by-address ratios defined above for each Address in the training set, attach them to the training rows, and merge them with the test set by the Address column. Doing so, one quickly finds that this leads to overfitting. The reason is that the target variable was used to construct the new features: each training row's own category contributes to the ratios at its address. As a result, the trained model assigns much larger weights to these features, since they are highly correlated with the target by construction. The model thus memorizes the training data and cannot generalize when it encounters new data.
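To make the leakage concrete, the following sketch implements the naive version in the same hypothetical plain-Python setup (tuples of `(address, category)`; `naive_row_features` is an illustrative name). It uses the toy rows from the example data shown later in this post. Ratios are computed over the whole training set and attached back to the same rows, so an address with a single recorded crime gets a nonzero feature only for that row's own category.

```python
import math
from collections import Counter, defaultdict


def naive_row_features(train):
    """Naive (leaky) version: ratios are computed on the full training set
    and attached back to the very rows they were computed from.

    Only categories observed at an address are listed; all others would
    be log(1 + 0) = 0.
    """
    counts = defaultdict(Counter)
    for address, category in train:
        counts[address][category] += 1

    rows = []
    for address, _label in train:
        by_category = counts[address]  # includes this row's own label
        total = sum(by_category.values())
        rows.append({c: math.log(1 + n / total) for c, n in by_category.items()})
    return rows


# Toy rows from the example data in this post.
train = [
    ("A", "ARSON"), ("A", "ARSON"), ("A", "BURGLARY"), ("B", "ARSON"),
    ("C", "ASSAULT"), ("C", "BURGLARY"), ("E", "ASSAULT"), ("D", "TRESPASS"),
]
rows = naive_row_features(train)

# For single-crime addresses (B, E, D) the only nonzero feature is the
# row's own category, at the maximum value log(2): the label leaks.
for (address, label), feats in zip(train, rows):
    if len(feats) == 1:
        print(address, label, feats)
```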

This looks pretty bad, and it may seem that constructing new features from the target is doomed. However, the overfitting can be mitigated by splitting the training data into two pieces, constructing the crime-by-address ratios from piece_1 and merging them with piece_2 (and then doing the same from piece_2 to piece_1). This works because the new features are built from out-of-sample target values. No row's own category enters its own features, so the model cannot simply memorize the ratios.
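Here is a minimal sketch of the two-piece scheme, again in plain Python with hypothetical names (`address_ratios`, `attach_features`). Each row's features are built only from the other piece's targets. The split of the example rows into `piece_1` and `piece_2` is made up for illustration. Giving addresses that never appear in the other piece a ratio of 0 (log feature 0) is an assumption of this sketch, not something the post specifies.

```python
import math
from collections import Counter, defaultdict


def address_ratios(records):
    """Map address -> {category: n_Ac / total crimes at address A}."""
    counts = defaultdict(Counter)
    for address, category in records:
        counts[address][category] += 1
    return {
        address: {c: n / sum(cnt.values()) for c, n in cnt.items()}
        for address, cnt in counts.items()
    }


def attach_features(target_piece, source_piece, categories):
    """Features for rows of target_piece, built only from source_piece's labels.

    Addresses missing from source_piece get ratio 0 (feature log(1) = 0);
    this fill value is an assumption of the sketch.
    """
    ratios = address_ratios(source_piece)
    features = []
    for address, _label in target_piece:  # the row's own label is never used
        r = ratios.get(address, {})
        features.append({c: math.log(1 + r.get(c, 0.0)) for c in categories})
    return features


categories = ["ARSON", "ASSAULT", "BURGLARY", "TRESPASS"]
# Hypothetical split of the example rows into two pieces.
piece_1 = [("A", "ARSON"), ("A", "BURGLARY"), ("B", "ARSON"), ("C", "ASSAULT")]
piece_2 = [("A", "ARSON"), ("C", "BURGLARY"), ("E", "ASSAULT"), ("D", "TRESPASS")]

# Ratios from piece_2 are attached to piece_1, then vice versa.
features_1 = attach_features(piece_1, piece_2, categories)
features_2 = attach_features(piece_2, piece_1, categories)
```

In `features_1`, the row `("C", "ASSAULT")` gets a nonzero value only for BURGLARY, the only crime recorded at C in `piece_2`. The feature no longer echoes the row's own label.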

As an illustration, consider the following example data:

| Address | Category |
|---|---|
| A | ARSON |
| A | ARSON |
| A | BURGLARY |
| B | ARSON |
| C | ASSAULT |
| C | BURGLARY |
| E | ASSAULT |
| D | TRESPASS |