Introduction

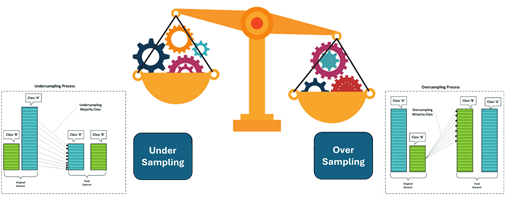

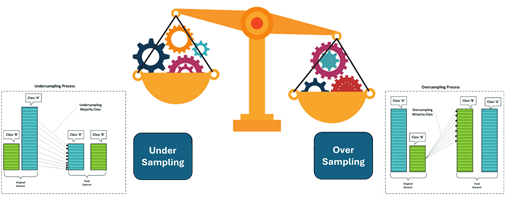

This article will address this issue using resampling techniques such as over-sampling and under-sampling, which help balance datasets and improve model performance. This core technique for balancing imbalanced datasets in machine learning uses over-sampling and under-sampling in machine learning for the datasets where one class significantly outweighs others. This imbalance can lead to biased models favouring the majority class, resulting in poor predictive performance for minority-class instances during the model in production.

We will explore their implementations, advantages, limitations, and impact on machine learning model performance.

- Random Oversampling

- Synthetic Minority Over-sampling Technique (SMOTE)

- Random Under-sampling

Figure 1: Balanced and imbalanced

Understanding the problem of imbalanced datasets

A dataset is imbalanced when one class (majority) has significantly more instances than another (minority).

This imbalance leads to:

- Poor Model Generalization: The model is biased toward the majority class.

- Skewed Evaluation Metrics: Accuracy becomes misleading as the model often predicts the majority class.

- Limited Learning of Minority Class Patterns: The minority class may not contribute enough to the training process.

Figure 2: Problem of Imbalanced Datasets

Common use cases where imbalanced datasets occur:

- Fraud detection (fraudulent transactions are rare)

- Medical diagnosis (certain diseases have fewer positive cases)

- Spam detection (spam emails are fewer than legitimate ones)

Fraud detection: Identifying rare fraudulent transactions

Fraud detection is a critical aspect of financial security that aims to identify fraudulent activities within vast volumes of legitimate transactions. One significant challenge in fraud detection is that fraudulent transactions are rare, making them difficult to spot while minimizing disruptions to regular customer activity.

The main challenge in fraud detection is an imbalance in data based on the scenario below.

- Fraudulent transactions make up a tiny fraction of overall transactions, leading to highly imbalanced datasets in fraud detection systems.

- Traditional machine learning models may struggle to identify fraudulent cases as everyday transactions overshadow them.

Impact of imbalanced datasets on fraud detection

Fraud detection is significantly influenced by imbalanced datasets, where fraudulent transactions comprise only a tiny fraction of total transactions. This imbalance presents several challenges for machine learning models, leading to biased predictions, poor generalization, and difficulty detecting rare fraud cases.

Class imbalance and its challenges

In fraud detection, the dataset typically consists of:

- Legitimate transactions (majority class) → 99% or more

- Fraudulent transactions (minority class) → Less than 1%

Since fraud cases are rare, standard machine learning models tend to favor the majority class (legitimate transactions) and may overlook fraudulent transactions.

Challenges introduced by imbalanced data

- Biased Model Learning

- Models tend to classify all transactions as legitimate because that minimizes overall error.

- Example: If a model predicts all transactions as legitimate, it might still achieve 99% accuracy, but it ultimately fails at detecting fraud.

- Poor Sensitivity to Fraud Cases (Low Recall)

- Even if some fraud cases exist, models trained on imbalanced data might fail to identify them, leading to low recall (high false negatives).

- Fraudulent activities go unnoticed, increasing financial losses.

- High False Positives & Customer Friction

- If the model compensates for imbalance by flagging more transactions as fraudulent, it may increase false positives (legitimate transactions wrongly flagged as fraudulent).

- This can frustrate customers whose genuine transactions get blocked.

Figure 3: Problem of Imbalanced Datasets (Use cases)

Medical Diagnosis: Medical diagnosis relies on machine learning models to detect diseases based on patient data. However, some diseases, such as rare cancers, genetic disorders, and certain infections, occur infrequently in the population. This creates a significant class imbalance problem, where most cases are healthy individuals (negative cases), while actual disease cases (positive cases) are rare.

Impact of Imbalanced Data on Medical Diagnosis

A. High False Negatives (Missed Diagnoses)

- Since positive cases are rare, machine learning models trained on imbalanced data may fail to detect them, classifying most patients as healthy (majority class).

- Example: A model diagnosing cancer might predict with 98% accuracy that a patient has cancer, but if only 2% of patients have cancer, it may miss most of them, leading to delayed treatment and worse outcomes.

B. High False Positives (Unnecessary Anxiety & Testing)

- If a model tries to compensate for imbalance by detecting more positive cases, it may flag too many healthy individuals as sick, leading to false positives.

- Example: A model for detecting a rare genetic disorder may mistakenly flag many patients who do not have the disease, leading to unnecessary medical tests and emotional distress.

C. Bias in Machine Learning Models

- Models trained on imbalanced medical datasets learn to predict the majority class (healthy cases) more confidently, overlooking the minority class (disease cases).

- Result: The model may fail to generalize and underperform when diagnosing actual patients

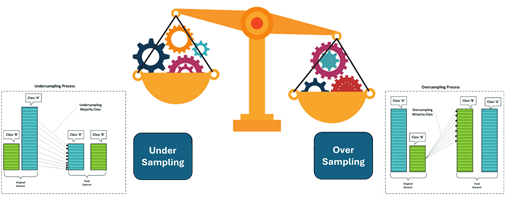

To mitigate these issues, we use resampling techniques, which fall into two broad categories:

- Oversampling: Increasing the number of minority class instances.

- Under-sampling: Reducing the number of majority class instances.

Oversampling Methods

What is Oversampling?

Oversampling is a technique that balances imbalanced datasets by increasing the number of samples in the minority class. This can be done in two main ways:

- Random Oversampling – Duplicates existing minority class samples to match the majority class.

- Synthetic Oversampling (e.g., SMOTE) – Creates new synthetic samples by interpolating between existing minority class instances instead of direct duplication.

Oversampling helps prevent models from being biased toward the majority class, improving their ability to detect rare events, such as fraud detection or rare diseases in medical diagnosis. However, it should be applied carefully to avoid overfitting.

Random Oversampling: Random oversampling involves duplicating instances of the minority class randomly until both classes are balanced. This prevents the model from being biased towards the majority class.

Implementation in Python Using Random Oversampling

from imblearn.over_sampling import RandomOverSampler

from collections import Counter

# Sample imbalanced dataset

X = [[1], [2], [3], [4], [5], [6], [7], [8], [9], [10], [11], [12], [13], [14],[15], [16], [17], [18]]

y = [0, 0, 0, 0,0,0,0,0,0,0,0,0, 1, 1, 1, 1, 1] # Balanced dataset in this case

# Display class distribution before oversampling

before_sampling = Counter(y)

print(“Class distribution before oversampling:”, before_sampling)

# Apply Random Oversampling

ros = RandomOverSampler(sampling_strategy=’auto’, random_state=42)

X_resampled, y_resampled = ros.fit_resample(X, y)

# Display class distribution after oversampling

after_sampling = Counter(y_resampled)

print(“Class distribution after oversampling:”, after_sampling)

Output

Class distribution before oversampling: Counter({0: 12, 1: 5})

Class distribution after oversampling: Counter({0: 12, 1: 12})

Pros

- It is simple and easy to implement.

- Helps models learn more from the minority class.

Cons

- This can lead to overfitting as duplicated samples do not introduce new patterns.

Synthetic Minority Over-sampling Technique (SMOTE)

Synthetic Minority Over-sampling Technique (SMOTE) is a data augmentation method that balances imbalanced datasets by generating synthetic samples for the minority class. Instead of simply duplicating existing samples, SMOTE creates new synthetic data points by interpolating between existing minority class instances. This helps machine learning models learn better decision boundaries and reduces overfitting caused by duplicate data. SMOTE is widely used in applications like fraud detection, medical diagnosis, and anomaly detection, where rare events need better representation. This generates new synthetic samples using interpolation rather than simple duplication.

It creates new data points by:

- Selecting a minority class instance.

- Finding its k-nearest minority class neighbors.

- Generating a new point along the line connecting the instance and its neighbor.

Implementation in Python Using SMOTE

from imblearn.over_sampling import SMOTE

from collections import Counter

# Sample imbalanced dataset

X = [[1], [2], [3], [4], [5], [6], [7], [8], [9], [10], [11], [12], [13], [14], [15], [16], [17], [18]]

y = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1] # Imbalanced dataset

# Display class distribution before SMOTE

before_smote = Counter(y)

print(“Class distribution before SMOTE:”, before_smote)

# Apply SMOTE

smote = SMOTE(sampling_strategy=’auto’, random_state=42)

X_smote, y_smote = smote.fit_resample(X, y)

# Display class distribution after SMOTE

after_smote = Counter(y_smote)

print(“Class distribution after SMOTE:”, after_smote)

Output

Class distribution before SMOTE: Counter({0: 12, 1: 6})

Class distribution after SMOTE: Counter({0: 12, 1: 12})

Pros

- Reducing overfitting by introducing synthetic but realistic samples.

- Enhances model generalization for the minority class.

Cons

- May create borderline or noisy samples.

- Can increase training time.

Under-sampling Methods

Under-sampling is a technique for balancing imbalanced datasets by reducing the number of samples in the majority class. This helps prevent machine learning models from being biased toward the dominant class.

Types of Under-sampling:

- Random Under-sampling (RUS): Randomly removes majority class samples to equalize class distribution.

- Cluster Centroid Under-sampling: Replaces majority class samples with their cluster centroids, preserving key information.

- NearMiss: Selects majority class samples closest to minority class instances, ensuring better class separation.

While under-sampling reduces dataset size and speeds up training, it risks losing valuable information from the majority class, potentially affecting model performance.

Random Under-sampling: As discussed earlier, it randomly removes instances from the majority class to balance class distribution.

Implementation in Python using random under-sampling

from imblearn.under_sampling import RandomUnderSampler

from collections import Counter

# Sample imbalanced dataset

X = [[1], [2], [3], [4], [5], [6], [7], [8], [9], [10], [11], [12], [13], [14], [15], [16], [17], [18]]

y = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1] # Imbalanced dataset

# Display class distribution before undersampling

before_undersampling = Counter(y)

print(“Class distribution before undersampling:”, before_undersampling)

# Apply Random Undersampling

rus = RandomUnderSampler(sampling_strategy=’auto’, random_state=42)

X_resampled, y_resampled = rus.fit_resample(X, y)

# Display class distribution after undersampling

after_undersampling = Counter(y_resampled)

print(“Class distribution after undersampling:”, after_undersampling)

Output

Class distribution before undersampling: Counter({0: 12, 1: 5})

Class distribution after undersampling: Counter({0: 5, 1: 5})

Pros

- Reducing model training time by decreasing dataset size.

- Works well when the dataset is large.

Cons

- Risk of information loss: Important majority class samples may be removed.

- Can lead to underfitting.

Implementation in Python Using SMOTE

from imblearn.over_sampling import SMOTE

from collections import Counter

# Sample imbalanced dataset

X = [[1], [2], [3], [4], [5], [6], [7], [8], [9], [10], [11], [12], [13], [14], [15], [16], [17], [18]]

y = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1] # Imbalanced dataset

# Display class distribution before SMOTE

before_smote = Counter(y)

print(“Class distribution before SMOTE:”, before_smote)

# Apply SMOTE

smote = SMOTE(sampling_strategy=’auto’, random_state=42)

X_smote, y_smote = smote.fit_resample(X, y)

# Display class distribution after SMOTE

after_smote = Counter(y_smote)

print(“Class distribution after SMOTE:”, after_smote)

Comparing the impact of resampling on model performance

To understand the impact of resampling, let’s apply it to a classification model and compare performance before and after resampling.

Step 1: Create an imbalanced dataset

from sklearn.datasets import make_classification

import matplotlib.pyplot as plt

import seaborn as sns

# Generate imbalanced data

X, y = make_classification(n_classes=2, weights=[0.9, 0.1],

n_samples=1000, random_state=42)

# Plot class distribution

sns.histplot(y, discrete=True)

plt.title(“Original Class Distribution”)

plt.show()

Figure 4: Imbalanced dataset (histplot)

Step 2: Apply resampling and train a model

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import classification_report

# Split data

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Apply SMOTE

smote = SMOTE(random_state=42)

X_train_smote, y_train_smote = smote.fit_resample(X_train, y_train)

# Train model

clf = RandomForestClassifier(random_state=42)

clf.fit(X_train_smote, y_train_smote)

# Evaluate

y_pred = clf.predict(X_test)

print(classification_report(y_test, y_pred))

Figure 5: Classification Report

# Plot class distribution

sns.histplot(y_train_smote, discrete=True)

plt.title(“Original Class Distribution”)

plt.show()

Figure 6: Figure 4: Balanced dataset (histplot)

Key observations

- Without resampling, the model may predict mostly the majority class.

- After applying SMOTE and Random Oversampling, the recall for the minority class improves significantly.

- Random undersampling can lead to the loss of valuable majority class samples but is useful when dataset size is a concern.

Choosing the Right Resampling Strategy

| Method | When to Use | Pros | Cons |

| Random Oversampling | Small datasets | Simple: preserves all data | Overfitting risk |

| SMOTE | Complex datasets | Generates diverse samples | Synthetic noise risk |

| Random Undersampling | Large datasets | Faster training | Information loss |

Conclusion

Resampling techniques play a crucial role in handling imbalanced datasets in machine learning. While oversampling (Random Oversampling, SMOTE) helps generate synthetic or duplicated samples, under-sampling (Random Under-sampling) removes excessive majority-class data to balance the dataset.

The choice of technique depends on the dataset size, problem complexity, and trade-offs between overfitting and underfitting. Experimenting with different methods and evaluating their impact on model performance using proper metrics (precision, recall, F1-score) is essential for optimal results.